NLP introduction + practice: Chapter 3: gradient descent and back propagation

2022-07-26 01:06:00 【ZNineSun】

Previous article: 《NLP introduction + practice: Chapter 2: Getting started with PyTorch》

1. What is a gradient?

Gradient: a vector that combines the derivative with the direction of fastest change (the direction in which the parameters are learned).

A review of machine learning

Collect data x and build a machine learning model f, which gives

$$f(x,w)=Y_{predict}$$

In other words, the model's computation produces a series of predicted values.

How do we judge whether the model is good or bad?

$$loss=(Y_{predict}-Y_{true})^2 \quad \text{(regression loss)}$$

$$loss=-Y_{true}\log(Y_{predict}) \quad \text{(classification loss)}$$
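The two losses can be sketched in plain Python as follows. This is a minimal illustration, not the article's own code: the function names are hypothetical, the classification loss is written with the conventional leading minus sign so that a lower value means a better prediction, and the predicted probabilities are assumed to be strictly positive.

```python
import math


def regression_loss(y_predict, y_true):
    """Squared error between one predicted value and its true value."""
    return (y_predict - y_true) ** 2


def classification_loss(y_predict, y_true):
    """Cross-entropy between predicted class probabilities and true labels.

    y_predict: list of probabilities (assumed > 0), one per class.
    y_true:    list of 0/1 labels of the same length.
    """
    return -sum(t * math.log(p) for p, t in zip(y_predict, y_true))


# A prediction of 3.0 for a true value of 1.0 is off by 2, so the loss is 4.
squared = regression_loss(3.0, 1.0)

# Assigning probability 0.5 to the correct class costs log(2).
cross_entropy = classification_loss([0.5, 0.5], [1.0, 0.0])
```

In both cases a perfect prediction gives a loss of 0, and the loss grows as the prediction moves away from the true value.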

The goal is to adjust (learn) the parameters w so that the loss is as low as possible. So how do we adjust w?

Choose a random starting point w0, then keep adjusting it so that the loss function reaches its minimum.

How to update w:

1. Compute the gradient (derivative) with respect to w, where f(w) below denotes the loss as a function of w

$$\Delta w=\frac{f(w+0.0000001)-f(w-0.0000001)}{2*0.0000001}$$

2. Update w

$$w=w-\alpha \Delta w$$

where α is the learning rate (step size), and:

- Δw < 0 means w will increase

- Δw > 0 means w will decrease

Summary: the gradient describes how a multivariate function changes with respect to its parameters (the direction of parameter learning). With a single independent variable it is called the derivative; with several variables it is made up of the partial derivatives.
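The two update steps above can be sketched in a few lines of Python. The loss function here is a hypothetical quadratic with its minimum at w = 3, and the learning rate and step count are arbitrary assumptions chosen only to make the example converge.

```python
def numerical_gradient(f, w, eps=1e-7):
    """Central-difference estimate of df/dw at w (step 1)."""
    return (f(w + eps) - f(w - eps)) / (2 * eps)


def gradient_descent(f, w0, alpha=0.1, steps=100):
    """Repeatedly apply w = w - alpha * delta_w (step 2)."""
    w = w0
    for _ in range(steps):
        delta_w = numerical_gradient(f, w)
        w = w - alpha * delta_w
    return w


def loss(w):
    # Hypothetical loss: smallest when w == 3.
    return (w - 3.0) ** 2


# Starting left of the minimum, the gradient is negative, so w increases.
w_final = gradient_descent(loss, w0=0.0)
```

Starting from w0 = 0 the gradient is negative, so each update moves w to the right, toward the minimum, which matches the sign rule in the list above.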

2. Computing partial derivatives

2.1 Derivatives of common functions

2.2 Partial derivatives of multivariate functions

3. Back propagation algorithm

3.1 Computational graphs and back propagation

Computational graph: a graph that describes how a function is computed.

For example, for J(a,b,c) = 3(a + bc), let u = a + v and v = bc, so that J(u) = 3u.

Drawn as a computational graph, b and c feed into v, a and v feed into u, and u feeds into J.

Once the computational graph is drawn, the forward computation process is clear.

Taking the partial derivative at each node then gives dJ/du = 3, du/da = 1, du/dv = 1, dv/db = c, and dv/dc = b.

For backpropagation, what we ultimately want is:

- the partial derivative of J with respect to a

- the partial derivative of J with respect to b

- the partial derivative of J with respect to c

But J is not written directly in terms of a, b, and c, so we cannot differentiate with respect to them in one step. From the computational graph, backpropagation runs from right to left, and the partial derivative with respect to each independent variable (a, b, c) is the product of the gradients along the path connecting it to J:

$$\frac{dJ}{du}=3$$

$$\frac{dJ}{da}=\frac{dJ}{du}*\frac{du}{da}=3*1$$

$$\frac{dJ}{db}=\frac{dJ}{du}*\frac{du}{dv}*\frac{dv}{db}=3*1*c$$

$$\frac{dJ}{dc}=\frac{dJ}{du}*\frac{du}{dv}*\frac{dv}{dc}=3*1*b$$
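As a rough check of these chain-rule products, the sketch below runs the forward pass for J(a,b,c) = 3(a + bc) and then multiplies the local gradients from right to left. The function names and the input values a = 5, b = 3, c = 2 are assumptions made for illustration.

```python
def forward(a, b, c):
    """Forward pass through the graph: v = b*c, u = a + v, J = 3*u."""
    v = b * c
    u = a + v
    J = 3 * u
    return J


def backward(a, b, c):
    """Backward pass: multiply the local gradients along each path to J."""
    dJ_du = 3.0   # J = 3u
    du_da = 1.0   # u = a + v
    du_dv = 1.0   # u = a + v
    dv_db = c     # v = b*c
    dv_dc = b     # v = b*c
    return {
        "a": dJ_du * du_da,
        "b": dJ_du * du_dv * dv_db,
        "c": dJ_du * du_dv * dv_dc,
    }


J = forward(5.0, 3.0, 2.0)       # 3 * (5 + 3*2) = 33.0
grads = backward(5.0, 3.0, 2.0)  # {"a": 3.0, "b": 6.0, "c": 9.0}
```

Note that the gradient for b depends on the value of c and vice versa, so the backward pass needs the input values used in the forward pass.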

3.2 Back propagation in neural networks

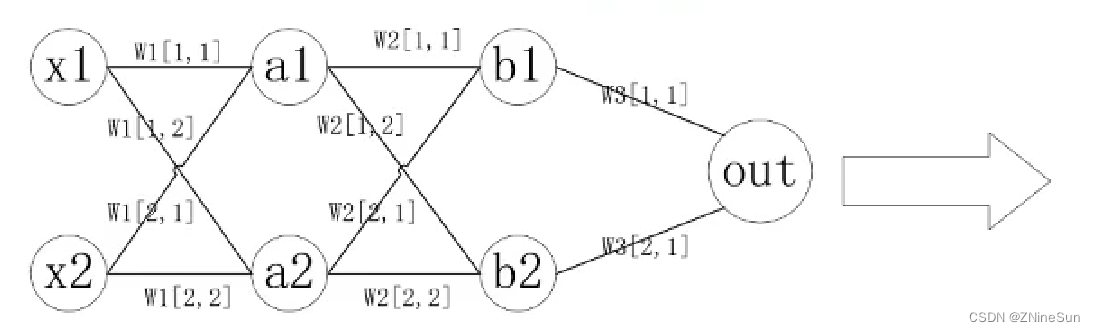

3.2.1 Schematic diagram of neural network

w1, w2, …, wn denote the weights of the 1st, 2nd, …, n-th layers of the network.

wn[i,j] denotes the weight connecting the i-th neuron of layer n to the j-th neuron of layer n+1.

For example, w3[2,1] is the weight from the second neuron in the third layer to the first neuron in the fourth layer.
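One possible way to hold such weights in code is a nested list with one row per source neuron and one column per target neuron. The layer sizes and weight values below are made up for illustration; the only point is the mapping from the 1-based indices used in this notation to Python's 0-based indexing.

```python
# Hypothetical weights between layer 3 (2 neurons) and layer 4 (3 neurons).
# Row i holds the weights leaving neuron i of layer 3.
w3 = [
    [0.1, 0.2, 0.3],
    [0.4, 0.5, 0.6],
]


def weight(w, i, j):
    """Return w[i,j] using the 1-based indices of the notation above."""
    return w[i - 1][j - 1]


# Second neuron of layer 3 -> first neuron of layer 4.
w3_2_1 = weight(w3, 2, 1)  # 0.4
```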

3.2.2 Computational graph of a neural network

In this graph:

- △out: the derivative of the loss function with respect to the predicted value

- f: can be understood as the activation function

Suppose we need the partial derivative of △out with respect to w1[1,2]. It can be read off as follows.

There are two paths from w1[1,2] to △out, the red line and the blue line. So we only need to add the products along the two paths inside the green box, then multiply the sum by the path value outside the green box. The result is:

The formula has two parts:

- 1. Outside the brackets: the left part of the red line

- 2. Inside the brackets:

  - 1. Left of the plus sign: the right part of the red line

  - 2. Right of the plus sign: the blue line
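The "shared factor times the sum over paths" structure can be illustrated on a small made-up network. This is not the network from the figure: it assumes a single weight w feeding one neuron h, which in turn feeds two neurons g1 and g2 that are combined into the output, with a sigmoid standing in for f. All weight values are arbitrary.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)


def forward(w, x=0.5, w2a=0.3, w2b=-0.7, w3a=1.2, w3b=0.8):
    """Hypothetical network: w feeds h, and h reaches the output by two paths."""
    h = sigmoid(w * x)
    g1 = sigmoid(w2a * h)   # first path
    g2 = sigmoid(w2b * h)   # second path
    return w3a * g1 + w3b * g2


def path_gradient(w, x=0.5, w2a=0.3, w2b=-0.7, w3a=1.2, w3b=0.8):
    """d(out)/dw = shared factor * (product along path A + product along path B)."""
    h = sigmoid(w * x)
    shared = x * sigmoid_grad(w * x)              # outside the brackets
    path_a = w2a * sigmoid_grad(w2a * h) * w3a    # left of the plus sign
    path_b = w2b * sigmoid_grad(w2b * h) * w3b    # right of the plus sign
    return shared * (path_a + path_b)


analytic = path_gradient(0.4)
# Central-difference check of the same derivative.
numeric = (forward(0.4 + 1e-6) - forward(0.4 - 1e-6)) / 2e-6
```

The two values agree closely, which suggests the path-sum formula is assembled correctly for this small example.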

But done this way, the amount of computation becomes very large when the model is big.

So the idea of backpropagation is to compute the gradient of one parameter on its own and then update it, as shown in the figure below:

The calculation process is as follows:

After updating the parameters, continue backpropagating

The calculation process is as follows:

Continue backpropagating

The calculation process is as follows:

The process above is a step-by-step decomposition of the following formula:

Note that backpropagation needs the results computed during forward propagation, so the forward pass must be recorded. We will see this reflected in PyTorch.
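The need to keep the forward results can be seen in a hand-written sketch. The two-weight chain network, the sigmoid activation, and the squared-error loss below are all assumptions chosen for brevity, and the explicit `cache` dictionary is only a stand-in for whatever bookkeeping a framework does internally; it is not PyTorch's actual mechanism.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, y, w1, w2):
    """Forward pass that records the intermediate values needed later."""
    a1 = sigmoid(w1 * x)
    a2 = sigmoid(w2 * a1)
    loss = (a2 - y) ** 2
    cache = {"x": x, "y": y, "a1": a1, "a2": a2, "w2": w2}
    return loss, cache


def backward(cache):
    """Backward pass from right to left, reusing the upstream gradient at each step."""
    d_a2 = 2.0 * (cache["a2"] - cache["y"])          # d loss / d a2
    d_z2 = d_a2 * cache["a2"] * (1.0 - cache["a2"])  # through the sigmoid
    d_w2 = d_z2 * cache["a1"]                        # gradient for w2
    d_a1 = d_z2 * cache["w2"]                        # passed on to the previous layer
    d_z1 = d_a1 * cache["a1"] * (1.0 - cache["a1"])  # through the sigmoid
    d_w1 = d_z1 * cache["x"]                         # gradient for w1
    return d_w1, d_w2


loss_value, cache = forward(x=1.5, y=0.0, w1=0.8, w2=-0.4)
grad_w1, grad_w2 = backward(cache)
```

Every line of `backward` reads a value stored by `forward`, and each gradient is built from the one computed just before it, so nothing is recomputed along the shared part of the path.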

边栏推荐

- Small sample learning data set

- Diablo: immortality mobile game how to open more brick moving and novice introduction brick moving strategy

- Matlab bitwise and or not

- web中间件日志分析脚本3.0(shell脚本)

- The difference and application of in and exists in SQL statement

- Django database addition, deletion, modification and query

- How can I become an irreplaceable programmer?

- How can a team making facial mask achieve a revenue of more than 1 million a day?

- LVGL官方+100ASK合力打造的中文输入(拼音输入法)组件,让LVGL支持中文输入!

- The application and principle of changing IP software are very wide. Four methods of dynamic IP replacement are used to protect network privacy

猜你喜欢

Game thinking 17: Road finding engine recast and detour learning II: recast navigation grid generation process and limitations

用 QuestPDF操作生成PDF更快更高效!

ZABBIX monitoring host and resource alarm

Half of the people in the country run in Changsha. Where do half of the people in Changsha run?

嵌入式开发:技巧和窍门——设计强大的引导加载程序的7个技巧

android sqlite先分组后排序左连查询

Unityvr robot Scene 3 gripper

Detailed explanation of rest assured interface testing framework

The task will be launched before the joint commissioning of development

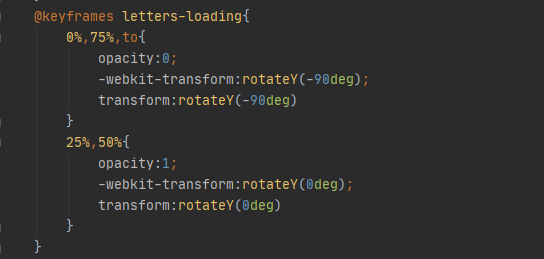

EasyCVR页面添加Loading加载效果的实现过程

随机推荐

Travel + strategy accelerated landing, jietu new product matrix exposure

[RTOS training camp] continue the program framework, tick interrupt supplement, preview, after-school homework and evening class questions

If the native family is general, and the school is also a college on the rotten street, how to go on the next journey

El table scroll bar settings

JDBC connection database (idea version)

SQL的CASE WHEN

Lua基础语法

Processes and threads

[RTOS training camp] equipment subsystem, questions of evening students

1.30 升级bin文件添加后缀及文件长度

Game thinking 17: Road finding engine recast and detour learning II: recast navigation grid generation process and limitations

【软件开发规范二】《禁止项开发规范》

Working principle of ZK rollups

How to copy and paste QT? (QClipboard)

Detailed explanation of at and crontab commands of RHCE and deployment of Chrony

The difference and application of in and exists in SQL statement

What is the dynamic IP address? Why do you recommend dynamic IP proxy?

【软件开发规范三】【软件版本命名规范】

【RTOS训练营】上节回顾、空闲任务、定时器任务、执行顺序、调度策略和晚课提问

200 yuan a hair dryer, only a week, to achieve 2million?