
Pymoo Learning (7): Parallelization

2022-07-25 18:59:00 【因吉】

1 Introduction

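In practice, parallelization can significantly speed up optimization. Population-based algorithms evaluate a whole set of solutions at each step, so that evaluation can be parallelized directly.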

2 Vectorized Matrix Operations

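One approach is to use NumPy matrix operations, which almost all test problems implemented in pymoo already do. By default, evaluation is not element-wise (`elementwise_evaluation` is `False`), which means `_evaluate` receives a whole set of solutions at once. Each row of the input matrix `x` is therefore one individual and each column one variable: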

```python
import numpy as np

from pymoo.core.problem import Problem
from pymoo.algorithms.soo.nonconvex.ga import GA
from pymoo.optimize import minimize


class MyProblem(Problem):

    def __init__(self, **kwargs):
        super().__init__(n_var=10, n_obj=1, n_constr=0, xl=-5, xu=5, **kwargs)

    def _evaluate(self, x, out, *args, **kwargs):
        # x has shape (n_individuals, n_var): one row per individual,
        # so the whole population is evaluated with a single NumPy call
        out["F"] = np.sum(x ** 2, axis=1)


res = minimize(MyProblem(), GA())
print('Threads:', res.exec_time)
```

Output:

Threads: 1.416006326675415
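To make the shape convention concrete without running an optimizer, here is a small NumPy-only sketch. The population values are arbitrary and chosen only for illustration. Each row of `X` is one individual, and `np.sum(X ** 2, axis=1)` returns one objective value per row, which is what the vectorized `_evaluate` above writes into `out["F"]`.

```python
import numpy as np

# Hypothetical population: 3 individuals (rows), 4 variables (columns)
X = np.array([
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 2.0, 0.0, 0.0],
    [-1.0, 1.0, -1.0, 1.0],
])

# One vectorized call evaluates the whole population (sum over variables, axis=1)
F_vectorized = np.sum(X ** 2, axis=1)

# Equivalent element-wise loop, one individual at a time (what vectorization avoids)
F_loop = np.array([np.sum(x ** 2) for x in X])

print(F_vectorized)  # [0. 5. 4.]
```

The loop and the vectorized call give the same values; the vectorized form simply pushes the loop down into NumPy.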

3 Starmap Interface

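Starmap, provided by the Python standard library's `multiprocessing.Pool.starmap`, offers a convenient way to parallelize. Here the evaluation must be element-wise, meaning each call to `_evaluate` evaluates only one solution. In pymoo 0.5 this is done by subclassing `ElementwiseProblem`; older versions used `elementwise_evaluation=True` instead.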
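`starmap` differs from `map` in that each element of the iterable is a tuple of arguments that is unpacked into the call. The following sketch, independent of pymoo, shows that pattern with a thread pool: each task receives exactly one solution `x`, and the results come back in input order. The function `evaluate_one` and its `scale` argument are hypothetical stand-ins for an element-wise objective.

```python
from multiprocessing.pool import ThreadPool

import numpy as np


def evaluate_one(x, scale):
    # Hypothetical element-wise objective: evaluates ONE solution per call
    return scale * float(np.sum(x ** 2))


# Hypothetical population: 4 solutions with 3 variables each
X = np.arange(12, dtype=float).reshape(4, 3)

# starmap unpacks each tuple, i.e. calls evaluate_one(X[k], 1.0) for every k
args = [(x, 1.0) for x in X]

with ThreadPool(4) as pool:
    F = pool.starmap(evaluate_one, args)

print(F)  # [5.0, 50.0, 149.0, 302.0]
```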

3.1 Threads

```python
import numpy as np
from multiprocessing.pool import ThreadPool

# pymoo 0.5.x API (pymoo 0.6 replaced this with StarmapParallelization)
from pymoo.core.problem import ElementwiseProblem, starmap_parallelized_eval
from pymoo.algorithms.soo.nonconvex.pso import PSO
from pymoo.optimize import minimize


class MyProblem(ElementwiseProblem):

    def __init__(self, **kwargs):
        super().__init__(n_var=10, n_obj=1, n_constr=0, xl=-5, xu=5, **kwargs)

    def _evaluate(self, x, out, *args, **kwargs):
        # element-wise: x is a single solution of shape (n_var,)
        out["F"] = np.sum(x ** 2)


# the number of threads to be used
n_threads = 8

# initialize the thread pool
pool = ThreadPool(n_threads)

# pass the pool's starmap interface to the problem
problem = MyProblem(runner=pool.starmap, func_eval=starmap_parallelized_eval)

res = minimize(problem, PSO(), seed=1, n_gen=100)
print('Threads:', res.exec_time)

pool.close()
```

Output:

Threads: 0.5501224994659424

3.2 Processes

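```python
import multiprocessing

# reuses MyProblem, PSO, minimize and starmap_parallelized_eval from 3.1;
# the __main__ guard is required where worker processes are spawned (e.g. Windows, macOS)
if __name__ == '__main__':
    n_processes = 8
    pool = multiprocessing.Pool(n_processes)

    problem = MyProblem(runner=pool.starmap, func_eval=starmap_parallelized_eval)

    res = minimize(problem, PSO(), seed=1, n_gen=100)
    print('Processes:', res.exec_time)

    pool.close()
```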

Output:

Processes: 1.1640357971191406

3.3 Dask

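A more advanced approach is to distribute the evaluation function across several workers; pymoo recommends Dask for this.

Note: the following libraries may need to be installed:

```shell
pip install dask distributed
```

The code is as follows: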

```python
import numpy as np
from dask.distributed import Client

from pymoo.core.problem import ElementwiseProblem, dask_parallelized_eval
from pymoo.algorithms.soo.nonconvex.pso import PSO
from pymoo.optimize import minimize


class MyProblem(ElementwiseProblem):

    def __init__(self, **kwargs):
        super().__init__(n_var=10, n_obj=1, n_constr=0, xl=-5, xu=5, **kwargs)

    def _evaluate(self, x, out, *args, **kwargs):
        # element-wise: x is a single solution
        out["F"] = np.sum(x ** 2)


if __name__ == '__main__':
    # start a local Dask cluster and connect a client to it
    client = Client()
    client.restart()
    print("STARTED")

    # pass the client as runner; each solution is evaluated as a Dask task
    problem = MyProblem(runner=client, func_eval=dask_parallelized_eval)

    res = minimize(problem, PSO(), seed=1, n_gen=100)
    print('Dask:', res.exec_time)

    client.close()
```

Output:

STARTED

Dask: 1.30446195602417

4 Custom Parallelization

4.1 Threads

```python
import numpy as np
from multiprocessing.pool import ThreadPool

from pymoo.core.problem import Problem
from pymoo.algorithms.soo.nonconvex.pso import PSO
from pymoo.optimize import minimize


class MyProblem(Problem):

    def __init__(self, **kwargs):
        super().__init__(n_var=10, n_obj=1, n_constr=0, xl=-5, xu=5, **kwargs)

    def _evaluate(self, X, out, *args, **kwargs):
        # X is the whole population; the parallelism is handled here by hand

        def my_eval(x):
            # objective of a single individual
            return (x ** 2).sum()

        # one argument list per individual (row of X)
        params = [[X[k]] for k in range(len(X))]

        # evaluate in the module-level thread pool created below
        F = pool.starmap(my_eval, params)

        out["F"] = np.array(F)


if __name__ == '__main__':
    pool = ThreadPool(8)

    problem = MyProblem()

    res = minimize(problem, PSO(), seed=1, n_gen=100)
    print('Threads:', res.exec_time)

    pool.close()
```

Output:

Threads: 1.0212376117706299
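Because the custom approach only changes what happens inside `_evaluate`, its core can be checked without pymoo. The sketch below uses a hypothetical helper `parallel_evaluate` that splits the population matrix into rows, evaluates them in a thread pool, and returns the 1-D array a vectorized `_evaluate` would produce; it then compares the result with the plain NumPy expression. Passing the pool in as an argument, rather than relying on a module-level `pool` as in the code above, is a choice made here so the helper is self-contained.

```python
from multiprocessing.pool import ThreadPool

import numpy as np


def my_eval(x):
    # Objective for a single individual (same as in the example above)
    return (x ** 2).sum()


def parallel_evaluate(X, pool):
    # Hypothetical helper mirroring the body of the custom _evaluate:
    # one argument list per row (individual) of X
    params = [[X[k]] for k in range(len(X))]
    F = pool.starmap(my_eval, params)
    # starmap preserves input order, so F[k] belongs to X[k]
    return np.array(F)


rng = np.random.default_rng(1)
X = rng.uniform(-5, 5, size=(20, 10))  # 20 individuals, 10 variables, bounds as in MyProblem

with ThreadPool(8) as pool:
    F_parallel = parallel_evaluate(X, pool)

F_vectorized = np.sum(X ** 2, axis=1)
print(np.allclose(F_parallel, F_vectorized))  # True
```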

4.2 Dask

```python
import numpy as np
from dask.distributed import Client

from pymoo.algorithms.soo.nonconvex.pso import PSO
from pymoo.core.problem import Problem
from pymoo.optimize import minimize


class MyProblem(Problem):

    def __init__(self, **kwargs):
        # a plain Problem is vectorized by default, so _evaluate receives the whole population
        super().__init__(n_var=10, n_obj=1, n_constr=0, xl=-5, xu=5, **kwargs)

    def _evaluate(self, X, out, *args, **kwargs):

        def fun(x):
            # objective of a single individual
            return np.sum(x ** 2)

        # submit one Dask task per individual, then collect the results in order
        jobs = [client.submit(fun, x) for x in X]
        # np.vstack replaces np.row_stack, which is deprecated in NumPy 2.0
        out["F"] = np.vstack([job.result() for job in jobs])


if __name__ == '__main__':
    # local cluster that uses threads instead of processes
    client = Client(processes=False)

    problem = MyProblem()

    res = minimize(problem, PSO(), seed=1, n_gen=100)
    print('Dask:', res.exec_time)

    client.close()
```

Output:

Dask: 19.102460861206055
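The pattern in this example is submit-then-collect: `client.submit(fun, x)` schedules one task per individual and returns a future, and `job.result()` blocks until that task has finished. As a sketch of the same pattern that runs without a Dask cluster, the code below swaps in the standard library's `concurrent.futures.ThreadPoolExecutor`, whose `submit` and `result` calls play the same roles. This is an analogy, not Dask's API. It also shows that stacking scalar results with `np.vstack` yields a column of shape `(n, 1)`.

```python
from concurrent.futures import ThreadPoolExecutor

import numpy as np


def fun(x):
    # Objective for a single individual, as in the Dask example
    return np.sum(x ** 2)


# Hypothetical population: 5 individuals, 10 variables; row k is filled with k + 1
X = np.ones((5, 10)) * np.arange(1, 6).reshape(-1, 1)

with ThreadPoolExecutor(max_workers=4) as executor:
    # submit() returns immediately with a Future, one per individual
    jobs = [executor.submit(fun, x) for x in X]
    # result() blocks until each task is done; order follows the jobs list
    F = np.vstack([job.result() for job in jobs])

print(F.shape)  # (5, 1)
print(F.ravel().tolist())  # [10.0, 40.0, 90.0, 160.0, 250.0]
```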


边栏推荐

- Everyone can participate in the official launch of open source activities. We sincerely invite you to experience!

- Youwei low code: use resolutions

- Alibaba cloud technology expert haochendong: cloud observability - problem discovery and positioning practice

- How to prohibit the use of 360 browser (how to disable the built-in browser)

- 21 days proficient in typescript-4 - type inference and semantic check

- Summer Challenge [FFH] this midsummer, a "cool" code rain!

- F5:企业数字化转型所需六大能力

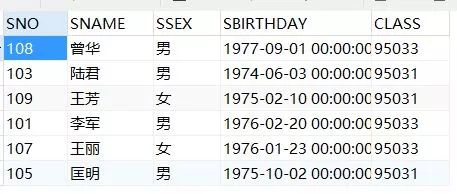

- MySQL子查询篇(精选20道子查询练习题)

- Vc/pe is running towards Qingdao

- Osmosis extends its cross chain footprint to poca through integration with axelar and moonbeam

猜你喜欢

SQL realizes 10 common functions of Excel, with original interview questions attached

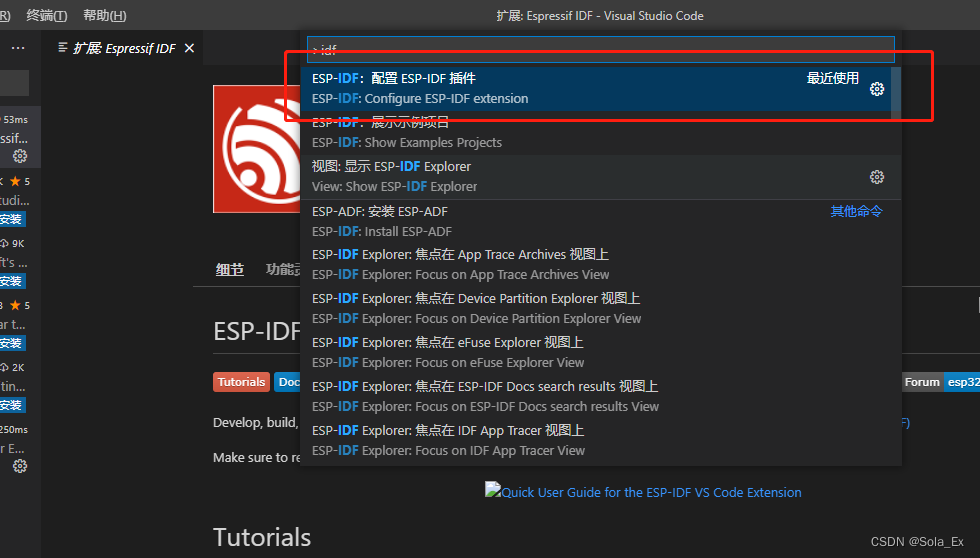

Esp32 S3 vscode+idf setup

Advanced software testing - test classification

Baklib:制作优秀的产品说明手册

The auction house is a VC, and the first time it makes a move, it throws a Web3

Northeast people know sexiness best

Analysis of the internet jam in IM development? Network disconnection?

Excellent test / development programmers should make breakthroughs and never forget their original intentions, so that they can always

Dynamic memory management

人人可参与开源活动正式上线,诚邀您来体验!

随机推荐

srec_cat 常用参数的使用

With a market value of 30billion yuan, the largest IPO in Europe in the past decade was re launched on the New York Stock Exchange

【919. 完全二叉树插入器】

[translation] LFX 2022 spring tutor qualification opening - apply for CNCF project before February 13!

Address book (I)

Pyqt5 click qtableview vertical header to get row data and click cell to get row data

MySQL sub query (selected 20 sub query exercises)

Weak network test tool -qnet

Ultimate doll 2.0 | cloud native delivery package

The bank's wealth management subsidiary accumulates power to distribute a shares; The rectification of cash management financial products was accelerated

Care for front-line epidemic prevention workers, Haocheng JIAYE and Gaomidian sub district office jointly build the great wall of public welfare

【加密周报】加密市场有所回温?寒冬仍未解冻!盘点上周加密市场发生的重大事件!

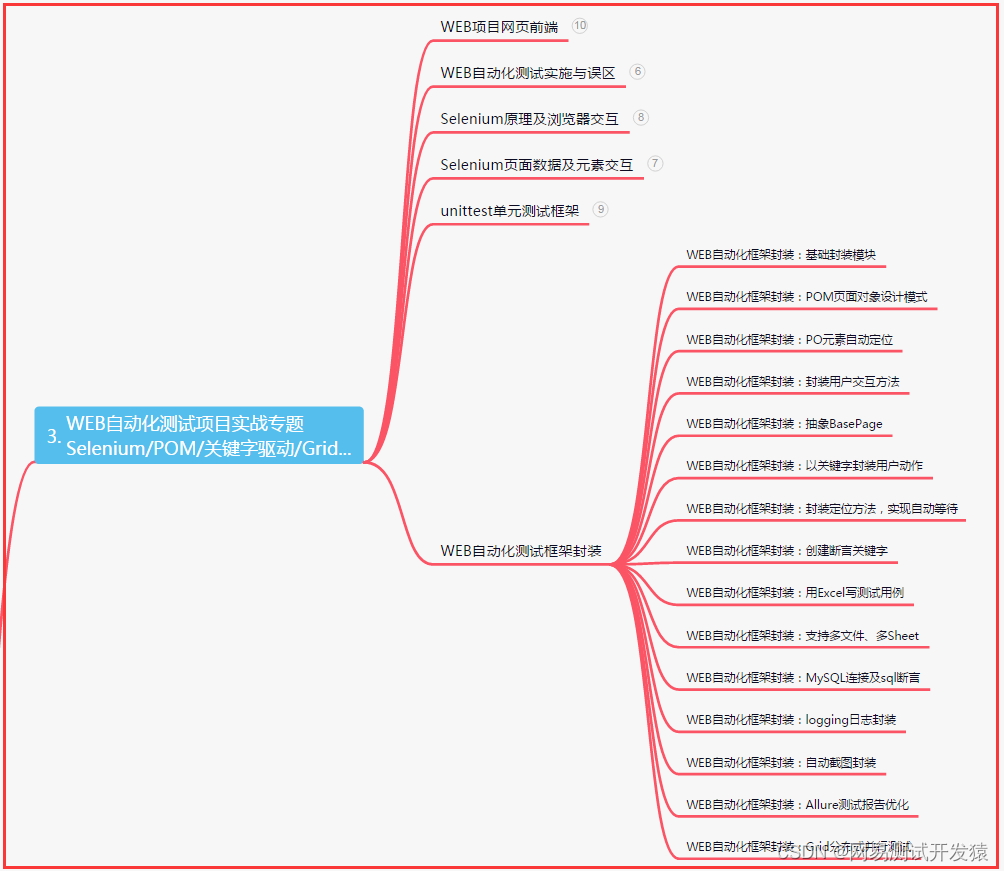

APP测试点(思维导图)

Huawei switch system software upgrade and security vulnerability repair tutorial

Analysis of the internet jam in IM development? Network disconnection?

「Wdsr-3」蓬莱药局 题解

聚智云算,向新而生| 有孚网络“专有云”开启新纪元

7/24 训练日志

给生活加点惊喜,做创意生活的原型设计师丨编程挑战赛 x 选手分享

【帮助中心】为您的客户提供自助服务的核心选项