Overview of multi-sensor fusion: FOV and BEV

2022-07-23 07:42:00 【Hermit_ Rabbit】

0. Introduction

After reading a lot of work on multi-sensor systems, the author summarizes the methods of multi-sensor fusion here. This article starts from a single sensor and works step by step toward multiple sensors. The earlier post "Detailed explanation of multi-sensor fusion" introduced, at the algorithm level, the categories of multi-sensor fusion and how data is passed between stages, and the post "Multi-sensor fusion perception: sensor extrinsic calibration and online calibration learning" showed readers how to calibrate multiple sensors. This article summarizes the categories of multi-sensor fusion from the perspective of methods, together with the author's own understanding and thoughts.

1. FOV perspective

FOV is one of the perspectives closest to human vision and has a long history; today's 2D and 3D object detection both started from the FOV perspective. In deep learning, compared with the BEV methods that have become popular in recent years, FOV methods respond faster (most BEV methods use Transformers) and have more datasets available. However, FOV also has drawbacks: occlusion, scale variation (objects appear at different scales at different depths), difficulty of fusing with other modalities, and high fusion loss (LiDAR, radar and similar sensors are better suited to the BEV perspective).

1.1 FOV: 2D object detection

There is not much to add here: most mainstream object detection algorithms of previous years work from the FOV perspective. They are commonly categorized as one-stage or two-stage, and as anchor-based or anchor-free. As an example, consider FCOS, a pioneering anchor-free work of recent years. It is one of the first detectors that does not rely on anchors yet remains competitive with anchor-based one-stage and two-stage detectors. FCOS reformulates object detection as per-pixel prediction; it uses multi-level prediction to improve recall and to resolve the ambiguity caused by overlapping bounding boxes. It also introduces a "center-ness" branch, which helps suppress low-quality predicted boxes and markedly improves overall performance. Because there are no anchors, it avoids anchor-related computation such as the intersection over union (IoU) between anchor boxes and ground-truth boxes. Ideas from FCOS are also used in later models such as VFNet and YOLOX.
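
To make the "center-ness" idea concrete, here is a minimal sketch. It assumes each pixel location regresses its distances (l, t, r, b) to the four sides of the box, and computes center-ness as sqrt(min(l, r)/max(l, r) * min(t, b)/max(t, b)). The helper names and the example box are hypothetical and chosen only for illustration.

```python
import math

def ltrb_targets(px, py, box):
    """Distances from pixel (px, py) to the left, top, right, bottom box sides."""
    x0, y0, x1, y1 = box
    return px - x0, py - y0, x1 - px, y1 - py

def centerness(l, t, r, b):
    """Center-ness in [0, 1]: 1 at the box center, decaying toward the edges."""
    if min(l, t, r, b) <= 0:
        return 0.0  # location lies on or outside the box
    return math.sqrt((min(l, r) / max(l, r)) * (min(t, b) / max(t, b)))

# Hypothetical ground-truth box (x0, y0, x1, y1) in pixels.
box = (10.0, 20.0, 50.0, 60.0)
center = centerness(*ltrb_targets(30.0, 40.0, box))     # exactly at the center
near_edge = centerness(*ltrb_targets(12.0, 40.0, box))  # close to the left side
print(center, near_edge)
```

At inference time the center-ness is multiplied with the classification score, so boxes predicted from locations far from an object's center are ranked lower and tend to be removed by non-maximum suppression.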

1.2 FOV: 3D object detection

Here we take FCOS3D as an example. Building on FCOS, the authors partially modify the regression branch so that, in addition to regressing the center point, it also predicts further quantities such as the center offset, the depth and the 3D bounding-box size, thereby carrying a 2D detector over to 3D detection. Other methods, such as YOLO3D, likewise take a traditional 2D detector and apply it to 3D detection after simple modifications.

1.3 FOV: depth estimation

With the rapid development of deep learning, learned depth estimation has also emerged in recent years; one of the better-known works is Pseudo-LiDAR. For stereo or monocular images, a depth map is predicted first (for stereo, by converting the disparity map to a depth map) and then back-projected into a 3D point cloud in the LiDAR coordinate system. The result is called pseudo-LiDAR and is processed just like real LiDAR data, so any LiDAR-based detection algorithm can be applied to it.
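
The two steps above can be sketched as follows. This is a minimal illustration assuming a pinhole camera with intrinsics fx, fy, cx, cy and a rectified stereo pair, where depth = focal length * baseline / disparity. The points are returned in the camera frame; moving them into the LiDAR frame would additionally need the camera-to-LiDAR extrinsics, which are omitted here. All numeric values are made up.

```python
def disparity_to_depth(disparity, focal_px, baseline_m):
    """Depth in metres per pixel; non-positive disparities become None."""
    return [[focal_px * baseline_m / d if d > 0 else None for d in row]
            for row in disparity]

def backproject(depth, fx, fy, cx, cy):
    """Lift every valid pixel (u, v, depth) to a 3D point in the camera frame."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z is None:
                continue  # no depth estimate for this pixel
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points

# Toy 2x2 disparity map (pixels) with hypothetical camera parameters.
disparity = [[0.0, 54.0],
             [27.0, 13.5]]
depth = disparity_to_depth(disparity, focal_px=700.0, baseline_m=0.54)
cloud = backproject(depth, fx=700.0, fy=700.0, cx=0.5, cy=0.5)
print(cloud)
```

Because depth is inversely proportional to disparity, a fixed disparity error translates into a depth error that grows with distance, which is one reason distant objects are harder for this kind of pipeline.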

Approaches that make use of depth estimation fall mainly into three groups:

- Train a dedicated backbone to encode depth information, although this approach is not very accurate.

- Convert the depth information into pseudo-LiDAR and treat it as point cloud information.

- Learn the mapping between BEV features and the image in a BEV-based way, which avoids the error introduced by predicting depth directly.

The authors of the paper ran an experiment: in the figure, the left column shows the original depth map and its corresponding pseudo-LiDAR point cloud. Convolving the original depth map gives the depth map in the upper right, from which the pseudo-LiDAR in the lower right is generated. As can be seen, the convolved depth map produces large depth distortion.
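
A one-dimensional toy version of this effect is sketched below, assuming a uniform (mean) kernel; the depth values and the `box_filter_1d` helper are hypothetical. A row of depth pixels crosses from an object at 5 m to a background at 30 m, and averaging across the boundary creates depths that belong to neither surface.

```python
def box_filter_1d(values, k):
    """Mean filter with odd window k; borders are handled by clamping indices."""
    half = k // 2
    n = len(values)
    out = []
    for i in range(n):
        window = [values[min(max(j, 0), n - 1)]
                  for j in range(i - half, i + half + 1)]
        out.append(sum(window) / k)
    return out

# One image row: an object at 5 m in front of a background at 30 m.
depth_row = [5.0, 5.0, 5.0, 5.0, 30.0, 30.0, 30.0, 30.0]
smoothed = box_filter_1d(depth_row, 3)

# Depths that match neither surface: after back-projection these become
# points floating in empty space between the object and the background.
spurious = [z for z in smoothed if z not in (5.0, 30.0)]
print(smoothed, spurious)
```

In the image the smoothed row still looks plausible, but once back-projected the intermediate depths smear the object boundary along the viewing ray, which is the kind of distortion described above.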

1.4 FOV: LiDAR-camera fusion

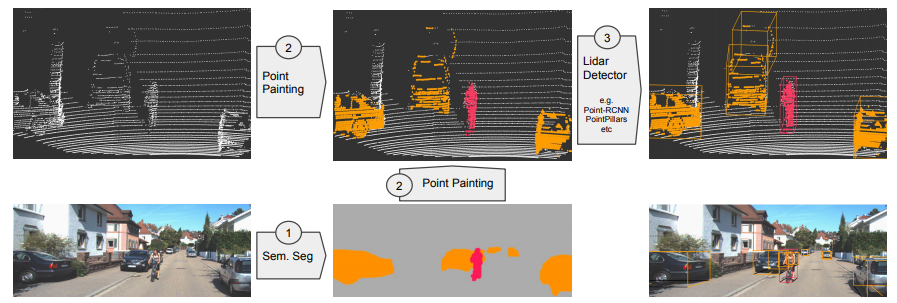

PointPainting: image-assisted point cloud detection, described in the paper itself as sequential fusion. The authors argue that when going from FOV to BEV, inaccurate depth information leads to poor fusion, while detection on the sparse point cloud alone suffers from false detections and poor classification. To address the lack of texture information in LiDAR-only point clouds, fusion methods in the style of PointPainting first run semantic segmentation on the image and then attach the segmentation results to the points, which enriches the point cloud semantics and improves detection performance.
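
The painting step can be sketched roughly as follows. For brevity this sketch assumes the points are already expressed in the camera frame (a real pipeline would first apply the LiDAR-to-camera extrinsics), uses a pinhole model, and simply drops points that fall outside the image; those choices and all names are illustrative assumptions, not the paper's implementation.

```python
import math

def paint_points(points, seg_scores, fx, fy, cx, cy):
    """Append per-class segmentation scores to each point that projects into the image.

    points:     iterable of (x, y, z, intensity) in the camera frame
    seg_scores: H x W grid, each entry a tuple of class scores
    """
    height, width = len(seg_scores), len(seg_scores[0])
    painted = []
    for x, y, z, intensity in points:
        if z <= 0:
            continue  # behind the camera
        u = math.floor(fx * x / z + cx)
        v = math.floor(fy * y / z + cy)
        if 0 <= u < width and 0 <= v < height:
            painted.append((x, y, z, intensity, *seg_scores[v][u]))
    return painted

# Toy 2x2 segmentation output with two classes: (background, car).
seg_scores = [[(0.9, 0.1), (0.8, 0.2)],
              [(0.3, 0.7), (0.1, 0.9)]]
points = [(0.5, 0.5, 1.0, 0.9),    # projects to pixel (1, 1)
          (-0.5, -0.5, 1.0, 0.3),  # projects to pixel (0, 0)
          (5.0, 0.0, 1.0, 0.2),    # outside the image
          (0.0, 0.0, -1.0, 0.1)]   # behind the camera
painted = paint_points(points, seg_scores, fx=1.0, fy=1.0, cx=1.0, cy=1.0)
print(painted)
```

The painted points keep their original layout and only gain extra channels, so a LiDAR detector can consume them after widening its input dimension.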

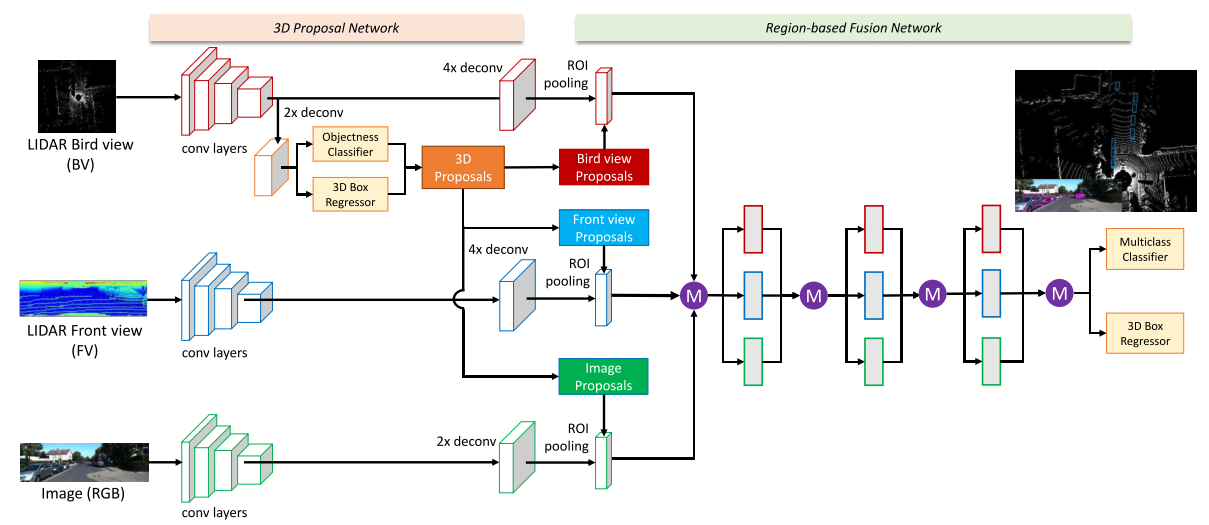

MV3D: LiDAR-assisted image detection, parallel. The authors note that LiDAR point clouds mainly suffer from three problems: (1) sparsity, (2) lack of order, and (3) redundancy. The method takes both point clouds and images as input, and the point cloud is processed into two formats. The first is a bird's-eye view (BV): the point cloud is rasterized into a three-dimensional grid, each cell stores the maximum height of the LiDAR points falling in it, and each height layer of the grid acts as one channel; reflectance (intensity) and density channels are then added. The second is a front view (FV): the LiDAR point cloud is projected into a cylindrical coordinate system (some papers call this the range view) and rasterized into a two-dimensional grid in that coordinate system, from which height, distance and intensity channels are built.
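
A simplified sketch of the bird's-eye-view encoding is given below. It is not the paper's implementation: it uses a single height slice instead of several, takes the intensity from the highest point in each cell, and normalizes density as min(1, log(N + 1) / log(64)). The ranges, cell size and function name are hypothetical.

```python
import math

def bev_maps(points, x_range, y_range, cell):
    """Rasterize (x, y, z, reflectance) points into height, intensity and density maps."""
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    height = [[0.0] * ny for _ in range(nx)]
    intensity = [[0.0] * ny for _ in range(nx)]
    count = [[0] * ny for _ in range(nx)]
    for x, y, z, r in points:
        if not (x_range[0] <= x < x_range[1] and y_range[0] <= y < y_range[1]):
            continue  # outside the region of interest
        i = min(int((x - x_range[0]) / cell), nx - 1)
        j = min(int((y - y_range[0]) / cell), ny - 1)
        if count[i][j] == 0 or z > height[i][j]:
            height[i][j] = z      # keep the highest point of the cell
            intensity[i][j] = r   # and the reflectance of that point
        count[i][j] += 1
    density = [[min(1.0, math.log(c + 1) / math.log(64)) for c in row]
               for row in count]
    return height, intensity, density

points = [(0.5, -1.0, 0.2, 0.1),
          (1.0, -0.5, 1.5, 0.8),   # same cell as the first point, but higher
          (3.0, 1.0, -0.3, 0.4),
          (9.0, 0.0, 1.0, 0.5)]    # out of range, ignored
height, intensity, density = bev_maps(points, (0.0, 4.0), (-2.0, 2.0), cell=2.0)
print(height, intensity, density)
```

Stacking the three maps gives an image-like tensor that a 2D convolutional network can process; the front view is built the same way after converting each point to cylindrical coordinates.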

F-PointNet: image-assisted LiDAR detection, serial. The paper runs a 2D detector on the RGB image, and each resulting 2D bounding box defines a 3D frustum that contains the point cloud of the foreground object. Then, working on the 3D points inside that frustum (CenterFusion takes its inspiration from this), a PointNet++ network performs 3D instance segmentation followed by 3D bounding-box estimation.
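
The frustum selection step can be sketched as below, again assuming points already in the camera frame and a pinhole camera; the function name, intrinsics and box are hypothetical. A point belongs to the frustum if its image projection falls inside the 2D box.

```python
def frustum_points(points, box2d, fx, fy, cx, cy):
    """Keep the 3D points whose image projection lies inside a 2D detection box."""
    u_min, v_min, u_max, v_max = box2d
    kept = []
    for x, y, z in points:
        if z <= 0:
            continue  # behind the camera
        u = fx * x / z + cx
        v = fy * y / z + cy
        if u_min <= u <= u_max and v_min <= v <= v_max:
            kept.append((x, y, z))
    return kept

points = [(0.0, 0.0, 10.0),   # on the object
          (0.5, 0.0, 10.0),   # on the object
          (0.5, 0.0, 2.0),    # projects outside the box
          (0.0, 0.0, 40.0),   # background seen through the same box
          (0.0, 0.0, -5.0)]   # behind the camera
kept = frustum_points(points, box2d=(40.0, 40.0, 60.0, 60.0),
                      fx=100.0, fy=100.0, cx=50.0, cy=50.0)
print(kept)
```

Note that the background point at 40 m survives the filter because it lies on the same viewing rays as the object. This is why an instance segmentation stage follows the frustum proposal.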

2. BEV perspective

BEV is short for bird's-eye view, also known as the "God's-eye" view. It is a perspective or coordinate system used to describe the perceived world in 3D. In computer vision, BEV also refers to an end-to-end technique in which a neural network transforms visual information from image space into BEV space. A useful reference is the live talk given by the Shanghai Artificial Intelligence Laboratory on June 22, 2022: "BEV perception: a new paradigm for next-generation autonomous driving perception algorithms".

BEV features have the following advantages: 1. They support multi-sensor fusion and make it easy for multiple downstream tasks to share features. 2. Objects do not suffer from perspective-induced scale distortion in BEV, so the model can concentrate on classification. 3. Multiple viewpoints can be fused to handle occlusion and overlapping objects. However, BEV features also have problems: for example, the grid size determines the granularity of detection, and a lot of storage is spent redundantly on background, because BEV stores global semantic information.
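
The trade-off between grid size and storage can be made concrete with some simple arithmetic. The sketch below uses hypothetical numbers (a 100 m by 100 m area, 256 feature channels, 4 bytes per value) purely to show how the cost scales.

```python
def bev_grid_cost(x_extent_m, y_extent_m, cell_m, channels, bytes_per_value=4):
    """Return (cells along x, cells along y, bytes) for a dense BEV feature map."""
    nx = round(x_extent_m / cell_m)
    ny = round(y_extent_m / cell_m)
    return nx, ny, nx * ny * channels * bytes_per_value

coarse = bev_grid_cost(100.0, 100.0, 0.5, 256)   # 0.5 m cells
fine = bev_grid_cost(100.0, 100.0, 0.25, 256)    # 0.25 m cells
print(coarse, fine, fine[2] / coarse[2])
```

Halving the cell size quadruples the memory of a dense BEV feature map, and most of those cells cover background, which is the redundancy mentioned above.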

2.1 Where BEV is generally used

In theory BEV can be applied in early, middle and late fusion. In practice early fusion is hard to realize, so BEV is rarely applied there; it is occasionally used in late fusion, and it is most often applied to feature-level fusion, which sits between data-level fusion and target-level fusion, that is, middle fusion.

Early fusion means collecting the data from every sensor and, after synchronizing it, fusing the raw data. Its advantage is that the information can be processed as a whole: the data is fused earlier, which makes it more strongly correlated. The challenge is equally clear. Visual data and LiDAR point clouds are heterogeneous and live in different coordinate systems, so when fusing them one can only either project the point cloud into image space to provide depth information for the image, or work in the point cloud coordinate system and color the points or render features onto them, so that the point cloud carries richer semantic information.

Middle fusion means using neural network models to extract the intermediate-layer features (that is, the effective features) of each sensor and then fusing the main effective features of the different sensors, which makes it more likely to reach the best inference result. Fusing effective features in BEV space has two benefits: less data is lost, and less compute is consumed (relative to early fusion). For these reasons, middle fusion in BEV space is the most common choice.

Late fusion means that each sensor runs its own deep learning model to infer the target objects and outputs results carrying that sensor's own attributes, and these results are then fused at the decision level. Its advantage is that the sensors perform target recognition independently, so they are well decoupled and can serve as redundant backups for one another. Late fusion also has shortcomings: by the time each sensor has finished target recognition, a lot of useful information has already been lost, which hurts perception accuracy. In addition, the final fusion algorithm is still rule-based, with sensor confidences set from prior knowledge, so its limitations are obvious.
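
To illustrate what such a rule-based scheme can look like, here is a deliberately simple sketch. Everything in it is an assumption made for illustration: detections are (x, y, score) tuples in a shared BEV frame with scores in (0, 1], the prior sensor confidences are hand-picked, and matching is greedy nearest-neighbor within a distance threshold.

```python
import math

# Hypothetical prior confidences, set by hand from experience with each sensor.
SENSOR_PRIOR = {"camera": 0.6, "lidar": 0.9}

def late_fuse(camera_dets, lidar_dets, max_dist=2.0):
    """Fuse per-sensor detections (x, y, score) with a fixed, rule-based scheme."""
    fused, used = [], set()
    for cam_x, cam_y, cam_s in camera_dets:
        # Greedily pick the closest unused LiDAR detection within max_dist.
        best, best_d = None, max_dist
        for k, (lx, ly, _) in enumerate(lidar_dets):
            d = math.hypot(cam_x - lx, cam_y - ly)
            if k not in used and d <= best_d:
                best, best_d = k, d
        wc = SENSOR_PRIOR["camera"] * cam_s
        if best is None:
            fused.append((cam_x, cam_y, wc))  # camera-only object
            continue
        used.add(best)
        lx, ly, ls = lidar_dets[best]
        wl = SENSOR_PRIOR["lidar"] * ls
        # Confidence-weighted position; keep the stronger weighted score.
        fused.append(((wc * cam_x + wl * lx) / (wc + wl),
                      (wc * cam_y + wl * ly) / (wc + wl),
                      max(wc, wl)))
    for k, (lx, ly, ls) in enumerate(lidar_dets):
        if k not in used:
            fused.append((lx, ly, SENSOR_PRIOR["lidar"] * ls))  # LiDAR-only object
    return fused

camera_dets = [(10.0, 0.0, 0.5), (30.0, 5.0, 0.8)]
lidar_dets = [(11.0, 0.0, 1.0)]
fused = late_fuse(camera_dets, lidar_dets)
print(fused)
```

The fusion only ever sees boxes and scores, never the underlying features, and its behavior is fixed by the hand-set priors and threshold. Both limitations described above are visible even in this small example.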

… For details, please refer to Guyuehome (古月居).

边栏推荐

猜你喜欢

电子招标采购商城系统:优化传统采购业务,提速企业数字化升级

UE4引擎的CopyTexture, CopyToResolveTarget

remove函数的实现

【每日一题】757. 设置交集大小至少为2

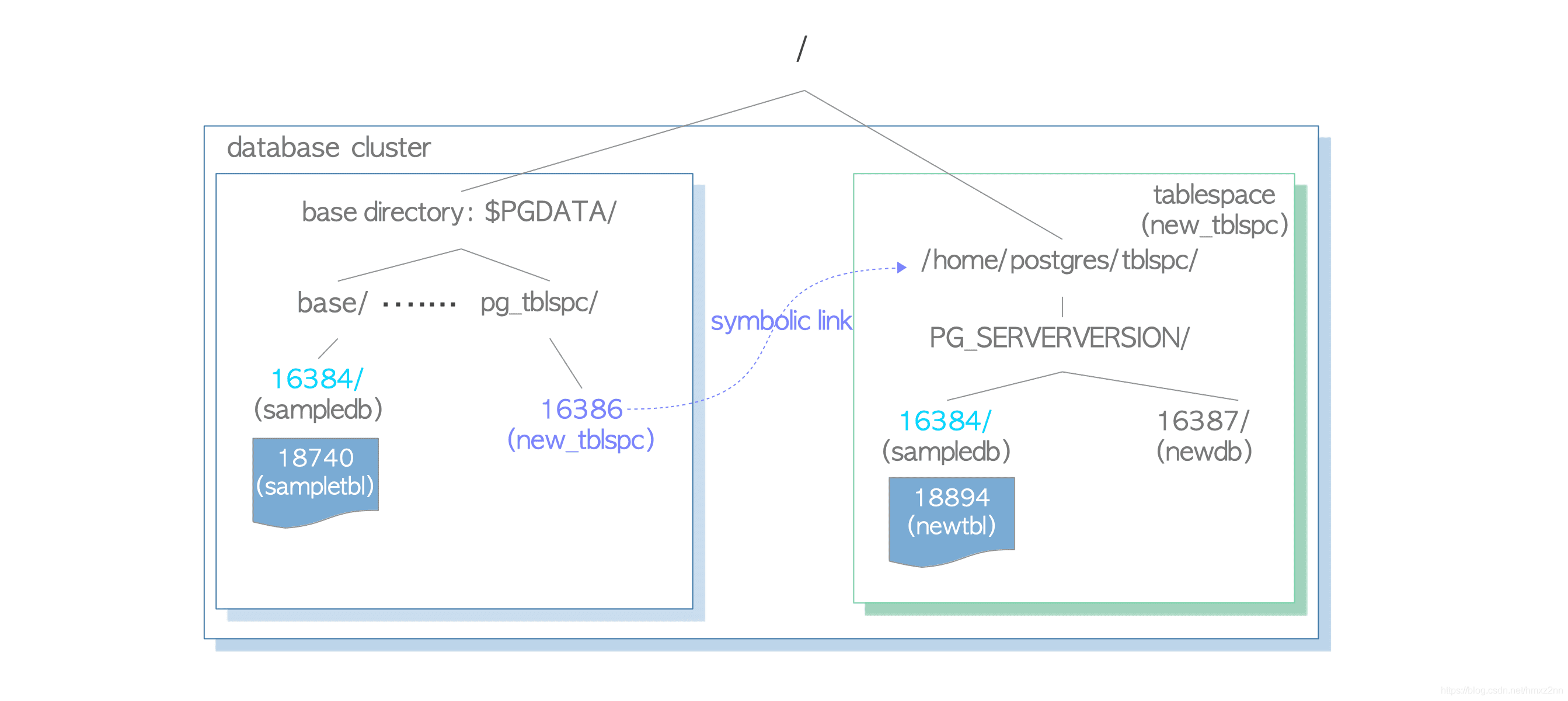

《postgresql指南--内幕探索》第一章 数据库集簇、数据库和数据表

Educational Codeforces Round 132 A - D

分析伦敦银的实时行行发展方法

RS485 communication OSI model network layer

Wechat campus second-hand book trading applet graduation design finished product (5) assignment

Codeforces Round #808 (Div. 2) A - D

随机推荐

iQOO 10系列来袭 OriginOS原系统强化手机体验

【翻译】Chaos Mesh移至CNCF孵化器

局域网SDN技术硬核内幕 - 16 三 从物到人 园区用户漫游的EVPN实现

Ambire 钱包开启 Twitter Spaces 系列

Redis——JedisConnectionException Could not get a resource from the pool

Application of workflow engine in vivo marketing automation

How to use the order flow analysis tool (Part 2)

树和二叉树

Custom view: levitation ball and accelerator ball

《postgresql指南--内幕探索》第一章 数据库集簇、数据库和数据表

Classes and objects (1)

2022 employment season surprise! The genuine Adobe software can finally be used for nothing

UE4引擎的CopyTexture, CopyToResolveTarget

小程序毕设作品之微信校园二手书交易小程序毕业设计成品(2)小程序功能

【技术科普】联盟链Layer2-论一种新的可能性

RS485 communication OSI model network layer

打板遇到的问题

Understanding service governance in distributed development

7. Learn Mysql to select a database

能量原理与变分法笔记11:形函数(一种降维思想)