
[Translation] Explicit and Implicit Batch in TensorRT

2022-07-27 02:03:00 【Master Fuwen】

This article is a translation of "Explicit Versus Implicit Batch".

Original address:

https://docs.nvidia.com/deeplearning/tensorrt/developer-guide/index.html#explicit-implicit-batch

I have started writing regularly of late. The writing is only so-so, but it is much better than my earlier posts [facepalm, laughing and crying]. Sure enough, people can only write seriously when they really have time to think. Good things take time to polish, and the old saying does not lie.

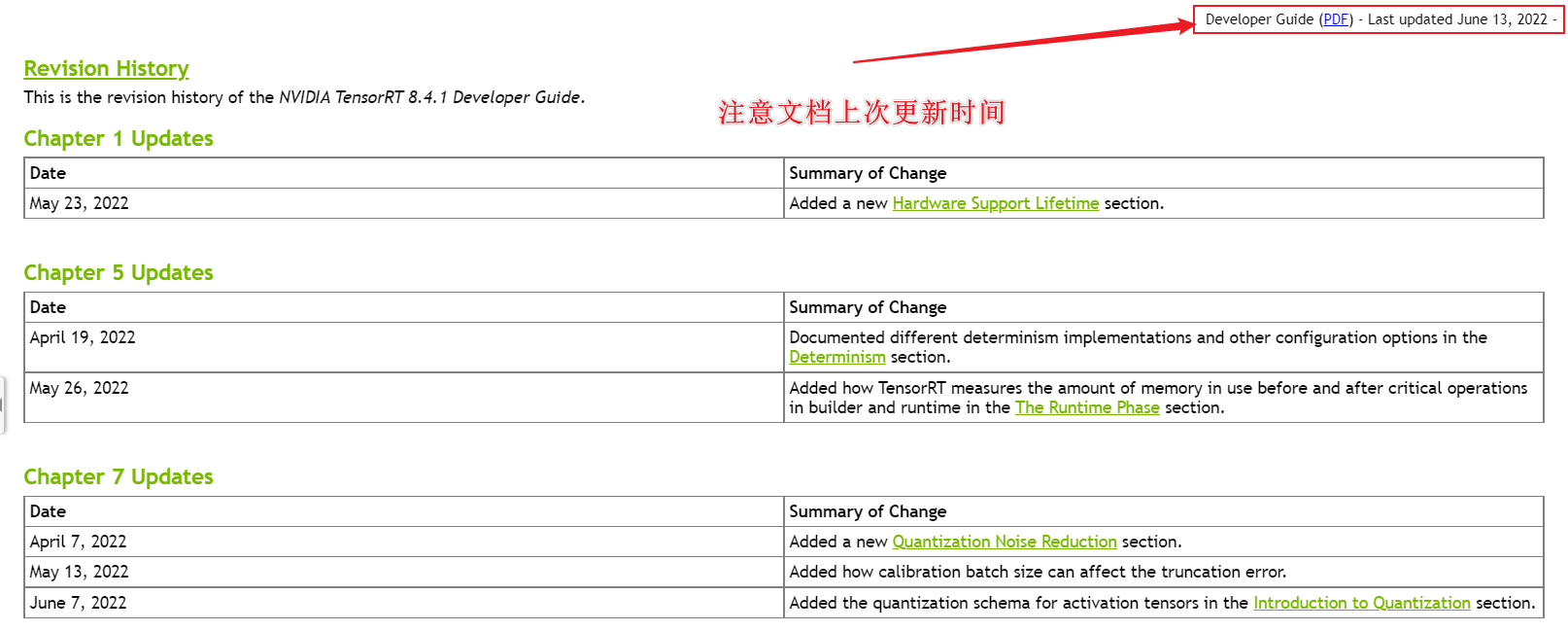

The original was last updated on June 13, 2022. Check the original section for later updates; if it has been updated since, this translation is out of date.

Below, I quote one paragraph of the original at a time and then translate it.

TensorRT supports two modes for specifying a network: explicit batch and implicit batch.

TensorRT has two modes for specifying a network: explicit batch mode and implicit batch mode.

In implicit batch mode, every tensor has an implicit batch dimension and all other dimensions must have constant length. This mode was used by early versions of TensorRT, and is now deprecated but continues to be supported for backwards compatibility.

In implicit batch mode, every tensor has an implicit batch dimension, and all other dimensions must have constant length.

(Translator's note: I guess this means a shape like [-1, 3, 224, 224].) Implicit batch mode was used by early versions of TensorRT. It is now deprecated, but it is still supported for backwards compatibility.

Oh, a new phrase for me: "backwards compatibility", haha.

In explicit batch mode, all dimensions are explicit and can be dynamic, that is their length can change at execution time. Many new features, such as dynamic shapes and loops, are available only in this mode. It is also required by the ONNX parser.

In explicit batch mode, all dimensions are explicit and can be dynamic, meaning that the length of each dimension can change at execution time.

Many new features, such as dynamic shapes and loops, are available only in explicit batch mode.

It is worth noting that the ONNX parser also requires this mode.

For example, consider a network that processes N images of size HxW with 3 channels, in NCHW format. At runtime, the input tensor has dimensions [N,3,H,W]. The two modes differ in how the INetworkDefinition specifies the tensor's dimensions:

- In explicit batch mode, the network specifies [N,3,H,W].

- In implicit batch mode, the network specifies only [3,H,W]. The batch dimension N is implicit.

For example, consider this scenario: a network processes N images of size H×W with 3 channels, that is, in NCHW format.

At runtime, the input tensor has dimensions [N,3,H,W]. The two modes differ in how the INetworkDefinition specifies the tensor's dimensions:

- In explicit batch mode, the network specifies [N,3,H,W].

- In implicit batch mode, the network specifies only [3,H,W]; the batch dimension N is implicit.
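The difference can be sketched in plain Python. This is only a conceptual illustration, not TensorRT code: the helper `network_dims` and the sample shape are made up for this example.

```python
def network_dims(runtime_shape, explicit_batch):
    """Return the dimensions the network definition would declare.

    Hypothetical helper for illustration only; it is not a TensorRT API.
    """
    if explicit_batch:
        # Explicit batch: the batch axis is declared like any other axis.
        return list(runtime_shape)
    # Implicit batch: the leading batch axis is left out of the declaration.
    return list(runtime_shape[1:])


runtime_shape = [8, 3, 224, 224]  # N=8 images, 3 channels, 224x224 (sample values)
print(network_dims(runtime_shape, explicit_batch=True))   # [8, 3, 224, 224]
print(network_dims(runtime_shape, explicit_batch=False))  # [3, 224, 224]
```

In the implicit case the network never sees N, which is why operations that need to refer to the batch axis cannot be written.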

Operations that “talk across a batch” are impossible to express in implicit batch mode because there is no way to specify the batch dimension in the network. Examples of inexpressible operations in implicit batch mode:

- reducing across the batch dimension

- reshaping the batch dimension

- transposing the batch dimension with another dimension

Operations that work across the batch dimension cannot be expressed in implicit batch mode, because there is no way to specify the batch dimension in the network. The following operations are inexpressible in implicit batch mode:

- reducing across the batch dimension (a reduction along the batch dimension, perhaps [N,3,H,W]->[1,3,H,W])

- reshaping the batch dimension (perhaps [N,3,H,W]->[X,3,H,W])

- transposing the batch dimension with another dimension (perhaps [N,3,H,W]->[3,N,H,W])
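To make the three operations concrete, here is a small plain-Python sketch on a toy batch of shape [N=2, C=3]. It is not TensorRT code, and the function names are invented for illustration. Each function has to touch axis 0, which is exactly the axis an implicit batch network cannot name.

```python
def reduce_over_batch(batch):
    """Sum across the batch axis: [N, C] -> [C]."""
    return [sum(column) for column in zip(*batch)]


def transpose_batch(batch):
    """Swap the batch axis with the next axis: [N, C] -> [C, N]."""
    return [list(column) for column in zip(*batch)]


def flatten_batch(batch):
    """Reshape the batch axis away: [N, C] -> [N * C]."""
    return [value for item in batch for value in item]


batch = [[1, 2, 3],
         [4, 5, 6]]  # shape [N=2, C=3]

print(reduce_over_batch(batch))  # [5, 7, 9]
print(transpose_batch(batch))    # [[1, 4], [2, 5], [3, 6]]
print(flatten_batch(batch))      # [1, 2, 3, 4, 5, 6]
```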

The exception is that a tensor can be broadcast across the entire batch, through the ITensor::setBroadcastAcrossBatch method for network inputs, and implicit broadcasting for other tensors.

Of course, there is an exception: a tensor can be broadcast across the entire batch, through the ITensor::setBroadcastAcrossBatch method for network inputs and through implicit broadcasting for other tensors.

(I slowly typed out a question mark here; I don't quite understand what this means...)
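One plausible reading, sketched in plain Python rather than with the TensorRT API: a tensor that is broadcast across the batch has no batch dimension of its own, and the same values are reused for every item in the batch. The function name and data below are made up for illustration.

```python
def add_broadcast_across_batch(batch, shared):
    """Add one un-batched tensor `shared` to every item of `batch`.

    `batch` has shape [N, C]; `shared` has shape [C] and no batch axis.
    Illustrative helper only, not a TensorRT call.
    """
    return [[x + s for x, s in zip(item, shared)] for item in batch]


batch = [[1, 2, 3],
         [4, 5, 6]]        # shape [N=2, C=3]
shared = [10, 20, 30]      # shape [C=3], the same for every batch item

print(add_broadcast_across_batch(batch, shared))
# [[11, 22, 33], [14, 25, 36]]
```

Under this reading, nothing about the operation depends on N, so it stays expressible even when the network cannot refer to the batch axis.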

Explicit batch mode erases the limitations - the batch axis is axis 0. A more accurate term for explicit batch would be “batch oblivious,” because in this mode, TensorRT attaches no special semantic meaning to the leading axis, except as required by specific operations. Indeed in explicit batch mode there might not even be a batch dimension (such as a network that handles only a single image) or there might be multiple batch dimensions of unrelated lengths (such as comparison of all possible pairs drawn from two batches).

Explicit batch mode removes these limitations: the batch axis is axis 0.

A more accurate term for explicit batch would be "batch oblivious", because in this mode TensorRT attaches no special semantic meaning to the leading axis, except as required by specific operations. (That is, the tensor is simply treated as a whole, and the batch dimension carries no special semantics.)

In fact, in explicit batch mode there might not even be a batch dimension (for example, a network that handles only a single image), or there might be multiple batch dimensions of unrelated lengths (for example, comparing all possible pairs drawn from two batches).
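The "all possible pairs" case can be illustrated with a short plain-Python sketch. The data and the function name are invented for this example, and it is not TensorRT code. Two batches of unrelated lengths M and N produce a result with two batch-like axes, [M, N], and neither axis is special.

```python
def pairwise_abs_diff(batch_a, batch_b):
    """Compare every item of batch_a with every item of batch_b.

    Input shapes [M] and [N]; output shape [M, N].
    """
    return [[abs(a - b) for b in batch_b] for a in batch_a]


batch_a = [1, 5, 9]  # M = 3
batch_b = [2, 4]     # N = 2

result = pairwise_abs_diff(batch_a, batch_b)
print(result)                        # [[1, 3], [3, 1], [7, 5]]
print(len(result), len(result[0]))   # 3 2
```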

The choice of explicit versus implicit batch must be specified when creating the INetworkDefinition, using a flag. Here is the C++ code for explicit batch mode:

IBuilder* builder = ...;

INetworkDefinition* network = builder->createNetworkV2(1U << static_cast<uint32_t>(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH));

For implicit batch, use createNetwork or pass a 0 to createNetworkV2.

When creating the INetworkDefinition, you must use a flag to choose explicit or implicit batch. Here is the C++ code for explicit batch mode:

IBuilder* builder = ...;

// Set only the kEXPLICIT_BATCH bit in the creation-flags bit mask.
INetworkDefinition* network = builder->createNetworkV2(1U << static_cast<uint32_t>(NetworkDefinitionCreationFlag::kEXPLICIT_BATCH));

For implicit batch, use the createNetwork method, or pass 0 to createNetworkV2.

Here is the Python code for explicit batch mode:

builder = trt.Builder(...)

builder.create_network(1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH))

For implicit batch, omit the argument or pass a 0.

Here is the Python code for explicit batch mode:

import tensorrt as trt

builder = trt.Builder(...)

# Set only the EXPLICIT_BATCH bit in the creation-flags bit mask.
builder.create_network(1 << int(trt.NetworkDefinitionCreationFlag.EXPLICIT_BATCH))

For implicit batch mode, omit the argument or pass 0.
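The expression `1 << int(flag)` turns a flag's enum value into one bit of a bit mask. The sketch below shows that arithmetic without importing TensorRT. `CreationFlag` is a hypothetical stand-in for `trt.NetworkDefinitionCreationFlag`, and the value 0 for `EXPLICIT_BATCH` is an assumption made for this example.

```python
from enum import IntEnum


class CreationFlag(IntEnum):
    """Hypothetical stand-in for trt.NetworkDefinitionCreationFlag."""
    EXPLICIT_BATCH = 0  # assumed enum value, used here as a bit position


def flags_to_mask(*flags):
    """Combine flags into a bit mask: flag i sets bit i."""
    mask = 0
    for flag in flags:
        mask |= 1 << int(flag)
    return mask


def is_explicit_batch(mask):
    """Check whether the EXPLICIT_BATCH bit is set in the mask."""
    return bool(mask & (1 << int(CreationFlag.EXPLICIT_BATCH)))


explicit_mask = flags_to_mask(CreationFlag.EXPLICIT_BATCH)
implicit_mask = flags_to_mask()  # no flags set, the "pass a 0" case

print(explicit_mask, is_explicit_batch(explicit_mask))  # 1 True
print(implicit_mask, is_explicit_batch(implicit_mask))  # 0 False
```

This is why passing 0 selects implicit batch: a mask with no bits set has the explicit batch flag switched off.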

边栏推荐

猜你喜欢

MySQL索引

Proxmox ve installation and initialization

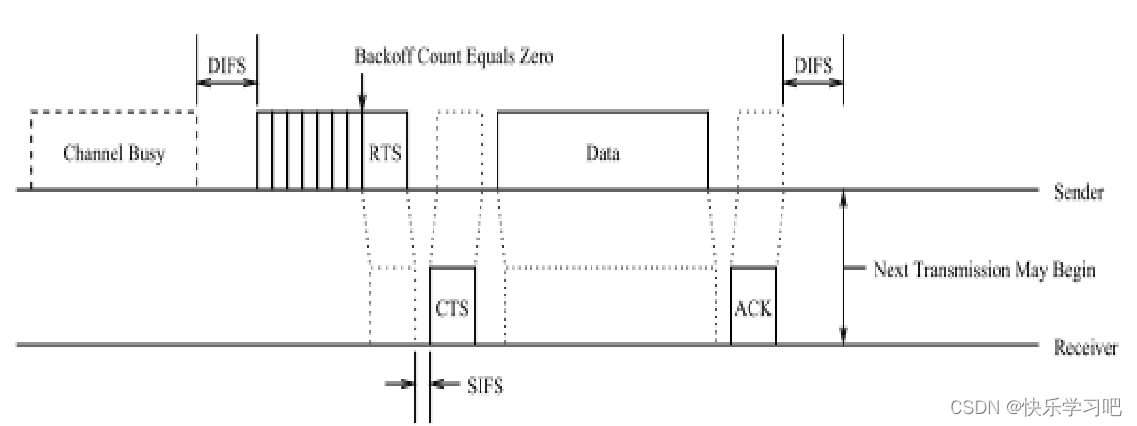

Review of wireless sensor networks (Bilingual)

Text three swordsman two

RT thread learning

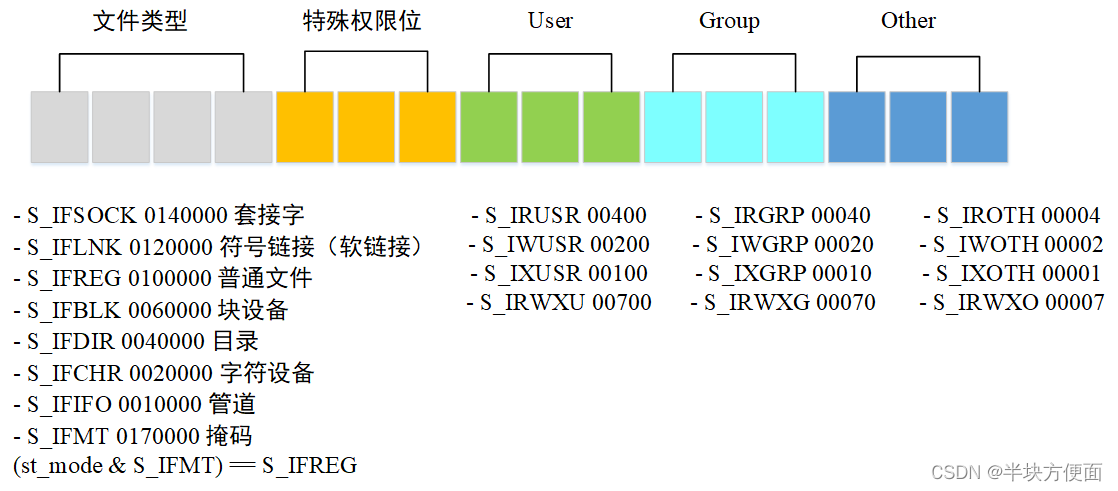

IO function of standard C library

Hands on experiment of network and VPC

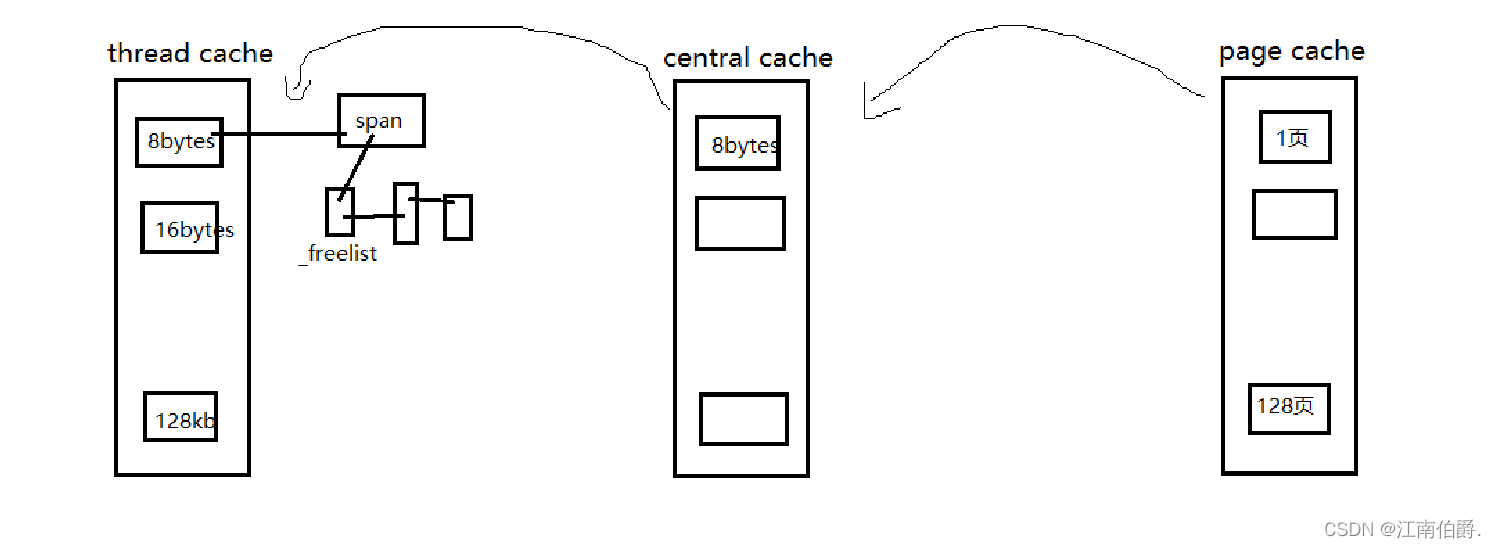

Project | implement a high concurrency memory pool

Homework 1-4 learning notes

MySQL master-slave replication and read-write separation

随机推荐

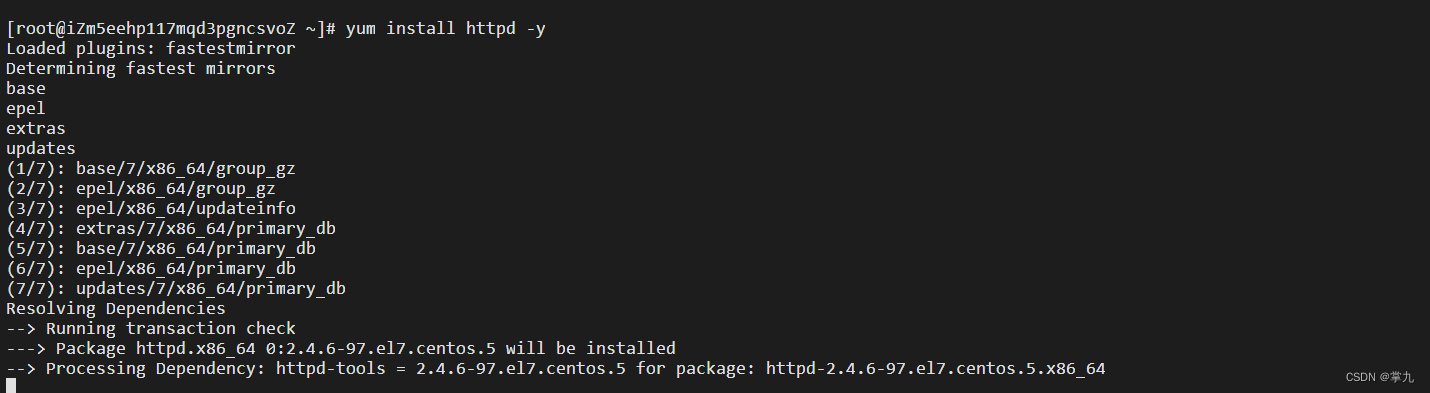

FTP service

【无标题】

作业1-4学习笔记

MySQL single table query exercise

Introduction to network - Introduction to home networking & basic network knowledge

Run NPM run dev to run 'NPM audit fix' to fix them, or 'NPM audit' for details

Shell course summary

String容器的底层实现

D - Difference HDU - 5936

PXE experiment

Shell (6) if judgment

项目 | 实现一个高并发内存池

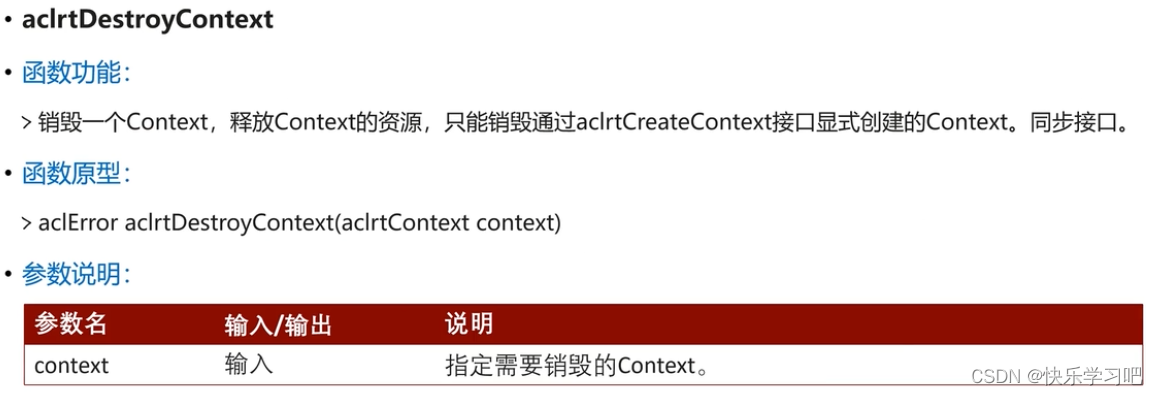

[cann training camp] enter media data processing 1

深度学习过程中笔记(待完善)

Shell (9) function

Desktop solution summary

虚拟化技术KVM

Acwing 1057. stock trading IV

Review of information acquisition technology

DF-GAN实验复现——复现DFGAN详细步骤 及使用MobaXtem实现远程端口到本机端口的转发查看Tensorboard