CVPR 2022 | Loss problem in weakly supervised multi-label classification

2022-06-29 13:09:00 【CV technical guide (official account)】

Preface: This paper proposes a new weakly supervised multi-label classification (WSML) method that rejects or corrects large-loss samples to prevent the model from memorizing noisy labels. Without any heavy or complex components, the proposed method outperforms previous state-of-the-art WSML methods on several partial-label settings, including the Pascal VOC 2012, MS COCO, NUSWIDE, CUB and OpenImages V3 datasets. Various analyses also show that the method works well in practice, confirming that handling large losses properly is important in weakly supervised multi-label classification.

Follow the official account CV Technical Guide, which focuses on computer vision technology summaries, tracking of the latest techniques, interpretations of classic papers, and CV recruitment information.

Paper: Large Loss Matters in Weakly Supervised Multi-Label Classification

Paper link: http://arxiv.org/pdf/2206.03740

Code: https://github.com/snucml/LargeLossMatters

Background

The weakly supervised multi-label classification (WSML) task is to learn multi-label classification from the partially observed labels of each image. It is becoming increasingly important because full multi-label annotation is very costly.

Currently, there are two simple ways to train a model with partial labels. One is to train the model using only the observed labels and ignore the unobserved ones. The other is to assume that all unobserved labels are negative and include them in training, since most labels are negative in a multi-label setting.
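The two baselines above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' code: the encoding of a partial label vector (1 for an observed positive, 0 for an observed negative, `None` for an unobserved label) and the function names `observed_mask` and `assume_negative` are assumptions made for this example.

```python
from typing import List, Optional


def observed_mask(labels: List[Optional[int]]) -> List[bool]:
    """Strategy 1: only observed labels contribute to the loss (True = used)."""
    return [y is not None for y in labels]


def assume_negative(labels: List[Optional[int]]) -> List[int]:
    """Strategy 2: treat every unobserved label (None) as negative (0)."""
    return [0 if y is None else y for y in labels]


# One image with five categories: one observed positive, one observed negative,
# and three unobserved labels.
partial = [1, None, None, 0, None]
print(observed_mask(partial))    # [True, False, False, True, False]
print(assume_negative(partial))  # [1, 0, 0, 0, 0]
```

If any of the three unobserved categories is actually present in the image, the second strategy turns it into a false negative, which is the label noise discussed in the rest of the article.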

However, the second approach has a limitation: the assumption introduces noise into the labels, which hampers model learning. Most previous work therefore followed the first approach and tried to explore cues for the unobserved labels with various techniques such as bootstrapping or regularization. These methods, however, involve heavy computation or complex optimization pipelines.

Based on the above, the authors hypothesize that the second approach can be a good starting point if the label noise is handled properly, because it has the advantage of incorporating many true negative labels into model training. The authors therefore view the WSML problem from the perspective of noisy-label learning.

It is well known that when a model is trained with noisy labels, it first fits the clean labels and then starts memorizing the noisy ones. Previous studies demonstrated this memorization effect only in noisy multi-class classification, but the authors find that the same effect also occurs in noisy multi-label classification. As shown in Figure 1, during training the loss on clean labels (true negatives) decreases from the beginning, while the loss on noisy labels (false negatives) starts decreasing only from the middle of training.

Figure 1: Memorization effect in WSML

Based on this finding, the authors develop three different schemes that reject or correct large-loss samples during training, to prevent false negative labels from being memorized by the multi-label classification model.

Contributions

1) The authors show experimentally, for the first time, that the memorization effect occurs in noisy multi-label classification.

2) A new weakly supervised multi-label classification scheme is proposed that explicitly uses techniques from learning with noisy labels.

3) The proposed method is light and simple, and achieves state-of-the-art classification performance on various partial-label datasets.

Method

In this paper, the authors propose a new WSML method motivated by ideas from noisy multi-class learning, which ignore large losses during model training. A weight term λi is further introduced into the loss function.
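To make the role of the weight concrete, the following sketch computes the loss of one image as the mean over labels of λi times a per-label loss. It assumes binary cross-entropy as the per-label loss, and the names `bce` and `weighted_loss` are hypothetical; the exact form of the authors' loss should be checked against the paper or the linked code.

```python
import math
from typing import List


def bce(p: float, y: int, eps: float = 1e-7) -> float:
    """Binary cross-entropy of a predicted probability p against a 0/1 target y."""
    p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
    return -math.log(p) if y == 1 else -math.log(1.0 - p)


def weighted_loss(probs: List[float], targets: List[int], weights: List[float]) -> float:
    """Mean over labels of lambda_i * BCE(p_i, y_i) for a single image."""
    terms = [w * bce(p, y) for p, y, w in zip(probs, targets, weights)]
    return sum(terms) / len(terms)


probs = [0.9, 0.8, 0.1]
targets = [1, 0, 0]  # the second label is an assumed negative with a large loss
print(weighted_loss(probs, targets, [1.0, 1.0, 1.0]))  # all labels contribute
print(weighted_loss(probs, targets, [1.0, 0.0, 1.0]))  # second label is ignored
```

With every weight equal to 1 this reduces to the plain assume-negative loss, so a weighting scheme only has to decide which labels get a different weight.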

The authors propose three different schemes for providing the weight λi; the overall pipeline is shown in Figure 2.

Figure 2: The overall pipeline of the proposed method

1. Loss rejection

One way to handle a large-loss sample is to reject it by setting λi=0. For noisy multi-class tasks, B. Han et al. proposed gradually increasing the rejection rate over the course of training. The authors set the function λi in the same spirit.

Because the model learns clean patterns in the early stage, no loss value is rejected at t=1. In each iteration, the loss set is formed from a mini-batch D′ rather than from the full batch. The authors call this method LL-R.
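The rejection step can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the linear schedule in `rejection_rate`, the parameter name `delta_rel`, and the choice to take the rejected fraction among the unobserved labels of the mini-batch are all assumptions of this example, chosen only to satisfy the two properties stated above (nothing is rejected at t=1, and the rate grows during training).

```python
from typing import List


def rejection_rate(epoch: int, delta_rel: float) -> float:
    """Hypothetical linear schedule: 0 at epoch 1, growing by delta_rel per epoch, capped at 1."""
    return min(1.0, max(0.0, delta_rel * (epoch - 1)))


def ll_r_weights(losses: List[float], unobserved: List[bool], rate: float) -> List[float]:
    """Return lambda_i: 0 for the largest `rate` fraction of unobserved-label losses, else 1."""
    # Only labels that were assumed negative (unobserved) are candidates for rejection.
    candidates = sorted(
        (i for i, is_unobserved in enumerate(unobserved) if is_unobserved),
        key=lambda i: losses[i],
        reverse=True,
    )
    n_reject = int(rate * len(candidates))  # rounds down
    rejected = set(candidates[:n_reject])
    return [0.0 if i in rejected else 1.0 for i in range(len(losses))]


# Flattened per-label losses of one mini-batch; the first label is observed.
losses = [0.1, 2.3, 0.2, 1.5]
unobserved = [False, True, True, True]
print(ll_r_weights(losses, unobserved, rejection_rate(1, 0.25)))  # [1.0, 1.0, 1.0, 1.0]
print(ll_r_weights(losses, unobserved, rejection_rate(3, 0.25)))  # [1.0, 0.0, 1.0, 1.0]
```

Observed labels are never rejected in this sketch, since only the assumed negatives can be false negatives.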

2. Loss correction (temporary)

Another way to handle a large-loss sample is to correct it rather than reject it. In the multi-label setting, this is easily done by switching the corresponding annotation from negative to positive. "Temporary" means that the actual label is not changed; the function λi is defined so that only the loss computed from the modified label is used.

The authors name this method LL-Ct. Its advantage is that it increases the number of true positive labels among the unobserved labels.
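A minimal sketch of the temporary correction is given below. It assumes binary cross-entropy as the per-label loss and selects the labels to correct as the largest `rate` fraction of unobserved-label losses; the function name `ll_ct_losses` and this selection rule are assumptions of the example. Instead of expressing the correction through λi, the sketch directly replaces the selected losses with the loss under a positive target, which has the same effect on the total.

```python
import math
from typing import List


def ll_ct_losses(probs: List[float], targets: List[int], unobserved: List[bool],
                 rate: float, eps: float = 1e-7) -> List[float]:
    """Per-label BCE losses, with the largest unobserved-label losses recomputed as positives."""
    def clip(p: float) -> float:
        return min(max(p, eps), 1.0 - eps)

    losses = [
        -math.log(clip(p)) if y == 1 else -math.log(1.0 - clip(p))
        for p, y in zip(probs, targets)
    ]
    candidates = sorted(
        (i for i, is_unobserved in enumerate(unobserved) if is_unobserved),
        key=lambda i: losses[i],
        reverse=True,
    )
    for i in candidates[: int(rate * len(candidates))]:
        # Loss under the temporarily flipped label; `targets` itself is left untouched.
        losses[i] = -math.log(clip(probs[i]))
    return losses


probs = [0.9, 0.95, 0.1]
targets = [1, 0, 0]  # the last two are assumed negatives
unobserved = [False, True, True]
corrected = ll_ct_losses(probs, targets, unobserved, rate=0.5)
print(corrected)  # the second loss is now small, because the model already predicts 0.95
print(targets)    # [1, 0, 0]
```

Because the stored labels are not modified, a label corrected in one iteration is free to be treated as negative again in a later one.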

3. Loss correction (permanent)

Larger loss values can be handled more aggressively by correcting the labels permanently: the label is changed directly from negative to positive, and the modified label is used in subsequent training. To this end, λi=1 is set in every case, and the label itself is modified.

The authors name this method LL-Cp.
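The permanent variant differs from the temporary one only in that the stored labels are overwritten. The sketch below illustrates this with a hypothetical function `ll_cp_update`; selecting the largest `rate` fraction of unobserved-label losses is again an assumption of the example, and the authors' actual selection criterion should be taken from the paper or the linked code.

```python
from typing import List


def ll_cp_update(labels: List[int], losses: List[float],
                 unobserved: List[bool], rate: float) -> List[int]:
    """Flip the largest-loss unobserved labels to positive in place; return the flipped indices."""
    candidates = sorted(
        (i for i, is_unobserved in enumerate(unobserved) if is_unobserved),
        key=lambda i: losses[i],
        reverse=True,
    )
    flipped = candidates[: int(rate * len(candidates))]
    for i in flipped:
        labels[i] = 1  # permanent: later epochs train on the modified label
    return flipped


labels = [1, 0, 0, 0]  # the last three are assumed negatives
losses = [0.1, 3.0, 0.2, 0.05]
unobserved = [False, True, True, True]
print(ll_cp_update(labels, losses, unobserved, rate=0.4))  # [1]
print(labels)                                              # [1, 1, 0, 0]
```

The loss weights stay at 1 here; the correction takes effect through the changed labels the next time the image is seen.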

Experiments

Table 2: Quantitative results on artificially created partial-label datasets

Table 3: Quantitative results on the OpenImages V3 dataset

Figure 3: Qualitative results on the artificially generated COCO partial-label dataset

Figure 4: Precision analysis of the proposed methods on the COCO dataset

Figure 5: Effect of the hyperparameters of LL-Ct on the COCO dataset

Figure 6: Training with fewer images

Table 4: Pointing Game

Conclusion

In this paper, the authors propose loss modification schemes that reject or correct large-loss samples when training a multi-label classification model with partial label annotations. The schemes are motivated by the empirical observation that the memorization effect also occurs in noisy multi-label classification.

Although it includes no heavy or complex components, the authors' approach successfully prevents the multi-label classification model from memorizing noisy false negative labels, and achieves state-of-the-art performance on a variety of partially labeled multi-label datasets.

CV The technical guide creates a computer vision technology exchange group and a free version of the knowledge planet , At present, the number of people on the planet has 700+, The number of topics reached 200+.

The knowledge planet will release some homework every day , It is used to guide people to learn something , You can continue to punch in and learn according to your homework .CV Every day in the technology group, the top conference papers published in recent days will be sent , You can choose the papers you are interested in to read , continued follow Latest technology , If you write an interpretation after reading it and submit it to us , You can also receive royalties . in addition , The technical group and my circle of friends will also publish various periodicals 、 Notice of solicitation of contributions for the meeting , If you need it, please scan your friends , And pay attention to .

Add groups and planets : Official account CV Technical guide , Get and edit wechat , Invite to join .

Welcome to the official account CV Technical guide , Focus on computer vision technology summary 、 The latest technology tracking 、 Interpretation of classic papers 、CV Recruitment information .

Other articles of official account

Introduction to computer vision

Summary of common words in computer vision papers

YOLO Series carding ( Four ) About YOLO Deployment of

YOLO Series carding ( 3、 ... and )YOLOv5

YOLO Series carding ( Two )YOLOv4

YOLO Series carding ( One )YOLOv1-YOLOv3

CVPR2022 | Based on egocentric data OCR assessment

CVPR 2022 | Using contrast regularization method to deal with noise labels

CVPR2022 | Loss problem in weakly supervised multi label classification

CVPR2022 | iFS-RCNN: An incremental small sample instance divider

CVPR2022 | A ConvNet for the 2020s & How to design neural network Summary

CVPR2022 | PanopticDepth: A unified framework for depth aware panoramic segmentation

CVPR2022 | Reexamine pooling : Your feeling field is not ideal

CVPR2022 | Unknown target detection module STUD: Learn about unknown targets in the video

CVPR2022 | Ranking based siamese Visual tracking

Build from scratch Pytorch Model tutorial ( Four ) Write the training process -- Argument parsing

Build from scratch Pytorch Model tutorial ( 3、 ... and ) build Transformer The Internet

Build from scratch Pytorch Model tutorial ( Two ) Build network

Build from scratch Pytorch Model tutorial ( One ) data fetch

Some personal thinking habits and thought summary about learning a new technology or field quickly

边栏推荐

- C # implements the operations of sequence table definition, insertion, deletion and search

- 推荐模型复现(四):多任务模型ESMM、MMOE

- 别再重复造轮子了,推荐使用 Google Guava 开源工具类库,真心强大!

- Aes-128-cbc-pkcs7padding encrypted PHP instance

- QT custom control: value range

- Evaluation of powerful and excellent document management software: image management, book management and document management

- Install the typescript environment and enable vscode to automatically monitor the compiled TS file as a JS file

- Unexpected ‘debugger‘ statement no-debugger

- Beifu controls the third-party servo to follow CSV mode -- Taking Huichuan servo as an example

- 倍福TwinCAT3 的OPC_UA通信测试案例

猜你喜欢

从Mpx资源构建优化看splitChunks代码分割

Unexpected ‘debugger‘ statement no-debugger

Interesting talk on network protocol (II) transport layer

OPC of Beifu twincat3_ UA communication test case

![[cloud native] 2.4 kubernetes core practice (middle)](/img/1e/b1b22caa03d499387e1a47a5f86f25.png)

[cloud native] 2.4 kubernetes core practice (middle)

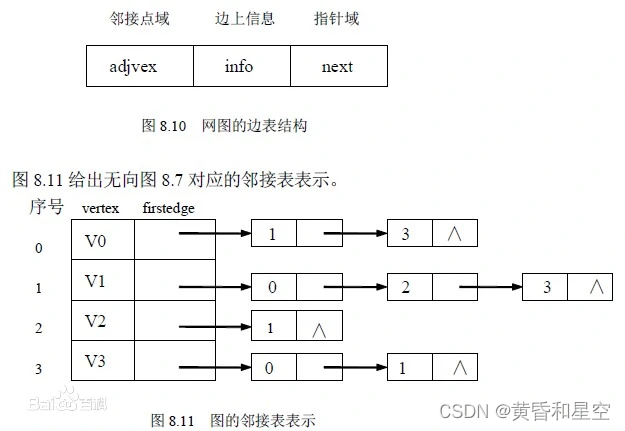

C#实现图的邻接矩阵和邻接表结构

从零搭建Pytorch模型教程(四)编写训练过程--参数解析

Aes-128-cbc-pkcs7padding encrypted PHP instance

墨菲安全入选中关村科学城24个重点项目签约

Can software related inventions be patented in India?

随机推荐

Deep understanding of volatile keyword

netdata数据持久化配置

Interview shock 61: tell me about MySQL transaction isolation level?

推荐模型复现(四):多任务模型ESMM、MMOE

Comparison table of LR and Cr button batteries

OPC of Beifu twincat3_ UA communication test case

C binary tree structure definition and node value addition

面试突击61:说一下MySQL事务隔离级别?

MATLAB求极限

STK_ Gltf model

File contained log poisoning (user agent)

SCHIEDERWERK電源維修SMPS12/50 PFC3800解析

CVPR2022 | 可精简域适应

Murphy safety was selected for signing 24 key projects of Zhongguancun Science City

C#二叉树结构定义、添加节点值

Cereal mall project

Problem solving: modulenotfounderror: no module named 'pip‘

C # clue binary tree through middle order traversal

如何统计项目代码(比如微信小程序等等)

C#通过中序遍历对二叉树进行线索化