Adding confidence threshold for demo visualization in detectron2

2022-06-26 09:10:00 【G fruit】

Preface

I have been using detectron2, the deep learning library developed by Facebook, for my experiments. When I visualized the detection boxes, the output was cluttered: many low-confidence boxes were drawn too, which made the result hard to read. So I added a confidence threshold (score_threshold) to filter the detection boxes, which makes the visualization cleaner and more flexible.

Initial demo (no confidence threshold)

Demo after adding the confidence threshold

Complete code of demo.py

import os
import random

import cv2

from detectron2.config import get_cfg
from detectron2.data import DatasetCatalog, MetadataCatalog
from detectron2.engine.defaults import DefaultPredictor
from detectron2.utils.visualizer import ColorMode, Visualizer
from swint.config import add_swint_config

import pig_dataset  # local module; presumably registers "pig_coco_test" on import

pig_test_metadata = MetadataCatalog.get("pig_coco_test")
dataset_dicts = DatasetCatalog.get("pig_coco_test")

cfg = get_cfg()
add_swint_config(cfg)
cfg.merge_from_file("./configs/SwinT/retinanet_swint_T_FPN_3x.yaml")
cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, "model_0019999.pth")
predictor = DefaultPredictor(cfg)

# Visualize predictions on three randomly chosen test images
for d in random.sample(dataset_dicts, 3):
    im = cv2.imread(d["file_name"])
    output = predictor(im)
    # Visualizer expects RGB, while OpenCV loads BGR, hence the channel flip
    v = Visualizer(im[:, :, ::-1], metadata=pig_test_metadata,
                   scale=0.5, instance_mode=ColorMode.IMAGE_BW)
    # Drawing call: the second argument is the new confidence threshold (0.5 here)
    out = v.draw_instance_predictions(output["instances"].to("cpu"), 0.5)
    cv2.namedWindow("pig", 0)  # 0 == cv2.WINDOW_NORMAL (resizable window)
    cv2.resizeWindow("pig", 600, 400)
    cv2.imshow("pig", out.get_image()[:, :, ::-1])
    # cv2.imwrite("demo-%s" % os.path.basename(d["file_name"]), out.get_image()[:, :, ::-1])
    cv2.waitKey(3000)  # show each image for 3 seconds

cv2.destroyAllWindows()

Code that applies the confidence threshold (score_threshold)

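if score_threshold is not None:
    # Indices of the detections whose score is above the threshold
    top_id = np.where(scores.numpy() > score_threshold)[0].tolist()
    # Keep only those detections in every per-instance field used for drawing
    scores = torch.tensor(scores.numpy()[top_id])
    boxes.tensor = torch.tensor(boxes.tensor.numpy()[top_id])  # overwrites pred_boxes in place
    classes = [classes[ii] for ii in top_id]
    labels = [labels[ii] for ii in top_id]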
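The idea behind the snippet can be tried without detectron2: collect the indices whose score is above the threshold, then apply the same indices to every parallel field. The following is only a minimal sketch with plain Python lists. The helper name filter_by_score and the sample values are made up for illustration and are not part of detectron2.

```python
def filter_by_score(scores, boxes, labels, score_threshold=None):
    """Return only the entries whose score is strictly above the threshold.

    The three sequences are assumed to be parallel (same length, same order).
    A threshold of None disables filtering, like the default in the article.
    """
    if score_threshold is None:
        return list(scores), list(boxes), list(labels)
    # Indices to keep, the pure-Python counterpart of np.where(...)[0]
    keep = [i for i, s in enumerate(scores) if s > score_threshold]
    return (
        [scores[i] for i in keep],
        [boxes[i] for i in keep],
        [labels[i] for i in keep],
    )


# Hypothetical detections: three boxes with their scores and text labels
scores = [0.92, 0.31, 0.67]
boxes = [(10, 20, 110, 220), (5, 5, 50, 50), (30, 40, 90, 160)]
labels = ["pig 92%", "pig 31%", "pig 67%"]

kept_scores, kept_boxes, kept_labels = filter_by_score(scores, boxes, labels, 0.5)
print(kept_labels)  # ['pig 92%', 'pig 67%']
```

Because the comparison is strict, a detection whose score equals the threshold is dropped as well.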

Complete code of the modified draw_instance_predictions function

Paste the threshold snippet (the if score_threshold block) immediately before the line if predictions.has("pred_masks"):

The function is defined in detectron2/utils/visualizer.py (in PyCharm, Ctrl+B jumps straight to it).

def draw_instance_predictions(self, predictions, score_threshold=None):
    """
    Draw instance-level prediction results on an image.

    Args:
        score_threshold (float, optional): confidence threshold (new parameter).
            Detections scoring at or below it are not drawn; None disables filtering.
        predictions (Instances): the output of an instance detection/segmentation
            model. Following fields will be used to draw:
            "pred_boxes", "pred_classes", "scores", "pred_masks" (or "pred_masks_rle").

    Returns:
        output (VisImage): image object with visualizations.
    """
    boxes = predictions.pred_boxes if predictions.has("pred_boxes") else None
    scores = predictions.scores if predictions.has("scores") else None
    classes = predictions.pred_classes if predictions.has("pred_classes") else None
    labels = _create_text_labels(classes, scores, self.metadata.get("thing_classes", None))
    keypoints = predictions.pred_keypoints if predictions.has("pred_keypoints") else None

    # New code: drop detections at or below the threshold.
    # Only boxes, scores, classes and labels are filtered; masks and keypoints are not.
    if score_threshold is not None:
        top_id = np.where(scores.numpy() > score_threshold)[0].tolist()
        scores = torch.tensor(scores.numpy()[top_id])
        boxes.tensor = torch.tensor(boxes.tensor.numpy()[top_id])  # overwrites pred_boxes in place
        classes = [classes[ii] for ii in top_id]
        labels = [labels[ii] for ii in top_id]

    if predictions.has("pred_masks"):
        masks = np.asarray(predictions.pred_masks)
        masks = [GenericMask(x, self.output.height, self.output.width) for x in masks]
    else:
        masks = None

    if self._instance_mode == ColorMode.SEGMENTATION and self.metadata.get("thing_colors"):
        colors = [
            self._jitter([x / 255 for x in self.metadata.thing_colors[c]]) for c in classes
        ]
        alpha = 0.8
    else:
        colors = None
        alpha = 0.5

    if self._instance_mode == ColorMode.IMAGE_BW:
        self.output.img = self._create_grayscale_image(
            (predictions.pred_masks.any(dim=0) > 0).numpy()
            if predictions.has("pred_masks")
            else None
        )
        alpha = 0.3

    self.overlay_instances(
        masks=masks,
        boxes=boxes,
        labels=labels,
        keypoints=keypoints,
        assigned_colors=colors,
        alpha=alpha,
    )
    return self.output
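If you would rather not edit the installed library, the same filtering can probably be done in demo.py before drawing. The sketch below is an alternative to the modification above, not the method used in this article. It assumes the argument behaves like a detectron2 Instances object, which exposes a scores tensor and accepts a boolean mask as an index, applying it to every field at once. The helper name filter_instances is my own.

```python
def filter_instances(instances, score_threshold):
    """Return the subset of `instances` whose score is above the threshold.

    Assumes `instances` behaves like detectron2's Instances: comparing its
    `scores` with a number yields a boolean mask, and indexing with that mask
    selects the same rows in every field (boxes, classes, masks, keypoints).
    """
    keep = instances.scores > score_threshold
    return instances[keep]
```

In demo.py this would be used with the unmodified drawing function, for example out = v.draw_instance_predictions(filter_instances(output["instances"].to("cpu"), 0.5)). Since every field is indexed together, masks and keypoints stay consistent with the boxes.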

边栏推荐

- Yolov5进阶之五GPU环境搭建

- [QNX Hypervisor 2.2用户手册]12.1 术语(一)

- 远程工作的一些命令

- Course paper: Copula modeling code of portfolio risk VaR

- Unity WebGL发布无法运行问题

- Dedecms applet plug-in is officially launched, and one click installation does not require any PHP or SQL Foundation

- 【300+精选大厂面试题持续分享】大数据运维尖刀面试题专栏(一)

- 【开源】使用PhenoCV-WeedCam进行更智能、更精确的杂草管理

- 1.26 pytorch learning

- Self taught programming series - 2 file path and text reading and writing

猜你喜欢

Slider verification - personal test (JD)

隐藏式列表菜单以及窗口转换在Selenium 中的应用

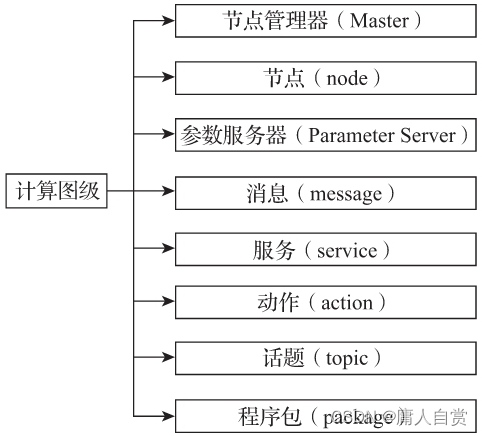

简析ROS计算图级

![[cloud primordial | kubernetes chapter] go deep into the foundation of all things - container (V)](/img/67/26508edc451139cd0f4c9511ca1ed2.png)

[cloud primordial | kubernetes chapter] go deep into the foundation of all things - container (V)

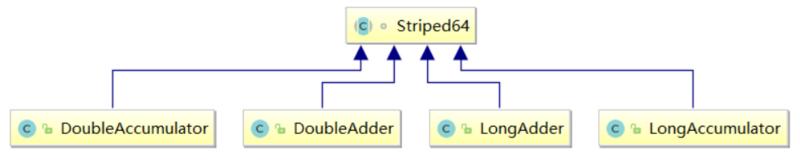

什么是乐观锁,什么是悲观锁

如何编译构建

Vipshop work practice: Jason's deserialization application

Yolov5进阶之三训练环境

Yolov5 advanced 4 train your own data set

PD快充磁吸移動電源方案

随机推荐

Uniapp uses uparse to parse the content of the background rich text editor and modify the uparse style

什么是乐观锁,什么是悲观锁

ThreadLocal

直播回顾 | smardaten李鸿飞解读中国低/无代码行业研究报告:风向变了

【IVI】15.1.2 系统稳定性优化篇(LMKD Ⅱ)PSI 压力失速信息

Vipshop work practice: Jason's deserialization application

commonJS和ES6模块化的区别

Implementation code of interceptor and filter

Pytorch neural network

Yolov5 advanced III training environment

基于SSM的毕业论文管理系统

Self taught programming series - 4 numpy arrays

How to use the least money to quickly open the Taobao traffic portal?

【C】青蛙跳台阶和汉诺塔问题(递归)

Yolov5 advanced level 2 installation of labelimg

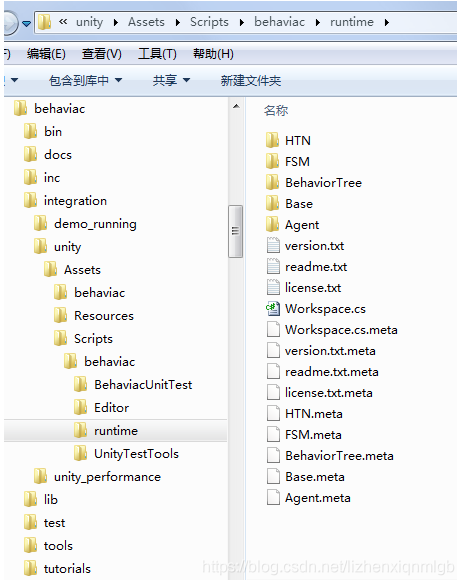

Behavior tree XML file hot load

What is optimistic lock and what is pessimistic lock

Cookie session and token

phpcms小程序插件api接口升级到4.3(新增批量获取接口、搜索接口等)

How to set the shelves and windows, and what to pay attention to in the optimization process