I drew Gu Ailing with characters!

2022-07-02 20:40:00 【Algorithm code up】

I often used to see videos made entirely of characters online, and they looked very cool. I didn't know how they were made, so I spent the past two days studying it, and it turns out not to be hard.

Here is the final result (if it looks blurry, open the link below for the HD version):

https://bytedance.feishu.cn/docx/doxcnCvvY051xFCBAmSqYZgiP7b

How is it done?

Simply put, turning a color video into a black-and-white video drawn with characters takes the following steps:

- Split the original video into frames, convert each frame to grayscale, and replace each pixel with a corresponding character.

- Reassemble the resulting strings into a character image.

- Combine all the character images into a character video.

- Add the original video's audio track to the new character video.
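The grayscale part of the first step boils down to a weighted sum of the color channels. The sketch below is a pure-Python illustration, assuming frames are rows of (B, G, R) tuples with 0-255 values (OpenCV's channel order); the weights 0.299/0.587/0.114 are the ones OpenCV documents for `COLOR_BGR2GRAY`, while the helper names `bgr_to_gray` and `frame_to_gray` are hypothetical.

```python
def bgr_to_gray(pixel):
    """Convert one (B, G, R) pixel with 0-255 channels to a 0-255 gray level.

    Uses the luma weights documented for OpenCV's COLOR_BGR2GRAY.
    """
    b, g, r = pixel
    return round(0.299 * r + 0.587 * g + 0.114 * b)


def frame_to_gray(frame):
    """Convert a frame given as rows of (B, G, R) tuples into rows of gray levels."""
    return [[bgr_to_gray(p) for p in row] for row in frame]


# Tiny 2x2 frame: red, white / black, blue (in BGR order)
tiny = [[(0, 0, 255), (255, 255, 255)], [(0, 0, 0), (255, 0, 0)]]
print(frame_to_gray(tiny))  # [[76, 255], [0, 29]]
```

Green carries the largest weight, so a pure green pixel comes out much lighter than a pure blue one even though both are fully saturated.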

How to run it

The complete code is at the end of the article; just run python3 video2char.py. The program asks for the local path of the video and the clarity of the output (0 is the blurriest, 1 the sharpest; the sharper the output, the slower the conversion).

The code needs the tqdm, opencv-python, Pillow and moviepy libraries. It uses the moviepy 1.x API (moviepy.editor), so install them first, for example with pip3 install tqdm opencv-python pillow "moviepy<2".
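Why does higher clarity run slower? In the full code, clarity only sets the font size, and a smaller font means more character cells per frame. The sketch below reproduces that font-size formula and counts the cells; the character cell sizes passed in the examples (8x16 and 4x8 pixels) are made-up numbers for illustration, since real cell sizes depend on the font, and the function names are hypothetical.

```python
def font_size_for(clarity):
    """Font size in pixels, using the same formula as the full code:
    clarity 0 -> 25 px (blurriest), clarity 1 -> 5 px (sharpest)."""
    c = max(min(float(clarity), 1.0), 0.0)  # clamp into [0, 1]; strings like "0.5" work too
    return int(25 - 20 * c)


def grid_size(raw_width, raw_height, char_width, char_height):
    """Number of character columns and rows that fit in the frame."""
    return raw_width // char_width, raw_height // char_height


def chars_per_frame(raw_width, raw_height, char_width, char_height):
    """Total characters that must be computed and drawn for one frame."""
    cols, rows = grid_size(raw_width, raw_height, char_width, char_height)
    return cols * rows


print(font_size_for(0), font_size_for(0.5), font_size_for(1))  # 25 15 5
print(chars_per_frame(1920, 1080, 8, 16))  # 16080 characters (hypothetical 8x16 cell)
print(chars_per_frame(1920, 1080, 4, 8))   # 64800 characters (hypothetical 4x8 cell)
```

Halving both cell dimensions roughly quadruples the work per frame, and every character is drawn individually.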

The principle in detail

The key step is converting a color image into a black-and-white character image, as shown in the figure below:

The transformation itself is simple. Because each character occupies a sizable block of pixels in the output image, the color image must first be shrunk so that each block of adjacent pixels collapses into a single pixel, one per character. This compression is done with OpenCV's resize operation.
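To make "merging blocks of pixels" concrete, here is a pure-Python sketch that averages non-overlapping blocks of a grayscale image (a 2D list of 0-255 values). This is block averaging, which is closer to OpenCV's `cv2.INTER_AREA`; the full code below uses `cv2.INTER_NEAREST`, which instead keeps one sampled pixel per block. The function name `block_average` is hypothetical.

```python
def block_average(gray, block_w, block_h):
    """Shrink a 2D gray image by averaging non-overlapping block_w x block_h blocks.

    Leftover rows/columns that do not fill a whole block are dropped, much like
    the integer division used to compute the character grid in the full code.
    """
    rows = len(gray) // block_h
    cols = len(gray[0]) // block_w
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            # Collect every pixel that falls inside block (r, c)
            block = [
                gray[y][x]
                for y in range(r * block_h, (r + 1) * block_h)
                for x in range(c * block_w, (c + 1) * block_w)
            ]
            out_row.append(sum(block) // len(block))
        out.append(out_row)
    return out


img = [
    [0, 0, 255, 255],
    [0, 0, 255, 255],
    [100, 100, 50, 50],
    [100, 100, 50, 52],
]
print(block_average(img, 2, 2))  # [[0, 255], [100, 50]]
```

Averaging is smoother but costs a pass over every source pixel; nearest-neighbor sampling is cheaper and keeps edges harder, which can suit character art.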

The pixels are also converted to grayscale, and each gray level is mapped to a character. I use the following string, ordered from dark to light; in my experiments this set gave the best results:

"#8XOHLTI)i=+;:,. "
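The mapping itself is a single index computation, the same expression `int(gray / 256 * len(chars))` used in the full code. The sketch below isolates it; `gray_to_char` and `frame_to_text` are hypothetical helper names.

```python
ASCII_CHARS = "#8XOHLTI)i=+;:,. "  # dark -> light, as in the article


def gray_to_char(gray, chars=ASCII_CHARS):
    """Map a 0-255 gray level to a character: 0 (black) -> '#', 255 (white) -> ' '."""
    # Dividing by 256 rather than 255 keeps the index strictly below len(chars)
    return chars[int(gray / 256 * len(chars))]


def frame_to_text(gray_rows, chars=ASCII_CHARS):
    """Turn rows of gray levels into newline-separated lines of characters."""
    return "\n".join("".join(gray_to_char(g, chars) for g in row) for row in gray_rows)


print(repr(frame_to_text([[0, 255], [128, 0]])))  # '# \n)#'
```

With 17 characters, each one covers a band of about 15 gray levels; a longer string gives finer shading at the cost of characters that look more alike.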

The characters are then drawn onto a new canvas. The layout should be uniform and compact, with as little empty space around the edges of the canvas as possible.
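The layout arithmetic can be checked without drawing anything. This sketch mirrors what the full code does: fit as many fixed-size cells as possible, split the leftover pixels evenly to center the grid, and cut the flat per-frame string back into rows. The function names and example numbers are hypothetical.

```python
def layout(raw_width, raw_height, char_width, char_height):
    """Return (cols, rows, margin_x, margin_y) for a centered character grid."""
    cols = raw_width // char_width
    rows = raw_height // char_height
    # Split the leftover pixels evenly between both sides
    margin_x = (raw_width - cols * char_width) // 2
    margin_y = (raw_height - rows * char_height) // 2
    return cols, rows, margin_x, margin_y


def char_positions(flat, cols, rows, char_width, char_height, margin_x, margin_y):
    """Yield (x, y, ch) canvas coordinates for each char of a flat row-major string."""
    for i in range(rows):
        line = flat[i * cols:(i + 1) * cols]
        for j, ch in enumerate(line):
            yield margin_x + j * char_width, margin_y + i * char_height, ch


print(layout(100, 50, 7, 12))  # (14, 4, 1, 1)
print(list(char_positions("abcd", 2, 2, 10, 20, 1, 3)))
# [(1, 3, 'a'), (11, 3, 'b'), (1, 23, 'c'), (11, 23, 'd')]
```

Using one fixed cell size for every character (the widest and tallest glyph) is what keeps the columns aligned even though the font is proportional.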

Finally, all the character images are combined into a video. The merged video has no sound, so the moviepy library is used to add the original video's audio track.
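If you would rather not depend on moviepy, the same audio merge can be done with the ffmpeg command-line tool. This is a swapped-in alternative, not the article's method, and it assumes an ffmpeg build with libx264 is on your PATH; the helper names and file names are hypothetical. Building the argument list separately keeps it easy to inspect.

```python
import shutil
import subprocess


def mux_audio_cmd(char_video, original_video, output):
    """Build an ffmpeg command that puts the original audio onto the character video."""
    return [
        "ffmpeg", "-y",          # -y overwrites `output` without asking
        "-i", char_video,        # input 0: silent character video
        "-i", original_video,    # input 1: original video, used only for its audio
        "-map", "0:v:0",         # video stream from input 0
        "-map", "1:a:0?",        # first audio stream from input 1; '?' skips it if absent
        "-c:v", "libx264",       # re-encode to H.264, like the moviepy call in the article
        "-c:a", "aac",
        "-shortest",             # stop at the end of the shorter stream
        output,
    ]


def mux_audio(char_video, original_video, output):
    """Run the command above; raises if ffmpeg is missing or exits with an error."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    subprocess.run(mux_audio_cmd(char_video, original_video, output), check=True)


print(" ".join(mux_audio_cmd("clip_tmp.mp4", "clip.mp4", "clip_0.mp4")))
```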

Complete code

```python
import os
import re
import shutil

import cv2
from moviepy.editor import VideoFileClip  # moviepy 1.x API; moviepy 2.x removed moviepy.editor
from PIL import Image, ImageDraw, ImageFont
from tqdm import tqdm, trange


class V2Char:
    font_path = "Arial.ttf"  # adjust to a TrueType font available on your system
    ascii_char = "#8XOHLTI)i=+;:,. "  # characters from dark to light

    def __init__(self, video_path, clarity):
        self.video_path = video_path
        self.clarity = clarity
        # Path without extension, e.g. "videos/clip" for "videos/clip.mp4"
        self.base = os.path.splitext(video_path)[0]

    def video2str(self):
        def convert(img):
            # Shrink the frame so that one pixel corresponds to one character cell
            if img.shape[0] > self.text_size[1] or img.shape[1] > self.text_size[0]:
                img = cv2.resize(img, self.text_size, interpolation=cv2.INTER_NEAREST)
            chars, n = self.ascii_char, len(self.ascii_char)
            # Map each gray level (0-255) to a character, row by row
            return "".join(chars[int(v / 256 * n)] for row in img for v in row)

        print("Converting the original video to characters...")
        self.char_video = []
        cap = cv2.VideoCapture(self.video_path)
        self.fps = cap.get(cv2.CAP_PROP_FPS)
        self.nframe = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
        self.raw_width = int(cap.get(cv2.CAP_PROP_FRAME_WIDTH))
        self.raw_height = int(cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

        # clarity 0 -> 25 px font (blurriest), clarity 1 -> 5 px font (sharpest)
        font_size = int(25 - 20 * max(min(float(self.clarity), 1), 0))
        self.font = ImageFont.truetype(self.font_path, font_size)
        # Cell size = widest and tallest glyph (getbbox replaces getsize, removed in Pillow 10)
        boxes = [self.font.getbbox(c) for c in self.ascii_char]
        self.char_width = max(b[2] for b in boxes)
        self.char_height = max(b[3] for b in boxes)
        self.text_size = (
            self.raw_width // self.char_width,
            self.raw_height // self.char_height,
        )

        for _ in trange(self.nframe):
            ok, raw_frame = cap.read()
            if not ok:  # CAP_PROP_FRAME_COUNT can overestimate the frame count
                break
            gray = cv2.cvtColor(raw_frame, cv2.COLOR_BGR2GRAY)
            self.char_video.append(convert(gray))
        cap.release()

    def str2fig(self):
        print("Generating character images...")
        col, row = self.text_size
        catalog = self.base + "_frames"  # temporary folder for the rendered frames
        os.makedirs(catalog, exist_ok=True)
        # Center the character grid on the canvas
        blank_width = (self.raw_width - col * self.char_width) // 2
        blank_height = (self.raw_height - row * self.char_height) // 2
        for p_id in trange(len(self.char_video)):
            # Split the flat frame string back into rows of `col` characters
            lines = [self.char_video[p_id][i * col : (i + 1) * col] for i in range(row)]
            im = Image.new("RGB", (self.raw_width, self.raw_height), (255, 255, 255))
            dr = ImageDraw.Draw(im)
            for i, line in enumerate(lines):
                for j, ch in enumerate(line):
                    dr.text(
                        (blank_width + j * self.char_width, blank_height + i * self.char_height),
                        ch,
                        font=self.font,
                        fill="#000000",
                    )
            im.save(os.path.join(catalog, f"pic_{p_id}.jpg"))

    def jpg2video(self):
        print("Synthesizing character images into character video...")
        catalog = self.base + "_frames"
        images = os.listdir(catalog)
        # Sort numerically so pic_10 comes after pic_9, not after pic_1
        images.sort(key=lambda x: int(re.findall(r"\d+", x)[0]))
        with Image.open(os.path.join(catalog, images[0])) as first:
            size = first.size
        fourcc = cv2.VideoWriter_fourcc(*"mp4v")
        # Write next to the source video so that merge_audio can find it
        vw = cv2.VideoWriter(self.base + "_tmp.mp4", fourcc, self.fps, size)
        for image in tqdm(images):
            vw.write(cv2.imread(os.path.join(catalog, image)))
        vw.release()
        shutil.rmtree(catalog)

    def merge_audio(self):
        print("Synthesizing audio into character video...")
        tmp_path = self.base + "_tmp.mp4"
        raw_video = VideoFileClip(self.video_path)
        char_video = VideoFileClip(tmp_path)
        video = char_video.set_audio(raw_video.audio)
        video.write_videofile(
            f"{self.base}_{self.clarity}.mp4",
            codec="libx264",
            audio_codec="aac",
        )
        raw_video.close()
        char_video.close()
        os.remove(tmp_path)

    def gen_video(self):
        self.video2str()
        self.str2fig()
        self.jpg2video()
        self.merge_audio()


if __name__ == "__main__":
    video_path = input("Enter the path of the video file:\n")
    clarity = input("Enter the clarity (0~1, press Enter for the default 0):\n") or 0
    V2Char(video_path, clarity).gen_video()
```

Reference link

https://blog.csdn.net/weixin_41982136/article/details/89668958

边栏推荐

- Resunnet - tensorrt8.2 Speed and Display record Sheet on Jetson Xavier NX (continuously supplemented)

- Solution to blue screen after installing TIA botu V17 in notebook

- Research Report on the overall scale, major manufacturers, major regions, products and applications of outdoor vacuum circuit breakers in the global market in 2022

- Backpack template

- 测试人员如何做不漏测?这7点就够了

- CRM Customer Relationship Management System

- I want to ask you, where is a better place to open an account in Dongguan? Is it safe to open a mobile account?

- B-end e-commerce - reverse order process

- Function, function, efficiency, function, utility, efficacy

- pytorch 模型保存的完整例子+pytorch 模型保存只保存可训练参数吗?是(+解决方案)

猜你喜欢

After eight years of test experience and interview with 28K company, hematemesis sorted out high-frequency interview questions and answers

Exemple complet d'enregistrement du modèle pytoch + enregistrement du modèle pytoch seuls les paramètres d'entraînement sont - ils enregistrés? Oui (+ Solution)

【Hot100】21. 合并两个有序链表

After 65 days of closure and control of the epidemic, my home office experience sharing | community essay solicitation

How to realize the function of detecting browser type in Web System

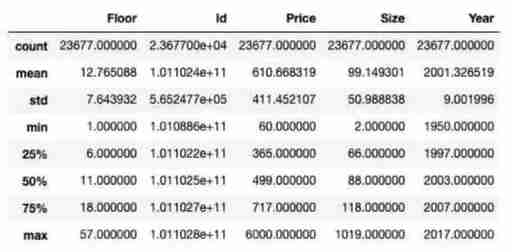

Second hand housing data analysis and prediction system

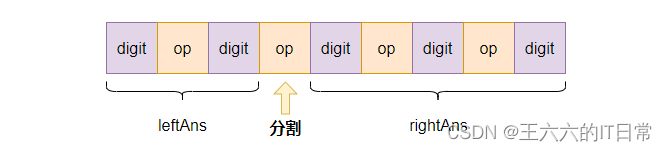

【每日一题】241. 为运算表达式设计优先级

测试人员如何做不漏测?这7点就够了

JASMINER X4 1U deep disassembly reveals the secret behind high efficiency and power saving

API文档工具knife4j使用详解

随机推荐

Codeforces Round #771 (Div. 2)(A-C)

【Hot100】22. 括号生成

Research Report on the overall scale, major manufacturers, major regions, products and applications of battery control units in the global market in 2022

Postman接口测试实战,这5个问题你一定要知道

什么叫在线开户?现在网上开户安全么?

Cron表达式(七子表达式)

At compilation environment setup -win

Google Earth Engine(GEE)——Landsat 9影像全波段影像下载(北京市为例)

Friends who firmly believe that human memory is stored in macromolecular substances, please take a look

Customized Huawei hg8546m restores Huawei's original interface

Complete example of pytorch model saving +does pytorch model saving only save trainable parameters? Yes (+ solution)

Sweet talk generator, regular greeting email machine... Open source programmers pay too much for this Valentine's day

[source code analysis] model parallel distributed training Megatron (5) -- pipestream flush

Automated video production

【实习】解决请求参数过长问题

Talk about macromolecule coding theory and Lao Wang's fallacy from the perspective of evolution theory

Welfare | Hupu isux11 Anniversary Edition is limited to hand sale!

Driverless learning (III): Kalman filter

Research Report on the overall scale, major manufacturers, major regions, products and applications of metal oxide arresters in the global market in 2022

【Kubernetes系列】kubeadm reset初始化前后空间、内存使用情况对比