Transfer Learning - Domain Adaptation

2022-07-31 20:47:00 【Full stack programmer webmaster】

Domain Adaptation

In classic machine learning problems, we usually assume that the training set and the test set follow the same distribution: the model is trained on the training set and evaluated on the test set. In practice, however, the test scenario is often beyond our control, and the test distribution can differ substantially from the training distribution. The model then performs poorly on the test set, a failure that looks like overfitting but is caused by the mismatch between the two distributions. Take face recognition as an example: a model trained on East Asian faces will recognize Western faces noticeably less accurately than East Asian ones. When the training and test distributions are inconsistent, a model obtained by minimizing the empirical error on the training data does not perform well at test time, and transfer learning techniques emerged to address this.

Domain adaptation is a representative method in transfer learning. It uses information-rich source domain samples to improve the performance of the model on the target domain. Two concepts are crucial in the domain adaptation problem. The source domain is a domain that differs from that of the test samples but has rich supervision information. The target domain is the domain in which the test samples lie; it has no labels or only a few. The source and target domains usually share the same kind of task but differ in distribution. Depending on the types of the target and source domains, domain adaptation problems fall into four scenarios: unsupervised, supervised, heterogeneous distribution, and multiple source domains. By performing adaptation at different stages, researchers have proposed three kinds of domain adaptation methods: 1) sample adaptation, which reweights and resamples the source domain samples to approximate the target domain distribution; 2) feature-level adaptation, which projects the source and target domains into a common feature subspace; 3) model-level adaptation, which modifies the source domain error function to take the target domain error into account.

Sample adaptation:

The basic idea is to resample the source domain samples so that the resampled source samples and the target domain samples have roughly the same distribution. The classifier is then retrained on the resampled set.

Instance-based TL

Find data in the source domain that is similar to the target domain and adjust its weight so that the reweighted data matches the target domain data. The weight of these similar samples is then increased so that they account for a larger share when predicting on the target domain. The advantage is that the method is simple and easy to implement. The disadvantage is that the choice of weights and the similarity measure depend on experience, and the data distributions of the source and target domains are often different.
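The reweighting idea can be illustrated with a minimal sketch. It assumes, purely for illustration, that both domains are one-dimensional and roughly Gaussian, so the weight of a source sample can be estimated as the ratio of two fitted Gaussian densities. The data, the helper names (`gaussian_pdf`, `importance_weights`, `weighted_mean`) and the Gaussian assumption are all hypothetical and not part of the original article.

```python
import math
import random
import statistics


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution with the given mean and std at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def importance_weights(source, target):
    """Weight each source sample by an estimate of p_target(x) / p_source(x).

    Assumption: both domains are modeled as 1-D Gaussians fitted to the samples.
    """
    ms, ss = statistics.fmean(source), statistics.stdev(source)
    mt, st = statistics.fmean(target), statistics.stdev(target)
    raw = [gaussian_pdf(x, mt, st) / gaussian_pdf(x, ms, ss) for x in source]
    total = sum(raw)
    # Normalize so that the weights average to 1.
    return [w * len(source) / total for w in raw]


def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


rng = random.Random(0)
source = [rng.gauss(0.0, 1.0) for _ in range(2000)]  # hypothetical source domain
target = [rng.gauss(1.0, 1.0) for _ in range(2000)]  # hypothetical shifted target domain

weights = importance_weights(source, target)
plain = statistics.fmean(source)            # source mean without reweighting
reweighted = weighted_mean(source, weights)  # source mean after reweighting
target_mean = statistics.fmean(target)
```

After reweighting, statistics computed on the source samples (here, the mean) move toward those of the target domain. In real problems the density ratio is not known in closed form and has to be estimated, which is where the dependence on experience mentioned above comes in.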

Feature adaptation:

The basic idea is to learn a common feature representation. In the common feature space, the distributions of the source domain and the target domain should be as similar as possible.

Feature-based TL

Assume that the source domain and the target domain share some common (overlapping) features. A feature transformation maps the features of both domains into the same space, so that the source domain data and the target domain data have the same distribution in that space; traditional machine learning is then applied. The advantage is that it is applicable to most methods and works well. The disadvantage is that the transformation is hard to solve for and is prone to overfitting. Link: https://www.zhihu.com/question/41979241/answer/247421889
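As a rough illustration of mapping both domains into a common space, the sketch below uses per-domain standardization, which is one of the simplest possible feature transforms and only a stand-in for the learned transformations the text describes. The toy data and the helper names (`standardize`, `mean_gap`) are hypothetical. The gap between domains is measured as the squared distance between feature means, which corresponds to MMD with a linear kernel.

```python
import random
import statistics


def standardize(columns):
    """Shift and scale every feature column to zero mean and unit variance."""
    out = []
    for col in columns:
        mean, std = statistics.fmean(col), statistics.pstdev(col)
        out.append([(v - mean) / std for v in col])
    return out


def mean_gap(a_cols, b_cols):
    """Squared distance between the feature means of two domains."""
    return sum(
        (statistics.fmean(a) - statistics.fmean(b)) ** 2
        for a, b in zip(a_cols, b_cols)
    )


rng = random.Random(0)
# Hypothetical two-feature data, stored as one list per feature column.
# The target domain is shifted and rescaled relative to the source domain.
source = [
    [rng.gauss(0.0, 1.0) for _ in range(500)],
    [rng.gauss(5.0, 2.0) for _ in range(500)],
]
target = [
    [rng.gauss(3.0, 2.0) for _ in range(500)],
    [rng.gauss(-1.0, 0.5) for _ in range(500)],
]

gap_before = mean_gap(source, target)
gap_after = mean_gap(standardize(source), standardize(target))
```

Matching means and variances does not guarantee that the two distributions are identical; it only removes the first- and second-order differences per feature. Methods that learn the transformation aim to close the remaining gap, at the cost of the harder optimization noted above.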

Model adaptation:

The basic idea is to adapt directly at the model level. There are two ways to do this. One is to build the model directly while adding a "close distance between domains" constraint to it. The other is iterative: target domain samples are classified step by step, the samples classified with high confidence are added to the training set, and the model is updated.
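The iterative variant can be sketched as a small self-training loop. This is a minimal illustration under stated assumptions, not the method of any particular paper: the classifier is a one-dimensional nearest-centroid model, confidence is the gap between the distances to the two centroids, and the data, the threshold `min_confidence`, and all function names are hypothetical.

```python
import random
import statistics


def fit_centroids(xs, ys):
    """Nearest-centroid model: one mean per class (classes 0 and 1)."""
    return [statistics.fmean([x for x, y in zip(xs, ys) if y == c]) for c in (0, 1)]


def predict(centroids, x):
    """Return (label, confidence); confidence is the gap between the two distances."""
    d0, d1 = abs(x - centroids[0]), abs(x - centroids[1])
    return (0 if d0 <= d1 else 1), abs(d0 - d1)


def accuracy(centroids, xs, ys):
    return sum(predict(centroids, x)[0] == y for x, y in zip(xs, ys)) / len(xs)


def self_train(src_x, src_y, tgt_x, rounds=5, min_confidence=2.0):
    """Retrain on source data plus confidently pseudo-labeled target data."""
    centroids = fit_centroids(src_x, src_y)
    for _ in range(rounds):
        xs, ys = list(src_x), list(src_y)
        for x in tgt_x:
            label, confidence = predict(centroids, x)
            if confidence >= min_confidence:
                xs.append(x)       # add the high-confidence target sample
                ys.append(label)   # with its predicted (pseudo) label
        centroids = fit_centroids(xs, ys)
    return centroids


rng = random.Random(0)
# Hypothetical toy data: two classes; the target domain is shifted by +1.5.
src_x = [rng.gauss(-2.0, 0.5) for _ in range(200)] + [rng.gauss(2.0, 0.5) for _ in range(200)]
src_y = [0] * 200 + [1] * 200
tgt_x = [rng.gauss(-0.5, 0.5) for _ in range(200)] + [rng.gauss(3.5, 0.5) for _ in range(200)]
tgt_y = [0] * 200 + [1] * 200  # used only for evaluation, never for training

source_only = fit_centroids(src_x, src_y)
adapted = self_train(src_x, src_y, tgt_x)
acc_before = accuracy(source_only, tgt_x, tgt_y)
acc_after = accuracy(adapted, tgt_x, tgt_y)
```

The confidence threshold is the key design choice: set too low, wrong pseudo-labels pull the model in the wrong direction; set too high, few target samples are ever added and the model barely adapts.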

Model Transfer (Parameter-based TL)

Assume that the source domain and the target domain share model parameters. A model previously trained on a large amount of source domain data is applied to the target domain for prediction. For example, suppose an image recognition system has been trained on tens of millions of images. When we face a problem in a new image domain, we do not need to collect tens of millions of images again; we only need to transfer the previously trained model to the new domain, where tens of thousands of images are often enough to reach high accuracy. The advantage is that the similarities between the models can be fully exploited. The disadvantage is that the model parameters do not converge easily.
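The pretrain-then-transfer idea can be shown on a deliberately tiny problem. The sketch below fits a one-variable linear model by gradient descent; the two tasks (y = 2x + 1 for the source, y = 2.2x + 0.8 for the target), the sample counts, and the function names are hypothetical choices made only for illustration. It compares a few fine-tuning steps starting from the source parameters with the same number of steps starting from zero.

```python
def gradient_descent(xs, ys, w, b, steps, lr=0.1):
    """Fit y = w * x + b by full-batch gradient descent on squared error."""
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= lr * 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * 2.0 * sum(errors) / n
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error of the linear model on the given data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Source domain: plenty of data (hypothetical task y = 2x + 1).
source_x = [i / 500.0 - 1.0 for i in range(1001)]
source_y = [2.0 * x + 1.0 for x in source_x]

# Target domain: only a few samples from a related task (y = 2.2x + 0.8).
target_x = [i / 5.0 - 1.0 for i in range(11)]
target_y = [2.2 * x + 0.8 for x in target_x]

# Train on the source domain, then reuse the parameters as the starting point.
pretrained = gradient_descent(source_x, source_y, 0.0, 0.0, steps=500)
fine_tuned = gradient_descent(target_x, target_y, *pretrained, steps=5)
from_scratch = gradient_descent(target_x, target_y, 0.0, 0.0, steps=5)

loss_fine_tuned = mse(target_x, target_y, *fine_tuned)
loss_from_scratch = mse(target_x, target_y, *from_scratch)
```

With the same small training budget on the target data, the transferred parameters start close to a good solution and end with a much lower error than training from zero. This benefit depends on the two tasks actually being related; if they are not, the source parameters can be a poor starting point.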

Publisher: Full-stack programmer. When reposting, please indicate the source: https://javaforall.cn/127862.html

Original link: https://javaforall.cn

边栏推荐

猜你喜欢

AI 自动写代码插件 Copilot(副驾驶员)

AI automatic code writing plugin Copilot (co-pilot)

All-platform GPU general AI video supplementary frame super-score tutorial

中文编码的设置与action方法的返回值

Implementing a Simple Framework for Managing Object Information Using Reflection

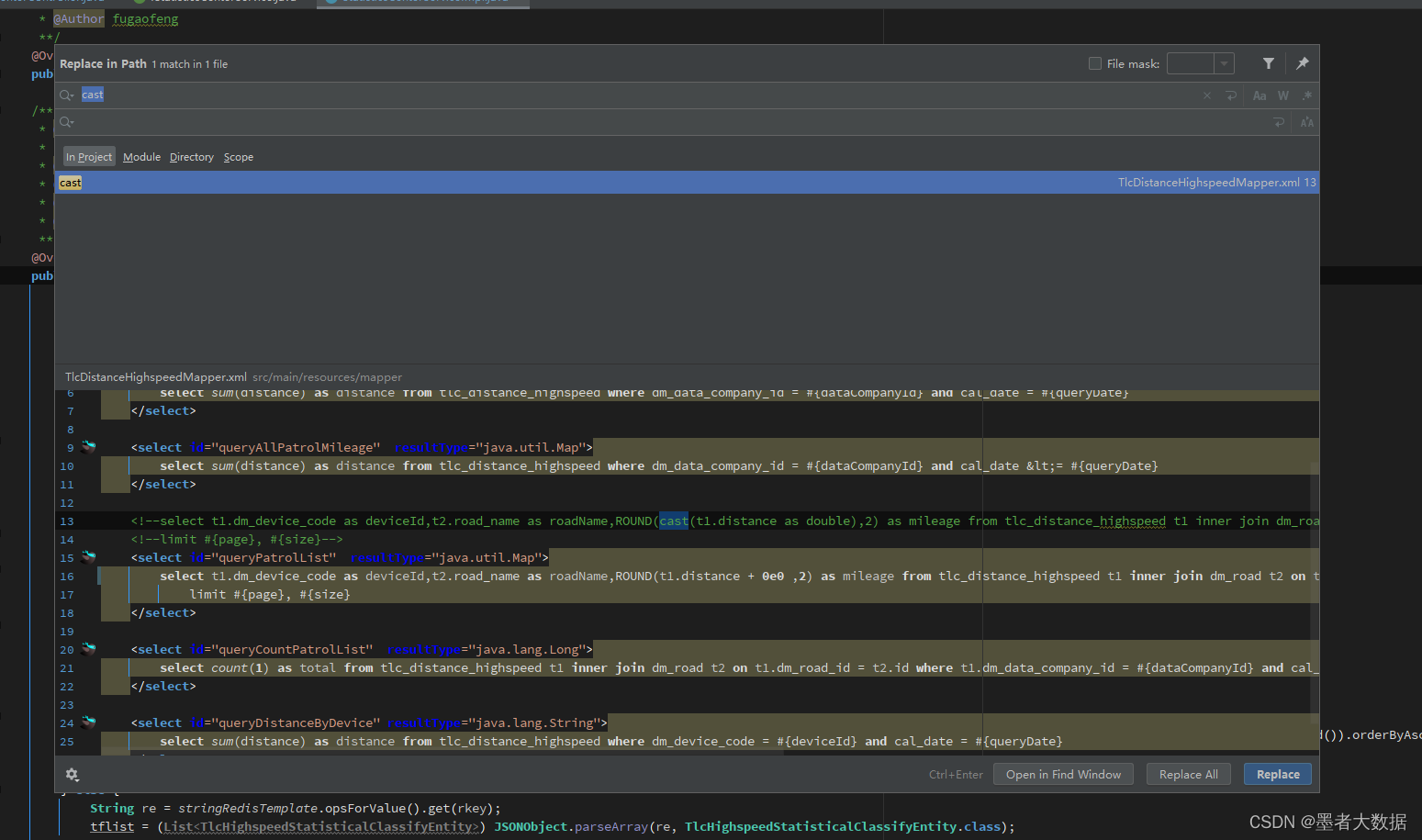

A shortcut to search for specific character content in idea

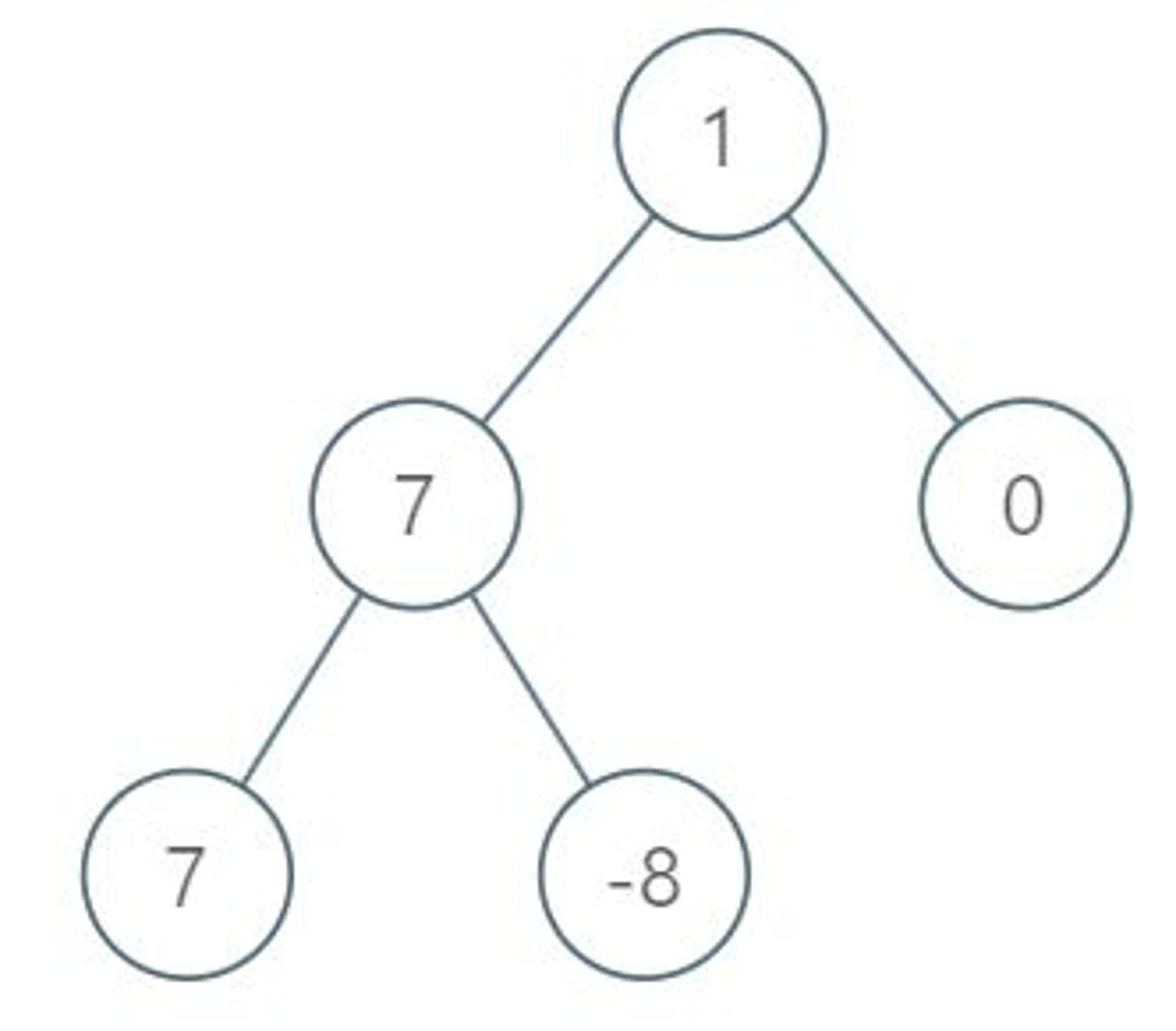

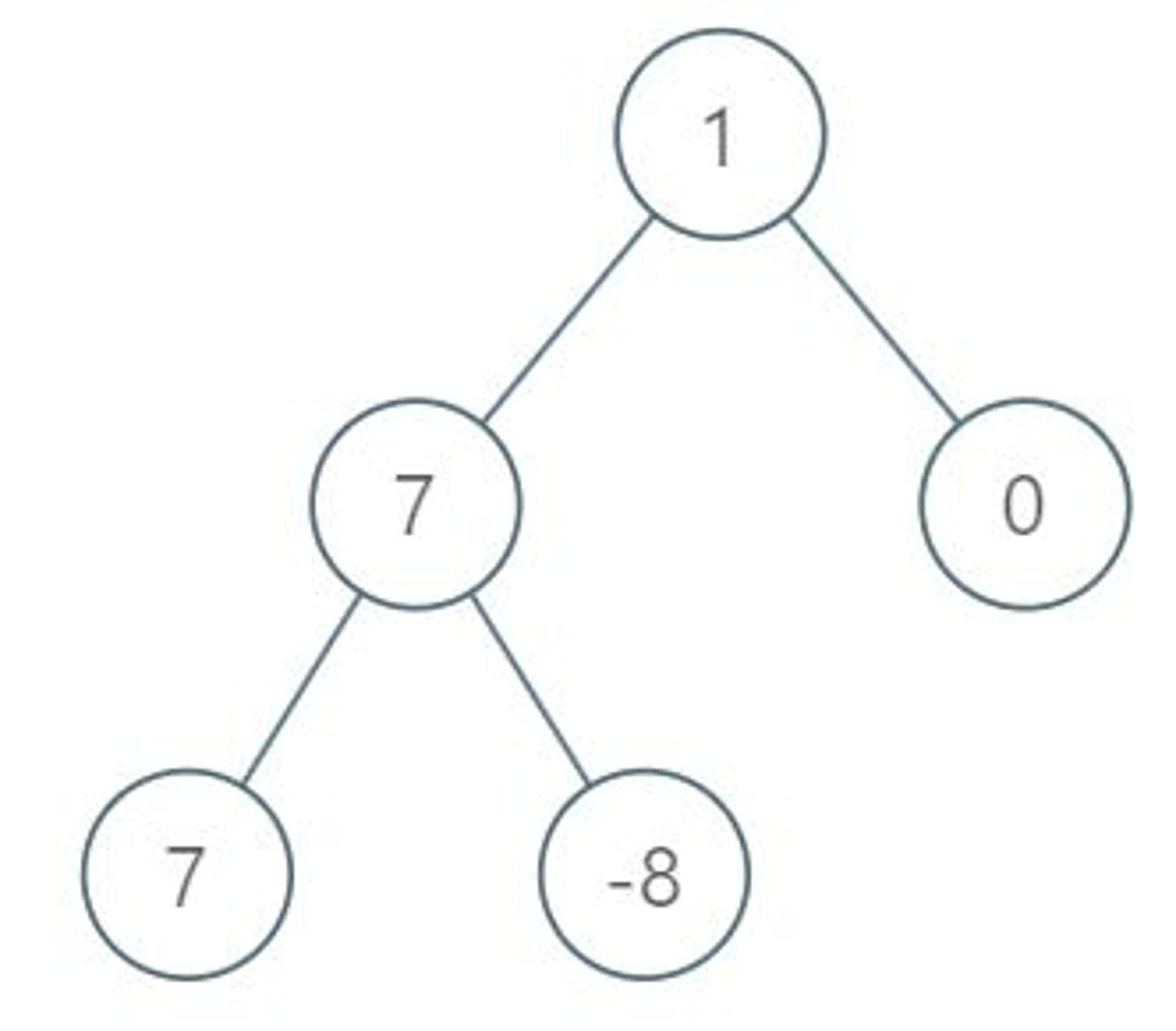

1161. 最大层内元素和 : 层序遍历运用题

Basic configuration of OSPFv3

1161. Maximum Sum of Elements in Layer: Hierarchical Traversal Application Problems

Qualcomm cDSP simple programming example (to query Qualcomm cDSP usage, signature), RK3588 npu usage query

随机推荐

Shell 脚本 快速入门到实战 -02

C# 之 扑克游戏 -- 21点规则介绍和代码实现

返回一个零长度的数组或者空的集合,不要返回null

Taobao/Tmall get Taobao password real url API

Chapter VII

财务盈利、偿债能力指标

Redis Overview: Talk to the interviewer all night long about Redis caching, persistence, elimination mechanism, sentinel, and the underlying principles of clusters!...

Socket回顾与I/0模型

Performance optimization: remember a tree search interface optimization idea

uni-app中的renderjs使用

Linux环境redis集群搭建「建议收藏」

顺序表的实现

BM5 merge k sorted linked lists

Arduino框架下STM32全系列开发固件安装指南

Efficient Concurrency: A Detailed Explanation of Synchornized's Lock Optimization

Carbon教程之 基本语法入门大全 (教程)

1161. 最大层内元素和 : 层序遍历运用题

-xms -xmx(information value)

multithreaded lock

linux查看redis版本命令(linux查看mysql版本号)