当前位置:网站首页>Deep understanding of lightgbm

Deep understanding of lightgbm

2022-06-10 19:45:00 【Xiaobai learns vision】

Click on the above “ Xiaobai studies vision ”, Optional plus " Star standard " or “ Roof placement ”

Heavy dry goods , First time delivery

The main contents of this paper are summarized :

1. LightGBM brief introduction

GBDT (Gradient Boosting Decision Tree) It's an enduring model of machine learning , The main idea is to use weak classifier ( Decision tree ) Iterative training to get the optimal model , The model has good training effect 、 It's not easy to fit .GBDT It's not only widely used in industry , It's often used to classify 、 Click through rate forecast 、 Search sorting and other tasks ; It's also a lethal weapon in various data mining competitions , According to statistics Kaggle More than half of the championship plans in the competition are based on GBDT. and LightGBM(Light Gradient Boosting Machine) Is an implementation GBDT The framework of the algorithm , Support efficient parallel training , And have faster training speed 、 Lower memory consumption 、 Better accuracy 、 Support distributed, can quickly process massive data and other advantages .

1.1 LightGBM Proposed motivation

Common machine learning algorithms , Such as neural networks and other algorithms , You can use mini-batch The way to train , The size of training data is not limited by memory . and GBDT At each iteration , All need to traverse the whole training data many times . If the whole training data is loaded into memory, the size of the training data will be limited ; If you don't load it into memory , Reading and writing training data over and over again takes a lot of time . Especially in the face of massive industrial data , ordinary GBDT The algorithm can not meet its needs .

LightGBM The main reason is to solve GBDT Problems encountered in massive data , Give Way GBDT It can be better and faster used in industrial practice .

1.2 XGBoost The disadvantages of and LightGBM The optimization of the

(1)XGBoost The shortcomings of

stay LightGBM Before I put it forward , The most famous GBDT Tool is XGBoost 了 , It is a decision tree algorithm based on pre sorting method . The basic idea of this algorithm for building a decision tree is : First , All features are pre ordered according to their values . secondly , The cost of traversing the segmentation point is to find the best segmentation point on the feature . Last , After finding the best segmentation point of a feature , Split the data into left and right child nodes .

The advantage of such a pre sorting algorithm is that it can accurately find the segmentation point . But the disadvantages are obvious : First , Big space consumption . Such an algorithm needs to preserve the eigenvalues of the data , The result of feature sorting is also saved ( for example , In order to quickly calculate the segmentation point , Saved the sorted index ), This consumes twice as much memory as the training data . secondly , There's also a big cost of time , When traversing each segmentation point , We need to calculate the splitting gain , The cost of consumption is high . Last , Yes cache Optimization is not friendly . After pre ordering , Feature access to gradients is a kind of random access , And different features access in different order , Be able to cache To optimize . meanwhile , When trees grow on every floor , You need to randomly access an array of row indexes to leaf indexes , And the order of access to different features is different , It can also cause a big cache miss.

(2)LightGBM The optimization of the

To avoid the above XGBoost The defects of , And can speed up without compromising accuracy GBDT The training speed of the model ,lightGBM In traditional GBDT The algorithm is optimized as follows :

be based on Histogram Decision tree algorithm .

One side gradient sampling Gradient-based One-Side Sampling(GOSS): Use GOSS It can reduce a large number of data instances with small gradients , In this way, only the remaining data with high gradient can be used to calculate the information gain , comparison XGBoost Traversing all eigenvalues saves a lot of time and space .

Mutually exclusive feature binding Exclusive Feature Bundling(EFB): Use EFB You can bind many mutually exclusive features into one feature , In this way, the goal of dimensionality reduction is achieved .

With depth limitation Leaf-wise Leaf growth strategy of : majority GBDT Tools that use inefficient layering (level-wise) Decision tree growth strategy , Because it treats leaves of the same layer indiscriminately , It brings a lot of unnecessary expenses . In fact, many leaves have low splitting gains , There's no need to search and split .LightGBM The use of depth limited by leaf growth (leaf-wise) Algorithm .

Directly support category features (Categorical Feature)

Support efficient parallelism

Cache Hit rate optimization

Let's introduce in detail the above mentioned lightGBM optimization algorithm .

2. LightGBM The basic principle of

2.1 be based on Histogram Decision tree algorithm

(1) Histogram algorithm

Histogram algorithm It should be translated into histogram algorithm , The basic idea of histogram algorithm is : First, the continuous floating-point eigenvalues are discretized into It's an integer , At the same time, construct a width of Histogram . When traversing data , According to the discretized value as the index, the statistics are accumulated in the histogram , After traversing the data once , The histogram accumulates the required Statistics , Then according to the discrete value of histogram , Traverse to find the best segmentation point .

chart : Histogram algorithm

Histogram algorithm is simply understood as : First determine how many boxes are needed for each feature (bin) And assign an integer to each bin ; Then the range of floating-point numbers is divided into several intervals , The number of intervals is equal to the number of boxes , Update the sample data belonging to the bin to the bin value ; Finally, histogram (#bins) Express . It looks very tall , It's actually histogram statistics , Put a large amount of data in a histogram .

We know that feature discretization has many advantages , Such as convenient storage 、 Faster calculation 、 Strong robustness 、 The model is more stable . For histogram algorithm, the most direct has the following two advantages :

Less memory : Histogram algorithm not only does not need to store the result of pre sorting , And only the discrete value of the feature can be saved , And this value is generally used Bit integer storage is enough , Memory consumption can be reduced to the original . in other words XGBoost Need to use Bit floating point number to store the characteristic value , And use Bit shaping to store index , and LightGBM Only need to use Bit to store histogram , Memory is reduced to ;

chart : Memory consumption optimization for the pre sort algorithm 1/8

It's less computationally expensive : Pre sort algorithm XGBoost Every time we traverse an eigenvalue, we need to calculate the gain of the splitting , And histogram algorithm LightGBM You just have to calculate Time ( It can be thought of as a constant ), Directly reduce the time complexity from Down to , And we know that .

Of course ,Histogram Algorithms are not perfect . Because the feature is discretized , It's not a very precise segmentation point , So it has an impact on the results . But the results on different datasets show that , The effect of discrete segmentation points on the final accuracy is not great , Even better sometimes . The reason is that decision trees are inherently weak models , It doesn't matter whether the segmentation point is accurate or not ; Coarse segmentation points also have regularization effect , It can effectively prevent over fitting ; Even if the training error of a single tree is slightly larger than that of the accurate segmentation algorithm , But in gradient ascension (Gradient Boosting) There's not much impact under the framework of .

(2) Histogram difference acceleration

LightGBM Another optimization is Histogram( Histogram ) Speed up . The histogram of a leaf can be obtained by the difference between the histogram of its parent node and that of its brother , Double the speed . Usually when constructing histogram , You need to traverse all the data on this leaf , But histogram difference only needs to traverse histogram k A barrel . In the process of actually building the tree ,LightGBM You can also calculate the leaf nodes with small histogram first , Then the histogram is used to make difference to get the leaf node with large histogram , So you can get the histogram of its brother leaves at a very small cost .

chart : Histogram error

Be careful : XGBoost Only non-zero values are considered to accelerate the pre sort , and LightGBM A similar strategy is used : Only non-zero features are used to construct histograms .

2.2 With depth limitation Leaf-wise Algorithm

stay Histogram Algorithm above ,LightGBM Further optimization . First of all, it abandoned most of GBDT Tools are used to grow by layer (level-wise) Decision tree growth strategy , Instead, we use a depth limited growth by leaf (leaf-wise) Algorithm .

XGBoost use Level-wise The growth strategy of , This strategy can split the leaves of the same layer at the same time by traversing the data once , Easy to multithread , Or control the complexity of the model , It's not easy to over fit . But actually Level-wise It's an inefficient algorithm , Because it treats leaves of the same layer indiscriminately , In fact, many leaves have low splitting gains , There's no need to search and split , So there's a lot of unnecessary computing overhead .

chart : Decision trees growing by layers

LightGBM use Leaf-wise The growth strategy of , The strategy takes all of the current leaves from , Find the leaf with the most splitting gain , And then split , So circular . So the same as Level-wise comparison ,Leaf-wise The advantages of : With the same number of splits ,Leaf-wise Can reduce more errors , Get better accuracy ;Leaf-wise The disadvantage of : A deeper decision tree may grow , Produce over fitting . therefore LightGBM Will be in Leaf-wise Added a maximum depth limit to , Avoid over fitting while ensuring high efficiency .

chart : Decision trees based on leaf growth

2.3 One side gradient sampling algorithm

Gradient-based One-Side Sampling It should be translated as unilateral gradient sampling (GOSS).GOSS The algorithm starts from the angle of reducing samples , Exclude most samples with small gradients , Only the remaining samples are used to calculate the information gain , It's an algorithm that balances data reduction and accuracy .

AdaBoost in , Sample weight is an indicator of the importance of data . However, in GBDT There is no original sample weight in , Weight sampling cannot be applied . Fortunately, , We observed that GBDT Each data in has a different gradient value , Very useful for sampling . That is, samples with small gradients , Training error is also relatively small , It shows that the data has been well learned by the model , The direct idea is to get rid of this little gradient data . However, doing so will change the distribution of the data , Will affect the accuracy of the training model , To avoid this problem , Put forward GOSS Algorithm .

GOSS Is a sample sampling algorithm , The purpose is to discard some samples which are not helpful to calculate the information gain and leave the useful ones . According to the definition of calculated information gain , The sample with large gradient has more influence on information gain . therefore ,GOSS In the process of data sampling, only the data with larger gradient is reserved , However, if all the data with smaller gradient are discarded directly, the overall distribution of data will be affected . therefore ,GOSS First, all the values of the feature to be split are sorted in descending order according to the absolute value (XGBoost It's also sorted , however LightGBM You don't have to save the sorted results ), Choose the one with the largest absolute value Data . And then randomly select from the rest of the smaller gradient data Data . And then put this Times a constant , In this way, the algorithm will pay more attention to the samples with insufficient training , It doesn't change the distribution of the original data set too much . Finally use this To calculate the information gain . The picture below is GOSS The specific algorithm .

chart : One side gradient sampling algorithm

2.4 Mutual exclusion feature binding algorithm

High dimensional data is often sparse , This sparsity inspired us to design a lossless method to reduce the dimension of features . Usually the features that are bound are mutually exclusive ( That is, the feature will not be nonzero at the same time , image one-hot), So that the two features are tied together so that information is not lost . If the two features are not completely mutually exclusive ( In some cases, both features are nonzero ), You can use an indicator to measure the degree to which features are not mutually exclusive , Call it the conflict ratio , When the value is small , We can choose to bundle two features that are not completely mutually exclusive , Without affecting the final accuracy . Mutual exclusion feature binding algorithm (Exclusive Feature Bundling, EFB) It is pointed out that if some features are fused and bound , You can reduce the number of features . In this way, the time complexity of constructing histogram is reduced from Turn into , here Refers to the number of feature packages after feature fusion binding , And Far less than .

In response to this idea , We're going to have two problems :

How to determine which features should be tied together (build bundled)?

How to tie a feature to a (merge feature)?

(1) Solve which features should be tied together

Binding independent features is a NP-Hard problem ,LightGBM Of EFB The algorithm transforms this problem into a graph coloring problem , Think of all the features as vertices of a graph , Connect features that are not independent of each other with a single edge , The weight of an edge is the total conflict value of two connected features , The features that need to be bound are those points that need to be painted with the same color in the graph coloring problem ( features ). Besides , We notice that there are usually a lot of features , Though not % Mutually exclusive , But it's rare to take nonzero values at the same time . If our algorithm allows for a small number of collisions , We can get fewer feature packs , Further improve the computational efficiency . After a simple calculation , Random contamination of a small number of eigenvalues will affect the accuracy most , Is the maximum conflict ratio in each binding , When it's relatively small , Can achieve the balance between precision and efficiency . The specific steps can be summarized as follows :

Construct a weighted undirected graph , A vertex is a feature , Sides have weight , Its weight is related to the conflict between the two features ;

Sort in descending order of nodes , The greater the degree , The greater the conflict with other features ;

Walk through each feature , Assign it to an existing feature pack , Or create a new feature pack , To minimize the overall conflict .

The algorithm allows pairwise features not completely exclusive to increase the number of feature bundles , By setting the maximum conflict ratio To balance the accuracy and efficiency of the algorithm .EFB The pseudo code of the algorithm is as follows :

chart : Greedy binding algorithm

Algorithm 3 The time complexity of is , Only deal with it once before training , The time complexity is acceptable when there are not many features , But it's hard to deal with the characteristics of the million dimensions . In order to continue to improve efficiency ,LightGBM This paper proposes a more efficient sorting strategy without graph : Sort features by the number of nonzero values , This is similar to the degree ordering of graph nodes , Because more non-zero values usually lead to conflicts , The new algorithm is in 3 Based on this, the sorting strategy is changed .

(2) How to tie features together

Feature merging algorithm , The key is that the original features can be separated from the merged features . Bind several features to the same bundle You need to ensure that the value of the original feature before binding can be in bundle To identify , in consideration of histogram-based The algorithm saves continuous values as discrete bins, We can divide the values of different features into bundle Different in bin( The box ) in , This can be solved by adding an offset constant to the eigenvalue . such as , We are bundle Two features are bound in A and B,A The original value of a feature is an interval ,B The original value of a feature is an interval , We can do it in B Add an offset constant to the value of the feature , Change its value range to , The bound feature value range is , That way, you can easily merge features A and B 了 . The specific feature merging algorithm is as follows :

chart : Feature merging algorithm

3. LightGBM Engineering optimization of

We're going to write a paper 《Lightgbm: A highly efficient gradient boosting decision tree》 Optimization not mentioned in , And in his related papers 《A communication-efficient parallel algorithm for decision tree》 The optimization scheme mentioned in , Put it in this section as LightGBM To introduce to you .

3.1 Directly support category features

In fact, most machine learning tools can not directly support category features , Generally, we need to put the category characteristics , adopt one-hot code , Transform to multidimensional features , Reduced the efficiency of space and time . But we know that... Is not recommended for decision trees one-hot code , Especially when there are many categories in the category feature , There will be the following problems :

There will be a sample segmentation imbalance problem , This leads to a very small slitting gain ( It's a waste of this feature ). Use one-hot code , It means that you can only use one vs rest( For example, is it a dog , Is it a cat or something ) Segmentation method of . for example , After the classification of animals , Will produce whether the dog , Whether it's a cat or not , There are only a few samples in this series of characteristics , A large number of samples are , At this time, the segmentation of the sample will produce imbalance , This means that the slitting gain will also be small . The smaller slice set , Its proportion in the total sample is too small , No matter how much gain , Multiplied by that ratio, it's almost negligible ; The larger split sample set , It's almost the original sample set , The gain is almost zero . A more intuitive understanding is that there is no difference between unbalanced segmentation and non segmentation .

It will affect the learning of decision tree . Because even if we can segment the features of this category , Single hot coding also splits the data into many scattered small spaces , As shown on the left of the figure below . Decision tree learning uses statistical information , In these small spaces of data , The statistics are not accurate , The learning effect will be worse . But if you use the segmentation method on the right side of the figure below , The data will be split into two larger spaces , Further study will be better . The meaning of the leaf node on the right of the figure below is or put on the left child , The rest is on the right .

chart : The picture on the left is based on one-hot Code split , The right to LightGBM be based on many-vs-many Split up

The use of category features is very common in practice . And to solve one-hot The inadequacy of coding in dealing with category features ,LightGBM Optimized support for category features , You can directly input category features , No extra unfolding is required .LightGBM use many-vs-many The classification features are divided into two subsets , To achieve the optimal segmentation of class features . Suppose that a feature of a dimension has Categories , Then there are Maybe , The time complexity is ,LightGBM be based on Fisher Of 《On Grouping For Maximum Homogeneity》 The paper implements Time complexity of .

The algorithm flow is shown in the figure below , Before enumerating split points , First put the histogram according to each category label Ranking the mean values ; Then enumerate the optimal segmentation points according to the sorting results . You can see it in the picture below , Is the mean of the category . Of course , This method is easy to over fit , therefore LightGBM It also adds a lot of constraints and regularization for this method .

chart :LightGBM An optimal segmentation algorithm for class features

stay Expo Experimental results on data sets show that , Compared to the expansion method , Use LightGBM The supported category features can accelerate the training speed by times , And the accuracy is the same . what's more ,LightGBM Is the first one that directly supports category features GBDT Tools .

3.2 Support efficient parallelism

(1) Feature parallelism

The main idea of feature parallelism is that different machines find the best segmentation point on different feature sets , Then synchronize the optimal split point between machines .XGBoost This feature parallel method is used . This feature parallel method has a big disadvantage : It's about dividing the data vertically , Each machine contains different data , Then we use different machines to find the optimal split point of different features , The partition results need to be communicated to each machine , Added extra complexity .

LightGBM The data is not divided vertically , It's all stored on every machine , After getting the best partition scheme, partition can be performed locally and unnecessary communication can be reduced . The specific process is shown in the figure below .

chart : Feature parallelism

(2) Data parallelism

The traditional data parallel strategy is to divide data horizontally , Let different machines construct histograms locally first , Then make a global merge , Finally, the best segmentation point is found on the merged histogram . There is a big drawback to this data partitioning : Too much communication overhead . If you use point-to-point communication , The communication cost of a machine is about

402 Payment Required

.LightGBM Use distributed protocols in data parallelism (Reduce scatter) Allocate the task of histogram merging to different machines , Reduce communication and Computing , And make use of histogram , Further reduced traffic by half . The specific process is shown in the figure below .

chart : Data parallelism

(3) Vote in parallel

Data parallelism based on voting further optimizes the communication cost in data parallelism , Make the communication cost constant . When there's a lot of data , In order to reduce the traffic, only the histogram of some features is merged by voting parallel , Can get very good acceleration effect . The specific process is shown in the figure below .

The general steps are two steps :

Find out locally Top K features , Based on the voting, the features that may be the optimal segmentation point are screened out ;

When merging, only the features selected by each machine are merged .

chart : Vote in parallel

3.3 Cache Hit rate optimization

XGBoost Yes cache Optimization is not friendly , As shown in the figure below . After pre ordering , Feature access to gradients is a kind of random access , And different features access in different order , Be able to cache To optimize . meanwhile , When trees grow on every floor , You need to randomly access an array of row indexes to leaf indexes , And the order of access to different features is different , It can also cause a big cache miss. In order to solve the problem of low cache hit rate ,XGBoost The cache access algorithm is improved .

chart : Random access can cause cache miss

and LightGBM The histogram algorithm is applied to Cache Friendly by nature :

First , All features get gradients in the same way ( The difference in XGBoost The gradient is obtained by different indexes ), Only the gradients need to be sorted and continuous access can be achieved , Greatly improves the cache hit rate ;

secondly , Because you don't need to store an array of row indexes to leaf indexes , Reduced storage consumption , And it doesn't exist Cache Miss The problem of .

chart :LightGBM Increase cache hit rate

4. LightGBM Advantages and disadvantages

4.1 advantage

This part mainly summarizes LightGBM be relative to XGBoost The advantages of , This paper introduces memory and speed .

(1) Faster

LightGBM The histogram algorithm is used to transform the traversal sample into the ergodic histogram , Greatly reduces the time complexity ;

LightGBM In the training process, the single side gradient algorithm is used to filter out the samples with small gradient , It reduces a lot of computation ;

LightGBM Based on Leaf-wise The growth strategy of the algorithm constructs a tree , It reduces a lot of unnecessary computation ;

LightGBM The optimized features are used in parallel 、 Data parallel methods accelerate computation , When the amount of data is very large, we can also adopt the strategy of voting parallel ;

LightGBM The cache is also optimized , Increased cache hit rate ;

(2) Smaller memory

XGBoost After using the pre sort, it is necessary to record the index of the characteristic value and the statistical value of the corresponding sample , and LightGBM The histogram algorithm is used to transform the eigenvalue into bin value , And you don't need to record the characteristics to the index of the sample , Change the spatial complexity from Reduced to , Greatly reduces memory consumption ;

LightGBM The histogram algorithm is used to transform the stored eigenvalues into storage bin value , Reduced memory consumption ;

LightGBM In the training process, the mutual exclusion feature binding algorithm is used to reduce the number of features , Reduced memory consumption .

4.2 shortcoming

A deeper decision tree may grow , Produce over fitting . therefore LightGBM stay Leaf-wise A maximum depth limit has been added to it , Avoid over fitting while ensuring high efficiency ;

Boosting Families are iterative algorithms , Each iteration adjusts the sample weight according to the prediction results of the previous iteration , So as the iteration goes on , The error will be smaller and smaller , Deviation of the model (bias) It will keep falling . because LightGBM It's a deviation based algorithm , So it's sensitive to noise ;

When looking for the optimal solution , Based on the optimal segmentation variable , The idea that the optimal solution is the synthesis of all the characteristics is not taken into account ;

5. LightGBM example

All the data sets and code in this article are in my GitHub in , Address :https://github.com/Microstrong0305/WeChat-zhihu-csdnblog-code/tree/master/Ensemble%20Learning/LightGBM

5.1 install LightGBM Dependency package

pip install lightgbm5.2 LightGBM Classification and regression

LightGBM There are two broad categories of interfaces :LightGBM The native interface and scikit-learn Interface , also LightGBM Can achieve classification and regression tasks .

(1) be based on LightGBM Classification of native interfaces

import lightgbm as lgb

from sklearn import datasets

from sklearn.model_selection import train_test_split

import numpy as np

from sklearn.metrics import roc_auc_score, accuracy_score

# Load data

iris = datasets.load_iris()

# Divide the training set and the test set

X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.3)

# Convert to Dataset data format

train_data = lgb.Dataset(X_train, label=y_train)

validation_data = lgb.Dataset(X_test, label=y_test)

# Parameters

params = {

'learning_rate': 0.1,

'lambda_l1': 0.1,

'lambda_l2': 0.2,

'max_depth': 4,

'objective': 'multiclass', # Objective function

'num_class': 3,

}

# model training

gbm = lgb.train(params, train_data, valid_sets=[validation_data])

# Model to predict

y_pred = gbm.predict(X_test)

y_pred = [list(x).index(max(x)) for x in y_pred]

print(y_pred)

# Model to evaluate

print(accuracy_score(y_test, y_pred))(2) be based on Scikit-learn Classification of interfaces

from lightgbm import LGBMClassifier

from sklearn.metrics import accuracy_score

from sklearn.model_selection import GridSearchCV

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.externals import joblib

# Load data

iris = load_iris()

data = iris.data

target = iris.target

# Divide training data and test data

X_train, X_test, y_train, y_test = train_test_split(data, target, test_size=0.2)

# model training

gbm = LGBMClassifier(num_leaves=31, learning_rate=0.05, n_estimators=20)

gbm.fit(X_train, y_train, eval_set=[(X_test, y_test)], early_stopping_rounds=5)

# Model storage

joblib.dump(gbm, 'loan_model.pkl')

# Model loading

gbm = joblib.load('loan_model.pkl')

# Model to predict

y_pred = gbm.predict(X_test, num_iteration=gbm.best_iteration_)

# Model to evaluate

print('The accuracy of prediction is:', accuracy_score(y_test, y_pred))

# Importance of characteristics

print('Feature importances:', list(gbm.feature_importances_))

# The grid search , Parameter optimization

estimator = LGBMClassifier(num_leaves=31)

param_grid = {

'learning_rate': [0.01, 0.1, 1],

'n_estimators': [20, 40]

}

gbm = GridSearchCV(estimator, param_grid)

gbm.fit(X_train, y_train)

print('Best parameters found by grid search are:', gbm.best_params_)(3) be based on LightGBM Regression of native interfaces

about LightGBM Solve the problem of return , We use it Kaggle The return problem in the game :House Prices: Advanced Regression Techniques, Address :https://www.kaggle.com/c/house-prices-advanced-regression-techniques To give an example .

There are a total of columns in the training dataset of the house price forecast , The first column is Id, The last column is label, The middle column is the feature . In this list of features , Columns are typed variables , Columns are integer variables , Columns are floating point variables . There are missing values in the training data set .

import pandas as pd

from sklearn.model_selection import train_test_split

import lightgbm as lgb

from sklearn.metrics import mean_absolute_error

from sklearn.preprocessing import Imputer

# 1. Reading documents

data = pd.read_csv('./dataset/train.csv')

# 2. Split data input : features Output : Predict the target variable

y = data.SalePrice

X = data.drop(['SalePrice'], axis=1).select_dtypes(exclude=['object'])

# 3. Split training set 、 Test set , Segmentation ratio 7.5 : 2.5

train_X, test_X, train_y, test_y = train_test_split(X.values, y.values, test_size=0.25)

# 4. Null processing , The default method : Fill in with the average value of the characteristic column

my_imputer = Imputer()

train_X = my_imputer.fit_transform(train_X)

test_X = my_imputer.transform(test_X)

# 5. Convert to Dataset data format

lgb_train = lgb.Dataset(train_X, train_y)

lgb_eval = lgb.Dataset(test_X, test_y, reference=lgb_train)

# 6. Parameters

params = {

'task': 'train',

'boosting_type': 'gbdt', # Set promotion type

'objective': 'regression', # Objective function

'metric': {'l2', 'auc'}, # Evaluation function

'num_leaves': 31, # Number of leaf nodes

'learning_rate': 0.05, # Learning rate

'feature_fraction': 0.9, # The proportion of feature selection for tree building

'bagging_fraction': 0.8, # The sampling ratio of the tree building sample is

'bagging_freq': 5, # k Mean every k The next iteration is executed bagging

'verbose': 1 # <0 Show fatal , =0 Display error ( Warning ), >0 display information

}

# 7. call LightGBM Model , Training using training set data ( fitting )

# Add verbosity=2 to print messages while running boosting

my_model = lgb.train(params, lgb_train, num_boost_round=20, valid_sets=lgb_eval, early_stopping_rounds=5)

# 8. Using models to predict test set data

predictions = my_model.predict(test_X, num_iteration=my_model.best_iteration)

# 9. Evaluate the prediction results of the model ( Mean absolute error )

print("Mean Absolute Error : " + str(mean_absolute_error(predictions, test_y)))(4) be based on Scikit-learn Regression of interface

import pandas as pd

from sklearn.model_selection import train_test_split

import lightgbm as lgb

from sklearn.metrics import mean_absolute_error

from sklearn.preprocessing import Imputer

# 1. Reading documents

data = pd.read_csv('./dataset/train.csv')

# 2. Split data input : features Output : Predict the target variable

y = data.SalePrice

X = data.drop(['SalePrice'], axis=1).select_dtypes(exclude=['object'])

# 3. Split training set 、 Test set , Segmentation ratio 7.5 : 2.5

train_X, test_X, train_y, test_y = train_test_split(X.values, y.values, test_size=0.25)

# 4. Null processing , The default method : Fill in with the average value of the characteristic column

my_imputer = Imputer()

train_X = my_imputer.fit_transform(train_X)

test_X = my_imputer.transform(test_X)

# 5. call LightGBM Model , Training using training set data ( fitting )

# Add verbosity=2 to print messages while running boosting

my_model = lgb.LGBMRegressor(objective='regression', num_leaves=31, learning_rate=0.05, n_estimators=20,

verbosity=2)

my_model.fit(train_X, train_y, verbose=False)

# 6. Using models to predict test set data

predictions = my_model.predict(test_X)

# 7. Evaluate the prediction results of the model ( Mean absolute error )

print("Mean Absolute Error : " + str(mean_absolute_error(predictions, test_y)))5.3 LightGBM Adjustable parameter

In the last part ,LightGBM Some parameters of the model are set simply , But most of them use the default parameters of the model , But default parameters are not the best . Want to let LightGBM Better performance , Need to be right LightGBM Fine tuning the model parameters . The regression model is shown in the following figure , The parameters that the classification model needs to adjust are similar to this .

chart :LightGBM Regression model parameters adjustment

6. About LightGBM Reflections on some problems

6.1 LightGBM And XGBoost What are the connections and differences between them ?

(1)LightGBM Based on histogram Decision tree algorithm , This is different from XGBoost Greedy algorithm and approximation algorithm in ,histogram The algorithm has many advantages in memory and computing cost .1) Memory advantage : Obviously , The memory consumption of histogram algorithm is

402 Payment Required

Bit floating point number to save .2) The computational advantage : The pre sorting algorithm needs to traverse the eigenvalues of all samples when choosing the splitting features to calculate the splitting revenue , Time is , The histogram algorithm only needs to traverse the bucket , Time is .(2)XGBoost It's using level-wise The split strategy , and LightGBM Adopted leaf-wise The strategy of , The difference is that XGBoost Do undifferentiated splitting for all nodes of each layer , Maybe some nodes have very small gain , It has little effect on the results , however XGBoost There was also a split , It brings unnecessary expenses .leaft-wise The method is to select the node with the largest splitting profit among all the current leaf nodes , So recursively , Obviously leaf-wise It's easy to over fit , Because it's easy to fall into a higher depth , So we need to limit the maximum depth , So as to avoid over fitting .

(3)XGBoost Build histograms dynamically at each level , because XGBoost The histogram algorithm is not for a specific feature , Instead, all features share a histogram ( The weight of each sample is the second derivative ), So every layer has to rebuild the histogram , and LightGBM There is a histogram for each feature , So it's enough to build a histogram once .

(4)LightGBM Use histogram to do difference acceleration , The histogram of a child node can be obtained by subtracting the histogram of a sibling node from the histogram of the parent node , To speed up the calculation .

(5)LightGBM Support category features , There is no need to heat code alone .

(6)LightGBM The feature parallel and data parallel algorithms are optimized , In addition, a voting parallel scheme is added .

(7)LightGBM A gradient based one-sided sampling is used to reduce the training samples and keep the data distribution unchanged , Reduce the model accuracy caused by the change of data distribution .

(8) Feature binding is transformed into graph coloring problem , Reduce the number of features .

The good news !

Xiaobai learns visual knowledge about the planet

Open to the outside world

download 1:OpenCV-Contrib Chinese version of extension module

stay 「 Xiaobai studies vision 」 Official account back office reply : Extension module Chinese course , You can download the first copy of the whole network OpenCV Extension module tutorial Chinese version , Cover expansion module installation 、SFM Algorithm 、 Stereo vision 、 Target tracking 、 Biological vision 、 Super resolution processing and other more than 20 chapters .

download 2:Python Visual combat project 52 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :Python Visual combat project , You can download, including image segmentation 、 Mask detection 、 Lane line detection 、 Vehicle count 、 Add Eyeliner 、 License plate recognition 、 Character recognition 、 Emotional tests 、 Text content extraction 、 Face recognition, etc 31 A visual combat project , Help fast school computer vision .

download 3:OpenCV Actual project 20 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :OpenCV Actual project 20 speak , You can download the 20 Based on OpenCV Realization 20 A real project , Realization OpenCV Learn advanced .

Communication group

Welcome to join the official account reader group to communicate with your colleagues , There are SLAM、 3 d visual 、 sensor 、 Autopilot 、 Computational photography 、 testing 、 Division 、 distinguish 、 Medical imaging 、GAN、 Wechat groups such as algorithm competition ( It will be subdivided gradually in the future ), Please scan the following micro signal clustering , remarks :” nickname + School / company + Research direction “, for example :” Zhang San + Shanghai Jiaotong University + Vision SLAM“. Please note... According to the format , Otherwise, it will not pass . After successful addition, they will be invited to relevant wechat groups according to the research direction . Please do not send ads in the group , Or you'll be invited out , Thanks for your understanding ~边栏推荐

- Morris traversal of binary tree

- Source code analysis of Tencent libco collaboration open source library (III) -- Exploring collaboration switching process assembly register saving and efficient collaboration environment

- 2022.05.27 (lc_647_palindrome substring)

- Harbor镜像拉取凭证配置

- 2022.05.28(LC_516_最长回文子序列)

- Some questions often asked during the interview. Come and see how many correct answers you can get

- APICloud可视化开发丨一键生成专业级源码

- Docker/Rancher2部署redis:5.0.9

- 腾讯Libco协程开源库 源码分析(三)---- 探索协程切换流程 汇编寄存器保存 高效保存协程环境

- MySql的MyISAM引擎切换InnoDB时报错Row size too large (&gt; 8126)解决

猜你喜欢

![[C language] accidentally write a bug? Mortals teach you how to write good code [explain debugging skills in vs]](/img/34/6f254e027e5941bb2c3462b665c3ba.png)

[C language] accidentally write a bug? Mortals teach you how to write good code [explain debugging skills in vs]

618 great promotion is coming, mining bad reviews with AI and realizing emotional analysis of 100 million comments with zero code

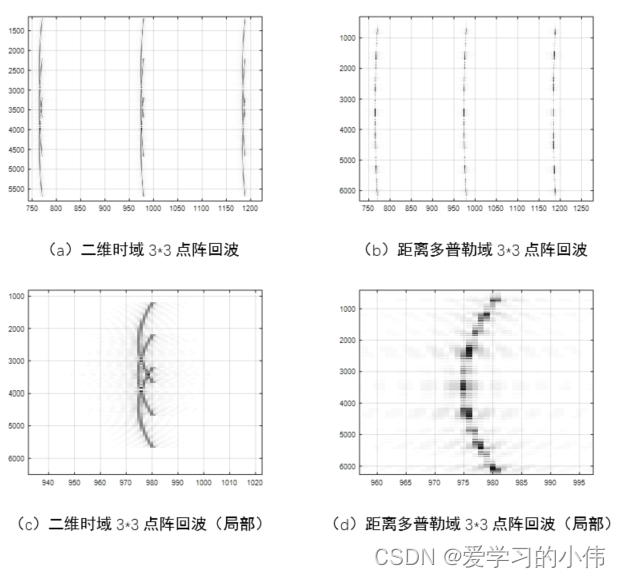

SAR回波信号基本模型与性质

SAR image focusing quality evaluation plug-in

Design and implementation of SSM based traffic metering cloud system Rar (thesis + project source code)

Tencent cloud database tdsql- a big guy talks about the past, present and future of basic software

![MySQL advanced Chapter 1 (installing MySQL under Linux) [i]](/img/f9/60998504e20561886b5f62eb642488.png)

MySQL advanced Chapter 1 (installing MySQL under Linux) [i]

【C语言】一不小心写出bug?凡人教你如何写出好代码【详解vs中调试技巧】

禁止摆烂,软件测试工程师从初级到高级进阶指南,助你一路晋升

Sliding window maximum value problem

随机推荐

一文带你了解J.U.C的FutureTask、Fork/Join框架和BlockingQueue

Writing technical articles is a fortune for the future

Yuntu says that every successful business system cannot be separated from apig

MySql的MyISAM引擎切换InnoDB时报错Row size too large (&gt; 8126)解决

领域驱动设计(六) - 架构设计浅谈

Rmarkdown 轻松录入数学公式

Basic model and properties of SAR echo signal

Source code analysis of Tencent libco collaboration open source library (III) -- Exploring collaboration switching process assembly register saving and efficient collaboration environment

一文带你了解J.U.C的FutureTask、Fork/Join框架和BlockingQueue

SAR回波信号基本模型与性质

In the all digital era, how can enterprise it complete transformation?

我的第一部作品:TensorFlow2.x

云图说|每个成功的业务系统都离不开APIG的保驾护航

Performance and high availability analysis of database firewall

大厂测试员年薪30万到月薪8K,吐槽工资太低,反被网友群嘲?

How to query the database table storage corresponding to a field on the sapgui screen

APICloud可视化开发新手图文教程

Mysql database design concept (multi table query & transaction operation)

DataScience&ML:金融科技领域之风控之风控指标/字段相关概念、口径逻辑之详细攻略

[advanced C language] data storage [part I] [ten thousand words summary]