当前位置:网站首页>Can't get data for duplicate urls using Scrapy framework, dont_filter=True

Can't get data for duplicate urls using Scrapy framework, dont_filter=True

2022-08-03 09:32:00 【The moon give me copy code】

Scenario: The code reports no errors, and the xpath expression is determined to be parsed correctly.

Possible cause: You are using Scrapy to request duplicate urls.

Scrapy has duplicate filtering built in, which is turned on by default.

The following example, parse2 cannot be called:

import scrapyclass ExampleSpider(scrapy.Spider):name="test"# allowed_domains = ["https://www.baidu.com/"]start_urls = ["https://www.baidu.com/"]def parse(self, response):yield scrapy.Request(self.start_urls[0],callback=self.parse2)def parse2(self, response):print(response.url)When Scrapy enters parse, it will request start_urls[0] by default, and when you request start_urls[0] again in parse, the bottom layer of Scrapy will filter out duplicate urls by default, and will not process the request.commit, that's why parse2 is not called.

Workaround:

Add dont_filter=True parameter so that Scrapy doesn't filter out duplicate requests.

import scrapyclass ExampleSpider(scrapy.Spider):name="test"# allowed_domains = ["https://www.baidu.com/"]start_urls = ["https://www.baidu.com/"]def parse(self, response):yield scrapy.Request(self.start_urls[0],callback=self.parse2,dont_filter=True)def parse2(self, response):print(response.url)At this point, parse2 will be called normally.

边栏推荐

猜你喜欢

随机推荐

别人都不知道的“好用”网站,让你的效率飞快

【LeetCode】622.设计循环队列

MySQL——几种常见的嵌套查询

兔起鹘落全端涵盖,Go lang1.18入门精炼教程,由白丁入鸿儒,全平台(Sublime 4)Go lang开发环境搭建EP00

SQL试题

For heavy two-dimensional arrays in PHP

深度学习之 10 卷积神经网络1

索引(三)

基于二次型性能指标的燃料电池过氧比RBF-PID控制

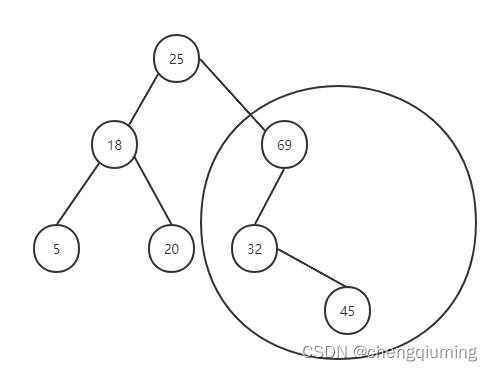

015-Balanced binary tree (1)

selenium IDE的3种下载安装方式

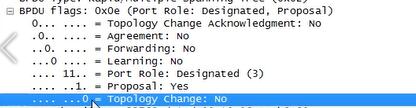

STP普通生成树安全特性— bpduguard特性 + bpdufilter特性 + guard root 特性 III loopguard技术( 详解+配置)

【LeetCode】622. Design Circular Queue

cnpm安装步骤

Go操作Redis数据库

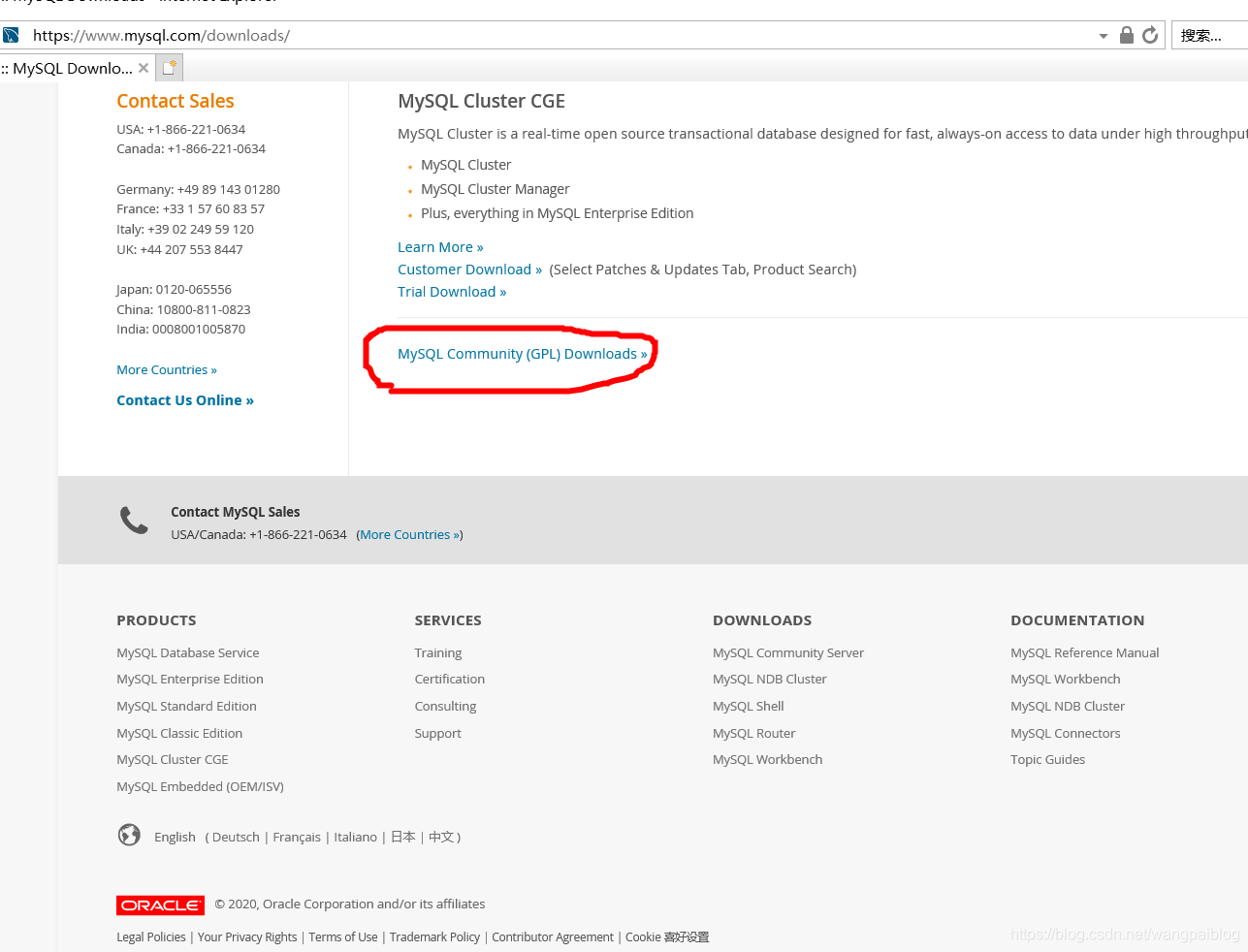

MySQL8重置root账户密码图文教程

Chrome F12 keep before request information network

LINGO 18.0软件安装包下载及安装教程

015-平衡二叉树(一)

LeetCode第三题(Longest Substring Without Repeating Characters)三部曲之二:编码实现