Transfer learning: freezing network layers

2022-08-01 11:05:00 【Wsyoneself】

Note: methods 1-3 use PyTorch; method 4 uses TensorFlow.

Fine-tuning here means freezing the front layers of the network and then training only the last layer.

- Method 1: pass all parameters to the optimizer, but set requires_grad of the parameters of the layers to be frozen to False:

      optimizer = optim.SGD(model.parameters(), lr=1e-2)  # all parameters are passed in
      for name, param in model.named_parameters():
          if name in names_to_freeze:  # placeholder: names of the parameters of the layers to be frozen
              param.requires_grad = False

- Method 2: pass only the parameters of the unfrozen layers to the optimizer:

      optimizer = optim.SGD(model.fc2.parameters(), lr=1e-2)  # the optimizer only receives the parameters of fc2

- Method 3 (best practice): combine 1 and 2, so that the optimizer only receives the parameters with requires_grad=True. This uses less memory and is more efficient.

Combining 1 and 2 helps in two ways. It saves GPU memory, because parameters that will not be updated are not passed to the optimizer. It increases speed, because setting requires_grad of those parameters to False saves the time spent computing their gradients.
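The combination of 1 and 2 can be sketched as follows. This is a minimal illustration, not the author's original code: the `Net` class, its layer sizes, the random data, and the MSE loss are assumptions made for the example. Only the layer name `fc2` comes from the text above.

```python
import torch
import torch.nn as nn
import torch.optim as optim


class Net(nn.Module):
    """Hypothetical two-layer network; fc1 is frozen, fc2 is trained."""

    def __init__(self):
        super().__init__()
        self.fc1 = nn.Linear(4, 8)
        self.fc2 = nn.Linear(8, 2)

    def forward(self, x):
        return self.fc2(torch.relu(self.fc1(x)))


torch.manual_seed(0)
model = Net()

# Step 1: turn off gradients for every parameter outside fc2.
for name, param in model.named_parameters():
    if not name.startswith("fc2"):
        param.requires_grad = False

# Step 2: hand the optimizer only the parameters that still require gradients.
trainable = [p for p in model.parameters() if p.requires_grad]
optimizer = optim.SGD(trainable, lr=1e-2)

# Keep copies of the weights so the effect of one step can be inspected.
fc1_before = model.fc1.weight.detach().clone()
fc2_before = model.fc2.weight.detach().clone()

# One training step on random data (assumed inputs and targets).
x = torch.randn(16, 4)
target = torch.randn(16, 2)
loss = nn.functional.mse_loss(model(x), target)
optimizer.zero_grad()
loss.backward()
optimizer.step()

print("fc1 unchanged:", torch.equal(model.fc1.weight, fc1_before))
print("fc2 updated:", not torch.equal(model.fc2.weight, fc2_before))
print("fc1 grad:", model.fc1.weight.grad)
```

Filtering on `requires_grad` when building the optimizer means the freezing rule is written in one place; changing which layers are frozen does not require touching the optimizer line.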

- Method 4 (TensorFlow 1.x API): pass only the variables to be trained to the optimizer through var_list. The code is as follows:

      # Define the optimizer
      optimizer = tf.train.AdamOptimizer(1e-3)
      # Select the parameters to be optimized
      output_vars = tf.get_collection(tf.GraphKeys.TRAINABLE_VARIABLES, scope='outpt')
      train_step = optimizer.minimize(loss_score, var_list=output_vars)

Select the layers whose parameters need to be updated with the tf.get_collection call, and leave out the layers that do not need to be updated.

- Main purpose of the function: retrieve variables from a collection.

- It gets all the elements in the collection named by key and returns them as a list. The order of the list follows the order in which the variables were added to the collection. scope is an optional parameter that specifies a name scope: if it is given, only the variables in the 'key' collection whose names fall under that scope are returned (for example, 'outpt' in the sample code returns the parameters of the outpt layer); if it is omitted, all variables in the collection are returned.
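To make the effect of scope and var_list concrete, here is a small self-contained sketch. It assumes TensorFlow 2.x, where the 1.x-style API is reached through `tf.compat.v1`; the `hidden` scope, the tensor shapes, and the random data are hypothetical and chosen only for the example. The scope name `outpt` is taken from the sample code.

```python
import numpy as np
import tensorflow as tf

tf1 = tf.compat.v1  # 1.x-style API (assumes a TensorFlow 2.x installation)

graph = tf.Graph()
with graph.as_default():
    x = tf1.placeholder(tf.float32, [None, 4])
    y = tf1.placeholder(tf.float32, [None, 1])

    # Layer to be frozen (hypothetical scope name).
    with tf1.variable_scope("hidden"):
        w1 = tf1.get_variable("w", shape=[4, 8])
        b1 = tf1.get_variable("b", shape=[8], initializer=tf1.zeros_initializer())
        h = tf.nn.relu(tf.matmul(x, w1) + b1)

    # Layer to be trained; 'outpt' is the scope name used in the sample code.
    with tf1.variable_scope("outpt"):
        w2 = tf1.get_variable("w", shape=[8, 1])
        b2 = tf1.get_variable("b", shape=[1], initializer=tf1.zeros_initializer())
        pred = tf.matmul(h, w2) + b2

    loss_score = tf.reduce_mean(tf.square(pred - y))

    # Without scope: every trainable variable. With scope: only the 'outpt' ones.
    all_vars = tf1.get_collection(tf1.GraphKeys.TRAINABLE_VARIABLES)
    output_vars = tf1.get_collection(tf1.GraphKeys.TRAINABLE_VARIABLES, scope="outpt")

    optimizer = tf1.train.AdamOptimizer(1e-3)
    train_step = optimizer.minimize(loss_score, var_list=output_vars)
    init = tf1.global_variables_initializer()

names = [v.name for v in all_vars]
with tf1.Session(graph=graph) as sess:
    sess.run(init)
    before = dict(zip(names, sess.run(all_vars)))
    feed = {
        x: np.random.rand(16, 4).astype(np.float32),
        y: np.ones((16, 1), dtype=np.float32),
    }
    sess.run(train_step, feed_dict=feed)
    after = dict(zip(names, sess.run(all_vars)))

# Names of the variables whose values changed after one training step.
changed = sorted(n for n in names if not np.array_equal(before[n], after[n]))
print("selected by scope:", [v.name for v in output_vars])
print("changed after one step:", changed)
```

Only variables listed in var_list receive updates, so the variables under `hidden` keep their initial values.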

边栏推荐

- 基于ModelArts的物体检测YOLOv3实践【玩转华为云】

- MacOS下postgresql(pgsql)数据库密码为什么不需要填写或可以乱填写

- Mysql index related knowledge review one

- LeakCanary如何监听Service、Root View销毁时机?

- 冰冰学习笔记:gcc、gdb等工具的使用

- [Cloud Residency Co-Creation] Huawei Cloud Global Scheduling Technology and Practice of Distributed Technology

- 玻璃拟态(Glassmorphism)设计风格

- Mini Program Graduation Works WeChat Food Recipes Mini Program Graduation Design Finished Products (3) Background Functions

- 解决vscode输入! 无法快捷生成骨架(新版vscode快速生成骨架的三种方法)

- The first experience of Shengsi large model experience platform——Take the small model LeNet as an example

猜你喜欢

小程序毕设作品之微信美食菜谱小程序毕业设计成品(1)开发概要

Dapr 与 NestJs ,实战编写一个 Pub & Sub 装饰器

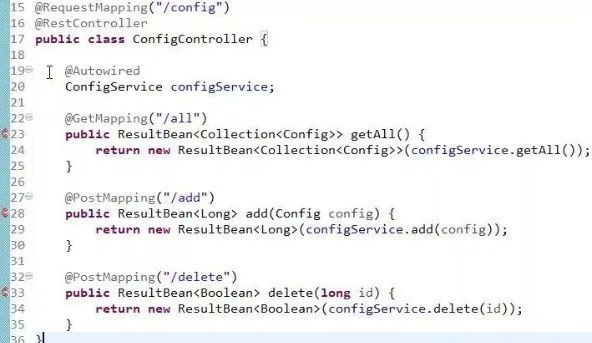

这是我见过写得最烂的Controller层代码,没有之一!

xss-labs靶场挑战

Promise learning (4) The ultimate solution for asynchronous programming async + await: write asynchronous code in a synchronous way

Promise学习(二)一篇文章带你快速了解Promise中的常用API

利用正则表达式的回溯实现绕过

C#/VB.NET 将PPT或PPTX转换为图像

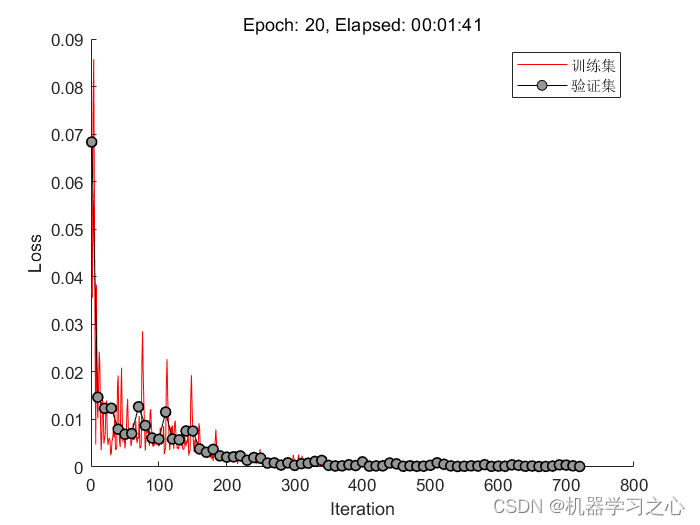

回归预测 | MATLAB实现TPA-LSTM(时间注意力注意力机制长短期记忆神经网络)多输入单输出

复现assert和eval成功连接或失败连接蚁剑的原因

随机推荐

机器学习 | MATLAB实现支持向量机回归RegressionSVM参数设定

Google Earth Engine APP——15行代码搞定一个inspector高程监测APP

玻璃拟态(Glassmorphism)设计风格

DBPack SQL Tracing 功能及数据加密功能详解

shell--第九章练习

Drawing arrows of WPF screenshot control (5) "Imitation WeChat"

LeakCanary如何监听Service、Root View销毁时机?

RK3399 platform development series on introduction to (kernel) 1.52, printk function analysis - the function call will be closed

这是我见过写得最烂的Controller层代码,没有之一!

[Cloud Residency Co-Creation] Huawei Cloud Global Scheduling Technology and Practice of Distributed Technology

Android Security and Protection Policy

博弈论(Depu)与孙子兵法(42/100)

cisco交换机基本配置命令(华为交换机保存命令是什么)

CTFshow,命令执行:web33

.NET深入解析LINQ框架(三:LINQ优雅的前奏)

PDMan-domestic free general database modeling tool (minimalist, beautiful)

图解MySQL内连接、外连接、左连接、右连接、全连接......太多了

上周热点回顾(7.25-7.31)

How I secured 70,000 ETH and won a 6 million bug bounty

我是如何保护 70000 ETH 并赢得 600 万漏洞赏金的