Detailed explanation of DenseNet and Keras reproduction code

2022-08-04 07:02:00 【Blood chef】

Original authors' open-source code: https://github.com/liuzhuang13/DenseNet

Paper: https://arxiv.org/pdf/1608.06993.pdf

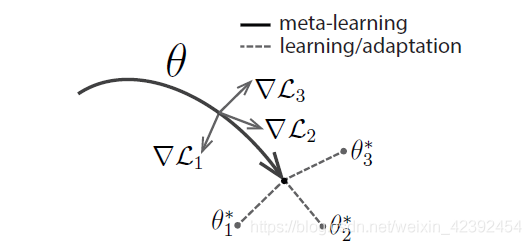

1、DenseNet

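As convolutional neural networks grow deeper, a new problem appears: after input or gradient information has passed through many layers, it may vanish or "wash out" by the time it reaches the end (or the beginning) of the network. DenseNet (Dense Convolutional Network) is mainly compared with ResNet and stochastic depth networks: it borrows their ideas of cross-layer connections and randomly dropping layers, but uses a different structure. The architecture is not complicated, yet it is very effective!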

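DenseNet has the following advantages:

- Alleviates the vanishing-gradient problem

- Strengthens feature propagation, uses parameters more efficiently, and reduces overfitting

- Encourages feature reuse

- Substantially reduces the number of parameters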

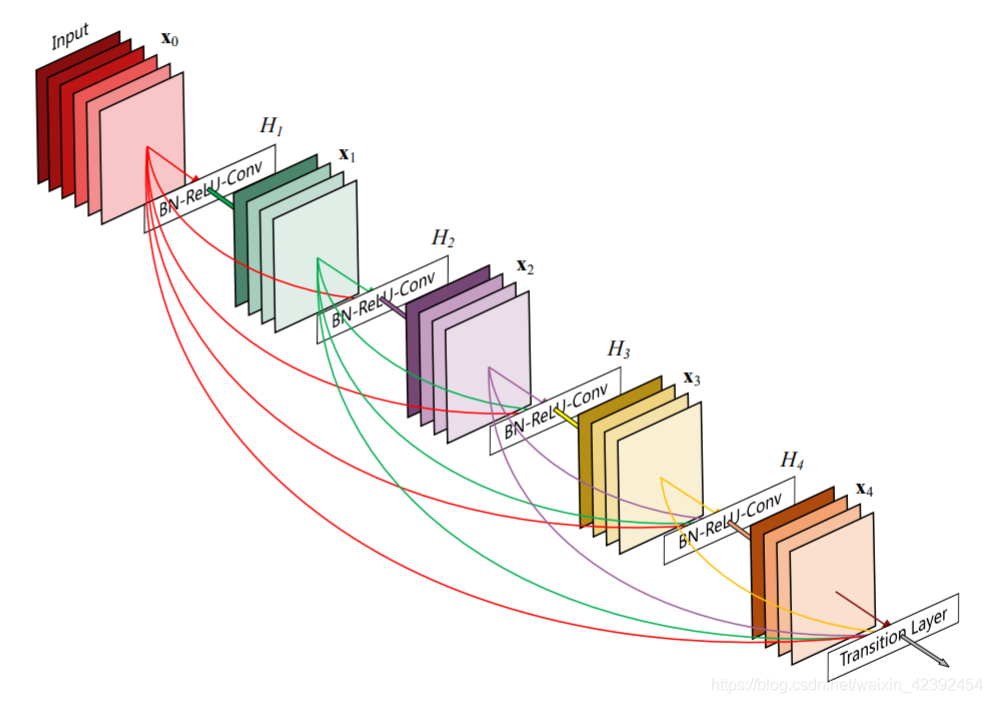

The figure shows the standard structure of a Dense Block.

Like ResNet, DenseNet uses cross-layer connections, but it has far more of them: within a dense block, every layer receives the outputs of all preceding layers.
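To make "far more connections" concrete: the paper notes that a network of $L$ layers connected this way has $L(L+1)/2$ direct connections, because layer $l$ receives the block input $x_0$ plus the outputs of layers $1, \ldots, l-1$. The pure-Python sketch below just lists those sources per layer and counts them; `dense_block_connections` is a hypothetical helper name, not something from the authors' code.

```python
def dense_block_connections(num_layers):
    """Map each layer l (1..num_layers) to the indices of the maps it receives.

    Index 0 stands for the block input x_0; layer l receives x_0 ... x_{l-1}.
    (Hypothetical helper for illustration only.)
    """
    return {l: list(range(l)) for l in range(1, num_layers + 1)}


conns = dense_block_connections(4)
for layer, sources in conns.items():
    print(f"layer {layer} <- {sources}")

total = sum(len(sources) for sources in conns.values())
print(total)  # 10, i.e. L(L+1)/2 with L = 4
```

A plain feed-forward network of the same depth would have only $L$ connections, one per layer.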

The difference is that ResNet merges the shortcut by direct element-wise addition (Add):

$$x_l = H_l(x_{l-1}) + x_{l-1}$$

whereas DenseNet uses concatenation (Concatenation):

$$x_l = H_l([x_0, x_1, \ldots, x_{l-1}])$$

where $[x_0, x_1, \ldots, x_{l-1}]$ denotes the channel-wise concatenation of the feature maps produced by layers $0, \ldots, l-1$.
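The two merge rules differ most visibly in how the channel count behaves. A minimal sketch, assuming a toy representation in which a feature map is a Python list of channels and each channel is a flat list of pixel values (real frameworks use tensors such as `(batch, H, W, C)`); `resnet_add` and `dense_concat` are hypothetical helper names.

```python
def resnet_add(h_out, x_prev):
    """ResNet-style merge: element-wise sum, so channel counts must match."""
    if len(h_out) != len(x_prev):
        raise ValueError("Add requires the same number of channels")
    return [[a + b for a, b in zip(ch_h, ch_x)] for ch_h, ch_x in zip(h_out, x_prev)]


def dense_concat(*feature_maps):
    """DenseNet-style merge: stack all channels, so channel counts add up."""
    return [ch for fm in feature_maps for ch in fm]


x0 = [[1.0, 2.0], [3.0, 4.0]]  # 2 channels, 2 pixels each
x1 = [[0.5, 0.5], [1.0, 1.0]]  # 2 channels, 2 pixels each

added = resnet_add(x1, x0)     # plays the role of H_l(x_{l-1}) + x_{l-1}
stacked = dense_concat(x0, x1) # plays the role of [x_0, x_1]
print(len(added), added)       # 2 [[1.5, 2.5], [4.0, 5.0]]
print(len(stacked))            # 4
```

Addition keeps the channel count fixed and blends $x_{l-1}$ into the sum, while concatenation keeps every earlier map intact so later layers can read it directly. In Keras the corresponding layers are `Add` and `Concatenate`.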

This is also one of the nice things about the DenseNet paper: it contains no excessive math, so even a novice like me can follow it.

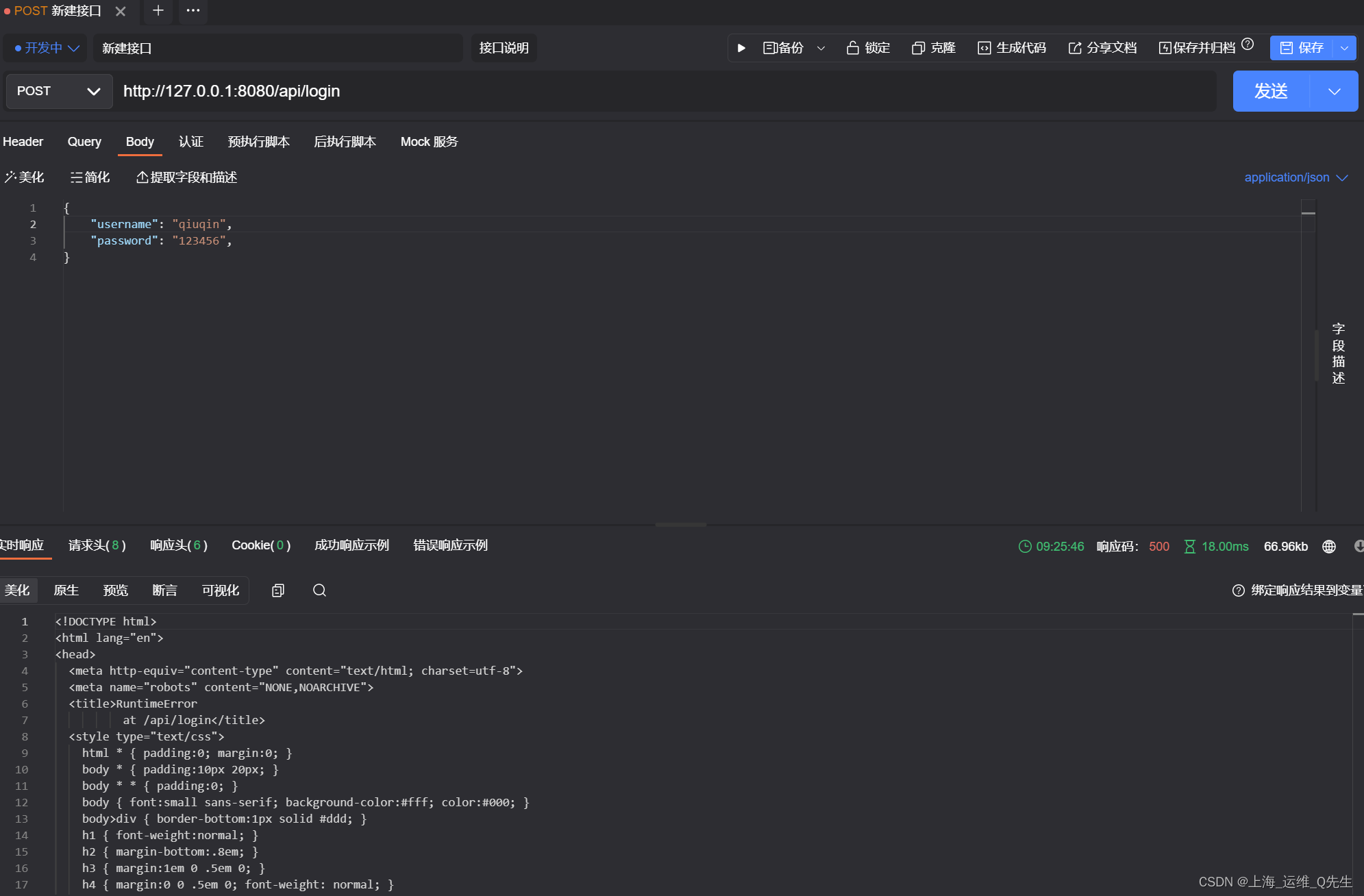

2、Implementation details

Composite function

Within a dense block, the standard composite function $H_l$ consists of three consecutive operations: BN -> Activation (ReLU) -> 3x3 Conv.
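To illustrate the order of operations in $H_l$, here is a pure-Python sketch of BN -> ReLU -> 3x3 Conv on a single 2-D channel. Assumptions: one input and one output channel, a batch of one image (so BN normalizes over that channel's pixels, with $\gamma = 1$ and $\beta = 0$), and zero padding so the 3x3 convolution preserves the spatial size, which is what allows the outputs of different layers to be concatenated. The helper names are hypothetical; a real model would use framework layers such as Keras `BatchNormalization`, `Activation("relu")` and `Conv2D(k, 3, padding="same")`.

```python
import math


def batch_norm(x, gamma=1.0, beta=0.0, eps=1e-5):
    """Normalize one 2-D channel with its own mean/variance (batch of one image)."""
    values = [v for row in x for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    scale = gamma / math.sqrt(var + eps)
    return [[(v - mean) * scale + beta for v in row] for row in x]


def relu(x):
    return [[max(0.0, v) for v in row] for row in x]


def conv3x3_same(x, kernel):
    """3x3 cross-correlation (as in DL libraries) with zero padding of 1."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-1, 2):
                for dj in range(-1, 2):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:  # pixels outside count as zero
                        acc += x[ii][jj] * kernel[di + 1][dj + 1]
            out[i][j] = acc
    return out


def composite(x, kernel):
    """H_l = BN -> ReLU -> 3x3 Conv (single channel in, single channel out)."""
    return conv3x3_same(relu(batch_norm(x)), kernel)


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
y = composite(x, identity)
print(y[0])  # [0.0, 0.0, 0.0]: below-mean pixels are zeroed by ReLU
```

Normalizing and activating before the convolution (the "pre-activation" order) means each layer sees a normalized view of the concatenated input.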

Pooling layers

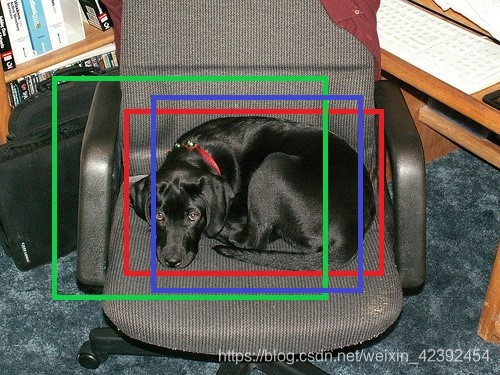

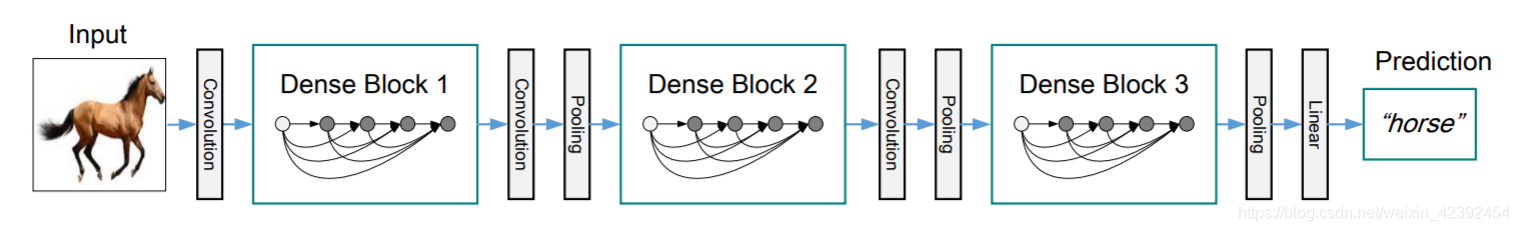

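When feature maps differ in size, they cannot be concatenated directly. Yet down-sampling, which changes the size of feature maps, is an essential part of convolutional networks. To make down-sampling possible, the network is divided into multiple dense blocks, and the layers between adjacent blocks, called transition layers, perform the down-sampling. Each transition layer consists of a 1x1 convolution followed by 2x2 average pooling (in the paper, a batch normalization layer precedes the convolution), as shown in the figure.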
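A transition layer halves the spatial size and, through the 1x1 convolution, sets the number of output channels. A minimal pure-Python sketch, assuming channel-first lists of 2-D maps with even height and width, and omitting the normalization that precedes the convolution; `conv1x1`, `avg_pool2x2` and `transition` are hypothetical names.

```python
def conv1x1(channels, weights):
    """1x1 convolution: each output channel is a per-pixel weighted sum of input channels.

    channels: list of C_in maps (H x W); weights: C_out rows of C_in weights each.
    """
    h, w = len(channels[0]), len(channels[0][0])
    return [
        [[sum(wt * ch[i][j] for wt, ch in zip(row, channels)) for j in range(w)] for i in range(h)]
        for row in weights
    ]


def avg_pool2x2(x):
    """2x2 average pooling with stride 2 (H and W assumed even)."""
    return [
        [(x[i][j] + x[i][j + 1] + x[i + 1][j] + x[i + 1][j + 1]) / 4.0 for j in range(0, len(x[0]), 2)]
        for i in range(0, len(x), 2)
    ]


def transition(channels, weights):
    """Transition layer sketch: 1x1 conv, then 2x2 average pooling (BN omitted)."""
    return [avg_pool2x2(ch) for ch in conv1x1(channels, weights)]


# 4 input channels of size 4x4; channel c is filled with the value c + 1.
x = [[[float(c + 1)] * 4 for _ in range(4)] for c in range(4)]
w = [[0.25, 0.25, 0.25, 0.25], [1.0, 0.0, 0.0, 0.0]]  # 2 output channels
y = transition(x, w)
print(len(y), len(y[0]), len(y[0][0]))  # 2 2 2
```

The output has half the height and width, so the next dense block can concatenate its own feature maps at the new resolution.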

Growth rate

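In a dense block, if each layer $H_l$ produces $k$ feature maps, then the $l$-th layer has $k_0 + k \times (l-1)$ input feature maps, where $k_0$ is the number of channels of the block's input. In code, $k$ is therefore the number of filters (output channels) of each layer's convolution. The experiments in the paper show that a relatively small growth rate $k$ is sufficient to obtain state-of-the-art results. One explanation is that each layer is connected to all preceding feature maps in its block and can therefore access the network's "collective knowledge". The feature maps can be viewed as the global state of the network, and each layer adds $k$ feature maps of its own to this state. The growth rate thus controls how much new information each layer contributes to the global state. Once written, the global state can be accessed from anywhere within the network, so, unlike in traditional architectures, there is no need to replicate it from layer to layer.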
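The channel bookkeeping in this paragraph can be checked with a few lines of Python. The values $k_0 = 16$ and $k = 12$ are chosen only for the example, and the helper names are hypothetical.

```python
def dense_block_input_channels(k0, k, num_layers):
    """Input feature maps of layers 1..num_layers: k0 + k * (l - 1)."""
    return [k0 + k * (l - 1) for l in range(1, num_layers + 1)]


def dense_block_output_channels(k0, k, num_layers):
    """Feature maps leaving the block: the block input plus k new maps per layer."""
    return k0 + k * num_layers


# Illustrative values only: k0 = 16 input channels, growth rate k = 12, 6 layers.
print(dense_block_input_channels(16, 12, 6))   # [16, 28, 40, 52, 64, 76]
print(dense_block_output_channels(16, 12, 6))  # 88
```

The growth is linear in depth: each layer still reads all earlier maps, even though it only writes $k$ new ones, which is why a small $k$ is workable.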

Bottleneck layers

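Because of the dense block design, each layer receives the outputs of all preceding layers, so its concatenated input is large, and the deeper the layer, the larger that input. The authors therefore add a 1x1 convolution before each 3x3 convolution to reduce the number of input feature maps and thus the amount of computation. In the paper, each 1x1 convolution produces $4k$ feature maps, so a bottleneck layer is BN-ReLU-Conv(1x1)-BN-ReLU-Conv(3x3).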
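To see when the 1x1 bottleneck pays off, one can count convolution weights per layer. This sketch assumes the $4k$ bottleneck width used in the paper, ignores biases and BN parameters, and uses $k = 32$ for illustration; because both convolutions run at the same spatial resolution, multiply-adds per pixel are proportional to the same numbers. Helper names are hypothetical.

```python
def conv_params(c_in, c_out, ksize):
    """Weights of a ksize x ksize convolution (bias and BN parameters ignored)."""
    return c_in * c_out * ksize * ksize


def plain_layer_params(c_in, k):
    """H_l without bottleneck: a single 3x3 conv from c_in to k channels."""
    return conv_params(c_in, k, 3)


def bottleneck_layer_params(c_in, k, width=4):
    """H_l with bottleneck: 1x1 conv to width * k channels, then 3x3 conv to k channels."""
    mid = width * k
    return conv_params(c_in, mid, 1) + conv_params(mid, k, 3)


k = 32
for c_in in (128, 512):
    print(c_in, plain_layer_params(c_in, k), bottleneck_layer_params(c_in, k))
# 128 36864 53248
# 512 147456 102400
```

With a 512-channel input the bottleneck layer needs 102,400 weights instead of 147,456, while with a 128-channel input it actually needs more. Setting $9kc_{in} = 4kc_{in} + 36k^2$ gives a break-even point of $c_{in} = 7.2k$ (about 230 channels for $k = 32$), so the bottleneck mainly helps the deeper layers of a block, whose concatenated inputs are wide.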

Compression

Although the bottleneck structure reduces parameters and computation inside each dense block, the feature maps stacked at the output of a dense block are still numerous. To control the number of feature maps between dense blocks, a compression factor $\theta$ ($0 < \theta \leq 1$) is introduced: if a dense block outputs $m$ feature maps, the following transition layer generates $\lfloor \theta m \rfloor$ feature maps. When $\theta = 1$, the number of feature maps passes through unchanged. Based on their experiments, the authors use $\theta = 0.5$.

A network that uses only the bottleneck structure is called DenseNet-B; a network that uses both the bottleneck and the compression ($\theta < 1$) structures is called DenseNet-BC.
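The effect of $\theta$ can be traced through a whole network. A sketch assuming the ImageNet DenseNet-BC setup described in the paper (an initial convolution with $2k$ output channels, $k = 32$, $\theta = 0.5$) and the block configuration (6, 12, 24, 16) of DenseNet-121; `densenet_channels` is a hypothetical name.

```python
import math


def densenet_channels(block_layers, k=32, theta=0.5, init_channels=None):
    """Track feature-map counts through dense blocks and transition layers.

    A block with n layers adds n * k channels; each transition between blocks
    keeps floor(theta * m) of the m channels it receives.
    Returns the channel count at the output of each block.
    """
    channels = 2 * k if init_channels is None else init_channels
    after_blocks = []
    for idx, n in enumerate(block_layers):
        channels += n * k
        after_blocks.append(channels)
        if idx < len(block_layers) - 1:  # no transition after the last block
            channels = math.floor(theta * channels)
    return after_blocks


print(densenet_channels((6, 12, 24, 16)))             # [256, 512, 1024, 1024]
print(densenet_channels((6, 12, 24, 16), theta=1.0))  # [256, 640, 1408, 1920]
```

With $\theta = 0.5$ the network ends with 1024 feature maps; without compression the same configuration would end with 1920.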

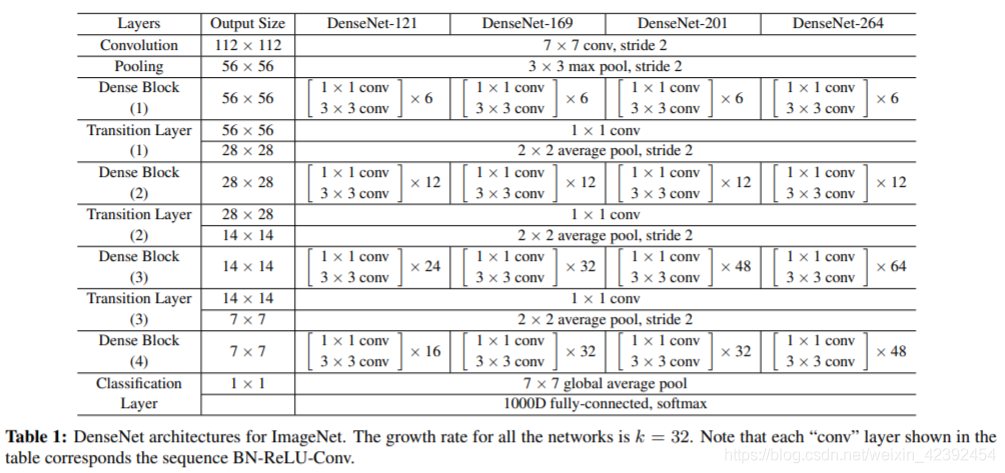

The figure above shows the common DenseNet architectures; the recommended value of $k$ is 32.
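The number in each model name counts its weighted layers. A sketch assuming the block configurations used by `keras.applications` for DenseNet121/169/201 and this counting convention: one initial 7x7 convolution, two convolutions (1x1 and 3x3) per bottleneck layer, one 1x1 convolution per transition layer, and the final fully connected layer. The names `DENSENET_BLOCKS` and `count_weighted_layers` are hypothetical.

```python
# Block configurations of DenseNet121/169/201 (layers per dense block).
DENSENET_BLOCKS = {
    121: (6, 12, 24, 16),
    169: (6, 12, 32, 32),
    201: (6, 12, 48, 32),
}


def count_weighted_layers(block_layers):
    """Initial conv + 2 convs per bottleneck layer + 1 conv per transition + final FC."""
    transitions = len(block_layers) - 1
    return 1 + 2 * sum(block_layers) + transitions + 1


for name, blocks in DENSENET_BLOCKS.items():
    print(f"DenseNet-{name}: {count_weighted_layers(blocks)} weighted layers")
```

The variants differ only in the depth of the last two dense blocks; the first two blocks are identical.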

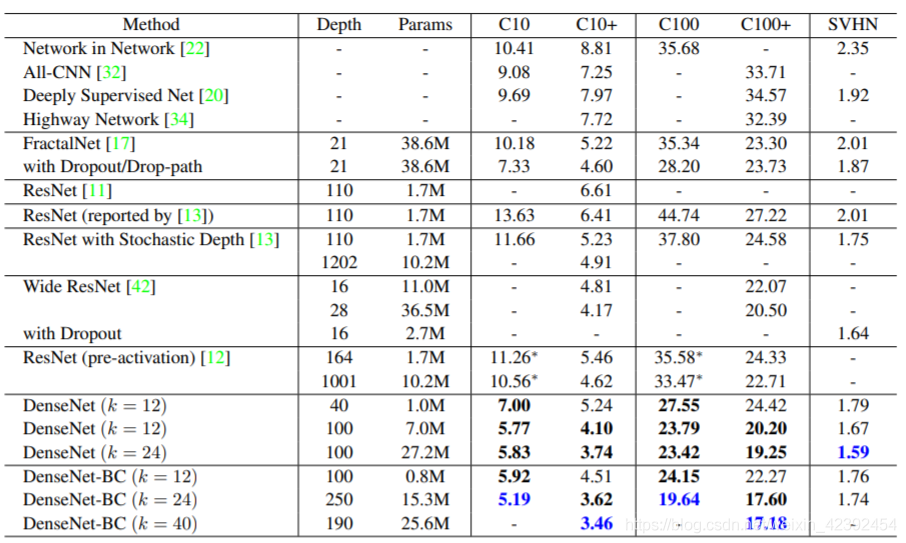

3、Experimental results

The authors trained DenseNet models on several benchmark datasets and compared them with state-of-the-art models (mainly ResNet and its variants):

As the table above shows, DenseNet reaches state-of-the-art performance with only a small growth rate (12 or 24). DenseNet-BC, which combines the bottleneck and compression structures, has far fewer parameters than ResNet and its variants, and both DenseNet and DenseNet-BC outperform ResNet on the original as well as the augmented datasets.

4、Summary and analysis

The features of the model are strongly correlated

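The direct consequence of concatenating inputs is that the feature maps learned by any DenseNet layer can be used by every subsequent layer. This encourages feature reuse throughout the network and therefore leads to more compact models: DenseNet-BC reaches accuracy comparable to ResNets with only about 1/3 of the parameters.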

Implicit deep supervision

Thanks to DenseNet's short connections, each layer can receive supervision from the loss function more directly. The effect resembles attaching a classifier to every hidden layer of the network, which forces the intermediate layers to learn discriminative features.

Stochastic vs. deterministic connections

DenseNet also resembles stochastic depth networks. In stochastic depth, layers are randomly dropped, so any two layers in the network have a small chance of becoming directly connected (when all layers between them are dropped). DenseNet's connections, by contrast, are fixed. Although the two methods are fundamentally different, both strengthen the combination of features, so that features from earlier layers can be reused later.

Drawbacks

DenseNet also has drawbacks: the repeated concatenation operations lead to a large amount of duplicated gradient information. Follow-up studies have addressed this issue.

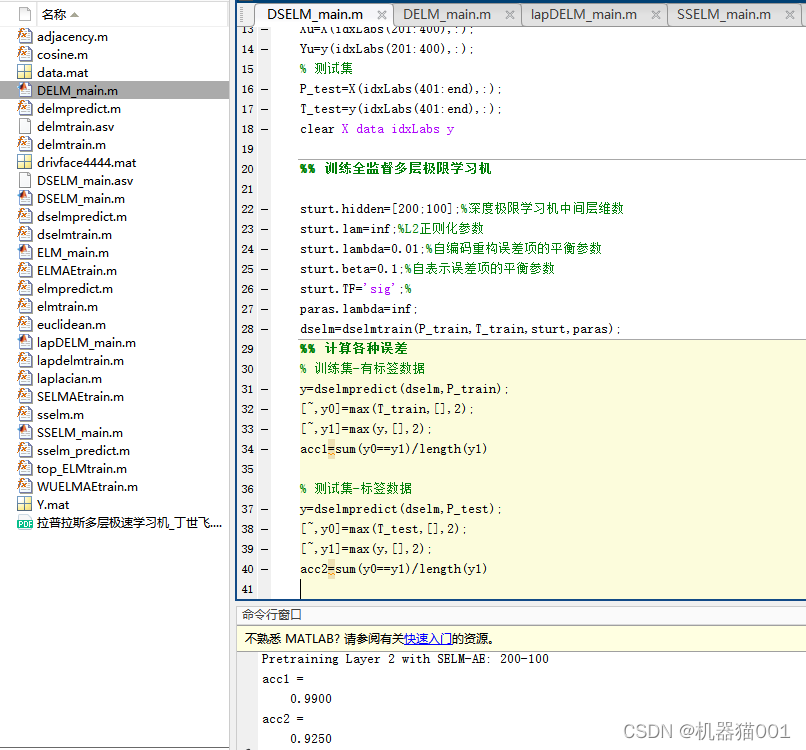

Code reproduction

边栏推荐

猜你喜欢

随机推荐

Microsoft Store 微软应用商店无法连接网络,错误代码:0x80131500

Operating System Random

golang chan

新冠病毒和网络安全的异同及思考

Flask request 返回网页中 checkbox 是否选中

天鹰优化的半监督拉普拉斯深度核极限学习机用于分类

冰歇webshell初探

Uos统信系统 chrony配置

华硕飞行堡垒系列无线网经常显示“无法连接网络” || 一打开游戏就断网

网络端口大全

把DocumentsandSettings迁移到别的盘

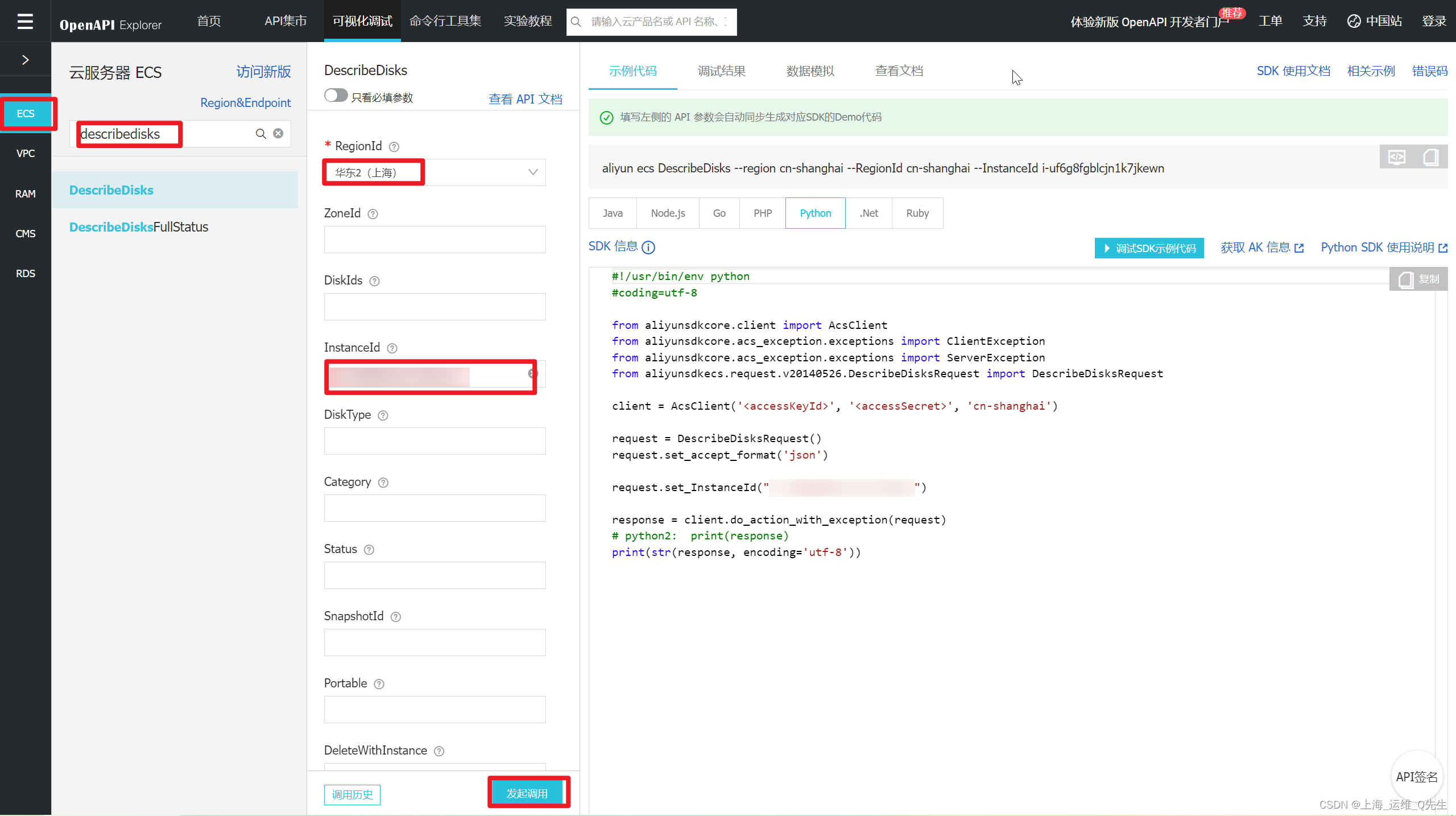

解决腾讯云DescribeInstances api查询20条记录以上的问题

硬件描述语言Verilog HDL学习笔记之模块介绍

普通用户 远程桌面连接 服务器 Remote Desktop Service

CMDB 腾讯云部分实现

【HIT-SC-MEMO1】哈工大2022软件构造 复习笔记1

JUC并发容器——阻塞队列

为什么不使用VS管理QT项目

0--100的能被3整出的数的集合打乱顺序

基于Webrtc和Janus的多人视频会议系统开发5 - 发布媒体流到Janus服务器