当前位置:网站首页>[Gan] Introduction to Gan basics and dcgan

[Gan] Introduction to Gan basics and dcgan

2022-06-22 06:56:00 【chad_ lee】

Basic Idea of GAN

Take image generation as an example , Suppose there are two networks ,G(Generator) and D(Discriminator):

- G Is a network that generates pictures , It receives a random noise z, Generate a picture from this noise , Remember to do G(z).

- D It's a discriminant network , Judge whether a picture is “ Actual ”. Its input parameter is x,x For a picture , Output D(x) representative x For the probability of a real picture , If 1, On behalf of 100% It's a real picture , And the output is 0, It means that it can't be a real picture .

Training objectives

During training , Generation network G The goal is to generate real pictures as much as possible to cheat the discrimination network D. and D The goal is to try to G The generated image is different from the real image .

The ideal result of training is G The generated image can be confused with the real , Cheated D, Use the explanation in the article as follows :

Black dotted line Represents the distribution of real samples , Blue dotted line Indicates the distribution of the discriminant probability of the discriminator , Green solid line Represents the distribution of generated samples . Z Z Z Noise representation , Z Z Z To x x x Represents the mapping of the distribution after passing through the generator (G Credit ). The figure above contains two messages :(1) generator G More and more fitting to real pictures : The green solid line increasingly coincides with the black dotted line 、 Random noise z z z after G Mapped x x x More and more consistent with the real distribution .(2) Judging device D I can't tell the difference between real and generated pictures , That is, the discriminant probability tends to 0.5, At first, the blue dotted line still distinguishes , After training, there is no distinction .

Training methods

GAN The goal of optimization :

min G max D V ( D , G ) = E x ∼ p data ( x ) [ log D ( x ) ] + E z ∼ p z ( z ) [ log ( 1 − D ( G ( z ) ) ) ] \min _{G} \max _{D} V(D, G)=\mathbb{E}_{\boldsymbol{x} \sim p_{\text {data }}(\boldsymbol{x})}[\log D(\boldsymbol{x})]+\mathbb{E}_{\boldsymbol{z} \sim p_{\boldsymbol{z}}(\boldsymbol{z})}[\log (1-D(G(\boldsymbol{z})))] GminDmaxV(D,G)=Ex∼pdata (x)[logD(x)]+Ez∼pz(z)[log(1−D(G(z)))]

- The whole formula consists of two terms . x x x Represents a real picture , z z z Indicates input G G G The noise of the network , and G ( z ) G(z) G(z) Express G G G Web generated images .

- D ( x ) D(x) D(x) Express D D D The probability of the Internet judging whether a real picture is real or not ( because x It's real , So for D D D Come on , The closer the value is 1 The better ). and D ( G ( z ) ) D(G(z)) D(G(z)) yes D D D Network judgment G G G The probability that the generated image is true or not .

- G G G Purpose : D ( G ( z ) ) D(G(z)) D(G(z)) yes D D D Network judgment G G G The probability that the generated image is real or not , G G G You should want to create your own images “ The closer to reality the better ”. in other words , G G G hope D ( G ( z ) ) D(G(z)) D(G(z)) As big as possible , At this time V ( D , G ) V(D, G) V(D,G) It's going to get smaller . So we see that the first sign of the formula is m i n G min_G minG.

- D D D Purpose : D D D The more capable , D ( x ) D(x) D(x) The bigger it should be , D ( G ( x ) ) D(G(x)) D(G(x)) The smaller it should be . At this time V ( D , G ) V(D,G) V(D,G) It's going to get bigger . So for D D D It's about maximizing m a x D max_D maxD

GAN Training process :

here G G G and D D D Alternate Training , The first step is training D D D hope V ( G , D ) V(G, D) V(G,D) The bigger the better , So we're adding gradients (ascending). Step 2 training G G G when , V ( G , D ) V(G, D) V(G,D) The smaller the better. , So it's minus the gradient (descending). The whole training process alternates .

Optimization objectives

For fixed G G G, D D D The optimal solution is

D G ∗ ( x ) = p data ( x ) p data ( x ) + p g ( x ) D_{G}^{*}(\boldsymbol{x})=\frac{p_{\text {data }}(\boldsymbol{x})}{p_{\text {data }}(\boldsymbol{x})+p_{g}(\boldsymbol{x})} DG∗(x)=pdata (x)+pg(x)pdata (x)

DCGAN

DCGAN It is also an early model .GAN Apply to image generation ,CNN Good at image processing , So the combination of the two is natural .

G G G

The length of the random input vector is 100, Four layer transposed convolution layer . Not used pooling.D and G Both of them are used batch normarlization. Get rid of FC, Make the model into a full convolution network .G Network usage ReLU As an activation function , The last layer uses tanh.D Network usage LeakyReLU As an activation function .

边栏推荐

- [5g NR] ng setup of ngap protocol

- PIP for source changing and accelerated downloading

- Anaconda introduction, installation and use nanny level tutorial

- Golang calls sdl2, plays PCM audio, and reports an error signal arrived during external code execution.

- [5g NR] RRC connection reconstruction analysis

- Great progress in code

- vue连接mysql数据库失败

- Introduction to 51 Single Chip Microcomputer -- the use of Keil uvision4

- [M32] simple interpretation of MCU code, RO data, RW data and Zi data

- How to learn 32-bit MCU

猜你喜欢

C语言——深入理解数组

Xh_CMS渗透测试文档

Rebuild binary tree

Cesium loading 3D tiles model

Py之scorecardpy:scorecardpy的简介、安装、使用方法之详细攻略

MySQL ifnull processing n/a

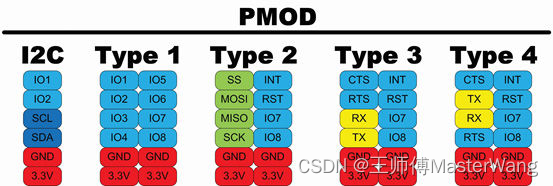

KV260的PMOD接口介绍

![[php] composer installation](/img/37/7adaca01b95085b42a116bc6b08165.png)

[php] composer installation

Py's scorecardpy: a detailed introduction to the introduction, installation and use of scorecardpy

Don't throw away the electric kettle. It's easy to fix!

随机推荐

[write CPU by yourself] implementation of exception related instructions

Theory and application of naturallanguageprocessing

JDBC查询结果集,结果集转化成表

Detailed tutorial on connecting MySQL with tableau

[M32] SCM xxx Simple interpretation of map file

仙人掌之歌——上线运营(4)

Shengxin literature learning (Part1) -- precision: a approach to transfer predictors of drug response from pre-clinical ...

Implement a timer: timer

Flink core features and principles

Introduction to 51 Single Chip Microcomputer -- the use of Proteus 8 professional

Qt development simple Bluetooth debugging assistant (low power Bluetooth)

Reprint the Alibaba open source project egg JS technical documents cause "copyright disputes". How to use the loose MIT license?

JS中如何阻止事件的传播

golang調用sdl2,播放pcm音頻,報錯signal arrived during external code execution。

EMC solutions

Record of problems caused by WPS document directory update

Leetcode--- search insertion location

Databricks from open source to commercialization

[5g NR] NAS connection management - cm status

What exactly is the open source office of a large factory like?