Transfer Learning

2022-06-11 06:07:00 【Tcoder-l3est】

Labeled Target Data and Labeled Source Data

Model Fine-tuning

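Task description:

Characteristic: there is very little target data.

With only a handful of target examples, this is called one-shot learning.

Example:

Speech recognition: the voice assistant hears only a few sentences from its user and then has to adapt to that user.

Approaches

Conservative Training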

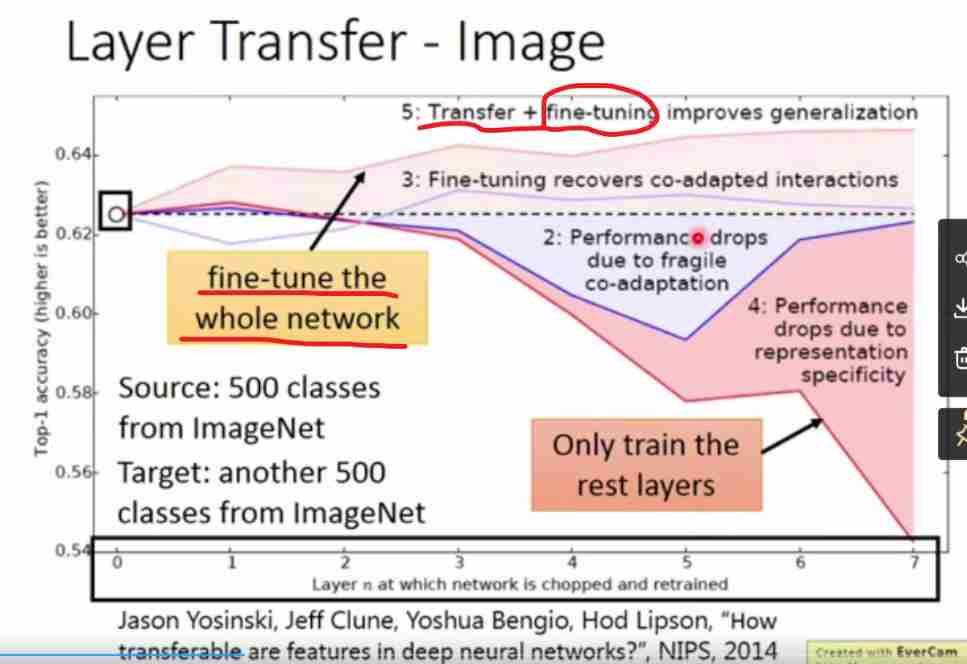

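Train a network on the large amount of source data, use its parameters to initialize another network, and then fine-tune those parameters on the target data. With so little target data this easily overfits.

Conservative training therefore constrains the new network to stay close to the old one, so the old network effectively acts as a regularizer for the new one.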
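The idea of keeping the new network close to the old one can be sketched on a one-parameter model. This is only an illustration: the linear model, the penalty form `lam * (w - w_source)^2`, and every number below are assumptions, not taken from the lecture.

```python
# Sketch of conservative training on a 1-D linear model y = w * x.
# The model, the penalty form, and all values are illustrative assumptions.

def fine_tune(w_source, data, lam, lr=0.05, steps=200):
    """Gradient descent on mean squared error + lam * (w - w_source)^2."""
    w = w_source  # initialize from the source model
    for _ in range(steps):
        # gradient of the mean squared error on the (tiny) target set
        grad_fit = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        # gradient of the penalty that keeps w near the source weight
        grad_reg = 2 * lam * (w - w_source)
        w -= lr * (grad_fit + grad_reg)
    return w

w_source = 1.0                           # pretend this came from source training
target_data = [(1.0, 3.0), (2.0, 6.5)]   # very few target examples

w_free = fine_tune(w_source, target_data, lam=0.0)          # plain fine-tuning
w_conservative = fine_tune(w_source, target_data, lam=5.0)  # regularized
```

With `lam=0.0` the weight moves all the way to whatever fits the two target points; with a larger `lam` it stays nearer to `w_source`, which is the regularizing effect described above.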

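Restricting what is trained:

Fine-tune the parameters of only one layer (the others are copied from the source network and kept fixed), which prevents overfitting.

With enough target data, fine-tune the whole network.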

Which layer?

Speech recognition: usually fine-tune the first few layers, the ones near the input.

Images: usually keep the first few layers fixed, since they perform basic feature extraction, and fine-tune the few layers near the output.

Fine-tuning combined with layer transfer gives the best results.
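A minimal sketch of fine-tuning only selected layers: the update step below touches the layers named in `trainable` and copies the rest unchanged. The layer names, weights, and gradients are made-up placeholders; a real framework would compute the gradients by backpropagation.

```python
# Sketch: update only the chosen layers, keep the rest frozen.
# Layer names, weights and gradients are hypothetical placeholders.

def sgd_step(params, grads, trainable, lr=0.1):
    """Return new parameters; only layers named in `trainable` are updated."""
    new_params = {}
    for name, weights in params.items():
        if name in trainable:
            new_params[name] = [w - lr * g for w, g in zip(weights, grads[name])]
        else:
            new_params[name] = list(weights)  # frozen: keep the source weights
    return new_params

source_params = {"layer1": [0.5, -0.2], "layer2": [1.0, 0.3], "output": [0.1, 0.7]}
grads = {"layer1": [1.0, 1.0], "layer2": [1.0, 1.0], "output": [1.0, -1.0]}

# Image-style choice: front layers fixed, layer near the output fine-tuned.
tuned_image = sgd_step(source_params, grads, trainable={"output"})
# Speech-style choice: layer near the input fine-tuned, the rest fixed.
tuned_speech = sgd_step(source_params, grads, trainable={"layer1"})
```

Passing all layer names in `trainable` corresponds to fine-tuning the whole network.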

Multitask Learning

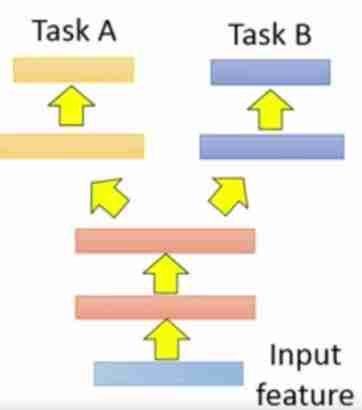

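Tasks A and B can share the same input features: they share the first layers and then branch into task-specific layers.

The input features cannot be shared at all:

Each task transforms its own input first, and some layers in the middle are shared.

Choose tasks that are actually related.

Example

Speech recognition: the sound signal goes in and is transcribed into human language.

The recognizers are trained together.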
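The shared-feature case can be sketched as one shared layer feeding two task-specific heads, with the two losses added so that both tasks train the shared layer. The layer sizes, weights, and the squared-error loss are illustrative assumptions.

```python
# Sketch of multitask learning: one shared layer, two task heads.
# All weights and the squared-error loss are illustrative assumptions.

def linear(weights, bias, x):
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, a) for a in v]

shared_W, shared_b = [[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]   # used by both tasks
head_a_W, head_a_b = [[1.0, 1.0]], [0.0]                     # task A only
head_b_W, head_b_b = [[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0]    # task B only

def forward(x):
    h = relu(linear(shared_W, shared_b, x))  # shared features
    return linear(head_a_W, head_a_b, h), linear(head_b_W, head_b_b, h)

def joint_loss(x, target_a, target_b):
    """Sum of both tasks' squared errors; its gradient reaches the shared layer."""
    out_a, out_b = forward(x)
    loss_a = sum((o - t) ** 2 for o, t in zip(out_a, target_a))
    loss_b = sum((o - t) ** 2 for o, t in zip(out_b, target_b))
    return loss_a + loss_b

out_a, out_b = forward([2.0, 1.0])
loss = joint_loss([2.0, 1.0], [2.0], [2.0, 2.0])
```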

Progressive Neural Network

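Learn task A first, then task B.

Will learning B affect task A?

The outputs of the blue network (task A) are fed into the green network (task B) as additional inputs, but backpropagation for task B does not update the blue parameters: the blue network is locked.

With more tasks, the same scheme is repeated for each new task.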
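A scalar sketch of the locking behaviour: column A's hidden output feeds column B through a lateral weight, and a training step for task B updates only B's own weight and the lateral weight. The one-unit columns, initial values, and squared-error loss are assumptions for illustration.

```python
# Sketch of a two-column progressive network with one hidden unit per column.
# Sizes, initial weights and the loss are illustrative assumptions.

def relu(v):
    return max(0.0, v)

class ProgressiveNet:
    def __init__(self, a_w):
        self.a_w = a_w   # column A weight: trained on task A, then frozen
        self.b_w = 0.1   # column B weight
        self.u = 0.1     # lateral weight from A's hidden unit into column B

    def forward(self, x):
        h_a = relu(self.a_w * x)            # frozen column A feature
        pre = self.b_w * x + self.u * h_a   # column B sees x and A's feature
        return h_a, pre, relu(pre)

    def train_step_task_b(self, x, target, lr=0.05):
        h_a, pre, y = self.forward(x)
        d_pre = 2 * (y - target) * (1.0 if pre > 0 else 0.0)
        self.b_w -= lr * d_pre * x
        self.u -= lr * d_pre * h_a
        # self.a_w is deliberately not updated: task A is unaffected
        return (y - target) ** 2

net = ProgressiveNet(a_w=2.0)
losses = [net.train_step_task_b(x=1.0, target=1.0) for _ in range(20)]
```

Because `a_w` never changes, whatever column A computed for task A before learning task B is exactly what it computes afterwards.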

Unlabeled Target Data and Labeled Source Data

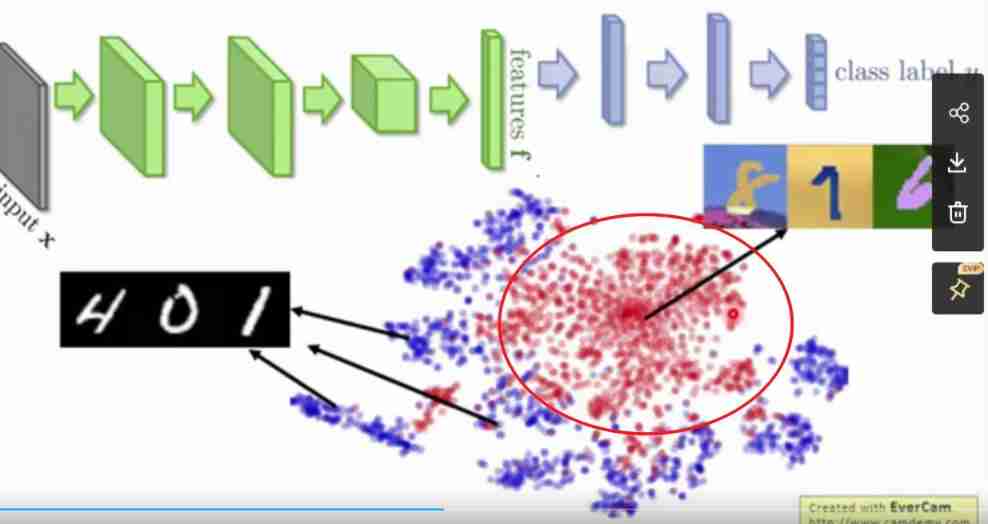

Example: handwritten digit recognition.

The target data is a different set of images, with no labels.

Treating one dataset as the training set and the other as the test set does not work well, because of the mismatch:

the two datasets follow different distributions.

Domain-adversarial Training

Map the source and target data into the same domain.

Without this, the features extracted from source and target do not overlap at all.

The feature extractor has to remove the differences between source and target as far as possible.

Fooling the domain classifier on its own is easy. Why?

The feature extractor (green) could simply output all zeros. So the features must also satisfy the label predictor.

The domain classifier fails in the end,

but it should struggle along the way!
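One common way to get this behaviour is a gradient reversal step: the feature extractor follows the label predictor's gradient but moves against the domain classifier's gradient. The notes do not say which mechanism is used, so treat the rule below, the weighting `lam`, and the numbers as assumptions.

```python
# Sketch of the gradient-reversal rule for the feature extractor's parameters.
# The rule, `lam`, and the gradient values are illustrative assumptions.

def feature_extractor_gradient(grad_label, grad_domain, lam=1.0):
    """Combine gradients: descend on label loss, ascend on domain loss."""
    return [gl - lam * gd for gl, gd in zip(grad_label, grad_domain)]

def sgd_update(params, grad, lr=0.1):
    return [p - lr * g for p, g in zip(params, grad)]

grad_label = [0.2, -0.4]    # d(label loss)/d(feature-extractor params)
grad_domain = [0.5, 0.1]    # d(domain loss)/d(feature-extractor params)

combined = feature_extractor_gradient(grad_label, grad_domain, lam=0.5)
new_params = sgd_update([1.0, 1.0], combined)
```

The domain classifier itself is still trained with the unreversed `grad_domain` direction on its own parameters, which is what keeps it struggling instead of giving up immediately.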

Zero-shot Learning

Some classes in the target data may never have appeared in the source data.

Speech recognition: recognize phonemes (phonetic symbols) rather than whole words.

***Representing each class by its attributes!*** Find a set of attributes that makes each class unique.

Training:

The network predicts the attributes of the input instead of directly outputting the final class.

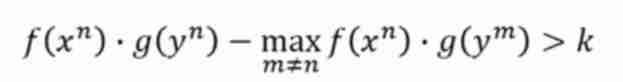

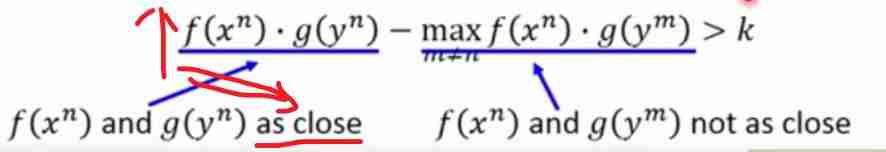

x1 and x2 are mapped by a function f into an embedding space, and their attributes y1 and y2 are mapped by a function g into the same space. When a new input x3 arrives, the same method still applies. The training goal is for f(x) and g(y) of matching pairs to be as close as possible.
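At test time, this amounts to embedding the new input with f and picking the class whose attribute embedding is nearest. In the sketch below the attribute table, the identity stand-in for g, and the pretend output of f are all hypothetical.

```python
# Sketch of zero-shot prediction by nearest attribute embedding.
# The attribute table, g, and the value of f(x3) are hypothetical.

class_attributes = {
    # (furry, four legs, has tail): made-up attribute table
    "dog":   [1, 1, 1],
    "fish":  [0, 0, 1],
    "chimp": [1, 0, 0],   # imagine this class had no training images
}

def g(attributes):
    """Stand-in for the learned attribute embedding (identity here)."""
    return [float(a) for a in attributes]

def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def nearest_class(embedding, table):
    return min(table, key=lambda name: squared_distance(embedding, g(table[name])))

f_x3 = [0.9, 0.1, 0.2]   # pretend output of f for a new image x3
predicted = nearest_class(f_x3, class_attributes)
```

The class never needs training images of its own, only an attribute vector to embed.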

But where do the attributes come from? Most likely they have to be looked up in a database.

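Modification: matching pairs should be close, and each $f(x^n)$ should also be as far as possible from the unrelated $g(y^m)$:

$$f^*, g^* = \arg\min_{f,g} \sum_n \max\Big(0,\; k - f(x^n)\cdot g(y^n) + \max_{m \neq n} f(x^n)\cdot g(y^m)\Big)$$

$k$ is called the margin. Because of the $\max(0, \cdot)$, there is a loss only when the second argument is greater than 0; when it is below 0 there is no loss. When is there no loss? When $f(x^n)\cdot g(y^n) - \max_{m \neq n} f(x^n)\cdot g(y^m) > k$.

Here $\cdot$ denotes the inner product.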
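A small sketch of a margin loss of this kind, assuming the form "max(0, k minus the matching inner product plus the largest non-matching inner product)" summed over the training pairs. The embeddings are made-up 2-D vectors standing in for the outputs of f and g.

```python
# Sketch of a margin loss over (f(x^n), g(y^n)) pairs.
# The loss form is assumed as stated in the lead-in; embeddings are made up.

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def zero_shot_margin_loss(f_embeddings, g_embeddings, k):
    total = 0.0
    for n, fx in enumerate(f_embeddings):
        matched = dot(fx, g_embeddings[n])
        # largest inner product with an attribute embedding of another class
        best_mismatch = max(dot(fx, gy)
                            for m, gy in enumerate(g_embeddings) if m != n)
        total += max(0.0, k - matched + best_mismatch)
    return total

f_emb = [[1.0, 0.0], [0.0, 1.0]]   # pretend f(x^1), f(x^2)
g_emb = [[1.0, 0.0], [0.0, 1.0]]   # pretend g(y^1), g(y^2)

loss_small_margin = zero_shot_margin_loss(f_emb, g_emb, k=0.5)
loss_large_margin = zero_shot_margin_loss(f_emb, g_emb, k=1.5)
```

With `k=0.5` every matching pair beats its best mismatch by more than the margin, so the loss is zero; with `k=1.5` the same embeddings are penalized because the gap of 1.0 is no longer enough.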

If no attributes are available: use word vectors instead.

Back to zero-shot learning:

Take a combination of known classes. Does the result land somewhere in between? This makes it possible to recognize things never seen before.
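One reading of this note is the following: weight the word vectors of known classes by the classifier's probabilities, then look for the class whose word vector is nearest to the mixture. The 2-D word vectors, class names, and probabilities below are invented for illustration.

```python
# Sketch: combine word vectors of seen classes, then find the nearest class.
# Word vectors, class names and probabilities are invented for illustration.

word_vectors = {
    "lion":  [1.0, 0.0],
    "tiger": [0.0, 1.0],
    "liger": [0.5, 0.5],    # never seen by the classifier
    "dog":   [-1.0, -1.0],
}

def combine(probabilities, vectors):
    """Probability-weighted average of the seen classes' word vectors."""
    dims = len(next(iter(vectors.values())))
    return [sum(p * vectors[name][d] for name, p in probabilities.items())
            for d in range(dims)]

def nearest_word(point, vectors):
    def squared_distance(v):
        return sum((a - b) ** 2 for a, b in zip(point, v))
    return min(vectors, key=lambda name: squared_distance(vectors[name]))

seen_class_probs = {"lion": 0.5, "tiger": 0.5}   # classifier is torn between two
mixed = combine(seen_class_probs, word_vectors)
guess = nearest_word(mixed, word_vectors)
```

The mixture lands between the two seen classes, and the nearest word vector belongs to a class that has no training examples.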

Unlabeled Source and Labeled Target Data

Self-taught Learning

Similar to semi-supervised learning, with one big difference: the unlabeled source data may be unrelated to the target task and of a different kind.

Unlabeled Source and Unlabeled Target Data

Self-taught Clustering

led Target Data

led Target Data Self-taught Clustering

边栏推荐

- qmake 实现QT工程pro脚本转vs解决方案

- NFC Development -- the method of using NFC mobile phones as access control cards (II)

- Get the value of program exit

- Servlet

- Sword finger offer 04: find in 2D array

- PgSQL reports an error: current transaction is aborted, commands ignored until end of transaction block

- 使用Batch读取注册表

- FPGA面试题目笔记(四)—— 序列检测器、跨时钟域中的格雷码、乒乓操作、降低静动态损耗、定点化无损误差、恢复时间和移除时间

- Getting started with kotlin

- handler

猜你喜欢

Summarize the five most common BlockingQueue features

Sword finger offer 50: the first character that appears only once

我们真的需要会议耳机吗?

Can Amazon, express, lazada and shrimp skin platforms use the 911+vm environment to carry out production number, maintenance number, supplement order and other operations?

How to use perforce helix core with CI build server

FPGA面试题目笔记(二)——同步异步D触发器、静动态时序分析、分频设计、Retiming

山东大学项目实训之examineListActivity

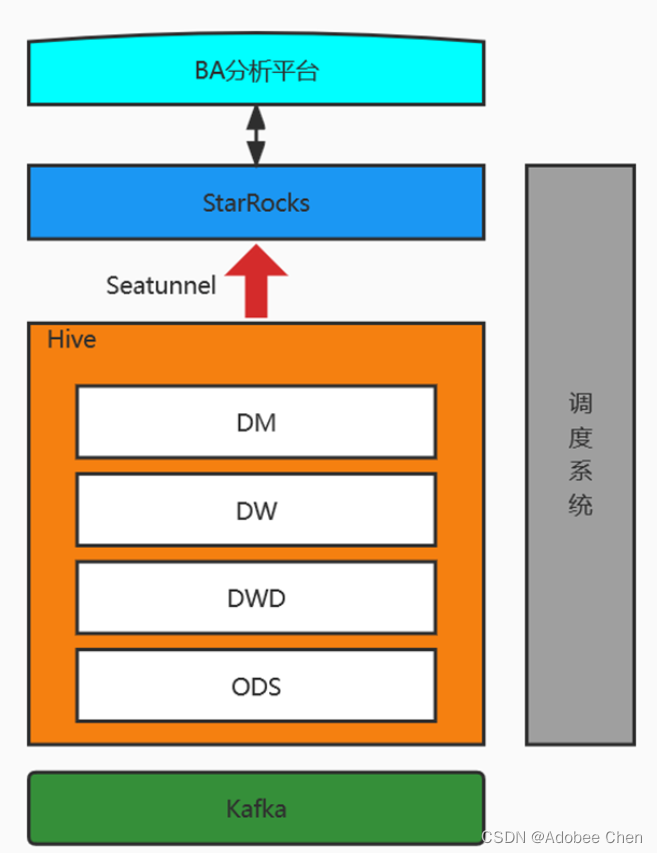

Implementation of data access platform scheme (Youzu network)

Servlet

![Chapter 4 of machine learning [series] naive Bayesian model](/img/77/7720afe4e28cd55284bb365a16ba62.jpg)

Chapter 4 of machine learning [series] naive Bayesian model

随机推荐

All questions and answers of database SQL practice niuke.com

Using Internet of things technology to accelerate digital transformation

NLP-D46-nlp比赛D15

Sword finger offer 50: the first character that appears only once

PgSQL reports an error: current transaction is aborted, commands ignored until end of transaction block

The meaning in the status column displayed by PS aux command

Growth Diary 01

Principle of copyonwritearraylist copy on write

Sqli-labs less-01

Login and registration based on servlet, JSP and MySQL

Installing MySQL for Linux

Eureka集群搭建

What happened to the young man who loved to write code -- approaching the "Yao Guang young man" of Huawei cloud

[daily exercises] 1 Sum of two numbers

All the benefits of ci/cd, but greener

使用Genymotion Scrapy控制手机

Simple understanding of pseudo elements before and after

Cocoatouch framework and building application interface

Devsecops in Agile Environment

Continuous update of ansible learning