"Seamless" deployment of PaddleNLP models with the OpenVINO™ toolkit

2022-06-26 00:20:00 [Intel Edge Computing Community]

Task background

- Sentiment analysis

Sentiment analysis aims to analyze, process, summarize, and reason about subjective text that carries emotional tone. It is widely used in consumer decision-making, public opinion analysis, and personalized recommendation, and has high commercial value. For example, the fresh-food retailer 食行生鲜 automatically generates review tags for its food items to help users decide what to buy and to guide its operations and procurement teams in adjusting product selection and promotion strategy; Fangtianxia shows home buyers and developers how users rate each property and pins highly rated properties to the top of its recommendations; Gome built an intelligent service-scoring system that cut customer-service operating costs by 40% and reached a 100% handling rate for negative feedback.

- Natural language processing (NLP)

Natural language processing (NLP) is a field spanning computer science, information engineering, and artificial intelligence. It focuses on language interaction between humans and computers and studies how to process and use natural language. In recent years, with the development of deep learning and related technologies, NLP research has made major breakthroughs. Researchers have designed a variety of models and methods to solve NLP problems; common ones include LSTM, BERT, GRU, Transformer, and GPT.

Project introduction

This solution trains the model with the PaddleNLP toolkit and deploys it efficiently on Intel platforms with the OpenVINO™ toolkit. This article mainly shares how to "seamlessly" deploy a PaddlePaddle BERT model with the OpenVINO™ toolkit and how to verify its output.

- PaddleNLP

PaddleNLP is an easy-to-use yet powerful natural language processing library. It brings together high-quality pre-trained models from across the industry and offers an out-of-the-box development experience. Its model zoo covers many NLP scenarios and comes with industrial practice examples, so developers can customize it flexibly.

- OpenVINO™ toolkit

The OpenVINO™ toolkit is the native deep learning inference framework for Intel platforms. Since its launch in 2018, Intel has helped hundreds of thousands of developers substantially improve AI inference performance and extend their applications from edge computing to the enterprise and the client. On the eve of the 2022 Mobile World Congress in Barcelona, Intel launched a new version of the Intel Distribution of OpenVINO™ toolkit. Its new features are based mainly on developer feedback from the past three and a half years and include a wider choice of deep learning models, more device portability options, and higher inference performance with fewer code changes. To better support Paddle models, the new version of the OpenVINO™ toolkit adds the following upgrades:

- Direct support for Paddle format models

The OpenVINO™ toolkit 2022.1 release now supports PaddlePaddle models directly. The toolkit's Model Optimizer tool can convert Paddle models offline directly, and the runtime API can also read a Paddle model and load it onto the specified hardware device, skipping offline conversion altogether. This greatly improves the efficiency of Paddle developers deploying on Intel platforms. After performance and accuracy validation, the 2022.1 release directly supports 13 Paddle models covering 5 major application scenarios, including networks that are very popular with developers such as PP-YOLO and PP-OCR.

Figure: The Model Optimizer (MO) and Inference Engine (IE) of the OpenVINO™ toolkit accept Paddle models directly as input
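As a minimal sketch of the runtime path described above, the snippet below reads a Paddle inference model straight from its `.pdmodel` file and compiles it for a device, with no offline conversion step. It assumes the `openvino` Python package (2022.1 or later) is installed and that the matching `.pdiparams` weights file sits next to the model file. The helper name `load_paddle_model`, the suffix check, and any file path passed to it are illustrative choices, not part of the original article.

```python
from pathlib import Path


def load_paddle_model(model_path: str, device: str = "CPU"):
    """Read a Paddle inference model and compile it for the given device.

    Hypothetical helper for illustration. The OpenVINO import is kept inside
    the function so this module can be imported without the package.
    """
    path = Path(model_path)
    # Illustrative guard: this sketch only handles Paddle inference models.
    if path.suffix != ".pdmodel":
        raise ValueError(f"expected a .pdmodel file, got {path.name!r}")

    from openvino.runtime import Core  # assumes openvino >= 2022.1

    core = Core()
    # read_model parses the Paddle model directly; no IR files are produced.
    model = core.read_model(model=str(path))
    # compile_model loads the network onto the target device, e.g. "CPU".
    return core.compile_model(model, device)
```

A call such as `load_paddle_model("bert/model.pdmodel")` (a hypothetical path) would return a compiled model ready for inference on the CPU.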

- Full support for dynamic input shapes

To accommodate a wider range of models, the CPU plugin in OpenVINO™ 2022.1 now supports dynamic input shapes, which makes it easier to deploy networks such as NLP or OCR models. Without calling reshape, users of the OpenVINO™ toolkit can pass images or vectors of different shapes as input data. The toolkit automatically adjusts the model structure and memory at runtime to further optimize inference performance for dynamic shapes.

Figure: Dynamic input shape in NLP

For details, see: https://docs.openvino.ai/latest/openvino_docs_OV_UG_DynamicShapes.html
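To make the contrast concrete, here is a hedged sketch. `pad_to_fixed_length` shows the extra step a static-shape model would typically need, while `infer_dynamic` feeds a token sequence of any length to an already compiled model as it is. Both helper names are hypothetical, and the input names `input_ids` and `token_type_ids` are assumptions about how the BERT model was exported; check the actual names on your model. `numpy` and an OpenVINO 2022.1+ compiled model are assumed for the second function.

```python
from typing import List


def pad_to_fixed_length(token_ids: List[int], max_len: int, pad_id: int = 0) -> List[int]:
    """Static-shape path: truncate or pad every sequence to max_len."""
    clipped = token_ids[:max_len]
    return clipped + [pad_id] * (max_len - len(clipped))


def infer_dynamic(compiled_model, token_ids: List[int], token_type_ids: List[int]):
    """Dynamic-shape path: pass the sequence at its natural length.

    `compiled_model` is assumed to be an OpenVINO CompiledModel whose
    sequence dimension is dynamic; the input names below are assumptions.
    """
    import numpy as np  # imported lazily so the padding helper has no dependencies

    inputs = {
        "input_ids": np.array([token_ids], dtype=np.int64),
        "token_type_ids": np.array([token_type_ids], dtype=np.int64),
    }
    request = compiled_model.create_infer_request()
    request.infer(inputs)
    # Copy the first output so the result outlives the request's buffer.
    return request.get_output_tensor(0).data.copy()
```

With dynamic shapes, two sentences of 7 and 52 tokens can be sent through `infer_dynamic` back to back, with no padding to a common length and no reshape call in between.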

Introduction to BERT

- BERT architecture

BERT (Bidirectional Encoder Representations from Transformers) uses the Transformer encoder as the basic building block of the network. It is pre-trained on a large-scale unlabeled text corpus with two tasks, the masked language model (Masked Language Model) and next sentence prediction (Next Sentence Prediction), which yields a general semantic representation model that incorporates bidirectional context. Starting from this pre-trained model, adding a simple task-specific output layer and fine-tuning is enough to apply it to downstream NLP tasks, and the result is usually better than a model trained directly on the downstream task. BERT previously achieved SOTA results on the GLUE benchmark.

As can be seen, the model structure is the encoder layers of the Transformer. By plugging the inputs and outputs of a specific task into BERT, the Transformer's powerful attention mechanism can model many downstream tasks, such as sentence-pair relation classification, single-text topic classification, question answering (QA), and single-sentence tagging (named entity recognition). BERT training can be divided into pre-training and fine-tuning.

- Pre-training tasks

BERT is a multi-task model: its pre-training consists of two self-supervised tasks, MLM and NSP.

MLM means randomly masking some words in the input corpus during training and then predicting each masked word from its context. The task closely resembles the cloze exercises common in middle school. Just as traditional language model algorithms are a natural match for RNNs, the nature of MLM is a natural match for the structure of the Transformer.
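The masking step can be sketched in a few lines of plain Python. This is a simplified illustration, not the article's training code: the function name `mask_tokens` is hypothetical, and the default 15% masking rate comes from the original BERT paper rather than from this article. The original recipe also sometimes substitutes a random token or leaves the selected token unchanged; this sketch always writes `[MASK]`.

```python
import random
from typing import List, Optional, Tuple

MASK_TOKEN = "[MASK]"


def mask_tokens(
    tokens: List[str], mask_prob: float = 0.15, seed: Optional[int] = None
) -> Tuple[List[str], List[Optional[str]]]:
    """Randomly replace tokens with [MASK] and record what was hidden.

    Returns (masked_tokens, labels). labels[i] holds the original token at
    masked positions and None elsewhere, so a model is only scored on the
    positions it has to predict from context.
    """
    rng = random.Random(seed)
    masked: List[str] = []
    labels: List[Optional[str]] = []
    for token in tokens:
        if rng.random() < mask_prob:
            masked.append(MASK_TOKEN)
            labels.append(token)
        else:
            masked.append(token)
            labels.append(None)
    return masked, labels
```

Calling `mask_tokens("the cat sat on the mat".split())` returns the sentence with a random subset of words hidden, together with the labels the model must recover.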

The Next Sentence Prediction (NSP) task is to judge whether sentence B is the sentence that follows sentence A. If so, the output is 'IsNext'; otherwise it is 'NotNext'. Training data is generated by randomly drawing two consecutive sentences from the corpus. In 50% of the cases the two sentences are kept, so they satisfy the IsNext relation; in the other 50% the second sentence is replaced with one drawn at random from the corpus, so their relation is NotNext.
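The 50/50 sampling scheme can be sketched as follows. This is an illustrative helper under simplifying assumptions: the name `make_nsp_pairs` is hypothetical, the corpus is a single ordered list of sentences, and negatives are drawn from that same list instead of from a different document.

```python
import random
from typing import List, Optional, Tuple


def make_nsp_pairs(
    sentences: List[str], seed: Optional[int] = None
) -> List[Tuple[str, str, str]]:
    """Build (sentence_a, sentence_b, label) triples for NSP training.

    For each sentence except the last, keep its true successor about half
    of the time ("IsNext"); otherwise pair it with a randomly chosen other
    sentence ("NotNext").
    """
    if len(sentences) < 3:
        raise ValueError("need at least 3 sentences to sample a negative")
    rng = random.Random(seed)
    pairs: List[Tuple[str, str, str]] = []
    for i in range(len(sentences) - 1):
        first = sentences[i]
        if rng.random() < 0.5:
            pairs.append((first, sentences[i + 1], "IsNext"))
        else:
            # Exclude the sentence itself and its true successor.
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((first, rng.choice(candidates), "NotNext"))
    return pairs
```

Each triple can then be encoded as one `[CLS] A [SEP] B [SEP]` input, with the label serving as the binary classification target.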

After BERT has been pre-trained on a massive monolingual corpus, it can be applied to individual NLP tasks. BERT has been applied to 11 different tasks, and each needs only one additional output layer on top of BERT to complete task-specific fine-tuning. These tasks resemble an exam paper in the humanities, with multiple-choice questions, short-answer questions, and so on. Fine-tuning tasks include:

- Classification tasks based on sentence pairs

- Classification tasks based on a single sentence

- Question answering (QA) tasks

- Named entity recognition

边栏推荐

猜你喜欢

MySQL master-slave replication

EBS R12.2.0升级到R12.2.6

EasyConnect连接后显示未分配虚拟地址

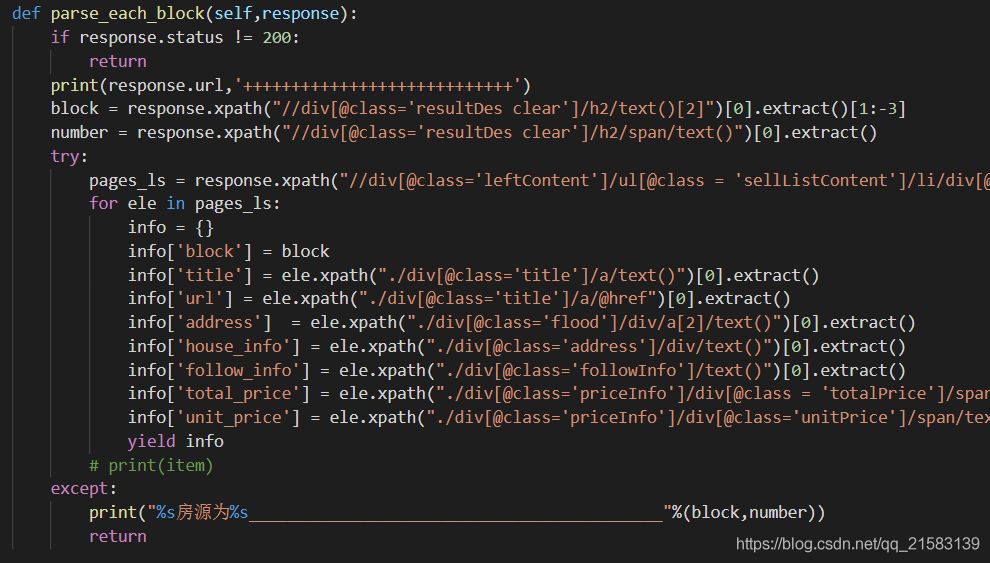

Notes on the method of passing items from the spider file to the pipeline in the case of a scratch crawler

How ASA configures port mapping and pat

Introduction to anchor free decision

Xiaohongshu microservice framework and governance and other cloud native business architecture evolution cases

(Reprint) visual explanation of processes and threads

POSTMAN测试出现SSL无响应

Regular expression introduction and some syntax

随机推荐

Darkent2ncnn error

2021-04-28

Frequently asked questions about redis

Redis之常见问题

《SQL优化核心思想》

Shredding Company poj 1416

On the use of bisection and double pointer

元宇宙中的法律与自我监管

性能领跑云原生数据库市场!英特尔携腾讯共建云上技术生态

SMT操作员是做什么的?工作职责?

迅为RK3568开发板使用RKNN-Toolkit-lite2运行测试程序

About Simple Data Visualization

SSL unresponsive in postman test

19c installing PSU 19.12

P3052 [USACO12MAR]Cows in a Skyscraper G

Bit compressor [Blue Bridge Cup training]

7. common instructions (Part 2): common operations of v-on, v-bind and V-model

yolov5 提速多GPU训练显存低的问题

redux工作流程+小例子的完整代码

Setting up a cluster environment under Linux (2) -- installing MySQL under Linux