DO280: Allocating Persistent Storage

2022-06-29 01:03:00 【It migrant worker brother goldfish】

About the author: Hello everyone, I am Brother Goldfish, a CSDN rising-star creator in the operations and maintenance field, a Huawei Cloud sharing expert, and an Alibaba Cloud community expert blogger.

Qualifications: CCNA, HCNP, CSNA (network analyst), junior and intermediate network engineer (soft exam), RHCSA, RHCE, RHCA, RHCI, ITIL

Motto: Hard work doesn't guarantee success, but if you want to succeed, you must work hard.

Contents

- Persistent storage

- Overview of persistent storage

- Persistent storage scenarios

- Concepts related to persistent storage

- Persistent storage plug-ins

- PV access modes

- Persistent Volume Storage Classes

- Creating PV and PVC resources

- Using NFS for PVs

- NFS reclaim policy

- Supplemental groups

- Using block storage through fsGroup

- SELinux and volume security

- SELinuxContext options

- Course exercise

- Summary

Persistent storage

Overview of persistent storage

By default, running containers use ephemeral storage inside the container. Pods consist of one or more containers that are deployed together, share the same storage and other resources, and can be created, started, stopped, or destroyed at any time. Using ephemeral storage means that data written to the file system inside the container is lost when the container stops.

When a container needs its data to persist after it stops, OpenShift uses Kubernetes persistent volumes (PVs) to provide persistent storage for pods.

Persistent storage scenarios

A database is the typical case. A database pod is started with the default ephemeral storage. If you destroy and recreate the database pod, the ephemeral storage is destroyed and the data is lost. With persistent storage, the database stores its data in a persistent volume outside the pod. If you destroy and recreate the pod, the database application continues to access the same external storage that holds the data.

Concepts related to persistent storage

A persistent volume (PV) is an OpenShift resource that only OpenShift administrators create and destroy. A persistent volume resource represents network-attached storage that is accessible to all OpenShift nodes.

Persistent storage components:

OCP uses the Kubernetes persistent volume (PV) framework to let administrators provision persistent storage for the cluster. Developers use persistent volume claims (PVCs) to request PV resources without needing to know the underlying storage infrastructure.

Persistent Volume: A PV is a resource in the OpenShift cluster, defined by a PersistentVolume API object, that represents a piece of existing networked storage provisioned by the administrator. It is a cluster resource, just as a node is a cluster resource. The life cycle of a PV is independent of any individual pod that uses it.

Persistent Volume Claim: A PVC is defined by a PersistentVolumeClaim API object and represents a developer's request for storage. It is similar to a pod: pods consume node resources, and PVCs consume PV resources.
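To make the PVC concept concrete, here is a minimal sketch of a claim manifest. The claim name and the requested size are hypothetical values chosen for illustration; they do not come from the course material.

```yaml
# Hypothetical claim: name and size are illustrative only.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-claim
spec:
  accessModes:
    - ReadWriteOnce        # request single-node read/write access
  resources:
    requests:
      storage: 1Gi         # minimum capacity the bound PV must offer
```

The developer states only what is needed (access mode and size); which storage back end satisfies the claim is left to the administrator.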

Persistent storage plug-ins

A volume is a mounted file system that is available to pods and their containers, and it can be backed by a number of local or network-attached storage back ends. OpenShift uses plug-ins to support the following back ends for persistent storage:

- NFS

- GlusterFS

- OpenStack Cinder

- Ceph RBD

- AWS Elastic Block Store (EBS)

- GCE Persistent Disk

- iSCSI

- Fibre Channel

- Azure Disk and Azure File

- FlexVolume (allows for the extension of storage back-ends that do not have a built-in plug-in)

- VMware vSphere

- Dynamic Provisioning and Creating Storage Classes

- Volume Security

- Selector-Label Volume Binding

PV access modes

A PV can be mounted on a host in any way supported by the resource provider. Providers have different capabilities, and each PV's access modes are set to the specific modes supported by that particular volume. For example, NFS can support multiple read/write clients, but a specific NFS PV might be exported on the server as read-only.

Each PV gets its own set of access modes, which describe the capabilities of that specific PV.

The access modes are shown in the following table:

| Access mode | CLI abbreviation | Description |
|---|---|---|
| ReadWriteOnce | RWO | The volume can be mounted as read/write by a single node |
| ReadOnlyMany | ROX | The volume can be mounted read-only by many nodes |
| ReadWriteMany | RWX | The volume can be mounted as read/write by many nodes |

PV claims are matched to volumes with similar access modes. The only two matching criteria are access modes and size. A claim's access modes represent a request, so a user can be granted more access, but never less. For example, if a claim requests RWO but the only volume available is an NFS PV (RWO+ROX+RWX), the claim matches the NFS PV because it supports RWO.

All volumes with the same modes are grouped, and then sorted by size, from smallest to largest.

The service on the master that is responsible for binding PVs to PVCs receives the group with matching modes and iterates over it in size order until one size matches, and then binds that PV to the PVC.
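The RWO example above can be sketched as a pair of manifests. All names, the export path, the server, and the sizes are assumptions made for illustration, and the sketch assumes the cluster has no default storage class that would be injected into the claim.

```yaml
# Hypothetical NFS PV that advertises all three access modes.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-nfs-pv
spec:
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteOnce
    - ReadOnlyMany
    - ReadWriteMany
  nfs:
    path: /exports/example      # assumed export path
    server: nfs.example.com     # assumed NFS server
---
# Hypothetical claim that asks only for RWO and 2Gi.
# The PV above supports RWO and is at least 2Gi, so it is a candidate.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-rwo-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
```

If this PV were bound, the claim would report the full 5Gi capacity of the volume: the claim can receive more than it asked for, but never less.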

Persistent Volume Storage Classes

PV claims can optionally request a specific storage class by specifying its name in the storageClassName attribute. Only PVs of the requested class, that is, PVs with the same storage class name as the PVC, can be bound to the PVC.

The cluster administrator can set a default storage class for all PVCs, or configure dynamic provisioners to service one or more storage classes that match the specifications in an available PVC.
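As a minimal sketch of the storageClassName attribute, the claim below requests a class named example-class. That class name, the claim name, and the size are hypothetical; a class with that name would have to exist in the cluster for the claim to bind.

```yaml
# Hypothetical claim that names a storage class explicitly.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-class-claim
spec:
  storageClassName: example-class   # assumed class name
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```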

Creating PV and PVC resources

PVs are resources in the cluster. PVCs are requests for those resources and also act as claim checks to the resource. The interaction between PVs and PVCs follows this life cycle:

- Create the persistent volume

The cluster administrator creates any number of PVs. These PVs represent the details of the actual storage that cluster users can consume through the OpenShift API.

- Define a persistent volume claim

A user creates a PVC with a specific amount of storage, specific access modes, and an optional storage class. The master watches for new PVCs and either finds a matching PV or waits for a provisioner for the storage class to create one, and then binds them together.

- Use persistent storage

Pods use claims as volumes. The cluster inspects the claim to find the bound volume and mounts that volume for the pod. For volumes that support multiple access modes, the user specifies which mode is desired when using the claim as a volume in a pod.

Once a user has a claim and that claim is bound, the bound PV belongs to the user for as long as it is in use. Users schedule pods and access their claimed PVs by including a persistentVolumeClaim in the pod's volumes block.
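The last step of the life cycle can be sketched as a pod definition that references a claim in its volumes block. The pod name, image, mount path, and claim name below are placeholders, not values from the course.

```yaml
# Hypothetical pod that consumes a bound claim as a volume.
apiVersion: v1
kind: Pod
metadata:
  name: example-pod
spec:
  containers:
    - name: app
      image: registry.example.com/example/app:latest   # placeholder image
      volumeMounts:
        - name: data
          mountPath: /var/lib/data     # where the volume appears in the container
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: example-claim       # must match a PVC in the same project
```

The pod refers only to the claim name; it never names the PV or the storage back end directly.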

Using NFS for PVs

OpenShift runs containers with random UIDs, so mapping Linux users from OpenShift nodes to users on the NFS server does not work as intended. NFS shares used as OpenShift PVs must be configured as follows:

- Owned by the nfsnobody user and group.

- Have rwx------ permissions (that is, 0700).

- Exported with the all_squash option.

Sample configuration:

/var/export/vol *(rw,async,all_squash)

Other NFS export options, such as sync and async, do not matter to OpenShift; it works with either one. In high-latency environments, however, adding the async option speeds up write operations to the NFS share (for example, when pushing images to the registry).

The async option is faster because the NFS server replies to the client as soon as the request is processed, without waiting for the data to be written to disk.

With the sync option, by contrast, the NFS server replies to the client only after the data has been written to disk.

Note: The NFS share's file system size and user quotas have no effect on OpenShift. A PV's size is specified in the PV resource definition. If the actual file system is smaller, the PV is still created and bound. If the file system is larger than the declared PV size, OpenShift does not limit the space used to the specified PV size, and allows containers to use all the free space on the file system. OpenShift provides storage quotas and storage placement restrictions that can be used to control resource allocation in projects.

The default SELinux policy does not allow containers to access NFS shares. The policy must be changed on every OpenShift node by setting the virt_use_nfs and virt_sandbox_use_nfs booleans to true.

# setsebool -P virt_use_nfs=true

# setsebool -P virt_sandbox_use_nfs=true

NFS reclaim policy

NFS implements the OpenShift Recyclable plug-in interface. Automatic processes handle reclamation tasks based on the policy set on each persistent volume.

By default, persistent volumes are set to Retain. The Retain reclaim policy allows manual reclamation of the resource. When the PV claim is deleted, the persistent volume still exists and is considered released. It is not yet available for another claim, however, because the previous claimant's data remains on the volume. At that point an administrator can reclaim the volume manually.

NFS volumes whose reclaim policy is set to Recycle are scrubbed after they are released from their claim. For example, when the reclaim policy of an NFS volume is set to Recycle, an rm -rf command is run on the volume after the user's PV claim bound to it is deleted. Once recycled, the NFS volume can be bound directly to a new PV claim.
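For contrast with Recycle, the following is a minimal sketch of an NFS PV that keeps its data after the claim is deleted. The PV name, server, export path, and size are hypothetical.

```yaml
# Hypothetical NFS PV with the Retain reclaim policy.
apiVersion: v1
kind: PersistentVolume
metadata:
  name: example-retain-pv
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteMany
  nfs:
    path: /exports/example-retain   # assumed export path
    server: nfs.example.com         # assumed NFS server
  # Retain: after the claim is deleted the PV becomes Released and
  # the data stays on the share until an administrator cleans it up.
  persistentVolumeReclaimPolicy: Retain
```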

Supplemental groups

Supplemental groups are regular Linux groups. When a process runs in Linux, it has a UID, a GID, and one or more supplemental groups. These attributes can be set for a container's main process.

Supplemental group IDs are typically used to control access to shared storage such as NFS and GlusterFS, whereas fsGroup is used to control access to block storage such as Ceph RBD or iSCSI.

OpenShift shared storage plug-ins mount volumes so that the POSIX permissions on the mount match the permissions on the target storage. For example, if the target storage's owner ID is 1234 and its group ID is 5678, then the mount on the host node and in the container has those same IDs. The container's main process must therefore match one or both of those IDs to access the volume.

[[email protected] ~]# showmount -e

Export list for master.lab.example.com:

/var/export/nfs-demo *

[[email protected] ~]# cat /etc/exports.d/nfs-demo.conf

/var/export/nfs-demo

...

[[email protected] ~]# ls -lZ /var/export/nfs-demo -d

drwx------. 10000000 650000 unconfined_u:object_r:usr_t:s0 /var/export/nfs-demo

In this example, UID 10000000 and group 650000 can access the /var/export/nfs-demo export. In general, containers should not run as the root user. In this NFS example, containers that do not run as UID 10000000 and are not members of group 650000 cannot access the NFS export.
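One way to give a pod the matching group is the supplementalGroups field of the pod-level securityContext. The sketch below reuses group 650000 and the export from the example above; the pod name, image, and mount path are placeholders. It assumes the export's mode actually grants group access (for example 0770) and that the pod's SCC admits this group ID.

```yaml
# Hypothetical pod whose main process joins group 650000.
apiVersion: v1
kind: Pod
metadata:
  name: example-nfs-pod
spec:
  securityContext:
    supplementalGroups:
      - 650000                     # group that owns the NFS export
  containers:
    - name: app
      image: registry.example.com/example/app:latest   # placeholder image
      volumeMounts:
        - name: nfsdata
          mountPath: /mnt/nfs
  volumes:
    - name: nfsdata
      nfs:
        server: master.lab.example.com
        path: /var/export/nfs-demo
```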

Using block storage through fsGroup

fsGroup defines a pod's "file-system group" ID, which is added to the container's supplemental groups. The supplemental group ID applies to shared storage, whereas the fsGroup ID is used for block storage.

Block storage, such as Ceph RBD, iSCSI, and various cloud storage types, is typically dedicated to a single pod. Unlike shared storage, block storage is taken over by the pod, meaning that the user and group IDs supplied in the pod (or image) definition are applied to the actual physical block device. Block storage is normally not shared.
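A minimal sketch of fsGroup in a pod definition follows. The group ID 5555, the pod name, the image, and the claim name are hypothetical; whether a given fsGroup value is admitted depends on the SCC that applies to the pod.

```yaml
# Hypothetical pod that sets fsGroup for a block-storage volume.
apiVersion: v1
kind: Pod
metadata:
  name: example-block-pod
spec:
  securityContext:
    fsGroup: 5555                  # illustrative group ID applied to the volume
  containers:
    - name: app
      image: registry.example.com/example/app:latest   # placeholder image
      volumeMounts:
        - name: blockdata
          mountPath: /var/lib/blockdata
  volumes:
    - name: blockdata
      persistentVolumeClaim:
        claimName: example-block-claim   # assumed claim backed by block storage
```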

SELinux and volume security

All predefined security context constraints, except the privileged SCC, set seLinuxContext to MustRunAs. The SCCs most likely to match a pod's requirements therefore force the pod to use an SELinux policy. The SELinux policy used by a pod can be defined in the pod itself, in the image, in the SCC, or in the project (which provides the default).

SELinux labels can be defined in the seLinuxOptions section of a pod's securityContext, and support the user, role, type, and level labels.
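The four labels can be sketched in a pod definition as follows. Every label value shown is illustrative rather than taken from the course, and the SCC that applies to the pod must allow the values for the pod to be admitted.

```yaml
# Hypothetical pod that sets SELinux labels explicitly.
apiVersion: v1
kind: Pod
metadata:
  name: example-selinux-pod
spec:
  securityContext:
    seLinuxOptions:
      user: system_u             # illustrative SELinux user
      role: system_r             # illustrative SELinux role
      type: container_t          # illustrative SELinux type
      level: "s0:c123,c456"      # illustrative MCS level
  containers:
    - name: app
      image: registry.example.com/example/app:latest   # placeholder image
```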

SELinuxContext options

- MustRunAs

Requires seLinuxOptions to be configured if pre-allocated values are not used. Uses seLinuxOptions as the default and validates against seLinuxOptions.

- RunAsAny

No default is provided. Allows any seLinuxOptions to be specified.
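Where these strategies are set can be sketched with an SCC fragment. The SCC name is hypothetical, the apiVersion is an assumption (older clusters expose the object under v1), and the other strategy fields are filled with typical values only so that the fragment is complete.

```yaml
# Hypothetical SCC showing the seLinuxContext strategy.
apiVersion: security.openshift.io/v1   # assumed API group/version
kind: SecurityContextConstraints
metadata:
  name: example-scc
allowPrivilegedContainer: false
runAsUser:
  type: MustRunAsRange
seLinuxContext:
  type: MustRunAs      # no seLinuxOptions given, so pre-allocated values apply
fsGroup:
  type: MustRunAs
supplementalGroups:
  type: RunAsAny
```

Changing seLinuxContext.type to RunAsAny would remove the default and accept whatever seLinuxOptions a pod specifies.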

Course exercise

Preparing the environment

[[email protected] ~]$ lab install-prepare setup

[[email protected] ~]$ cd /home/student/do280-ansible

[[email protected] do280-ansible]$ ./install.sh

Tip: Skip this step if you already have a complete environment.

Preparing for this exercise

[[email protected] ~]$ lab deploy-volume setup

Configuring NFS

This lab does not explain NFS configuration and creation in detail. It uses the /root/DO280/labs/deploy-volume/config-nfs.sh script directly; you can view the script's contents as shown below.

NFS is provided by the services node.

[[email protected] ~]# less -FiX /root/DO280/labs/deploy-volume/config-nfs.sh

[[email protected] ~]# /root/DO280/labs/deploy-volume/config-nfs.sh # Create the NFS export

Export directory /var/export/dbvol created.

[[email protected] ~]# showmount -e # Verify

Export list for services.lab.example.com:

/exports/prometheus-alertbuffer *

/exports/prometheus-alertmanager *

/exports/prometheus *

/exports/etcd-vol2 *

/exports/logging-es-ops *

/exports/logging-es *

/exports/metrics *

/exports/registry *

/var/export/dbvol *

Mounting NFS on the nodes

[[email protected] ~]# mount -t nfs services.lab.example.com:/var/export/dbvol /mnt

[[email protected] ~]# mount | grep /mnt

services.lab.example.com:/var/export/dbvol on /mnt type nfs4 (rw,relatime,vers=4.1,rsize=262144,wsize=262144,namlen=255,hard,proto=tcp,port=0,timeo=600,retrans=2,sec=sys,clientaddr=172.25.250.11,local_lock=none,addr=172.25.250.13)

[[email protected] ~]# ll -a /mnt/ # Check the permissions

total 0

drwx------. 2 nfsnobody nfsnobody 6 Mar 3 15:38 .

dr-xr-xr-x. 17 root root 224 Aug 16 2018 ..

[[email protected] ~]# umount /mnt/

Run the same mount test on node2. Unmount the share after the test; OpenShift mounts the NFS share automatically when it is needed.

Create persistent volume

[[email protected] ~]$ oc login -u admin -p redhat https://master.lab.example.com

[[email protected] ~]$ less -FiX /home/student/DO280/labs/deploy-volume/mysqldb-volume.yml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: mysqldb-volume
spec:
  capacity:
    storage: 3Gi
  accessModes:
    - ReadWriteMany
  nfs:
    path: /var/export/dbvol
    server: services.lab.example.com
  persistentVolumeReclaimPolicy: Recycle

[[email protected] ~]$ oc create -f /home/student/DO280/labs/deploy-volume/mysqldb-volume.yml

[[email protected] ~]$ oc get pv # View the PVs

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

etcd-vol2-volume 1G RWO Retain Bound openshift-ansible-service-broker/etcd 5d

mysqldb-volume 3Gi RWX Recycle Available 4s

registry-volume 40Gi RWX Retain Bound default/registry-claim

Create project

[[email protected] ~]$ oc login -u developer -p redhat https://master.lab.example.com

[[email protected] ~]$ oc new-project persistent-storage

Deploy the application

[[email protected] ~]$ oc new-app --name=mysqldb \
    --docker-image=registry.lab.example.com/rhscl/mysql-57-rhel7 \
    -e MYSQL_USER=ose \
    -e MYSQL_PASSWORD=openshift \
    -e MYSQL_DATABASE=quotes

[[email protected] ~]$ oc status # Verify

In project persistent-storage on server https://master.lab.example.com:443

svc/mysqldb - 172.30.234.19:3306

dc/mysqldb deploys istag/mysqldb:latest

deployment #1 deployed 45 seconds ago - 1 pod

2 infos identified, use 'oc status -v' to see details.

Configure persistent volumes

[[email protected] ~]$ oc describe pod mysqldb | grep -A2 'Volumes'

# View the pod's current volumes

Volumes:

mysqldb-volume-1:

Type: EmptyDir (a temporary directory that shares a pod's lifetime)

[[email protected] ~]$ oc set volumes dc mysqldb \
    --add --overwrite --name=mysqldb-volume-1 -t pvc \
    --claim-name=mysqldb-pvclaim \
    --claim-size=3Gi \
    --claim-mode='ReadWriteMany' # Modify the dc and create the PVC

[[email protected] ~]$ oc describe pod mysqldb | grep -E -A 2 'Volumes|ClaimName' # Verify

Volumes:

mysqldb-volume-1:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: mysqldb-pvclaim

ReadOnly: false

default-token-fp8gq:

[[email protected] ~]$ oc get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysqldb-pvclaim Bound mysqldb-volume 3Gi RWX 1m

Port forwarding

[[email protected] ~]$ oc get pod

NAME READY STATUS RESTARTS AGE

mysqldb-2-7npfx 1/1 Running 0 6m

[[email protected] ~]$ oc port-forward mysqldb-2-7npfx 3306:3306

Forwarding from 127.0.0.1:3306 -> 3306

Testing the database

[[email protected] ~]$ mysql -h127.0.0.1 -uose -popenshift \
    quotes < /home/student/DO280/labs/deploy-volume/quote.sql # Load the test data

[[email protected] ~]$ mysql -h127.0.0.1 -uose -popenshift \
    quotes -e "select count(*) from quote;" # Confirm that the data was loaded

[[email protected] ~]$ ssh [email protected] ls -la /var/export/dbvol # View the data on the NFS server

……

drwxr-x---. 2 nfsnobody nfsnobody 8192 Mar 3 16:14 performance_schema

-rw-------. 1 nfsnobody nfsnobody 1676 Mar 3 16:14 private_key.pem

-rw-r--r--. 1 nfsnobody nfsnobody 452 Mar 3 16:14 public_key.pem

drwxr-x---. 2 nfsnobody nfsnobody 54 Mar 3 16:32 quotes

-rw-r--r--. 1 nfsnobody nfsnobody 1079 Mar 3 16:14 server-cert.pem

-rw-------. 1 nfsnobody nfsnobody 1680 Mar 3 16:14 server-key.pem

……

[[email protected] ~]$ ssh [email protected] ls -la /var/export/dbvol/quotes

total 212

drwxr-x---. 2 nfsnobody nfsnobody 54 Mar 3 16:32 .

drwx------. 6 nfsnobody nfsnobody 4096 Mar 3 16:14 ..

-rw-r-----. 1 nfsnobody nfsnobody 65 Mar 3 16:14 db.opt

-rw-r-----. 1 nfsnobody nfsnobody 8584 Mar 3 16:32 quote.frm

-rw-r-----. 1 nfsnobody nfsnobody 98304 Mar 3 16:32 quote.ibd

Delete PV

[[email protected] ~]$ oc delete project persistent-storage # Delete the project

project "persistent-storage" deleted

[[email protected] ~]$ oc login -u admin -p redhat

[[email protected] ~]$ oc delete pv mysqldb-volume # Delete PV

persistentvolume "mysqldb-volume" deleted

Verifying persistence and cleaning up the lab

Check whether the data is retained after the PV is deleted.

[[email protected] ~]$ ssh [email protected] ls -la /var/export/dbvol

……

drwxr-x---. 2 nfsnobody nfsnobody 54 Mar 3 16:32 quotes

……

[[email protected] ~]$ ssh [email protected] rm -rf /var/export/dbvol/* # Use rm to delete the data completely

[[email protected] ~]$ ssh [email protected] ls -la /var/export/dbvol

total 0

drwx------. 2 nfsnobody nfsnobody 6 Mar 3 16:38 .

drwxr-xr-x. 3 root root 19 Mar 3 15:38 ..

[[email protected] ~]$ lab deploy-volume cleanup

Summary

RHCA certification requires studying for and passing five exams, which takes a lot of study and preparation time. Keep going, you can do it 🤪.

That concludes Brother Goldfish's brief introduction to DO280 chapter 6, allocating persistent storage. I hope it helps those who read this article.

This article is part of the RHCA column: RHCA memoir

If this article 【 article 】 It helps you , I hope I can give 【 Brother goldfish 】 Point a praise , It's not easy to create , Compared with the official statement , I prefer to use 【 Easy to understand 】 To explain every point of knowledge with your writing .

If there is a pair of 【 Operation and maintenance technology 】 Interested in , You are welcome to pay attention to ️️️ 【 Brother goldfish 】️️️, I will bring you great 【 Harvest and surprise 】!

边栏推荐

- be based on. NETCORE development blog project starblog - (13) add friendship link function

- Comparison between winding process and lamination process

- 学习通否认 QQ 号被盗与其有关:已报案;iPhone 14 量产工作就绪:四款齐发;简洁优雅的软件早已是明日黄花|极客头条

- How to carry token authentication in websocket JS connection

- [staff] pedal mark (step on pedal ped mark | release pedal * mark | corresponding pedal command in MIDI | continuous control signal | switch control signal)

- 流媒体集群应用与配置:如何在一台服务器部署多个EasyCVR?

- Accessories and working process of machine vision system

- Mask wearing face data set and mask wearing face generation method

- EasyCVR接入Ehome协议的设备,无法观看设备录像是什么原因?

- Maximum path and problem (cherry picking problem)

猜你喜欢

Analysis Framework -- establishment of user experience measurement data system

How to calculate the income tax of foreign-funded enterprises

Blazor University (34) forms - get form status

What is redis

EasyCVR集群版本替换成老数据库造成的服务崩溃是什么原因?

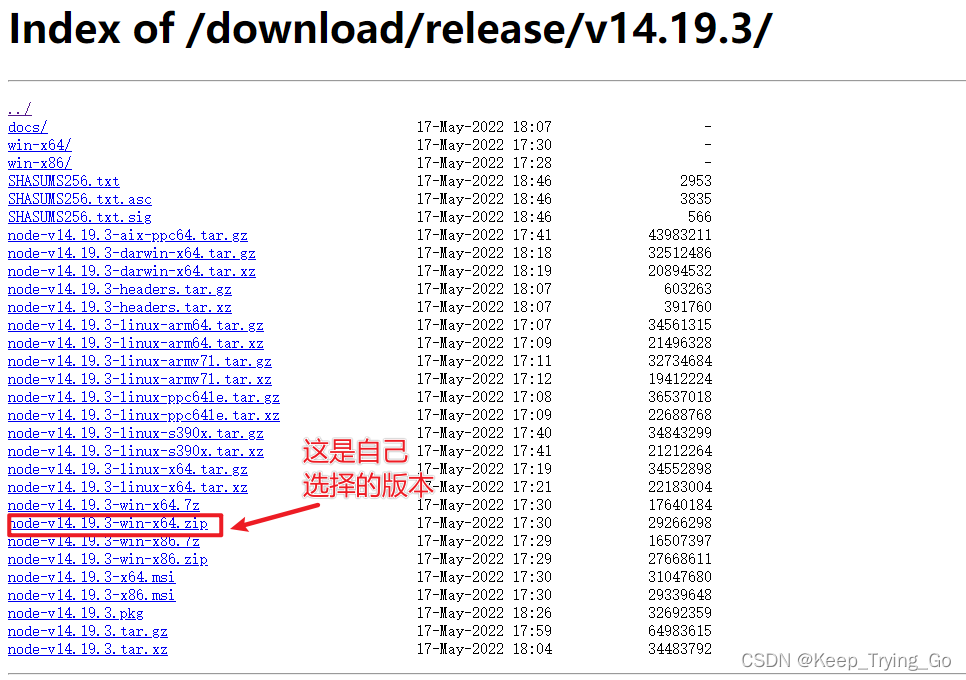

Nodejs installation and download

cocoscreator动态切换SkeletonData实现骨骼更新

Breadth first search to catch cattle

Go1.18 new feature: discard strings Title Method, a new pit!

基于.NetCore开发博客项目 StarBlog - (13) 加入友情链接功能

随机推荐

[leetcode] 522. 最长特殊序列 II 暴力 + 双指针

基于.NetCore开发博客项目 StarBlog - (13) 加入友情链接功能

Connected to rainwater series problems

Click hijack: X-FRAME-OPTIONS is not configured

Sampling with VerilogA module

How to solve the problem of Caton screen when easycvr plays video?

华泰证券安全吗

[Architect (Part 38)] locally install the latest version of MySQL database developed by the server

Maximum path and problem (cherry picking problem)

Misunderstanding of innovation by enterprise and it leaders

Bmfont make bitmap font and use it in cocoscreator

Analysis of basic structure and working principle of slip ring

[UVM] my main_ Why can't the case exit when the phase runs out? Too unreasonable!

狼人杀休闲游戏微信小程序模板源码/微信小游戏源码

Reference materials in the process of using Excel

Count the number of different palindrome subsequences in the string

机器视觉系统的配件及工作过程

[agile 5.1] core of planning: user stories

UI高度自适应的修改方案

How to mount FSS object storage locally