
Highly scalable: EMQX 5.0 reaches 100 million MQTT connections

2022-06-21 20:39:00 【51CTO】

Abstract

As IoT devices are connected and deployed at ever-larger scale, IoT messaging platforms face higher demands on scalability and robustness. To confirm that EMQX, a cloud-native distributed MQTT message broker, can fully meet the needs of today's IoT connection scale, we established 100 million MQTT connections on a 23-node EMQX cluster and stress-tested EMQX's scalability.

In this test, every MQTT client subscribed to a unique wildcard topic, which requires more CPU resources than a direct (non-wildcard) topic. For publishing, we chose a one-to-one publisher-subscriber topology, and the cluster processed 1 million messages per second. We also compared how the maximum subscription rate changes as the cluster grows when using two different database backends, RLOG DB and Mnesia. This article describes the tests in detail, along with some of the challenges we met along the way.

Background

EMQX is a highly scalable, distributed, open-source MQTT message broker built on the Erlang/OTP platform that supports millions of concurrent clients. To do so, EMQX needs to persist and replicate various data across cluster nodes, such as MQTT topics and their subscribers, routing information, ACL rules, and various configurations. To meet this need, EMQX has used Mnesia as its database backend since its first release.

Mnesia is an embedded, ACID-compliant distributed database built on Erlang/OTP. It uses full-mesh, point-to-point Erlang distribution for transaction coordination and replication. This design makes it hard to scale horizontally: the more nodes there are, the more copies of the data must be replicated, the higher the cost of coordinating writes, and the greater the risk of a split-brain scenario.
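To give a feel for why a full mesh gets expensive, the short Python sketch below counts the point-to-point links in a full mesh of n nodes, which is n(n-1)/2. It only counts connections; it is not a model of Mnesia's actual replication or coordination cost, and the function name is purely illustrative.

```python
def full_mesh_links(n: int) -> int:
    """Number of point-to-point links when each of n nodes connects to every other node."""
    return n * (n - 1) // 2


# Link counts for a few cluster sizes, including the 23-node cluster used in this test.
for nodes in (3, 10, 23):
    print(f"{nodes} nodes -> {full_mesh_links(nodes)} links")
```

The count grows quadratically, so going from 10 to 23 nodes raises the number of links from 45 to 253.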

In EMQX 5.0, we try to mitigate this problem with a new database backend type, RLOG (Replication Log), which is implemented with Mria. As an extension of the Mnesia database, Mria defines two types of nodes to help it scale horizontally. Core nodes behave like ordinary Mnesia nodes and participate in write transactions. Replicant nodes do not participate in transactions; they delegate transactions to the core nodes and keep a read-only copy of the data locally. Because fewer nodes are involved in transactions, the risk of split-brain drops considerably and less coordination is needed for transaction processing. At the same time, because every node can read the data locally, read-only access remains fast.
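The division of labor between the two node types can be illustrated with a toy Python model. This is only a sketch of the idea described above (writes go through a core node, replicants replay a log and serve reads locally). It is not Mria's implementation: the class and method names are hypothetical, there is a single core node instead of several coordinating ones, and there is no networking or transaction handling.

```python
from dataclasses import dataclass, field


@dataclass
class CoreNode:
    """Hypothetical core node: applies writes and records them in a replication log."""
    table: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def write(self, key, value):
        self.table[key] = value
        self.log.append((key, value))


@dataclass
class ReplicantNode:
    """Hypothetical replicant: delegates writes to the core, keeps a local read-only copy."""
    core: CoreNode
    table: dict = field(default_factory=dict)
    applied: int = 0  # number of log entries already replayed locally

    def write(self, key, value):
        # The replicant does not take part in the write itself; the core does the work.
        self.core.write(key, value)

    def sync(self):
        # Replay the log entries this replicant has not applied yet.
        for key, value in self.core.log[self.applied:]:
            self.table[key] = value
        self.applied = len(self.core.log)

    def read(self, key):
        # Reads are served from the local copy, without contacting the core.
        return self.table.get(key)


core = CoreNode()
replicant_a, replicant_b = ReplicantNode(core), ReplicantNode(core)

replicant_a.write("bench/1/#", "subscriber-1")  # delegated to the core node
replicant_a.sync()
replicant_b.sync()
print(replicant_b.read("bench/1/#"))
```

In this toy model a replicant that has not yet synced simply returns nothing for a new key, which hints at the trade-off: local reads are cheap, but a replicant's copy follows the core's log rather than taking part in each write.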

Before making this new database backend the default, we needed to stress-test it and verify that it really does scale horizontally. We built a 23-node EMQX cluster holding 100 million concurrent connections, split evenly between publishers and subscribers, with messages published one-to-one. We also compared the RLOG DB backend with the traditional Mnesia backend and confirmed that RLOG sustains a higher connection arrival rate than Mnesia.

Test method

We used AWS CDK to deploy and run the cluster tests. It let us test different instance types and counts, and try out different development branches of EMQX. Interested readers can find our scripts in this GitHub repository. On the load generator nodes ("loadgens" for short), we used our emqtt-bench tool to generate connection, publish, and subscribe traffic with various options, and we used the EMQX Dashboard and Prometheus to monitor test progress and instance health.

We ran the tests step by step with different instance types and counts. For the final runs, we settled on c6g.metal instances for both the EMQX nodes and the loadgens, and a "3+20" cluster topology: 3 core nodes that participate in write transactions, plus 20 replicant nodes that hold read-only replicas and delegate writes to the core nodes. As for the loadgens, we observed that publisher clients need far more resources than subscribers. Connecting and subscribing 100 million connections takes only 13 loadgen instances; if publishing is also required, 17 are needed.

No load balancer was used in these tests; the loadgens connected directly to each node. To keep the core nodes dedicated to managing database transactions, we made no client connections to them. Each loadgen's clients connected directly to the replicant nodes in an even distribution, so connection counts and resource usage were roughly the same across those nodes. Every subscriber subscribed with QoS 1 to a wildcard topic of the form bench/%i/#, where %i is a number unique to each subscriber. Every publisher published with QoS 1 to a topic of the form bench/%i/test, where %i matches a subscriber's %i. This ensures that each publisher has exactly one subscriber. The message payload was always 256 bytes.
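The one-to-one pairing created by this topic scheme can be checked with a small Python sketch of MQTT topic-filter matching. The matcher below is a simplified illustration written for this article, not EMQX's routing code: it handles the "+" and "#" wildcards, assumes the filter is well formed ("#" only as the last level), and ignores special cases such as topics beginning with "$". The helper names are hypothetical.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a topic filter ('+' and '#' supported)."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            return True  # multi-level wildcard matches everything from here on
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # literal level differs
    return len(filter_levels) == len(topic_levels)


# Five subscribers, each with its own wildcard filter of the form bench/%i/#.
subscriptions = {i: f"bench/{i}/#" for i in range(1, 6)}


def receivers(topic: str) -> list:
    """Subscriber numbers whose filter matches the given topic."""
    return [i for i, flt in subscriptions.items() if topic_matches(flt, topic)]


print(receivers("bench/3/test"))
```

Because the %i level is compared as a whole string, bench/1/# does not match bench/10/test, so every publisher's topic reaches exactly one subscriber.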

In the test, we first connected all the subscriber clients and then started connecting the publishers. Only after all publishers were connected did they start publishing, one message every 90 seconds each. In the 100-million-connection test, subscribers and publishers connected to the brokers at a rate of 16,000 connections per second, although we believe the cluster can sustain a higher connection rate.
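A back-of-the-envelope calculation in Python helps put these parameters in perspective. It uses only figures stated in this article (100 million connections, half of them publishers, 16,000 connections per second, one 256-byte message per publisher every 90 seconds). Treating 16,000 connections per second as the combined rate for all clients is an assumption, and the results are idealized averages rather than measurements.

```python
TOTAL_CONNECTIONS = 100_000_000
CONNECT_RATE = 16_000        # connections per second (assumed to be the combined rate)
PUBLISHERS = 50_000_000      # half of the connections are publishers
PUBLISH_INTERVAL_S = 90      # each publisher sends one message every 90 seconds
PAYLOAD_BYTES = 256


def ramp_up_seconds(total: int, rate: int) -> float:
    """Time needed to open `total` connections at a steady `rate` per second."""
    return total / rate


def publish_rate(publishers: int, interval_s: int) -> float:
    """Average messages per second when each publisher sends once per interval."""
    return publishers / interval_s


ramp = ramp_up_seconds(TOTAL_CONNECTIONS, CONNECT_RATE)
rate = publish_rate(PUBLISHERS, PUBLISH_INTERVAL_S)
payload_mb_per_s = rate * PAYLOAD_BYTES / 1e6

print(f"ramp-up: {ramp:.0f} s (~{ramp / 60:.0f} min)")
print(f"publish rate: {rate:,.0f} msg/s")
print(f"payload volume: {payload_mb_per_s:.1f} MB/s in one direction")
```

With a one-to-one topology, each published message is also delivered once, so the average delivery rate equals the average publish rate. The payload figure excludes MQTT, TCP, and IP overhead.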

Challenges in testing

While experimenting with connections and throughput at this scale, we ran into several challenges, investigated them, and fixed the performance bottlenecks we found. system_monitor, which tracks memory and CPU usage of Erlang processes, helped us a lot. It can be thought of as "htop for BEAM processes", and it let us find processes with long message queues or high memory and/or CPU usage. Based on what we observed during the cluster tests, we used it to make several performance improvements in Mria [1] [2] [3].

In our initial tests with Mria, the replication mechanism, in short, logged every transaction to a hidden table that the replicant nodes subscribed to. This created network overhead between the core nodes, because every transaction was essentially "duplicated". In our fork of Erlang/OTP, we added a new Mnesia module that makes it easier to capture all committed transaction logs without the "duplicate" writes, which greatly reduced network usage and let the cluster sustain higher connection and transaction rates. After these optimizations, we stress-tested the cluster further, found new bottlenecks, and did another round of performance tuning [4] [5] [6].

Even our own testing tool, emqtt-bench, needed some adjustments to handle this many connections and such high connection rates. We made several quality improvements [7] [8] [9] [10] and performance optimizations [11] [12]. For our publish-subscribe tests, we even created a special branch (not in the current trunk branch) to reduce memory usage further.

Test results

The animation above shows the final result of the one-to-one publish-subscribe test. We established 100 million connections, of which 50 million were subscribers and the other 50 million were publishers. With each publisher sending a message every 90 seconds, the average inbound and outbound rates exceeded 1 million messages per second. At the publishing peak, each of the 20 replicant nodes (the nodes that accept client connections) used on average 90% of its memory (about 113 GiB) and about 97% of its CPU (64 arm64 cores). The 3 core nodes that handle transactions used very little CPU (less than 1%) and only 28% of their memory (about 36 GiB). With 256-byte payloads, network traffic during publishing was between 240 MB/s and 290 MB/s. At the publishing peak, the loadgens needed almost all of their memory (about 120 GiB) and all of their CPU.
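These figures allow a rough per-connection estimate, sketched in Python below. It assumes connections are spread evenly over the 20 replicant nodes and the 17 loadgens, as described in the test method, and it divides a node's entire peak memory use by its connection count. The result is therefore a coarse upper bound that includes the runtime, routing tables, and in-flight messages, not a measured per-connection cost.

```python
GIB = 2**30

TOTAL_CONNECTIONS = 100_000_000
REPLICANT_NODES = 20
LOADGENS = 17
PEAK_NODE_MEMORY_GIB = 113   # average peak memory per replicant node

# Connections handled by each replicant node and opened by each loadgen.
conns_per_node = TOTAL_CONNECTIONS // REPLICANT_NODES
conns_per_loadgen = TOTAL_CONNECTIONS / LOADGENS

# Whole-node memory divided by connection count: an upper bound per connection.
bytes_per_conn = PEAK_NODE_MEMORY_GIB * GIB / conns_per_node

print(f"connections per replicant node: {conns_per_node:,}")
print(f"connections per loadgen: {conns_per_loadgen:,.0f}")
print(f"memory per connection (upper bound): {bytes_per_conn / 1024:.1f} KiB")
```

Under these assumptions each replicant node holds 5 million connections, which works out to roughly 24 KiB of node memory per connection at the publishing peak.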

Note: In this test, every paired publisher and subscriber happened to be connected to the same broker, so the scenario is not an ideal approximation of a real-world use case. The EMQX team is currently running more tests and will continue to share updates.

Grafana screenshot of CPU, memory, and network usage on the EMQX nodes during the test

To compare an RLOG cluster with an equivalent Mnesia cluster, we used another topology with a smaller total number of connections: the RLOG cluster used 3 core nodes + 7 replicant nodes, and the Mnesia cluster used 10 nodes, of which 7 accepted connections. We connected and subscribed at different rates, without publishing.

The figure below shows our test results. For Mnesia, the faster we connected and subscribed to the nodes, the more "flattening" behavior we observed: the cluster could not reach the target maximum number of connections, which was 50 million in these tests. With RLOG, the cluster reached higher connection rates without showing this flattening behavior. We can therefore conclude that Mria with RLOG performs better than the Mnesia backend under high connection rates.

Conclusion

After this series of tests and these satisfactory results, we believe the RLOG database backend provided by Mria is ready for use in EMQX 5.0. It has already become the default database backend in the current main branch.

References

[1] - fix(performance): Move message queues to off_heap by k32 · Pull Request #43 · emqx/mria

[3] - fix(mria_status): Remove mria_status process by k32 · Pull Request #48 · emqx/mria

[4] - Store transactions in replayq in normal mode by k32 · Pull Request #65 · emqx/mria

[5] - feat: Remove redundand data from the mnesia ops by k32 · Pull Request #67 · emqx/mria

[6] - feat: Batch transaction imports by k32 · Pull Request #70 · emqx/mria

[7] - feat: add new waiting options for publishing by thalesmg · Pull Request #160 · emqx/emqtt-bench

[8] - feat: add option to retry connections by thalesmg · Pull Request #161 · emqx/emqtt-bench

[9] - Add support for rate control for 1000+ conns/s by qzhuyan · Pull Request #167 · emqx/emqtt-bench

[10] - support multi target hosts by qzhuyan · Pull Request #168 · emqx/emqtt-bench

[11] - feat: bump max procs to 16M by qzhuyan · Pull Request #138 · emqx/emqtt-bench

[12] - feat: tune gc for publishing by thalesmg · Pull Request #164 · emqx/emqtt-bench

Copyright notice: This article is original content from EMQ. Please credit the source when reprinting.

Link to the original text : https://www.emqx.com/zh/blog/reaching-100m-mqtt-connections-with-emqx-5-0

边栏推荐

- Points cloud to Depth maps: conversion, Save, Visualization

- 带你区分几种并行

- Harbor高可用集群设计及部署(实操+视频),基于离线安装方式

- Influxdb optimization configuration item

- How does the easycvr intelligent edge gateway hardware set power on self start?

- Software testing office tools recommendation - Desktop Calendar

- Visualization of operation and maintenance monitoring data - let the data speak [Huahui data]

- SQL教程之数据科学家需要掌握的五种 SQL 技能

- [wechat applet] collaboration and publishing data binding

- 浅析Js中${}字符串拼接

猜你喜欢

The difference between break and continue

Envi classic annotation object how to recall modification and deletion of element legend scale added

Snake game project full version

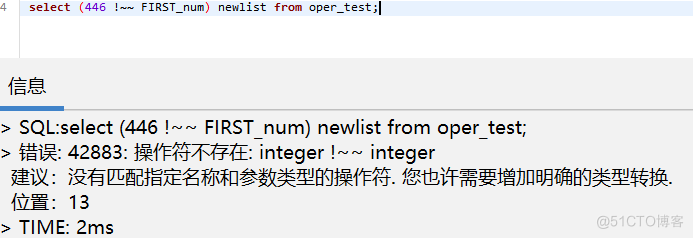

瀚高数据库自定义操作符'!~~'

How to query the maximum ID value in MySQL

机器学习和模式识别怎么区分?

自然语言处理如何实现聊天机器人?

机器学习之数据处理与可视化【鸢尾花数据分类|特征属性比较】

某大厂第二轮裁员来袭,套路满满

FS9935 高效率恒流限流 WLED 驱动IC

随机推荐

SQL 面试题:2022 年最热门的 15 个问题

Deep learning image data enhancement

Netcore3.1 Ping whether the network is unblocked and obtaining the CPU and memory utilization of the server

点云转深度图:转化,保存,可视化

浅析Js中${}字符串拼接

Software testing office tools recommendation - Desktop Calendar

Kubernetes-23:详解如何将CPU Manager做到游刃有余

Visualization of operation and maintenance monitoring data - let the data speak [Huahui data]

Jenkins regularly builds and passes build parameters

Is it safe to open a margin account? What are the requirements?

YX2811景观装鉓驱动IC

2022-06-20

贪吃蛇游戏项目完整版

Envi classic annotation object how to recall modification and deletion of element legend scale added

Summary of methods for NSIS to run bat

Alibaba cloud ack one and ACK cloud native AI suite have been newly released to meet the needs of the end of the computing era

Points cloud to Depth maps: conversion, Save, Visualization

M3608升压ic芯片High Efficiency 2.6A Boost DC/DC Convertor

什么是eGFP,绿色荧光蛋白

EasyCVR智能边缘网关硬件如何设置通电自启动?