
Technical Deep Dive | Writing BERT in a Hundred Lines of Code: A Showcase of MindSpore's Capabilities

2022-07-03 07:34:00 【MindSpore】

In an earlier answer to the question "How do you evaluate Huawei's MindSpore 1.5?", I mentioned that MindSpore had become easy enough to write a BERT in about a hundred lines of code. This article makes good on that claim. BERT, the milestone NLP model of 2018, has been praised by countless people and dissected and analyzed countless times. While trying to explain the model clearly, I also want readers to get a feel for what MindSpore can do today. This may come across as something of an advertisement, but please hear me out.

01

A BERT Implementation of Nearly 1,000 Lines

The idea for this article came from writing quite a few models in MindSpore, especially reproductions of pretrained language models. During that time I kept referring to the implementations in the Model Zoo, and a question arose: if writing a BERT takes close to 1,000 lines, is MindSpore really that complicated and hard to use?

Here is the official implementation (gitee.com/mindspore/models/blob/master/official/nlp/bert/src/bert_model.py) for interested readers. Even with comments removed, it is complex and lengthy, and it does not reflect the "simple development experience" that MindSpore advertises. Later, when I wanted to migrate Hugging Face checkpoints, I wrote my own version and found that the lengthy official implementation could be compressed substantially. Such a complex implementation can also confuse newcomers, hence this hundred-line version.

02

BERT Model

BERT is short for "Bidirectional Encoder Representations from Transformers", and it is also the name of a Sesame Street character (Google does love its easter eggs).

Sesame Street BERT

The name already states the core of the model: a bidirectional Transformer encoder. BERT builds on GPT and ELMo, absorbs the strengths of both, and makes full use of the Transformer's feature-extraction ability. In its year it topped essentially every evaluation benchmark and became a seemingly unbeatable SOTA model. Below, I build a very lightweight BERT in MindSpore step by step, starting from the most basic components and pairing formulas, figures, and code. For reasons of length, I will not explain the Transformer or pretrained language models in detail; I only follow the main line of implementing BERT.

03

Multi-head Attention

Unlike a paper walkthrough, I won't open by describing how BERT's embeddings differ from the Transformer's or which pretraining tasks BERT sets up. Instead, I start from the most basic module: Multi-head Attention.

Because BERT's backbone consists entirely of Transformer Encoders, here is a brief introduction to Self-Attention and Multi-head Attention in the Transformer. First, Self-Attention, called Scaled Dot-Product Attention in the paper, is defined as:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^\top}{\sqrt{d_k}}\right)V$$

Self-Attention operates on three inputs: Q (query matrix), K (key matrix), and V (value matrix), each obtained from the same input through its own fully connected (linear) layer. The implementation follows the formula directly:

```python
import mindspore
import mindspore.nn as nn
import mindspore.numpy as mnp
from mindspore import Tensor
from mindspore.nn import Cell, Dense, Embedding
from mindspore.ops import operations as P


class ScaledDotProductAttention(Cell):
    def __init__(self, d_k, dropout):
        super().__init__()
        self.scale = Tensor(d_k, mindspore.float32)
        self.matmul = nn.MatMul()
        self.transpose = P.Transpose()
        self.softmax = nn.Softmax(axis=-1)
        self.sqrt = P.Sqrt()
        # MaskedFill is a small helper Cell from the full code (not shown here);
        # it writes -1e9 wherever attn_mask is True
        self.masked_fill = MaskedFill(-1e9)
        if dropout > 0.0:
            # MindSpore 1.x nn.Dropout takes keep_prob, hence 1 - dropout
            self.dropout = nn.Dropout(1 - dropout)
        else:
            self.dropout = None

    def construct(self, Q, K, V, attn_mask):
        K = self.transpose(K, (0, 1, 3, 2))
        # scores: [batch_size x n_heads x len_q x len_k]
        scores = self.matmul(Q, K) / self.sqrt(self.scale)
        # Fill masked (padding) positions with -1e9 so softmax gives them ~0 weight
        scores = self.masked_fill(scores, attn_mask)
        attn = self.softmax(scores)
        context = self.matmul(attn, V)
        if self.dropout is not None:
            context = self.dropout(context)
        return context, attn
```

Here, after Q*K^T is computed and scaled, a masked_fill step follows the PyTorch implementation: positions that correspond to padding (id 0) in the original input sequence are filled with a very large negative number (-1e9 in the code above), so their weights after softmax are effectively 0. A Dropout operation is also applied to improve the model's robustness.
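To see the masking trick in isolation, here is a minimal NumPy sketch of the same formula; it is an illustration, not the author's MindSpore code. It assumes a boolean mask that is True where attention is disallowed (mirroring how MaskedFill is described) and uses -1e9 as the fill value; the shapes and random inputs are arbitrary.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def scaled_dot_product_attention(Q, K, V, mask=None):
    """Q: (..., len_q, d_k), K: (..., len_k, d_k), V: (..., len_k, d_v).
    mask: bool (..., len_q, len_k), True where attention is NOT allowed."""
    d_k = Q.shape[-1]
    scores = Q @ np.swapaxes(K, -1, -2) / np.sqrt(d_k)
    if mask is not None:
        # Same idea as MaskedFill(-1e9): masked scores vanish after softmax
        scores = np.where(mask, -1e9, scores)
    attn = softmax(scores, axis=-1)
    return attn @ V, attn


rng = np.random.default_rng(0)
Q = rng.standard_normal((1, 3, 4))
K = rng.standard_normal((1, 3, 4))
V = rng.standard_normal((1, 3, 4))
# Treat the last key position as padding for every query
mask = np.zeros((1, 3, 3), dtype=bool)
mask[..., 2] = True
context, attn = scaled_dot_product_attention(Q, K, V, mask)
print(attn[0].round(3))  # last column is 0; each row of attn sums to 1
```

Using a large finite negative number rather than -inf keeps the softmax free of NaNs even if an entire row happened to be masked.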

Multi-head Attention

With the basic Scaled Dot-Product Attention in place, let's look at multi-head attention. "Multi-head" means projecting the single Q, K, V into h sets Q', K', V'. Because each head works in a subspace of dimension d_model/h, the total computation stays about the same while the model's generalization ability improves. It can be viewed as an ensemble of heads inside the model, or as analogous to the multiple channels of a convolution; in fact, Multi-head Attention does have a whiff of CNN about it (as I heard Tie-Yan Liu remark at a workshop years ago). The implementation:

```python
class MultiHeadAttention(Cell):
    def __init__(self, d_model, n_heads, dropout):
        super().__init__()
        self.n_heads = n_heads
        self.W_Q = Dense(d_model, d_model)
        self.W_K = Dense(d_model, d_model)
        self.W_V = Dense(d_model, d_model)
        self.linear = Dense(d_model, d_model)
        self.head_dim = d_model // n_heads
        assert self.head_dim * n_heads == d_model, "embed_dim must be divisible by num_heads"
        self.layer_norm = nn.LayerNorm((d_model,), epsilon=1e-12)
        self.attention = ScaledDotProductAttention(self.head_dim, dropout)
        # ops
        self.transpose = P.Transpose()
        self.expanddims = P.ExpandDims()
        self.tile = P.Tile()

    def construct(self, Q, K, V, attn_mask):
        # Q: [batch_size x len_q x d_model], K: [batch_size x len_k x d_model], V: [batch_size x len_k x d_model]
        residual, batch_size = Q, Q.shape[0]
        # (B, S, D) -proj-> (B, S, D) -split-> (B, S, H, W) -trans-> (B, H, S, W)
        q_s = self.W_Q(Q).view((batch_size, -1, self.n_heads, self.head_dim))
        k_s = self.W_K(K).view((batch_size, -1, self.n_heads, self.head_dim))
        v_s = self.W_V(V).view((batch_size, -1, self.n_heads, self.head_dim))
        q_s = self.transpose(q_s, (0, 2, 1, 3))  # q_s: [batch_size x n_heads x len_q x head_dim]
        k_s = self.transpose(k_s, (0, 2, 1, 3))  # k_s: [batch_size x n_heads x len_k x head_dim]
        v_s = self.transpose(v_s, (0, 2, 1, 3))  # v_s: [batch_size x n_heads x len_k x head_dim]
        # attn_mask: [batch_size x len_q x len_k] -> [batch_size x n_heads x len_q x len_k]
        attn_mask = self.expanddims(attn_mask, 1)
        attn_mask = self.tile(attn_mask, (1, self.n_heads, 1, 1))
        # context: [batch_size x n_heads x len_q x head_dim], attn: [batch_size x n_heads x len_q x len_k]
        context, attn = self.attention(q_s, k_s, v_s, attn_mask)
        # Merge heads back: [batch_size x len_q x n_heads * head_dim]
        context = self.transpose(context, (0, 2, 1, 3)).view((batch_size, -1, self.n_heads * self.head_dim))
        output = self.linear(context)
        # Add & Norm: residual connection followed by LayerNorm
        return self.layer_norm(output + residual), attn  # output: [batch_size x len_q x d_model]
```

Q, K, and V first pass through fully connected (Dense) layers for a linear transformation, are reshaped with view into multiple heads, and are then transposed into the layout ScaledDotProductAttention expects. Finally, the per-head outputs are concatenated. Note that there is no explicit Concat: view directly restores the last dimension of context to n_heads * head_dim. In addition, the return statement applies the Add & Norm operation, i.e., the residual connection and LayerNorm of the Encoder structure, which the next section covers.
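The claim that the heads are merged without an explicit Concat can be checked with plain NumPy. This sketch uses hypothetical split_heads and merge_heads helpers that mimic the view/transpose sequence in MultiHeadAttention; it also checks the arithmetic behind "about the same computation" for the QK^T product.

```python
import numpy as np


def split_heads(x, n_heads):
    # (B, S, D) -> (B, S, H, W) -> (B, H, S, W), with W = D // H
    B, S, D = x.shape
    return x.reshape(B, S, n_heads, D // n_heads).transpose(0, 2, 1, 3)


def merge_heads(x):
    # (B, H, S, W) -> (B, S, H, W) -> (B, S, H * W); this reshape is the "concat"
    B, H, S, W = x.shape
    return x.transpose(0, 2, 1, 3).reshape(B, S, H * W)


B, S, D, H = 2, 5, 8, 4
W = D // H
x = np.arange(B * S * D, dtype=np.float64).reshape(B, S, D)
heads = split_heads(x, H)
print(heads.shape)  # (2, 4, 5, 2)

# Merging restores the original layout exactly, so no explicit Concat is needed
assert np.array_equal(merge_heads(heads), x)
# Head h occupies feature columns [h*W, (h+1)*W) of the merged tensor
assert np.array_equal(merge_heads(heads)[..., W:2 * W], heads[:, 1])
# QK^T multiply-adds: H heads of width W cost the same as one head of width D
assert H * S * S * W == S * S * D
```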

04

Transformer Encoder

With the basic Multi-head Attention module complete, the rest follows: constructing a single Encoder layer. Let's first look at its structure. A Transformer Encoder layer consists of a Position-wise Feed-Forward layer and a Multi-head Attention layer. Each sublayer's input and output are joined by a residual connection (y = f(x) + x), so that stacking more layers does not cause degradation, followed by Layer Norm, which keeps a deep network trainable (mitigating vanishing and exploding gradients). Why Layer Norm is used here instead of Batch Norm is worth looking up; it is another interesting trick in how the Transformer is built.

Transformer Encoder
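A small NumPy sketch of the Add & Norm step and of one reason LayerNorm suits this setting. It simplifies on purpose (no learnable scale or shift, a toy lambda in place of a real attention or FFN sublayer, and a BatchNorm whose statistics are pooled over batch and sequence positions): LayerNorm statistics are computed per token, so they do not depend on what else is in the batch, while BatchNorm's do.

```python
import numpy as np


def layer_norm(x, eps=1e-12):
    # Normalize each token over its own feature axis, like nn.LayerNorm((d_model,))
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def batch_norm(x, eps=1e-12):
    # Normalize each feature with statistics pooled over batch and sequence positions
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def add_and_norm(x, sublayer):
    # Post-LN residual block as used in BERT: y = LayerNorm(x + f(x))
    return layer_norm(x + sublayer(x))


rng = np.random.default_rng(0)
x = rng.standard_normal((2, 4, 8))          # (batch, seq_len, d_model)
y = add_and_norm(x, lambda h: 0.5 * h)      # toy sublayer standing in for attention/FFN
print(np.allclose(y.mean(-1), 0), np.allclose(y.std(-1), 1))  # True True

# Zeroing the second sequence (think: heavy padding) leaves LayerNorm on the first
# sequence untouched, but changes BatchNorm's output because its statistics are shared.
x2 = x.copy()
x2[1] = 0.0
print(np.allclose(layer_norm(x)[0], layer_norm(x2)[0]))  # True
print(np.allclose(batch_norm(x)[0], batch_norm(x2)[0]))  # False
```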

With the Encoder structure laid out, we still need the missing Position-wise Feed-Forward layer. As with the Multi-head Attention layer, the residual connection and Layer Norm are folded into it:

```python
class PoswiseFeedForwardNet(Cell):
    def __init__(self, d_model, d_ff, activation: str = 'gelu'):
        super().__init__()
        self.fc1 = Dense(d_model, d_ff)
        self.fc2 = Dense(d_ff, d_model)
        # activation_map: name -> activation Cell lookup from the full code (not shown here);
        # unknown names fall back to GELU
        self.activation = activation_map.get(activation, nn.GELU())
        self.layer_norm = nn.LayerNorm((d_model,), epsilon=1e-12)

    def construct(self, inputs):
        residual = inputs
        outputs = self.fc1(inputs)
        outputs = self.activation(outputs)
        outputs = self.fc2(outputs)
        # Add & Norm, as in MultiHeadAttention
        return self.layer_norm(outputs + residual)
```

Connecting the Multi-head Attention layer and the Position-wise Feed-Forward layer yields an Encoder layer:

```python
class BertEncoderLayer(Cell):
    def __init__(self, d_model, n_heads, d_ff, activation, dropout):
        super().__init__()
        self.enc_self_attn = MultiHeadAttention(d_model, n_heads, dropout)
        self.pos_ffn = PoswiseFeedForwardNet(d_model, d_ff, activation)

    def construct(self, enc_inputs, enc_self_attn_mask):
        # Self-attention: Q, K and V all come from the same input
        enc_outputs, attn = self.enc_self_attn(enc_inputs, enc_inputs, enc_inputs, enc_self_attn_mask)
        enc_outputs = self.pos_ffn(enc_outputs)
        return enc_outputs, attn
```

Then, based on the configured number of layers, hidden_size, number of heads, and other parameters, chaining n Encoder layers in sequence completes BERT's Encoder. Here the nn.CellList container is used:

```python
class BertEncoder(Cell):
    def __init__(self, config):
        super().__init__()
        self.layers = nn.CellList([
            BertEncoderLayer(config.hidden_size, config.num_attention_heads, config.intermediate_size,
                             config.hidden_act, config.hidden_dropout_prob)
            for _ in range(config.num_hidden_layers)
        ])

    def construct(self, inputs, enc_self_attn_mask):
        outputs = inputs
        for layer in self.layers:
            outputs, enc_self_attn = layer(outputs, enc_self_attn_mask)
        return outputs
```

05

Building BERT

With the Encoder complete, we can assemble the full BERT model. The preceding sections implemented the Transformer Encoder structure, whereas BERT's core innovations, the things that set it apart, lie mainly outside the Transformer backbone. First up is the Embedding layer.

As shown in the figure, the hidden representation that BERT's embedding layer produces from the input text is the sum of three different embeddings:

Token Embeddings: ordinary word vectors. The first token is the placeholder [CLS]; after encoding, its vector represents the whole input text and is used for classification tasks (hence CLS, for "classifier"). In addition, the [SEP] placeholder separates the two sentences of a single input, and [PAD] marks padding.

Segment Embeddings: distinguish the two sentences of a single input. This embedding was added for the Next Sentence Prediction task.

Position Embeddings: as in the Transformer, the model cannot retain positional information naturally the way an LSTM does, so position must be encoded explicitly. The difference is that the Transformer uses fixed trigonometric (sinusoidal) encodings, whereas BERT feeds each position index directly into an Embedding layer to obtain its encoding. (There is no essential difference between the two, and the latter is simpler and more direct.)
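To make the contrast between the two position encodings concrete, here is a NumPy sketch of the Transformer's fixed sinusoidal encoding next to a BERT-style learned table. The learned table's random initialization (normal, std 0.02) is only a stand-in for trained weights; the point is that an Embedding lookup by position index is just row indexing.

```python
import numpy as np


def sinusoidal_positions(max_len, d_model):
    # Fixed Transformer encoding:
    # PE[pos, 2i] = sin(pos / 10000^(2i/d)), PE[pos, 2i+1] = cos(pos / 10000^(2i/d))
    pos = np.arange(max_len)[:, None]
    two_i = np.arange(0, d_model, 2)[None, :]   # the even indices 2i
    angles = pos / np.power(10000.0, two_i / d_model)
    pe = np.zeros((max_len, d_model))
    pe[:, 0::2] = np.sin(angles)
    pe[:, 1::2] = np.cos(angles)
    return pe


# BERT-style learned table: one trainable row per position (random stand-in values here)
rng = np.random.default_rng(0)
max_len, d_model = 512, 16
learned_table = rng.normal(0.0, 0.02, size=(max_len, d_model))

positions = np.arange(6)                         # indices 0..5 for a length-6 input
fixed = sinusoidal_positions(max_len, d_model)[positions]
learned = learned_table[positions]               # Embedding lookup == row indexing
print(fixed.shape, learned.shape)                # (6, 16) (6, 16)
```

The sinusoidal table needs no parameters, while the learned table adds max_len x d_model trainable weights and cannot address positions beyond max_len.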

With the three embeddings analyzed, this part can be implemented directly with nn.Embedding:

```python
class BertEmbeddings(Cell):
    def __init__(self, config):
        super().__init__()
        self.tok_embed = Embedding(config.vocab_size, config.hidden_size)
        self.pos_embed = Embedding(config.max_position_embeddings, config.hidden_size)
        self.seg_embed = Embedding(config.type_vocab_size, config.hidden_size)
        self.norm = nn.LayerNorm((config.hidden_size,), epsilon=1e-12)

    def construct(self, x, seg):
        seq_len = x.shape[1]
        pos = mnp.arange(seq_len)  # mindspore.numpy
        # [seq_len] -> [1, seq_len] -> [batch_size, seq_len]
        pos = P.BroadcastTo(x.shape)(P.ExpandDims()(pos, 0))
        seg_embedding = self.seg_embed(seg)
        tok_embedding = self.tok_embed(x)
        embedding = tok_embedding + self.pos_embed(pos) + seg_embedding
        return self.norm(embedding)
```

Here mindspore.numpy.arange generates the position indices; the rest is simple layer calls and element-wise addition.
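For readers without MindSpore at hand, the BertEmbeddings forward pass can be mirrored in NumPy. This sketch uses random tables, a LayerNorm without learned parameters, and hypothetical token ids (1 for [CLS], 2 for [SEP], 0 for [PAD]); it shows how the position indices are broadcast to the batch shape before the three embeddings are summed.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, max_pos, type_vocab, hidden = 100, 32, 2, 8
tok_table = rng.normal(0.0, 0.02, (vocab_size, hidden))
pos_table = rng.normal(0.0, 0.02, (max_pos, hidden))
seg_table = rng.normal(0.0, 0.02, (type_vocab, hidden))


def bert_embeddings(input_ids, segment_ids):
    batch, seq_len = input_ids.shape
    # Same idea as mnp.arange + ExpandDims + BroadcastTo: one row 0..seq_len-1 per example
    pos = np.broadcast_to(np.arange(seq_len)[None, :], (batch, seq_len))
    emb = tok_table[input_ids] + pos_table[pos] + seg_table[segment_ids]
    # LayerNorm over the hidden axis (no learned scale/shift in this sketch)
    mean = emb.mean(-1, keepdims=True)
    var = emb.var(-1, keepdims=True)
    return (emb - mean) / np.sqrt(var + 1e-12)


input_ids = np.array([[1, 5, 7, 2, 0], [1, 9, 2, 4, 2]])    # hypothetical ids
segment_ids = np.array([[0, 0, 0, 0, 0], [0, 0, 0, 1, 1]])  # sentence A = 0, sentence B = 1
out = bert_embeddings(input_ids, segment_ids)
print(out.shape)  # (2, 5, 8)
```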

With the Embedding layer complete, combining it with the Encoder and a pooler on the output forms the complete BERT model:

```python
class BertModel(Cell):
    def __init__(self, config):
        super().__init__()  # Cell.__init__ takes no config argument
        self.embeddings = BertEmbeddings(config)
        self.encoder = BertEncoder(config)
        self.pooler = Dense(config.hidden_size, config.hidden_size, activation='tanh')

    def construct(self, input_ids, segment_ids):
        outputs = self.embeddings(input_ids, segment_ids)
        # get_attn_pad_mask: helper from the full code (not shown here) that marks padding positions
        enc_self_attn_mask = get_attn_pad_mask(input_ids, input_ids)
        outputs = self.encoder(outputs, enc_self_attn_mask)
        # Pool the hidden state at position 0, i.e. the [CLS] token
        h_pooled = self.pooler(outputs[:, 0])
        return outputs, h_pooled
```

Here a fully connected layer with tanh activation pools the output at position 0, i.e., the representation of the input text at the [CLS] placeholder, for downstream classification tasks.
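The get_attn_pad_mask helper is not shown in the article. The following NumPy function is a hypothetical stand-in, assuming [PAD] has id 0 and that the mask is True at padding key positions, which matches what MaskedFill and the ExpandDims/Tile steps in MultiHeadAttention expect ([batch_size x len_q x len_k] before the head axis is added).

```python
import numpy as np

PAD_ID = 0  # assumption: [PAD] has id 0, as the masked_fill explanation implies


def get_attn_pad_mask_sketch(seq_q, seq_k):
    """Hypothetical stand-in for get_attn_pad_mask.
    Returns bool (batch, len_q, len_k), True where the key token is padding."""
    batch, len_q = seq_q.shape
    pad_k = (seq_k == PAD_ID)[:, None, :]               # (batch, 1, len_k)
    return np.broadcast_to(pad_k, (batch, len_q, seq_k.shape[1]))


input_ids = np.array([[1, 7, 8, 2, 0, 0]])               # hypothetical ids, two trailing pads
mask = get_attn_pad_mask_sketch(input_ids, input_ids)
print(mask.shape)   # (1, 6, 6)
print(mask[0, 0])   # the last two key positions are masked for every query

# MultiHeadAttention then inserts a head axis and tiles it: (batch, n_heads, len_q, len_k)
n_heads = 2
per_head = np.tile(mask[:, None], (1, n_heads, 1, 1))
print(per_head.shape)  # (1, 2, 6, 6)
```

Only key positions are masked: padding queries still produce outputs, which are simply ignored downstream.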

06

BERT Pretraining task

The essence of BERT lies in its task design rather than its model structure; that is the consensus among those who have studied its paper. BERT designs two pretraining tasks to train a language model without labeled data (strictly speaking, it is not truly unsupervised).

1. Next Sentence Prediction

First, the simpler NSP task. NSP was added mainly for downstream tasks whose input consists of two sentences, such as QA and NLI, to strengthen the model on them. As the name suggests, sentences A and B are concatenated as the input: in half of the examples B is the actual next sentence after A, and in the other half B is randomly chosen text that is not. The task is binary classification: predict whether B is the next sentence of A. The implementation:

```python
class BertNextSentencePredict(Cell):
    def __init__(self, config):
        super().__init__()
        # Binary classifier on the pooled [CLS] representation: IsNext vs. NotNext
        self.classifier = Dense(config.hidden_size, 2)

    def construct(self, h_pooled):
        logits_clsf = self.classifier(h_pooled)
        return logits_clsf
```

2. Masked Language Model

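The Masked Language Model task masks some of the input tokens and predicts the masked tokens. Unlike a traditional language model (such as GPT's), which predicts each token only from the tokens before it, this task is bidirectional:

$$\mathcal{L}_{\text{MLM}} = -\sum_{i \in \mathcal{M}} \log p\left(x_i \mid \tilde{x}\right)$$

where $\mathcal{M}$ is the set of masked positions and $\tilde{x}$ is the input with those positions masked. With this as the objective, each masked token is predicted from its context on both sides, which naturally takes the form of a cloze test.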
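Since the objective only scores masked positions, here is a minimal NumPy sketch of that loss. It assumes logits shaped like the output of an MLM head ([batch_size x seq_len x vocab_size]) and a boolean array marking which positions were masked; both are random or hypothetical here, and the masking procedure itself is out of scope.

```python
import numpy as np


def log_softmax(logits):
    shifted = logits - logits.max(-1, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(-1, keepdims=True))


def mlm_loss(logits, labels, masked):
    """logits: (batch, seq_len, vocab); labels: (batch, seq_len) original token ids;
    masked: bool (batch, seq_len), True at the positions that were masked in the input."""
    logp = log_softmax(logits)
    # Log-probability the model assigns to the original token at every position
    token_logp = np.take_along_axis(logp, labels[..., None], axis=-1)[..., 0]
    # Only masked positions are targets; unmasked tokens serve as visible context
    return -(token_logp * masked).sum() / masked.sum()


rng = np.random.default_rng(0)
batch, seq_len, vocab = 2, 6, 10
logits = rng.standard_normal((batch, seq_len, vocab))
labels = rng.integers(0, vocab, size=(batch, seq_len))
masked = np.zeros((batch, seq_len), dtype=bool)
masked[0, 2] = masked[1, 4] = True   # hypothetical masked positions
print(round(float(mlm_loss(logits, labels, masked)), 4))
```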

Data preprocessing, including the masking and replacement ratios, is not covered here. The implementation is relatively simple, essentially Dense + activation + LayerNorm + Dense:

```python
class BertMaskedLanguageModel(Cell):
    def __init__(self, config, tok_embed_table):
        super().__init__()
        self.transform = Dense(config.hidden_size, config.hidden_size)
        self.activation = activation_map.get(config.hidden_act, nn.GELU())
        self.norm = nn.LayerNorm((config.hidden_size,), epsilon=1e-12)
        # Output projection back to the vocabulary; its weight [vocab_size x hidden_size]
        # is initialized from the token embedding table
        self.decoder = Dense(tok_embed_table.shape[1], tok_embed_table.shape[0], weight_init=tok_embed_table)

    def construct(self, hidden_states):
        hidden_states = self.transform(hidden_states)
        hidden_states = self.activation(hidden_states)
        hidden_states = self.norm(hidden_states)
        hidden_states = self.decoder(hidden_states)
        return hidden_states
```

Combining the two tasks yields the BERT pretraining model:

```python
class BertForPretraining(Cell):
    def __init__(self, config):
        super().__init__()  # Cell.__init__ takes no config argument
        self.bert = BertModel(config)
        self.nsp = BertNextSentencePredict(config)
        # The MLM decoder is initialized from the token embedding table
        self.mlm = BertMaskedLanguageModel(config, self.bert.embeddings.tok_embed.embedding_table)

    def construct(self, input_ids, segment_ids):
        outputs, h_pooled = self.bert(input_ids, segment_ids)
        nsp_logits = self.nsp(h_pooled)   # [batch_size x 2]
        mlm_logits = self.mlm(outputs)    # [batch_size x seq_len x vocab_size]
        return mlm_logits, nsp_logits
```

With that, the entire BERT model is implemented in MindSpore. Each module maps directly onto a formula or diagram, each takes roughly 10-20 lines, and the whole implementation comes to 150-200 lines, which is genuinely simple compared with the 800+ lines in the Model Zoo.

07

Before-and-After Comparison

Because the official implementation is very long, here is a screenshot comparing part of the code.

On the left is the official BertModel; on the right, the implementation above combined into one file. The same BERT model can be written in just over 100 lines of code. This shows that, over successive releases, MindSpore's operator support and the usability of its front-end expression have steadily matured: a hundred-line BERT may once have been possible only in PyTorch, and now MindSpore can do it too.

Of course, the official implementation has been maintained since early versions, and it was presumably never rewritten in a more concise style as the API evolved. But it creates the false impression that MindSpore is hard to use and requires far more code. Since the 1.2 release, MindSpore has gradually become able to implement models of the same complexity with about as much code as PyTorch. I hope this article serves as a small example.

08

Summary

Finally, a summary. From my personal experience, MindSpore went from barely usable in 0.7, to basically complete in 1.0, to the usability improvements of 1.5, making a qualitative leap toward its goal of a "simple development experience." The ModelZoo, after all, has many models and few people continually refactoring and optimizing them, so some misconceptions are bound to arise. That is why I took BERT, a milestone model, as an example to give everyone a more intuitive feel.

A few more words for all NLPers: BERT is a model of roughly 100 lines of code, and the Transformer structure is what-you-see-is-what-you-get. Don't be intimidated by big models; reproduce them yourself. Whether you are running experiments, writing papers, or answering interview questions, you will be much more at ease. So pick up MindSpore and write it out!

MindSpore official information

Official QQ group: 486831414

Official website: https://www.mindspore.cn/

Gitee: https://gitee.com/mindspore/mindspore

GitHub: https://github.com/mindspore-ai/mindspore

Forum: https://bbs.huaweicloud.com/forum/forum-1076-1.html

边栏推荐

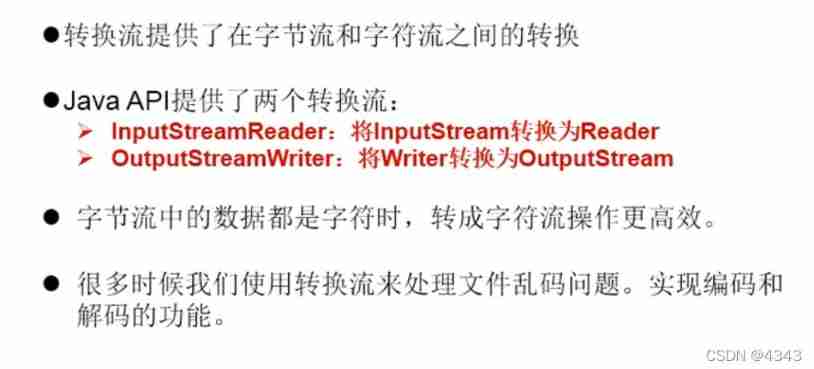

- Introduction of buffer flow

- Longest common prefix and

- 《指環王:力量之戒》新劇照 力量之戒鑄造者亮相

- [cmake] cmake link SQLite Library

- Use of other streams

- Industrial resilience

- Some experiences of Arduino soft serial port communication

- New stills of Lord of the rings: the ring of strength: the caster of the ring of strength appears

- 你开发数据API最快多长时间?我1分钟就足够了

- How long is the fastest time you can develop data API? One minute is enough for me

猜你喜欢

《指环王:力量之戒》新剧照 力量之戒铸造者亮相

Technical dry goods Shengsi mindspire innovation model EPP mvsnet high-precision and efficient 3D reconstruction

Partage de l'expérience du projet: mise en œuvre d'un pass optimisé pour la fusion IR de la couche mindstore

Technical dry goods Shengsi mindspire operator parallel + heterogeneous parallel, enabling 32 card training 242 billion parameter model

Various postures of CS without online line

Introduction of transformation flow

New stills of Lord of the rings: the ring of strength: the caster of the ring of strength appears

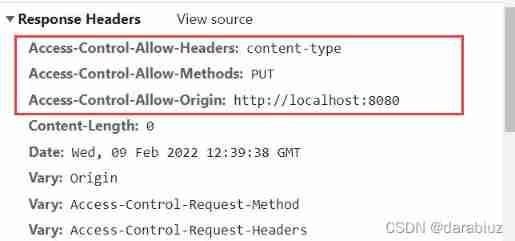

Homology policy / cross domain and cross domain solutions /web security attacks CSRF and XSS

How long is the fastest time you can develop data API? One minute is enough for me

Common methods of file class

随机推荐

Technical dry goods | reproduce iccv2021 best paper swing transformer with Shengsi mindspire

7.2刷题两个

Technical dry goods Shengsi mindspire lite1.5 feature release, bringing a new end-to-end AI experience

Sent by mqtt client server of vertx

[Development Notes] cloud app control on device based on smart cloud 4G adapter gc211

lucene scorer

Dora (discover offer request recognition) process of obtaining IP address

Technical dry goods Shengsi mindspire elementary course online: from basic concepts to practical operation, 1 hour to start!

An overview of IfM Engage

技术干货|利用昇思MindSpore复现ICCV2021 Best Paper Swin Transformer

New stills of Lord of the rings: the ring of strength: the caster of the ring of strength appears

Circuit, packet and message exchange

Wireshark software usage

图像识别与检测--笔记

docket

树莓派更新工具链

Introduction of transformation flow

Download address collection of various versions of devaexpress

论文学习——鄱阳湖星子站水位时间序列相似度研究

最全SQL与NoSQL优缺点对比