当前位置:网站首页>Technical dry goods Shengsi mindspire lite1.5 feature release, bringing a new end-to-end AI experience

Technical dry goods Shengsi mindspire lite1.5 feature release, bringing a new end-to-end AI experience

2022-07-03 07:34:00 【Shengsi mindspire】

Shengsi MindSpore Lite 1.5 Version we are mainly in heterogeneous reasoning 、 Mixed precision reasoning 、 The characteristics of end-to-end training and mixed bit weight quantization are optimized , In reasoning performance 、 The miniaturization of the model and the ease of use and performance of end-to-side training bring new experiences . Now let's take a quick look at these key features .

01

Multi hardware heterogeneous reasoning

How to obtain the optimal reasoning performance on the limited end-side hardware resources is the end-side AI One of the main goals of the reasoning framework . And heterogeneous device hybrid computing can make full use of CPU、GPU、NPU And other heterogeneous hardware resources , Make full use of the computing power and memory of resources , So as to achieve the ultimate performance of end-to-side reasoning .

This time we are 1.5 The version supports the ability of multi hardware heterogeneous reasoning . Users can set open mindspore::Context Enable different back-end heterogeneous hardware , Include CPU、GPU、NPU. And users can set the priority of each hardware according to their needs . Realized Mindspore Lite Flexible deployment on a variety of heterogeneous hardware , And high-performance reasoning .

Heterogeneous deployment of operators according to user selection

Early versions Mindspore Lite Only support CPU+ The pattern of , Can only support CPU And NPU perhaps CPU And GPU Heterogeneous execution , And the priority of heterogeneous hardware cannot be selected . The latest version of multi hardware heterogeneous reasoning features , It gives users more heterogeneous hardware choices , Increased ease of use , It is more friendly to reasoning on heterogeneous hardware .

For information on the use of multi hardware heterogeneous reasoning features, please refer to :

https://www.mindspore.cn/lite/docs/zh-CN/r1.5/use/runtime_cpp.html#id3

02

Mixed precision reasoning

We often find that users are using float16 The accuracy error is too large when reasoning the model , The reason may be that some data are small , Use float16 The low accuracy of expression leads to excessive error , It may also be because part of the data is too large to float16 The scope of expression .

In response to such problems , In the latest version, we provide mixed precision reasoning feature support . Users can specify the reasoning accuracy of specific operators in the model , At present, the available precision is float32、float16 Two kinds of . This feature can solve the problem that some models cannot be used in the whole network float16 The problem of reasoning , Users can use the data in the model float16 Some operators of inference accuracy error , Switch to use float32 Reasoning , So as to ensure the reasoning accuracy of the whole network . In this way, high performance can be used float16 Based on the operator , The inference accuracy of the model is improved .

For information on the use of mixed precision reasoning features, please refer to :

https://www.mindspore.cn/lite/docs/zh-CN/r1.5/use/runtime_cpp.html#id13

03

End to side hybrid accuracy training

In order to improve the end-to-side training performance as much as possible on the premise of ensuring the training accuracy , We are 1.5 The version supports the end-to-side hybrid accuracy training feature , Realized, including 1) Support will fp32 The model is converted online to fp16 Mixed accuracy model , as well as 2) Direct seamless operation MindSpore Two schemes of hybrid accuracy model are derived .

First option , According to the incoming traincfg Realize automatic mixing accuracy , And provides different optimization levels (O0 Do not change the operator type ,O2 Convert the operator to fp16 Model ,batchnorm and loss keep fp32,O3 stay O2 On the basis of general batchnorm and loss Turn into fp16), Configuration is also supported fp16 Operator custom configuration manual mixing accuracy scheme , Provides a choice between performance and accuracy , More flexibility . The calculation process is as follows :

Please refer to the relevant API:

https://www.mindspore.cn/lite/api/zh-CN/r1.5/api_cpp/mindspore.html#traincfg

Second option , Support will MindSpore After the derived hybrid accuracy model is derived, incremental training is directly carried out on the end side , comparison fp32 Training memory 、 Time consumption has increased significantly , On average 20-40%.

04

End to side training support resize

Due to the end side memory limitation , Training model batch Not too big , Therefore, the input model needs to be batch Configurable , Therefore, we support the training model in this version resize The ability of . We added resize Interface , At the same time, the dynamic allocation of training memory is supported in the framework . Use resize The interface only needs to be passed in resize input shape.

Please refer to the relevant API:

https://www.mindspore.cn/lite/api/zh-CN/r1.5/api_cpp/mindspore.html#resize

05

End to side training supports online and offline integration

End to side training is mostly finetune Model , Most of these layers will be frozen , Weight parameters will not change during training , Therefore, the training performance can be improved through the relevant fusion and optimization of these frozen layers in the offline stage . We analyze the connection relationship of model nodes , The scheme of dynamically identifying the fusion optimization points of the frozen layer is realized , Thus, the training performance is significantly improved . We pass the right Effnet Experiment with network training , From the result, the time consumption can be saved by about 40%, Training memory overhead is reduced 30%.

At the same time, we support that after the training , When the inference model is derived at the end of end-to-end training, online fusion , No additional offline optimization is required , Users can directly use this model for online reasoning , Due to the reuse of offline fusion pass, Dynamic library only increases 76k, It ensures the lightness of the end-to-side Training Library .

06

Mixed bit weight quantization

Scenarios with strict requirements for model size , Weight quantization (weight quantization) No data set required , You can directly map the weight data from floating-point type to low bit fixed-point data , Convenient model transmission and storage . The traditional weight model quantization method is to quantify the weight value of the whole model to a specific bit , for example 8 Bit quantization is to Float32 The floating-point number of is mapped to Int8 Fixed point number of , Theoretically, the model can achieve 4 Fold compression , But there is a problem with this approach : High bit quantization can ensure high accuracy , But it needs more storage space ; Low bit memory takes up less space , But there will be a greater loss of accuracy .

MindSpore Lite stay 1.5 The version supports mixed bit weight quantization , According to the data distribution of neural network , Automatically search the quantization bits that are most suitable for the current layer , It can effectively achieve fine granularity between compression ratio and precision trade-off, Realize the efficient compression of the model .

Because different layers of neural network have different sensitivity to quantitative loss , Layers with lower loss sensitivity can be represented by lower bits , The layer with higher loss sensitivity is represented by higher bits .MindSpore Lite The mixed bit quantization of adopts the mean square error as the optimization objective , Automatically search out the most suitable for the current layer scale value .

For the quantified model , At the same time, finite state entropy (Finite State Entropy, FSE) Entropy coding the quantized weight data , You can further obtain greater compression , Up to 50+ times .

Please refer to :

https://www.mindspore.cn/lite/docs/zh-CN/r1.5/use/post_training_quantization.html#id9

MindSpore Official information

GitHub : https://github.com/mindspore-ai/mindspore

Gitee : https : //gitee.com/mindspore/mindspore

official QQ Group : 871543426

边栏推荐

- gstreamer ffmpeg avdec解码数据流向分析

- Read config configuration file of vertx

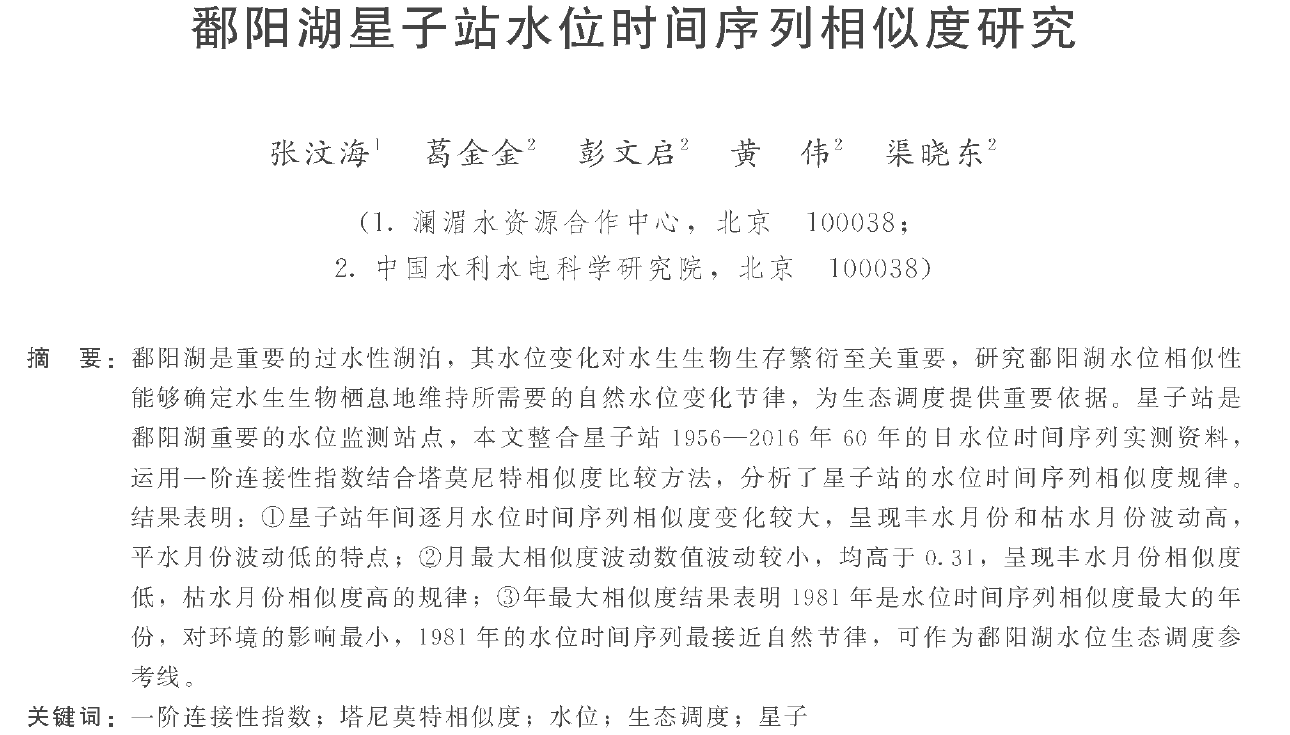

- 论文学习——鄱阳湖星子站水位时间序列相似度研究

- 2. E-commerce tool cefsharp autojs MySQL Alibaba cloud react C RPA automated script, open source log

- Some basic operations of reflection

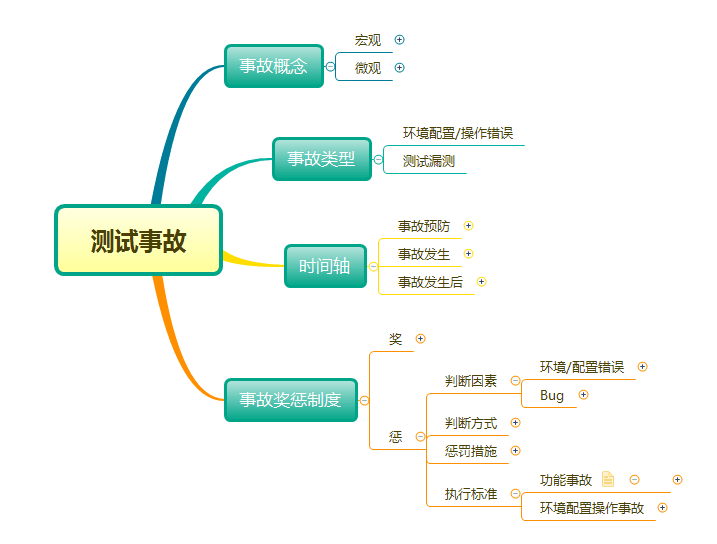

- Take you through the whole process and comprehensively understand the software accidents that belong to testing

- lucene scorer

- 技术干货 | AlphaFold/ RoseTTAFold开源复现(2)—AlphaFold流程分析和训练构建

- [untitled]

- Basic knowledge about SQL database

猜你喜欢

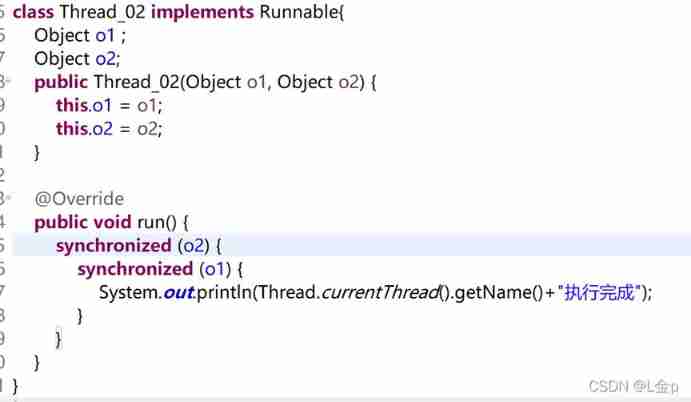

Interview questions about producers and consumers (important)

Take you through the whole process and comprehensively understand the software accidents that belong to testing

HCIA notes

论文学习——鄱阳湖星子站水位时间序列相似度研究

Collector in ES (percentile / base)

Hnsw introduction and some reference articles in lucene9

![[solved] sqlexception: invalid value for getint() - 'Tian Peng‘](/img/bf/f6310304d58d964b3d09a9d011ddb5.png)

[solved] sqlexception: invalid value for getint() - 'Tian Peng‘

Hash table, generic

Use of file class

How long is the fastest time you can develop data API? One minute is enough for me

随机推荐

Lucene introduces NFA

sharepoint 2007 versions

Talk about floating

Analysis of the problems of the 10th Blue Bridge Cup single chip microcomputer provincial competition

Homology policy / cross domain and cross domain solutions /web security attacks CSRF and XSS

Realize the reuse of components with different routing parameters and monitor the changes of routing parameters

gstreamer ffmpeg avdec解码数据流向分析

Operation and maintenance technical support personnel have hardware maintenance experience in Hong Kong

Segment read

Docker builds MySQL: the specified path of version 5.7 cannot be mounted.

Leetcode 198: 打家劫舍

Take you through the whole process and comprehensively understand the software accidents that belong to testing

圖像識別與檢測--筆記

项目经验分享:基于昇思MindSpore,使用DFCNN和CTC损失函数的声学模型实现

La différence entre le let Typescript et le Var

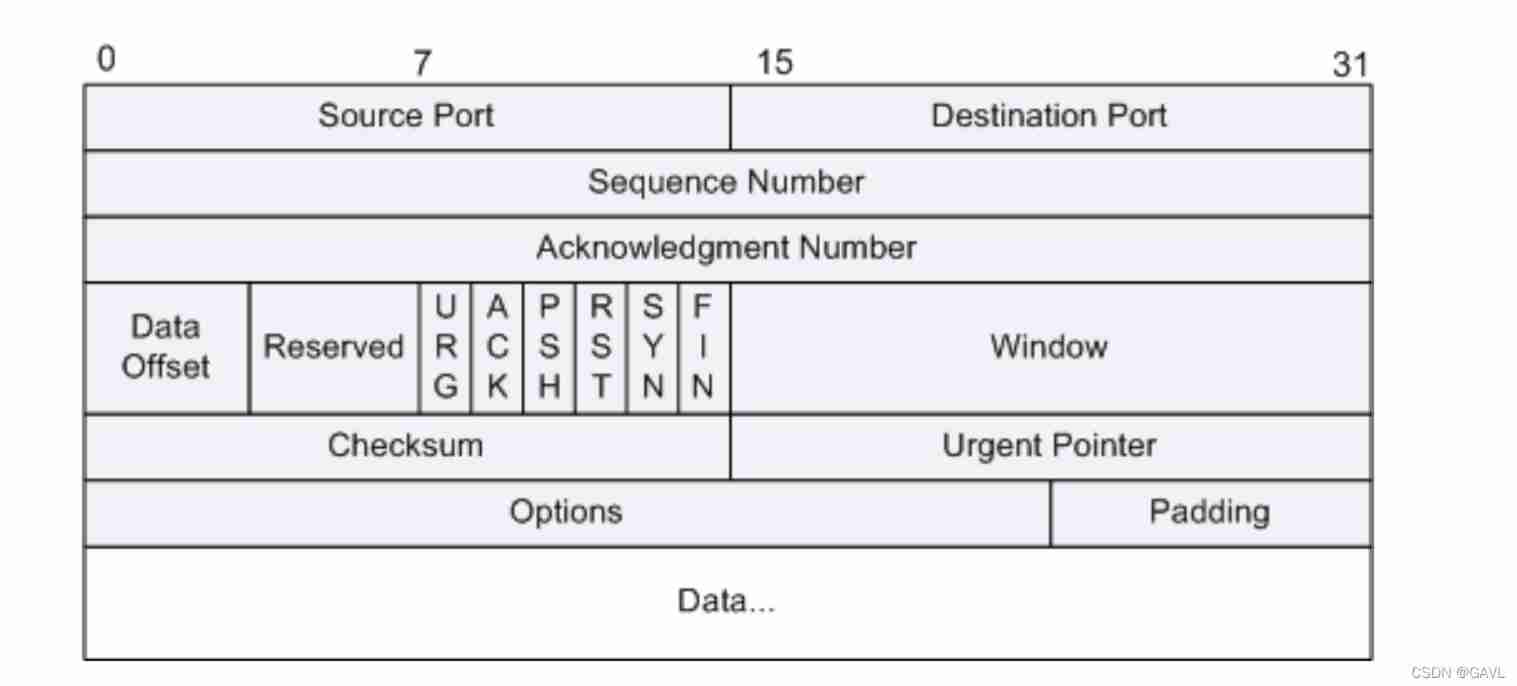

Circuit, packet and message exchange

Lucene hnsw merge optimization

1. E-commerce tool cefsharp autojs MySQL Alibaba cloud react C RPA automated script, open source log

C WinForm framework

Visit Google homepage to display this page, which cannot be displayed