Richard Sutton: Experience Is the Ultimate Data for AI, and Four Stages Lead Toward Real AI

2022-06-21 15:55:00 【Zhiyuan community】

Reading guide: The development of strong artificial intelligence has been a topic of concern in recent years. Having AI learn from perception and behavior, as humans do, rather than simply from labeled data, has become a focus for many researchers. In particular, how to draw on the kind of everyday experience humans acquire to build AI that adapts to different environments and interacts with the outside world has become a new direction of exploration in some fields.

Richard Sutton, known as the father of reinforcement learning, recently proposed using experience to drive the development of AI. He divides AI's progression from using data to using experience into four stages and proposes a direction for building real AI (Real AI). On May 31, 2022, Sutton delivered a keynote speech titled "The Increasing Role of Sensorimotor Experience in AI", summarizing experience-driven AI development and looking ahead to where it is going.

About the speaker: Richard Sutton, one of the founders of modern computational reinforcement learning, is a distinguished research scientist at DeepMind and a professor in the Department of Computing Science at the University of Alberta. He is also a fellow of the Royal Society, the Royal Society of Canada, the Association for the Advancement of Artificial Intelligence, the Alberta Machine Intelligence Institute (AMII), and CIFAR.

Compiled by: Dai Yiming, Cui Jingnan

Sutton holds that an agent interacts with the outside world: it sends actions and receives perceptions (which carry feedback). This interaction is what is meant by experience, and it is the normal mode of perception in reinforcement learning. It is also the normal way for an agent to try to predict the external world. This approach is rare in supervised learning, however, even though supervised learning is the most common type of machine learning. Supervised learning does not involve ordinary experience (Ordinary Experience); the model learns from special training data that differs from ordinary experience. In fact, a supervised learning system does not learn at all at run time.

Experience, then, is the data that interaction produces; it is the agent's channel of communication with the outside world. A piece of experience has no meaning except in its relationship to other experience. There is one exception: reward, which is indicated by a special signal. Reward represents what is good, and the agent wants to maximize it.

In the talk, Sutton raised a core question: in what terms is intelligence ultimately to be explained, objective terms (Objective terms) or experiential terms (Experiential terms)? The former include states of the external world, goals, people, places, relationships, space, actions, distances, and other things that are not inside the agent. The latter include perceptions, actions, rewards, time steps, and other things internal to the agent. Sutton holds that although researchers usually think in objective concepts when communicating and writing papers, more attention should now be paid to the experience generated as the agent interacts with the external world.

To show further why experience matters to agents, Sutton proposes that the growing emphasis on experience has passed through four stages: agenthood (Agenthood), reward (Reward), experiential state (Experiential State), and predictive knowledge (Predictive Knowledge). Through these four stages, AI has gradually come to have experience and has become more practical, learnable, and scalable.

1. Agenthood

Agenthood means having (or gaining) experience. Perhaps surprisingly, early AI systems did not have any experience. In the early period of AI (1954-1985), most AI systems were built only to solve problems or answer questions; they had no perception and did not act. Robots were an exception, but the conventional system had only a start state and a goal state, like the blocks to be stacked in the figure below.

To reach the desired goal state, the solution is a sequence of actions that is guaranteed to take the AI from the start state to the goal state. No perceiving or acting is involved, because the whole external world is known, deterministic, and closed, so there is no need for the AI to perceive and act. Researchers know what will happen, so they only need to construct a plan that solves the problem and have the AI execute it; humans already know that the plan will work.
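The kind of experience-free problem solving described above can be sketched as a search over a fully known blocks world. This is only an illustration: the state encoding (stacks listed bottom to top), the function names, and the use of breadth-first search are assumptions made here, not details from the talk.

```python
from collections import deque


def canonical(stacks):
    """Drop empty stacks and sort, so equivalent layouts compare equal."""
    return tuple(sorted(tuple(s) for s in stacks if s))


def successors(state):
    """Yield (action, next_state) pairs for moving one top block."""
    for i, src in enumerate(state):
        block = src[-1]
        rest = [list(s) for k, s in enumerate(state) if k != i]
        remaining = list(src[:-1])
        # Put the block on the table (pointless if it is already there).
        if remaining:
            yield (block, "table"), canonical(rest + [remaining, [block]])
        # Put the block on top of another stack.
        for j in range(len(rest)):
            new = [list(s) for s in rest]
            target = new[j][-1]
            new[j].append(block)
            yield (block, target), canonical(new + [remaining])


def plan(start, goal):
    """Breadth-first search from the start state to the goal state."""
    start, goal = canonical(start), canonical(goal)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for action, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, actions + [action]))
    return None  # goal unreachable


# C sits on A, B is on the table; the goal is the tower C, B, A (A on top).
steps = plan([["A", "C"], ["B"]], [["C", "B", "A"]])
print(steps)
```

Note that the planner never senses anything: the plan is computed entirely from the known model and then executed blindly, which is exactly why such systems need no experience.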

Over the past 30 years, AI research has focused on building agents. The shift is reflected in the standard AI textbooks, which now take the concept of the agent as their foundation. For example, the 1995 edition of "Artificial Intelligence: A Modern Approach" states that the unifying theme of the book is the concept of the intelligent agent (Intelligent Agent). In this view, the problem of AI is to describe and build agents that gain knowledge from the environment and take action. As research has progressed, the standard, modern approach has become to build an agent that interacts with the outside world. Sutton holds that AI can be viewed from this perspective.

2. Reward

Reward (Reward) describes the goal of AI in experiential terms. It is an effective method available today and can be used to formulate all of an AI's goals. It is also the approach put forward by Sutton and his collaborators.

Reward is currently regarded as a sufficient hypothesis: intelligence and its associated abilities can be understood as serving the maximization of reward. Hence the saying that reward is enough for an agent.

Sutton holds, however, that this idea needs to be challenged: on its face, reward does not seem enough to achieve intelligence. A reward is just a number, a scalar, and that seems insufficient to explain the goals of intelligence. Seen from outside the mind, a goal expressed as a single number looks too small, too reductive, even demeaning (to human goals). People like to imagine their goals as grander, such as taking care of their families, saving the world, world peace, or making the world a better place. Human goals seem more important than maximizing happiness and comfort.

Just as researchers have found drawbacks in formulating goals as reward, they have also found advantages. A goal formulated as reward looks too small, but people can make progress with it: such a goal can be defined well and clearly, and it is easy to learn. Formulating goals through experience more generally remains a challenge.

Sutton holds that formulating goals entirely in terms of experience is challenging. Looking back at history, AI was not originally interested in reward, and this is still largely true. Whether in early problem-solving systems or in the latest editions of AI textbooks, goals are still defined as a world state (World State) to be achieved rather than in experiential terms. Such a goal may still be a particular arrangement of "blocks", not a perceptual outcome to be achieved.

Of course, the latest textbooks already have chapters on reinforcement learning and mention that such AI uses a reward mechanism. Moreover, reward is already standard practice in formulating goals and can be realized with Markov decision processes. Even for researchers who criticize reward as failing to capture goals fully (such as Yann LeCun), reward is at least the "cherry" on top of the "cake" of intelligence, and it is very important. In the next two stages, Sutton explains how to understand the external world in terms of experience, but before that he first explains what experience refers to.

3. Interlude: What Is Experience?

As the sequence in the figure below shows (the data are not real), at each time step the system receives sensory signals and also emits signals of its own, its actions. The sensory signals may lead to certain actions, and those actions lead in turn to the next sensory signals. At every moment the system needs to attend to its recent actions and recent signals in order to decide what will happen next and what to do.

The figure shows the array of input and output signals of an agent as it runs. The first column is the time step; each step can be thought of as 0.1 or 0.01 seconds. The action-signal columns are binary, shown in gray and white. Then come the sensory-signal columns: the first four are binary values (also gray and white), the last four take one of four values, 0-3, shown as red, yellow, blue, and green, and the final column is a continuous variable representing reward. In the experiment, the researchers removed the numbers and left only the colors in order to look for patterns. Sutton holds that experience, in this sense, means the knowledge and understanding that arise from patterns found in sensorimotor experience data.
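A stream with this layout can be produced by a very small interaction loop. The sketch below is purely illustrative: the number of action bits, the random policy, the random reward, and the rule that the first sensory bit echoes the last action bit are all assumptions chosen to mimic the description, not data or code from the talk.

```python
import random
from dataclasses import dataclass


@dataclass
class Step:
    t: int
    action: tuple       # binary action signals
    observation: tuple  # four binary signals, then four signals in 0..3
    reward: float       # continuous reward signal


def toy_environment(action, rng):
    """Hypothetical world: the first sensory bit echoes the last action bit."""
    binary = (action[-1],) + tuple(rng.randint(0, 1) for _ in range(3))
    colours = tuple(rng.randint(0, 3) for _ in range(4))
    return binary + colours, rng.random()


def run(num_steps, seed=0):
    """Interact for num_steps and return the recorded experience stream."""
    rng = random.Random(seed)
    stream = []
    for t in range(num_steps):
        action = (rng.randint(0, 1), rng.randint(0, 1))  # random policy
        observation, reward = toy_environment(action, rng)
        stream.append(Step(t, action, observation, reward))
    return stream


stream = run(50)
# A pattern an observer could discover from the stream alone:
echoes = sum(s.observation[0] == s.action[-1] for s in stream)
print(f"{echoes} of {len(stream)} steps show the action echo")
```

Everything the agent could ever learn about this toy world has to be recovered from rows like these, which is the sense in which experience is the agent's only data.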

For this example, Sutton lists four typical patterns:

1. The last action bit is the same as the sensory signal that immediately follows it. If the action at a given time step is white, the first sensory signal after it is also white, and likewise for gray.

2. When a red pixel appears, a green pixel appears at the next time step. Looking over a wider range of the data, one finds that after red and green pixels appear in succession, a blue pixel appears every other time step.

3. The last three columns of data often contain long runs of a single color that stay unchanged. Once a color begins, it persists over many time steps and eventually forms stripes, such as long runs of red, green, or blue.

4. When the specific sensory data predicted by the AI are displayed, the predicted quantity is often not immediately observable. A return value (Return) is therefore added to the data to represent a prediction of the reward to come. The green bars in the box represent the upcoming reward, with more green than red. This is the current prediction of reward.

The specially shaded region represents the weighting function. Green and red bands appear inside this shaded region. Here, rewards that arrive sooner are given higher weight. As the return value moves through time, one can see how the colors and values of the prediction change against the actual reward. The return value is a prediction, and it can be learned from experience.

Sutton holds that this return value is learned not from events that have already fully occurred but from temporal-difference signals. The most important such signal is the value function. In this example the return value is in effect a value function, representing the sum of future rewards. For a more general and more complex form that can refer to future values, general value functions (General Value Functions, GVFs) can be used. A general value function can be about any signal, not just reward; it can use any temporal envelope, not just an exponential one; and it can be conditioned on any policy, so it can predict a great many, very wide-ranging things. Sutton adds that how hard a prediction is to compute depends on the form of what is predicted. When predicting with general value functions, the predicted quantity should be expressed in a form that is easy to learn and efficient to compute.
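The idea that a return-like prediction is learned from temporal-difference signals, and that the predicted signal need not be reward, can be sketched with tabular TD(0). This is an illustrative sketch rather than Sutton's implementation: the two-state loop, the signal names `reward` and `red`, and the discount and step-size values are all assumptions.

```python
def td0(transitions, cumulant, gamma=0.5, alpha=0.1, sweeps=500):
    """Tabular TD(0): learn v(s), the discounted sum of future cumulants."""
    values = {}
    for _ in range(sweeps):
        for state, signals, next_state in transitions:
            # Bootstrapped target: the signal now plus the discounted next prediction.
            target = cumulant(signals) + gamma * values.get(next_state, 0.0)
            td_error = target - values.get(state, 0.0)
            values[state] = values.get(state, 0.0) + alpha * td_error
    return values


# Hypothetical experience: state 0 -> 1 yields reward, state 1 -> 0 shows "red".
loop = [
    (0, {"reward": 1.0, "red": 0.0}, 1),
    (1, {"reward": 0.0, "red": 1.0}, 0),
]

# An ordinary value function predicts reward ...
reward_values = td0(loop, cumulant=lambda s: s["reward"])
# ... while a GVF-style prediction uses another signal as its cumulant.
red_values = td0(loop, cumulant=lambda s: s["red"])
print(reward_values, red_values)
```

With a discount of 0.5, the reward prediction settles near 4/3 for state 0 and 2/3 for state 1, the solution of v0 = 1 + 0.5·v1 and v1 = 0.5·v0. The same learner predicts the `red` signal simply by swapping the cumulant, which is the sense in which a general value function is "not just about reward".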

4. Experiential State

The word "state" usually brings to mind world state (World State), a term that belongs to the objective view. A state in this sense is a symbolic description of the objective world that matches the situation of the world itself, for example the positions of the blocks (C is on A). More recently, some researchers (such as Judea Pearl) proposed probabilistic graphical models, which represent a probability distribution over world states. Events such as "it is raining outside" and "the grass is wet" are related to each other probabilistically.

Another kind of state is the belief state (Belief State). In this view a state is a probability distribution over discrete world states. The corresponding approach is the POMDP (partially observable Markov decision process): there are hidden state variables, only some of which can be observed, and the problem is modeled as a Markov decision process.

All of the above are objective approaches, far removed from experience. They are how researchers first tried to describe the state of the world.

The experiential state is different. Sutton holds that experiential state is a state of the whole world defined in terms of experience. It is a summary of past experience that makes it possible to predict and control the experience to come.

This practice of constructing state from past experience and using it to predict the future already appears in research. For example, on Atari games, a standard reinforcement learning task, researchers construct the experiential state from the last four video frames and then predict future behavior. Some methods based on LSTM networks can also be regarded as predicting from an experiential state.

Returning to the experiential state: it can be updated recursively. The experiential state is a function that summarizes the entire past. Because the AI needs access to the experiential state at every moment in order to predict the next event, the update is recursive: at the current moment the agent has access only to the experiential state from the previous moment, which is itself a summary of everything that happened before. At the next moment, likewise, it has access only to the experiential state of the present moment, which is again a summary of everything that has happened in the past.
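The recursive update can be sketched as a function that takes only the previous state, the latest action, and the latest observation. The example below uses the "last four frames" idea mentioned for Atari as the summary; the function name, the tuple encoding, and the placeholder frames are assumptions made for illustration.

```python
def update_state(prev_state, action, observation, k=4):
    """Recursive update s_t = u(s_{t-1}, a_{t-1}, o_t).

    The state is the last k (action, observation) pairs, so the update
    never needs to look at the full history.
    """
    return (prev_state + ((action, observation),))[-k:]


# Hypothetical experience: alternating actions and placeholder frames.
history = [(t % 2, f"frame{t}") for t in range(10)]

state = ()  # empty summary before any experience
for action, observation in history:
    state = update_state(state, action, observation)

print(state)
```

After the loop, `state` equals the last four entries of `history` even though the update only ever saw the previous state and the newest pair. A learned update, for instance the recurrent cell of an LSTM, plays the same role with a richer summary.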

The figure below shows how an agent's experiential state is constructed. The red arrows indicate the agent's basic working signals: sensation, action, reward, and so on. The blue arrows indicate the flow of the experiential state (the representation): it is produced from perception by a component responsible for updating the experiential state at every time step. The updated state is then used to choose the policy for acting, or for other updates.

5. Predictive Knowledge

Knowledge such as "Joe Biden is the president of the United States" or "the Eiffel Tower is in Paris" describes the external, objective world and is not experiential. By contrast, knowledge such as "doing something is expected to take X hours" is experiential knowledge. There is a huge gap between experiential knowledge and objective knowledge, and bridging it is one of the challenges for AI research.

Past AI research tended to treat knowledge in objective terms, although some recent work looks at the problem from an experiential perspective. Early AI systems had no experience and so could not make predictions. More modern AI treats knowledge as something that exists objectively. Probabilistic graphical models are more advanced, but they mostly concern the probabilistic relationship between two simultaneous events, whereas prediction should be about sequences of events.

Predictions about sequences of events are knowledge with clear semantics. If something is predicted to happen, the AI can compare the prediction with what actually happens. Such predictive models can be regarded as a new kind of world knowledge: predictive knowledge. Within predictive knowledge, Sutton regards general value functions (General Value Function) and option models (Option Model) as the most advanced.

Sutton divides world knowledge into two categories: knowledge about the state of the world, and knowledge about transitions between world states. An example of the latter is a predictive model of the world. The predictive model here is not a Markov decision process or a difference equation in primitive form. Its states can be abstract states extracted from the experiential state. Because the predictions are conditioned on whole courses of behavior, in an option model the agent can choose to follow a policy and to stop it when a termination condition is met. Using the transition model of such an option, it is sometimes possible to predict the state that results after a course of action has been carried out. Take everyday life as an example: suppose someone is going into town. He or she will make predictions about the distance and time to the city center and, for behavior beyond a certain threshold (such as walking 10 minutes into the city), will further predict a resulting state, such as fatigue.
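The option-model idea, predicting where a whole course of behavior ends up and what it costs, can be sketched as follows. This is a simplification under stated assumptions: the one-dimensional "walk to town" world, the `Option` class, and the `option_model` function are hypothetical, and the model is obtained by rolling the option out in a known deterministic world, whereas an agent would have to learn such a model from experience.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Option:
    policy: Callable[[int], int]       # state -> action
    terminates: Callable[[int], bool]  # state -> should the option stop here?


def step(state, action):
    """Hypothetical 1-D world: each step moves the walker and costs one minute."""
    return state + action, -1.0


def option_model(option, start, gamma=1.0, max_steps=1000):
    """Predict (final_state, discounted_reward, duration) for running the option."""
    state, total, discount, steps = start, 0.0, 1.0, 0
    while not option.terminates(state) and steps < max_steps:
        state, reward = step(state, option.policy(state))
        total += discount * reward
        discount *= gamma
        steps += 1
    return state, total, steps


# "Walk into town": keep stepping forward until position 10 is reached.
walk_to_town = Option(policy=lambda s: 1, terminates=lambda s: s >= 10)

final_state, cost, minutes = option_model(walk_to_town, start=0)
print(final_state, cost, minutes)
```

The prediction is about the outcome of the whole behavior (arriving in town after ten minutes of walking), not about a single primitive step, which is what lets knowledge of this kind be chained into longer plans.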

With such models of extended behavior, knowledge can also span very large scales. For example, from a course of behavior one predicts a world state, then from that state one predicts the outcome of the next course of behavior, and so on.

Summing up the role that experience has played in the development of AI research, Sutton says that experience is the foundation of world knowledge. Human beings know and influence the world through perception and action; experience is the only way they obtain information and take action, and it is indispensable. Unfortunately, because experience is so subjective and personal, people still do not like to think and express themselves in experiential terms. Experience is too alien, counterintuitive, fleeting, and complex. It is also subjective and private, so communicating it to others, or verifying it, is almost impossible.

Sutton holds that experience is very important to AI for several reasons. First, experience comes from the AI's ordinary operation, so it is obtained automatically and at no cost. At the same time, the AI field has a great deal of data available for computation, and experience offers a path to understanding the world: if any fact about the world is experiential, then the AI can learn about the world from experience and verify what it has learned through experience.

边栏推荐

- [redis] basic operation of key

- Research Report on the overall scale, major producers, major regions, products and application segments of active aluminum chloride in the global market in 2022

- Tomb. Weekly update of Finance (February 14-20)

- Three sides of the headline: tostring(), string Valueof, (string) forced rotation. What is the difference

- Select article (039) - when the button is clicked, event What is target?

- Kubernetes deployment language

- Mysql5.7 add SSL authentication

- When Huawei order service is called to verify the token interface, connection reset is returned

- 对Integer进行等值比较时踩到的一个坑

- Perfect partner of ebpf: cilium connected to cloud native network

猜你喜欢

Comprehensive learning notes for intermediate network engineer in soft test (nearly 40000 words)

Go language - Method

Gmail: how to track message reading status

Gather high-quality ar application developers, and help the AR field prosper with technology

加密市场「大逃杀」:清算、抛售、挤兑

WEB3 安全系列 || 攻击类型和经验教训

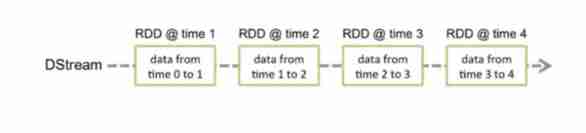

Dstream and its basic operating principle

Mid year summary of 33 year old programmer

What has paileyun done to embrace localization and promote industrial Internet?

20000 words + 30 pictures | what's the use of chatting about MySQL undo log, redo log and binlog?

随机推荐

Mid year summary of 33 year old programmer

Write commodity table with JSP

Principles and examples of PHP deserialization vulnerability

Go language - structure

[deep learning] was blasted by pytorch! Google abandons tensorflow and bets on JAX

Go language - Method

建立自己的网站(4)

What is Objective-C ID in swift- What is the equivalent of an Objective-C id in Swift?

GO语言-指针

Quod AI: find the code you need faster

Analysis report on competition trend and development scale of China's heavy packaging industry 2022-2028 Edition

Mysql5.7 add SSL authentication

Soft test intermediate network engineering test site

高级性能测试系列《1.思维差异、性能的概念、性能测试》

高级性能测试系列《6.问题解答、应用的发展》

Description of new features and changes in ABP Framework version 5.3.0

The key points of the article get to solid principle

Blazor overview and routing

Turn off WordPress auto thumbnail

2022awe opened in March, and Hisense conference tablet was shortlisted for the review of EPLAN Award