Digital Intelligence Learning | Exploring the Path to Building a Stream-Batch Unified Real-Time Data Warehouse

2022-06-28 02:37:00 [Shulan Technology (dtwave)]

About this column

Shulan Technology has launched a new column, "Tech School+", which focuses on cutting-edge technology, tracks industry trends, and shares R&D experience and application practice from the front line.

This installment comes from Liumu, an R&D expert at Shulan Technology, and explores how to build a real-time data warehouse that unifies stream and batch processing.

Introduction

In the early stages of data warehouse construction, enterprise business scenarios were mostly batch-oriented, and offline data warehouses were built with mature offline technologies. Some real-time scenarios did exist, but most of them were converted into near-real-time processing, for example jobs scheduled every few minutes.

As enterprise business data grew rapidly, the shortcomings of the traditional offline data warehouse became apparent: near-real-time processing could no longer keep up with business demands, and enterprises began to build real-time data warehouses.

In building real-time data warehouses, the concept of "stream-batch unification" has been widely accepted across the industry. The idea is to use a single codebase for both stream computation and batch computation over big data, so that the processing logic and its results stay consistent. The approach has been validated in many business scenarios and is gradually being put into production.

1. Evolution of the Stream-Batch Unification Concept

Looking back, the technical architecture of real-time data warehouses has gone through three main stages: the Lambda architecture, the Kappa architecture, and the Kappa architecture built on a data lake.

In the Lambda architecture, batch processing and stream processing are separate. Offline data is collected and processed by periodically scheduled jobs, and intermediate data can be persisted, while real-time stream processing delivers processed data quickly. Batch processing guarantees accuracy, stream processing guarantees timeliness, and the architecture as a whole is stable.

On the other hand, the drawbacks of the Lambda architecture are just as obvious. Because it runs two different computing engines, one for batch and one for streaming, the same business scenario needs two codebases, which can easily produce inconsistent results. Running two engines also drives up both development and operations costs.

To solve these problems, the industry proposed stream-batch unification: a computing engine that combines the low latency of stream computing with the high throughput and stability of batch computing, exposes one programming interface for both batch and stream computation, and keeps the underlying execution logic the same, so that both the processing and its results remain consistent.
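To make "one programming interface, consistent results" concrete, here is a minimal sketch in plain Python (not Flink). It assumes a hypothetical record shape of `(key, amount)` and a per-key sum as the business logic. The single `update` function is shared by a batch runner, which consumes a bounded dataset and returns one final result, and a stream runner, which emits an updated result after every event. Because both modes call the same logic, the last streaming result equals the batch result.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, int]  # hypothetical record shape: (key, amount)


def update(state: Dict[str, int], event: Event) -> Dict[str, int]:
    """The single piece of business logic shared by both modes: sum per key."""
    key, amount = event
    state[key] += amount
    return state


def run_batch(events: Iterable[Event]) -> Dict[str, int]:
    """Batch mode: consume a bounded dataset and return the final result once."""
    state: Dict[str, int] = defaultdict(int)
    for event in events:
        update(state, event)
    return dict(state)


def run_stream(events: Iterable[Event]) -> Iterator[Dict[str, int]]:
    """Stream mode: emit an updated snapshot of the result after every event."""
    state: Dict[str, int] = defaultdict(int)
    for event in events:
        update(state, event)
        yield dict(state)


if __name__ == "__main__":
    events = [("a", 1), ("b", 2), ("a", 3)]
    batch_result = run_batch(events)
    *_, last_stream_result = run_stream(events)
    # Same logic, same input: the two modes agree.
    print(batch_result == last_stream_result)  # True
```

In a Lambda setup, `update` would effectively exist twice, once per engine, and the two copies could drift apart; keeping a single definition is what removes that risk.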

Stream-batch unification shows up in four main aspects:

Unified metadata: offline and real-time metadata are stored in one place.

Unified data storage: data produced by offline and real-time computation is stored in one place, avoiding inconsistent data, duplicate storage, and repeated computation.

Unified computing engine: offline and real-time computation run on the same engine, and one set of logic or code covers both scenarios.

Unified semantics: the development layer is unified and designed from the user's point of view, so that data development is convenient, efficient, and has a low barrier to entry. Roughly, this falls into three categories: unified development interfaces, such as a single SQL dialect or SDK; development based on business or logical models, such as low-code or no-code tools; and a unified feature-engineering process for AI in both stream and batch computing.

2. Real-Time Data Warehouse Architecture Based on Stream-Batch Unification

Once the concept of stream-batch unification was proposed, the Kappa architecture became mainstream. The Kappa architecture unifies stream and batch: data is buffered in a message queue, and result data is stored in a key-value or search store (HBase/ES) or an OLAP database, where business teams can access and analyze it in real time. Data developers only need to write one set of processing logic, which keeps data consistent while reducing resource consumption and maintenance costs.
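The Kappa flow just described can be sketched as follows. This is an illustrative in-memory simulation, not a Kafka or Flink API: a hypothetical `Log` class stands in for a message-queue topic, a plain dict stands in for the HBase/ES result store, and `consume` is the single set of processing logic. In Kappa, reprocessing after a logic change means replaying the retained log from an earlier offset into a fresh result table, which only works as far back as the queue actually retains data.

```python
from typing import Callable, Dict, List, Tuple

Record = Tuple[str, int]  # hypothetical record shape: (business key, value)


class Log:
    """In-memory append-only log standing in for a message-queue topic (illustration only)."""

    def __init__(self) -> None:
        self._records: List[Record] = []

    def append(self, record: Record) -> int:
        """Append a record and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def read_from(self, offset: int) -> List[Record]:
        """Return every record from `offset` onward, in append order."""
        return self._records[offset:]


def consume(log: Log, start: int, sink: Dict[str, int],
            logic: Callable[[int], int]) -> int:
    """Apply one set of processing logic to the log from `start`, accumulating
    per-key results into a KV-style sink. Returns the next offset to read."""
    records = log.read_from(start)
    for key, value in records:
        sink[key] = sink.get(key, 0) + logic(value)
    return start + len(records)


if __name__ == "__main__":
    log = Log()
    for rec in [("u1", 1), ("u2", 2), ("u1", 3)]:
        log.append(rec)

    # Normal operation: the job consumes incrementally and tracks its offset.
    serving_v1: Dict[str, int] = {}
    offset = consume(log, 0, serving_v1, lambda v: v)
    log.append(("u2", 5))
    offset = consume(log, offset, serving_v1, lambda v: v)
    print(serving_v1)  # {'u1': 4, 'u2': 7}

    # Logic change: replay the retained log from offset 0 into a fresh table.
    serving_v2: Dict[str, int] = {}
    consume(log, 0, serving_v2, lambda v: v * 10)
    print(serving_v2)  # {'u1': 40, 'u2': 70}
```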

However, this architecture has flaws of its own. Data sitting in a message queue cannot be queried ad hoc, and the queue itself has very high performance and storage requirements. Because the entire pipeline depends on the message queue, out-of-order data can easily lead to incorrect results. In addition, a message queue's ability to replay historical data is weaker than that of offline storage.

As data lakes, Flink, and related big data technologies matured, the Kappa architecture based on Flink plus a data lake has become the mainstream architecture for stream-batch unified real-time data warehouses.

Flink CDC writes both the full (snapshot) data and the incremental raw data into the ODS layer, and a data lake provides unified storage. From there, the entire processing pipeline can be completed by writing a single set of code on the Flink engine to compute over the data in the lake, which keeps the data consistent and lowers operations costs. In addition, some data lake formats, such as Iceberg, can be queried directly by engines like Presto/Trino, which quickly enables ad hoc analysis of real-time data.
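The full-plus-incremental hand-off into the ODS layer can be pictured with a small sketch. It uses a deliberately simplified change-event shape `(op, primary_key, row)` with hypothetical op labels `INSERT`/`UPDATE`/`DELETE`; this is not the actual Flink CDC record format. The snapshot phase loads existing rows keyed by primary key, and the incremental phase replays change events in order on top of it. Writing by primary key (upsert) is what lets an event that overlaps the snapshot be applied again without creating duplicates.

```python
from typing import Dict, Iterable, Optional, Tuple

Row = Dict[str, object]
Change = Tuple[str, int, Optional[Row]]  # simplified: (op, primary key, row after change)


def apply_snapshot(table: Dict[int, Row], snapshot: Iterable[Tuple[int, Row]]) -> None:
    """Full phase: load every existing source row, keyed by primary key."""
    for pk, row in snapshot:
        table[pk] = row


def apply_changes(table: Dict[int, Row], changes: Iterable[Change]) -> None:
    """Incremental phase: replay change events in order on top of the snapshot."""
    for op, pk, row in changes:
        if op == "DELETE":
            table.pop(pk, None)
        elif op in ("INSERT", "UPDATE"):
            # Upsert by primary key, so replaying an overlapping event is harmless.
            table[pk] = row
        else:
            raise ValueError(f"unknown op: {op}")


if __name__ == "__main__":
    ods: Dict[int, Row] = {}
    apply_snapshot(ods, [(1, {"name": "a"}), (2, {"name": "b"})])
    apply_changes(ods, [
        ("UPDATE", 1, {"name": "a2"}),
        ("DELETE", 2, None),
        ("INSERT", 3, {"name": "c"}),
    ])
    print(ods)  # {1: {'name': 'a2'}, 3: {'name': 'c'}}
```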

3. The Shuqi Platform: A Stream-Batch Unified Real-Time Computing Platform

Shuqi, the data middle platform construction suite from Shulan Technology, provides a one-stop stream-batch unified computing platform. Its core modules, including cluster management, metadata management, data development, operations and release, and visual monitoring and alerting, help enterprises quickly build a real-time data warehouse platform.

Currently, the Shuqi platform uses a Flink + Iceberg solution: table metadata is stored centrally in the Hive Metastore, and data files are stored centrally on HDFS. Because the platform is fully managed, users do not need to understand the architecture of the underlying storage and computing clusters and can focus on their own business logic.

Core features of the Shuqi platform:

- Plug-in design that adapts to big data computing clusters from different vendors.

- A rich library of nodes, so support for new data sources can be added quickly.

- A wizard for creating real-time ETL jobs, with seamless hand-off between full and incremental data synchronization and several primary-key conflict modes on the write side.

- A range of built-in DDL templates that reduce development work, avoid mistakes from typing table schemas by hand, and let developers focus on the task itself.

- Online SQL development with built-in SQL formatting, semantic checks, and syntax highlighting, which hides the underlying native code framework and lowers the barrier to entry.

- Integrated Flink Web UI for real-time online monitoring of job status and fast, precise localization of failures.

- Visual drag-and-drop configuration of jobs, so the workflow and its dependencies are clear.
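The feature list above mentions several primary-key conflict modes on the write side without naming them. The sketch below illustrates three common policies under hypothetical names (`OVERWRITE`, `IGNORE`, `ERROR`); these are assumptions for illustration, not the platform's actual options, and a dict stands in for the target table.

```python
from enum import Enum
from typing import Dict


class ConflictMode(Enum):
    """Hypothetical primary-key conflict policies (names are illustrative)."""
    OVERWRITE = "overwrite"  # replace the existing row (upsert semantics)
    IGNORE = "ignore"        # keep the existing row and drop the incoming one
    ERROR = "error"          # reject the write


class DuplicateKeyError(Exception):
    """Raised when a write hits an existing key under ConflictMode.ERROR."""


def write(table: Dict[int, dict], pk: int, row: dict, mode: ConflictMode) -> None:
    """Write one row into `table`, resolving a primary-key clash according to `mode`."""
    if pk not in table:
        table[pk] = row  # no conflict: every mode simply inserts
        return
    if mode is ConflictMode.OVERWRITE:
        table[pk] = row
    elif mode is ConflictMode.IGNORE:
        return
    else:
        raise DuplicateKeyError(f"primary key {pk} already exists")


if __name__ == "__main__":
    target: Dict[int, dict] = {1: {"v": "old"}}
    write(target, 1, {"v": "new"}, ConflictMode.IGNORE)
    print(target[1])  # {'v': 'old'}
    write(target, 1, {"v": "new"}, ConflictMode.OVERWRITE)
    print(target[1])  # {'v': 'new'}
```

`OVERWRITE` is the natural fit for CDC-style synchronization, where later change events should win; `IGNORE` and `ERROR` suit append-only loads where a clash signals duplicate or bad input.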

To build a real-time data warehouse on the Shuqi platform, first use real-time synchronization tasks to collect data from the various sources into Iceberg. Then create Flink SQL or Flink jobs on the real-time development platform to compute and process the data, and write the results to HBase, ES, MySQL, or a similar store. Finally, create a data service API for business applications to call.


边栏推荐

- 【历史上的今天】6 月 13 日:分组交换网路的“亲子纠纷”;博弈论创始人出生;交互式电视初现雏形

- 数智学习 | 流批一体实时数仓建设路径探索

- Graduation summary

- Jenkins - Pipeline concept and creation method

- 【历史上的今天】6 月 15 日:第一个手机病毒;AI 巨匠司马贺诞生;Chromebook 发布

- 《低代码解决方案》——覆盖工单、维修和财务全流程的数字化售后服务低代码解决方案

- 数据治理与数据标准

- KVM相关

- 【历史上的今天】6 月 2 日:苹果推出了 Swift 编程语言;电信收购联通 C 网;OS X Yosemite 发布

- SQL injection bypass (2)

猜你喜欢

【历史上的今天】6 月 16 日:甲骨文成立;微软 MSX 诞生;快速傅里叶变换发明者出生

How to realize red, green and yellow traffic lights in ros+gazebo?

【历史上的今天】5 月 31 日:Amiga 之父诞生;BASIC 语言的共同开发者出生;黑莓 BBM 停运

ScheduledThreadPoolExecutor源码解读(二)

SQL 注入绕过(五)

ROS+Gazebo中红绿黄交通灯如何实现?

CVPR22收录论文|基于标签关系树的层级残差多粒度分类网络

【历史上的今天】6 月 23 日:图灵诞生日;互联网奠基人出生;Reddit 上线

关于st-link usb communication error的解决方法

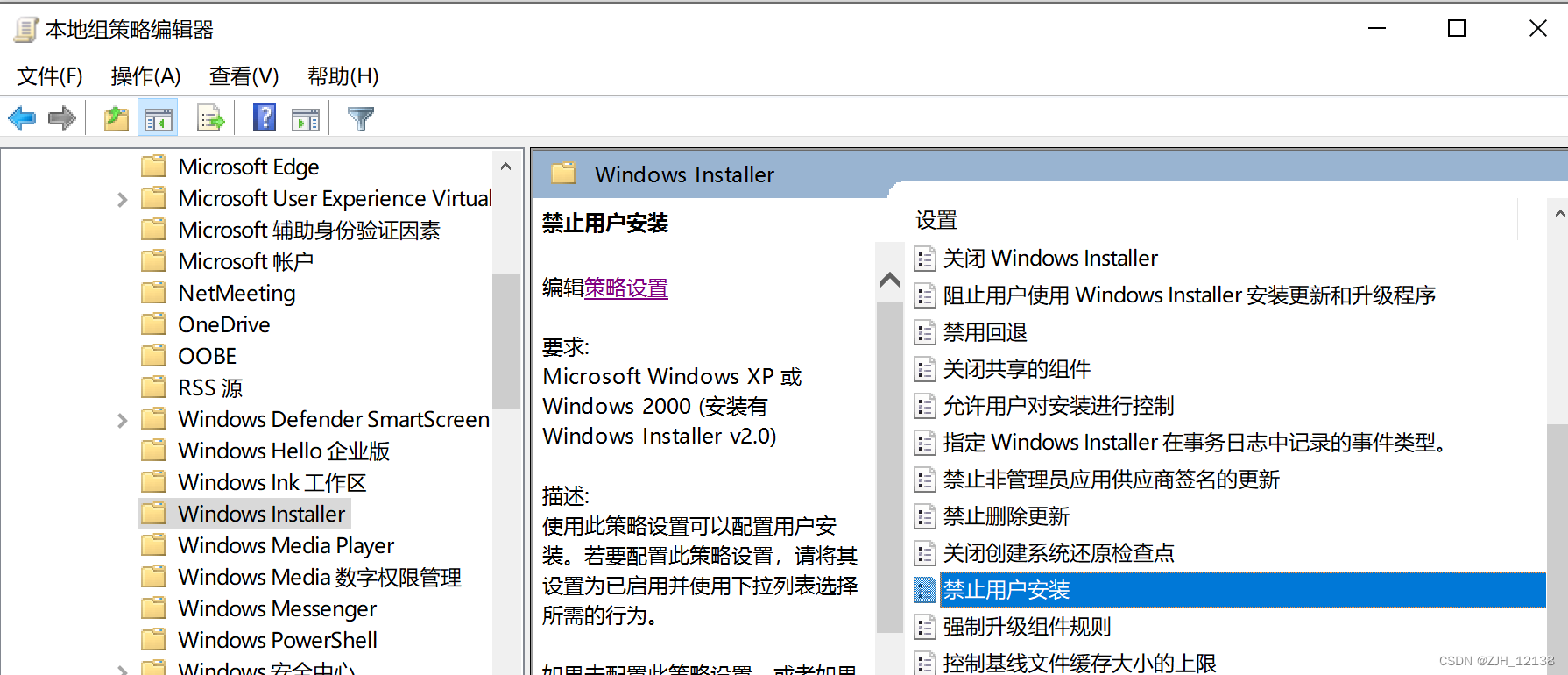

系统管理员设置了系统策略,禁止进行此安装。解决方案

随机推荐

STM32F103的11个定时器

Mysql查询相关知识(进阶七:子查询

How technicians become experts in technical field

【历史上的今天】6 月 11 日:蒙特卡罗方法的共同发明者出生;谷歌推出 Google 地球;谷歌收购 Waze

MySQL优化小技巧

ROS+Gazebo中红绿黄交通灯如何实现?

启牛开户安全吗?怎么线上开户?

Prometheus 2.27.0 新特性

Scoped attribute and lang attribute in style

数据清洗工具flashtext,效率直接提升了几十倍数

MySQL interview question set

Jenkins - groovy postbuild plug-in enriches build history information

贪吃蛇 C语言

Domain Name System

Prometheus 2.27.0 new features

Résumé de la graduation

关于st-link usb communication error的解决方法

Jenkins - 内置变量访问

云原生(三十) | Kubernetes篇之应用商店-Helm

11 timers for STM32F103