当前位置:网站首页>Scripy framework learning

Scripy framework learning

2022-07-04 13:42:00 【Hua Weiyun】

Case study jd Book crawler

jd It's easier to crawl the book website , Mainly data extraction

spider Code :

import scrapyfrom jdbook.pipelines import JdbookPipelineimport refrom copy import deepcopyclass JdbookspiderSpider(scrapy.Spider): name = 'jdbookspider' allowed_domains = ['jd.com'] start_urls = ['https://book.jd.com/booksort.html'] # Process the data of the classification page def parse(self, response): # Here, with the help of selenium First visit jd Book net , Because direct get request jdbook Get just a bunch js Code , Nothing useful html Elements , adopt selenium Normal access to web pages , take page_source( Is the content of the current web page ,selenium Properties provided ) Return to spider Data processing # Handle the list page of the big category response_data, driver = JdbookPipeline.gain_response_data(url='https://book.jd.com/booksort.html') driver.close() item = {} # because selenium Back to page_source Is string , So you can't use xpath, Using regular ( You can also use bs4 Then use regular ) middle_group_link = re.findall('<em>.*?<a href="(.*?)">.*?</a>.*?</em>', response_data, re.S) big_group_name = re.findall('<dt>.*?<a href=".*?">(.*?)</a>.*?<b>.*?</b>.*?</dt>', response_data, re.S) big_group_link = re.findall('<dt>.*?<a href=".*?channel.jd.com/(.*?)\.html">.*?</a>.*?<b>.*?</b>.*?</dt>', response_data, re.S) middle_group_name = re.findall('<em>.*?<a href=".*?">(.*?)</a>.*?</em>', response_data, re.S) for i in range(len(middle_group_link)): var = str(middle_group_link[i]) var1 = var[:var.find("com") + 4] var2 = var[var.find("com") + 4:] var3 = var2.replace("-", ",").replace(".html", "") var_end = "https:" + var1 + "list.html?cat=" + var3 for j in range(len(big_group_name)): temp_ = var_end.find(str(big_group_link[j]).replace("-", ",")) if temp_ != -1: item["big_group_name"] = big_group_name[j] item["big_group_link"] = big_group_link[j] item["middle_group_link"] = var_end item["middle_group_name"] = middle_group_name[i] # Request the detail page of the small group under the large group if var_end is not None: yield scrapy.Request( var_end, callback=self.parse_detail, meta={"item": deepcopy(item)} ) # Deal with the data of the book list page def parse_detail(self, response): print(response.url) item = response.meta["item"] detail_name_list = re.findall('<div class="gl-i-wrap">.*?<div class="p-name">.*?<a target="_blank" title=".*?".*?<em>(.*?)</em>', response.body.decode(), re.S) detail_content_list = re.findall( '<div class="gl-i-wrap">.*?<div class="p-name">.*?<a target="_blank" title="(.*?)"', response.body.decode(), re.S) detail_link_list = re.findall('<div class="gl-i-wrap">.*?<div class="p-name">.*?<a target="_blank" title=".*?" href="(.*?)"', response.body.decode(), re.S) detail_price_list = re.findall('<div class="p-price">.*?<strong class="J_.*?".*?data-done="1".*?>.*?<em>¥</em>.*?<i>(.*?)</i>', response.body.decode(), re.S) page_number_end = re.findall('<span class="fp-text">.*?<b>.*?</b>.*?<em>.*?</em>.*?<i>(.*?)</i>.*?</span>', response.body.decode(), re.S)[0] print(len(detail_price_list)) print(len(detail_name_list)) for i in range(len(detail_name_list)): detail_link = detail_link_list[i] item["detail_name"] = detail_name_list[i] item["detail_content"] = detail_content_list[i] item["detail_link"] = "https:" + detail_link item["detail_price"] = detail_price_list[i] yield item # Page turning for i in range(int(page_number_end)): next_url = item["middle_group_link"] + "&page=" + str(2*(i+1) + 1) + "&s=" + str(60*(i+1)) + "&click=0" yield scrapy.Request( next_url, callback=self.parse_detail, meta={"item": deepcopy(item)} )pipeline Code :

# Define your item pipelines here## Don't forget to add your pipeline to the ITEM_PIPELINES setting# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html# useful for handling different item types with a single interfaceimport csvfrom itemadapter import ItemAdapterfrom selenium import webdriverimport timeclass JdbookPipeline: # Write data to csv file def process_item(self, item, spider): with open('./jdbook.csv', 'a+', encoding='utf-8') as file: fieldnames = ['big_group_name', 'big_group_link', 'middle_group_name', 'middle_group_link', 'detail_name', 'detail_content', 'detail_link', 'detail_price'] writer = csv.DictWriter(file, fieldnames=fieldnames) writer.writerow(item) return item def open_spider(self, spider): with open('./jdbook.csv', 'w+', encoding='utf-8') as file: fieldnames = ['big_group_name', 'big_group_link', 'middle_group_name', 'middle_group_link', 'detail_name', 'detail_content', 'detail_link', 'detail_price'] writer = csv.DictWriter(file, fieldnames=fieldnames) writer.writeheader() # Normal access provided jdbook Method , With the help of selenium @staticmethod def gain_response_data(url): drivers = webdriver.Chrome("E:\python_study\spider\data\chromedriver_win32\chromedriver.exe") drivers.implicitly_wait(2) drivers.get(url) drivers.implicitly_wait(2) time.sleep(2) # print(tb_cookie) return drivers.page_source, driversCase study Dangdang Book crawler

It is also easier to climb Dangdang net , But here we need to combine scrapy-redis To achieve distributed crawling data

import urllibfrom copy import deepcopyimport scrapyfrom scrapy_redis.spiders import RedisSpiderimport re# No longer inheritance Spider class , It's inherited from scrapy_redis Of RedisSpider class class DangdangspiderSpider(RedisSpider): name = 'dangdangspider' allowed_domains = ['dangdang.com'] # http://book.dangdang.com/ # meanwhile ,start_urls No longer use , Instead, define a redis_key, spider To crawl request Object takes this value as key, url Store values in redis in ,spider Climb from redis In order to get redis_key = "dangdang" # Deal with book classification data def parse(self, response): div_list = response.xpath("//div[@class='con flq_body']/div") for div in div_list: item = {} item["b_cate"] = div.xpath("./dl/dt//text()").extract() item["b_cate"] = [i.strip() for i in item["b_cate"] if len(i.strip()) > 0] # Intermediate classification and grouping if len(item["b_cate"]) > 0: div_data = str(div.extract()) dl_list = re.findall('''<dl class="inner_dl" ddt-area="\d+" dd_name=".*?">.*?<dt>(.*?)</dt>''', div_data, re.S) for dl in dl_list: if len(str(dl)) > 100: dl = re.findall('''.*?title="(.*?)".*?''', dl, re.S) item["m_cate"] = str(dl).replace(" ", "").replace("\r\n", "") # Small category grouping a_link_list = re.findall( '''<a class=".*?" href="(.*?)" target="_blank" title=".*?" nname=".*?" ddt-src=".*?">.*?</a>''', div_data, re.S) a_cate_list = re.findall( '''<a class=".*?" href=".*?" target="_blank" title=".*?" nname=".*?" ddt-src=".*?">(.*?)</a>''', div_data, re.S) print(a_cate_list) print(a_link_list) for a in range(len(a_link_list)): item["s_href"] = a_link_list[a] item["s_cate"] = a_cate_list[a] if item["s_href"] is not None: yield scrapy.Request( item["s_href"], callback=self.parse_book_list, meta={"item": deepcopy(item)} ) # Processing book list page data def parse_book_list(self, response): item = response.meta["item"] li_list = response.xpath("//ul[@class='bigimg']/li") # todo improvement , Do different processing for different book list pages # if li_list is None: # print(True) for li in li_list: item["book_img"] = li.xpath('./a[1]/img/@src').extract_first() if item["book_img"] is None: item["book_img"] = li.xpath("//ul[@class='list_aa ']/li").extract_first() item["book_name"] = li.xpath("./p[@class='name']/a/@title").extract_first() item["book_desc"] = li.xpath("./p[@class='detail']/text()").extract_first() item["book_price"] = li.xpath(".//span[@class='search_now_price']/text()").extract_first() item["book_author"] = li.xpath("./p[@class='search_book_author']/span[1]/a/text()").extract_first() item["book_publish_date"] = li.xpath("./p[@class='search_book_author']/span[2]/text()").extract_first() item["book_press"] = li.xpath("./p[@class='search_book_author']/span[3]/a/text()").extract_first() next_url = response.xpath("//li[@class='next']/a/@href").extract_first() yield item if next_url is not None: next_url = urllib.parse.urljoin(response.url, next_url) yield scrapy.Request( next_url, callback=self.parse_book_list, meta={"item": item} )pipeline Code :

import csvfrom itemadapter import ItemAdapterclass DangdangbookPipeline: # Writes data to csv In file def process_item(self, item, spider): with open('./dangdangbook.csv', 'a+', encoding='utf-8', newline='') as file: fieldnames = ['b_cate', 'm_cate', 's_cate', 's_href', 'book_img', 'book_name', 'book_desc', 'book_price', 'book_author', 'book_publish_date', 'book_press'] writer = csv.DictWriter(file, fieldnames=fieldnames) writer.writerow(item) return item def open_spider(self, spider): with open('./dangdangbook.csv', 'w+', encoding='utf-8', newline='') as file: fieldnames = ['b_cate', 'm_cate', 's_cate', 's_href', 'book_img', 'book_name', 'book_desc', 'book_price', 'book_author', 'book_publish_date', 'book_press'] writer = csv.DictWriter(file, fieldnames=fieldnames) writer.writeheader()settings Code :

BOT_NAME = 'dangdangbook'SPIDER_MODULES = ['dangdangbook.spiders']NEWSPIDER_MODULE = 'dangdangbook.spiders'# need scrapy-redis De duplication function of , Here quote DUPEFILTER_CLASS = "scrapy_redis.dupefilter.RFPDupeFilter"# And the scheduler SCHEDULER = "scrapy_redis.scheduler.Scheduler"SCHEDULER_PERSIST = True# LOG_LEVEL = 'WARNING'# Set up redis Service address of REDIS_URL = 'redis://127.0.0.1:6379'# Crawl responsibly by identifying yourself (and your website) on the user-agentUSER_AGENT = 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36'# Obey robots.txt rulesROBOTSTXT_OBEY = FalseITEM_PIPELINES = { 'dangdangbook.pipelines.DangdangbookPipeline': 300,}crontab Timing execution

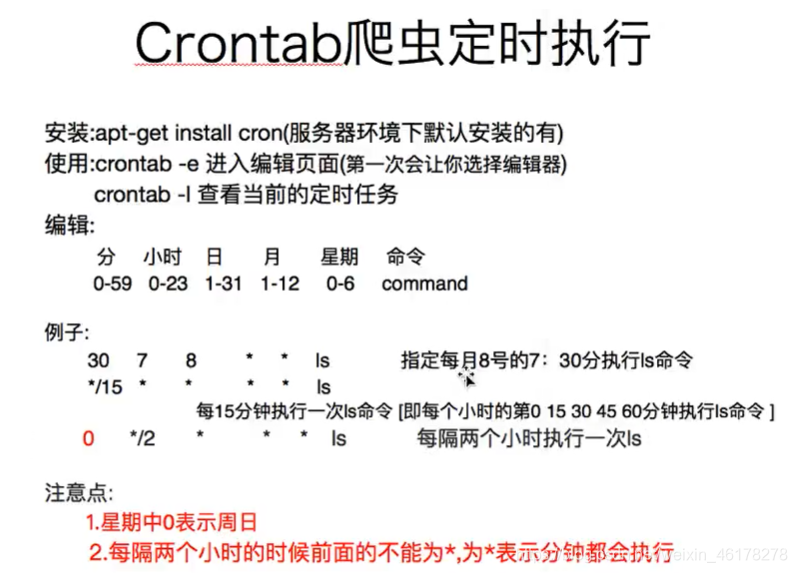

All the above are in Linux Direct operation of the platform crontab.

stay python In this environment, we can use pycrontab To operate crontab To set a scheduled task .

Add

Self defined excel To export file format code :

from scrapy.exporters import BaseItemExporterimport xlwtclass ExcelItemExporter(BaseItemExporter): def __init__(self, file, **kwargs): self._configure(kwargs) self.file = file self.wbook = xlwt.Workbook() self.wsheet = self.wbook.add_sheet('scrapy') self.row = 0 def finish_exporting(self): self.wbook.save(self.file) def export_item(self, item): fields = self._get_serialized_fields(item) for col, v in enumerate(x for _, x in fields): self.wsheet.write(self.row, col, v) self.row += 1 边栏推荐

- Efficient! Build FTP working environment with virtual users

- Use fail2ban to prevent password attempts

- "Pre training weekly" issue 52: shielding visual pre training and goal-oriented dialogue

- Cors: standard scheme of cross domain resource request

- CTF竞赛题解之stm32逆向入门

- Personalized online cloud database hybrid optimization system | SIGMOD 2022 selected papers interpretation

- 7、 Software package management

- Building intelligent gray-scale data system from 0 to 1: Taking vivo game center as an example

- Is the outdoor LED screen waterproof?

- 实战:fabric 用户证书吊销操作流程

猜你喜欢

上汽大通MAXUS正式发布全新品牌“MIFA”,旗舰产品MIFA 9正式亮相!

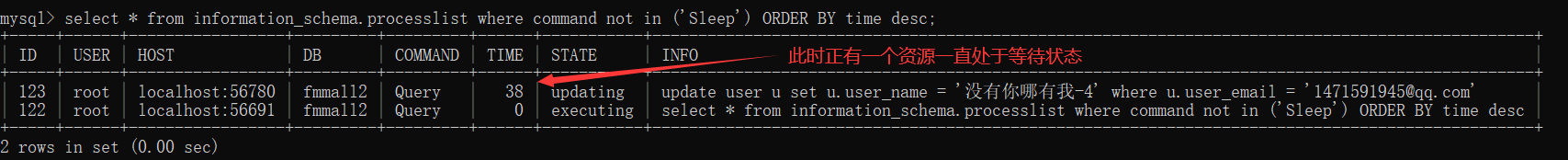

数据库锁表?别慌,本文教你如何解决

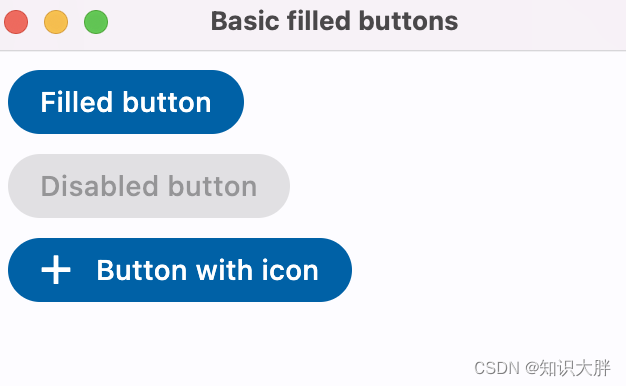

Flet教程之 03 FilledButton基础入门(教程含源码)(教程含源码)

N++ is not reliable

干货整理!ERP在制造业的发展趋势如何,看这一篇就够了

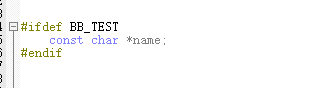

MDK在头文件中使用预编译器时,#ifdef 无效的问题

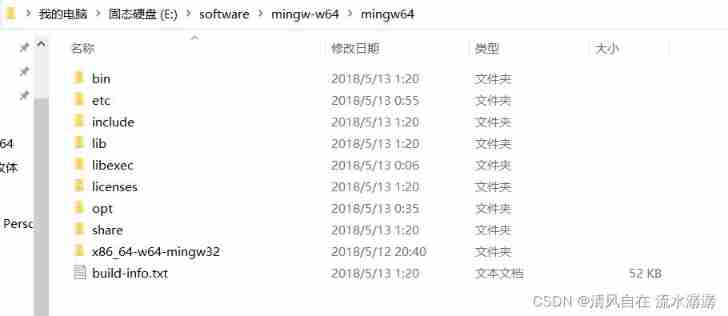

After installing vscode, the program runs (an include error is detected, please update the includepath, which has been solved for this translation unit (waveform curve is disabled) and (the source fil

2022KDD预讲 | 11位一作学者带你提前解锁优秀论文

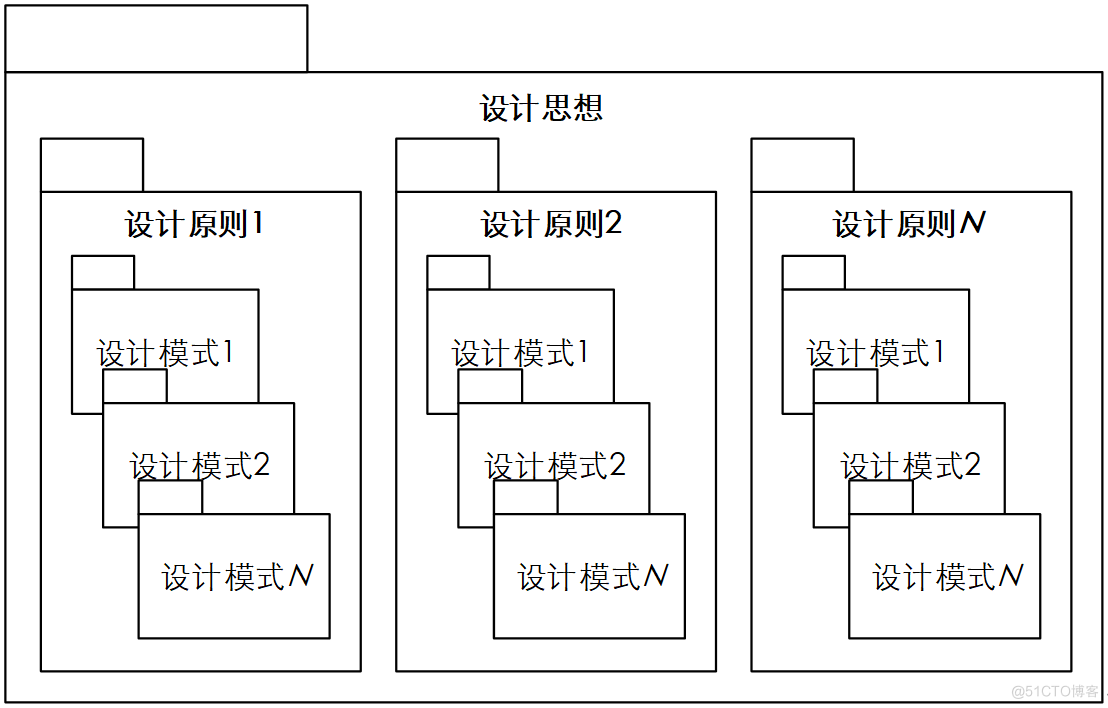

The only core indicator of high-quality software architecture

When MDK uses precompiler in header file, ifdef is invalid

随机推荐

Cann operator: using iterators to efficiently realize tensor data cutting and blocking processing

Iptables foundation and Samba configuration examples

七、软件包管理

2022年中国移动阅读市场年度综合分析

Deploy halo blog with pagoda

Golang sets the small details of goproxy proxy proxy, which is applicable to go module download timeout and Alibaba cloud image go module download timeout

Personalized online cloud database hybrid optimization system | SIGMOD 2022 selected papers interpretation

三星量产3纳米产品引台媒关注:能否短期提高投入产出率是与台积电竞争关键

WPF双滑块控件以及强制捕获鼠标事件焦点

Read the BGP agreement in 6 minutes.

2022KDD预讲 | 11位一作学者带你提前解锁优秀论文

CTF竞赛题解之stm32逆向入门

[AI system frontier dynamics, issue 40] Hinton: my deep learning career and research mind method; Google refutes rumors and gives up tensorflow; The apotheosis framework is officially open source

Using nsproxy to forward messages

C语言职工管理系统

C语言小型商品管理系统

Annual comprehensive analysis of China's mobile reading market in 2022

Apache服务器访问日志access.log设置

When MDK uses precompiler in header file, ifdef is invalid

8个扩展子包!RecBole推出2.0!