当前位置:网站首页>Three schemes to improve the efficiency of MySQL deep paging query

Three schemes to improve the efficiency of MySQL deep paging query

2022-07-04 13:27:00 【1024 questions】

Development often meets the need of paging query , But when you turn too many pages , Deep paging will occur , This leads to a sharp decline in query efficiency . Is there any way , It can solve the problem of deep paging ? This paper summarizes three optimization schemes , Query efficiency is directly improved 10 times , Let's study together .

Development often meets the need of paging query , But when you turn too many pages , Deep paging will occur , This leads to a sharp decline in query efficiency .

Is there any way , It can solve the problem of deep paging ?

This paper summarizes three optimization schemes , Query efficiency is directly improved 10 times , Let's study together .

1. Prepare the dataFirst create a user table , Only in create_time Index the fields :

CREATE TABLE `user` ( `id` int NOT NULL AUTO_INCREMENT COMMENT ' Primary key ', `name` varchar(255) DEFAULT NULL COMMENT ' full name ', `create_time` timestamp NULL DEFAULT NULL COMMENT ' Creation time ', PRIMARY KEY (`id`), KEY `idx_create_time` (`create_time`)) ENGINE=InnoDB COMMENT=' User table ';Then insert 100 10000 test data , Here you can use stored procedures :

drop PROCEDURE IF EXISTS insertData;DELIMITER $$create procedure insertData()begin declare i int default 1; while i <= 100000 do INSERT into user (name,create_time) VALUES (CONCAT("name",i), now()); set i = i + 1; end while; end $$call insertData() $$2. Verify the deep paging problem each page 10 strip , When we look up the first page , fast :

select * from user where create_time>'2022-07-03' limit 0,10;

In less than 0.01 It returns directly within seconds , So the execution time is not displayed .

When we turn to No 10000 Page time , Query efficiency drops sharply :

select * from user where create_time>'2022-07-03' limit 100000,10;

Execution time becomes 0.16 second , The performance has decreased at least dozens of times .

Where is the time spent ?

Need to scan before 10 Data , Large amount of data , More time-consuming

create_time It's a non clustered index , You need to query the primary key first ID, Go back to the table again , Through primary key ID Query out all fields

Draw the back table query process :

1. Through the first create_time Query the primary key ID

Don't ask why B+ The structure of the tree is like this ? Asking is the rule .

You can take a look at the first two articles .

Then we will optimize for these two time-consuming reasons .

3. Optimized query 3.1 Use subqueryFirst, use the sub query to find out the qualified primary keys , Then use the primary key ID Make conditions to find out all fields .

select * from user where id in ( select id from user where create_time>'2022-07-03' limit 100000,10);However, such a query will report an error , It is said that the use of limit.

We add a layer of sub query nesting , That's all right. :

select * from user where id in ( select id from ( select id from user where create_time>'2022-07-03' limit 100000,10 ) as t);

The execution time is shortened to 0.05 second , Less 0.12 second , It is equivalent to that the query performance is improved 3 times .

Why use subquery to find qualified primary keys first ID, It can shorten the query time ?

We use it explain Just look at the implementation plan :

explain select * from user where id in ( select id from ( select id from user where create_time>'2022-07-03' limit 100000,10 ) as t);

You can see Extra Column displays the... Used in the subquery Using index, Indicates that the overlay index is used , Therefore, sub queries do not need to be queried back to the table , Speed up query efficiency .

3.2 Use inner join Relational queryTreat the result of subquery as a temporary table , Then perform an association query with the original table .

select * from user inner join ( select id from user where create_time>'2022-07-03' limit 100000,10) as t on user.id=t.id;

Query performance is the same as using subqueries .

3.3 Use paging cursor ( recommend )The implementation method is : When we look up the second page , Put the query results on the first page into the query conditions on the second page .

for example : First, check the first page

select * from user where create_time>'2022-07-03' limit 10;Then check the second page , Put the query results on the first page into the query conditions on the second page :

select * from user where create_time>'2022-07-03' and id>10 limit 10;This is equivalent to querying the first page every time , There is no deep paging problem , Recommended .

Execution time is 0 second , The query performance has been directly improved by dozens of times .

Although such a query method is easy to use , But it brings another problem , Is to jump to the specified number of pages , You can only turn down one page .

So this kind of query is only suitable for specific scenarios , For example, information APP Home page .

Internet APP Usually in the form of waterfall flow , For example, baidu home page 、 The front page of the headlines , All slide down and turn the page , There is no need to jump to the number of pages .

Don't believe it , You can look at it , This is the waterfall of headlines :

The query result of the previous page is carried in the transmission parameter .

Response data , Returned the query criteria on the next page .

Therefore, the application scenario of this query method is quite wide , Use it quickly .

Summary of knowledge points :

This is about solving MySQL This is the end of the article on deep paging inefficiency . I hope it will be helpful for your study , I also hope you can support the software development network .

边栏推荐

猜你喜欢

PostgreSQL 9.1 soaring Road

JVM系列——栈与堆、方法区day1-2

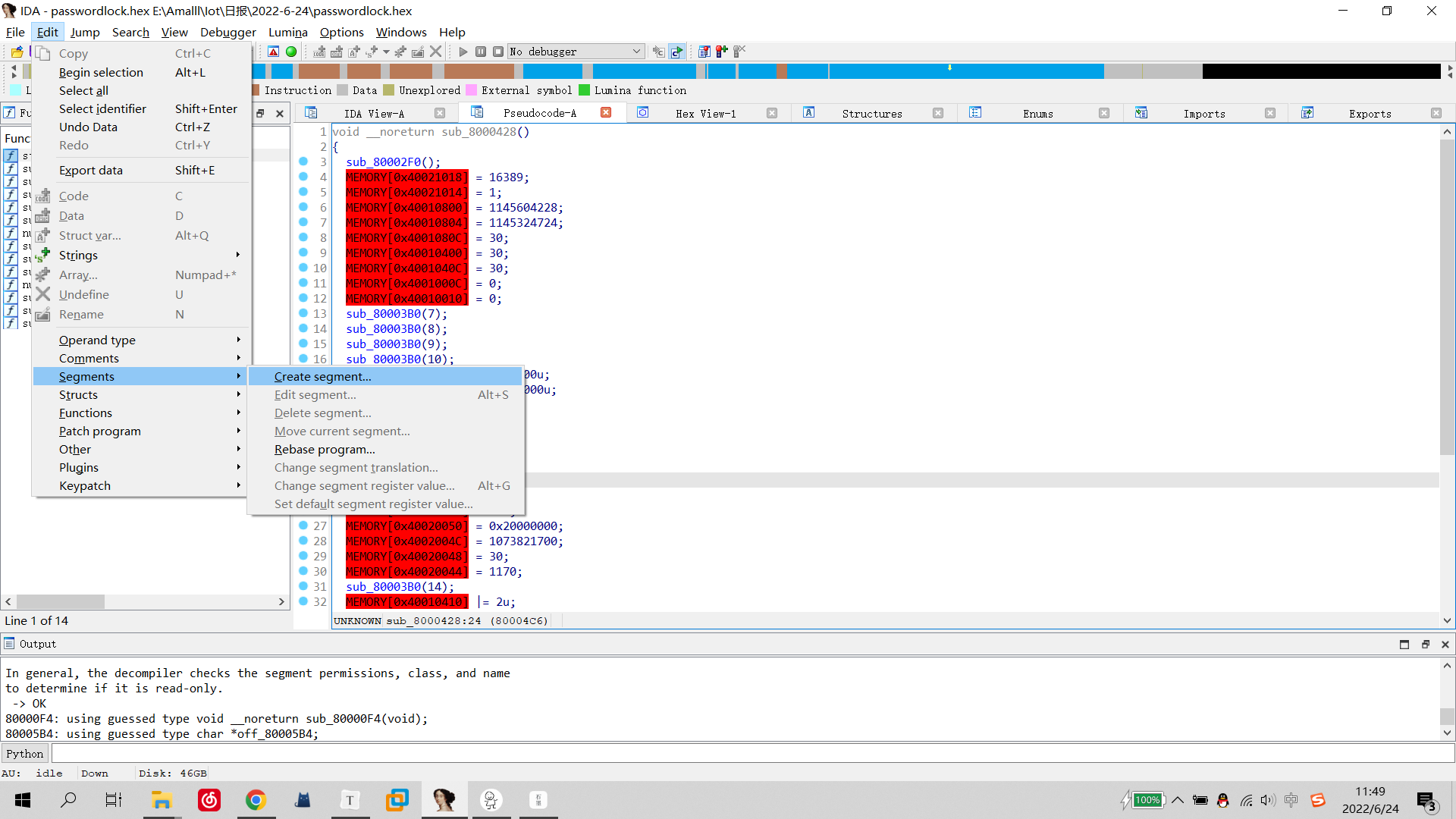

CTF competition problem solution STM32 reverse introduction

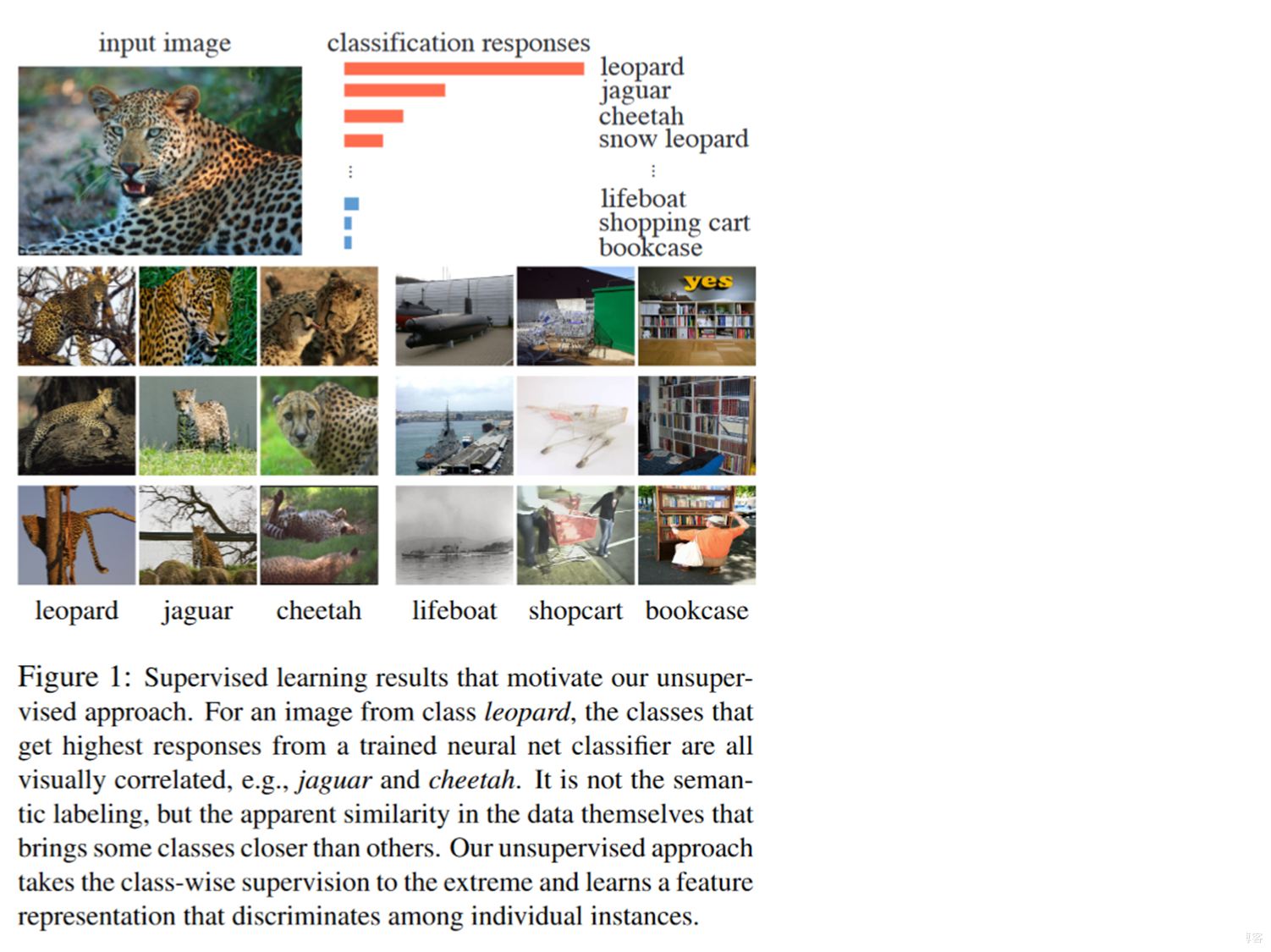

诸神黄昏时代的对比学习

Annual comprehensive analysis of China's mobile reading market in 2022

N++ is not reliable

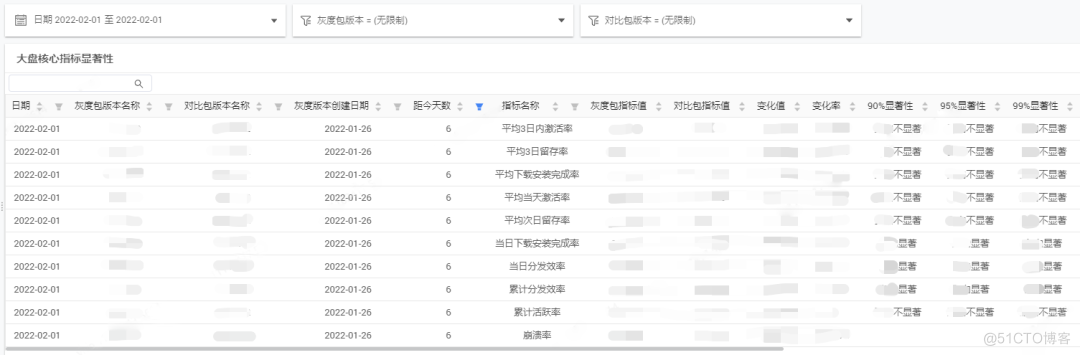

Building intelligent gray-scale data system from 0 to 1: Taking vivo game center as an example

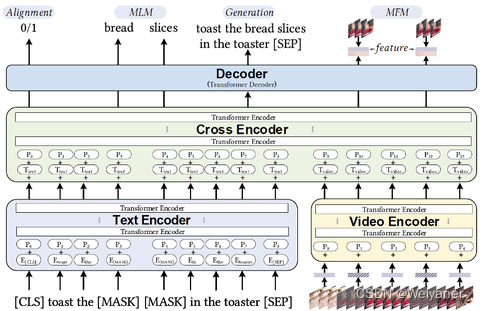

美团·阿里关于多模态召回的应用实践

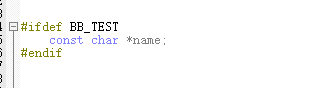

When MDK uses precompiler in header file, ifdef is invalid

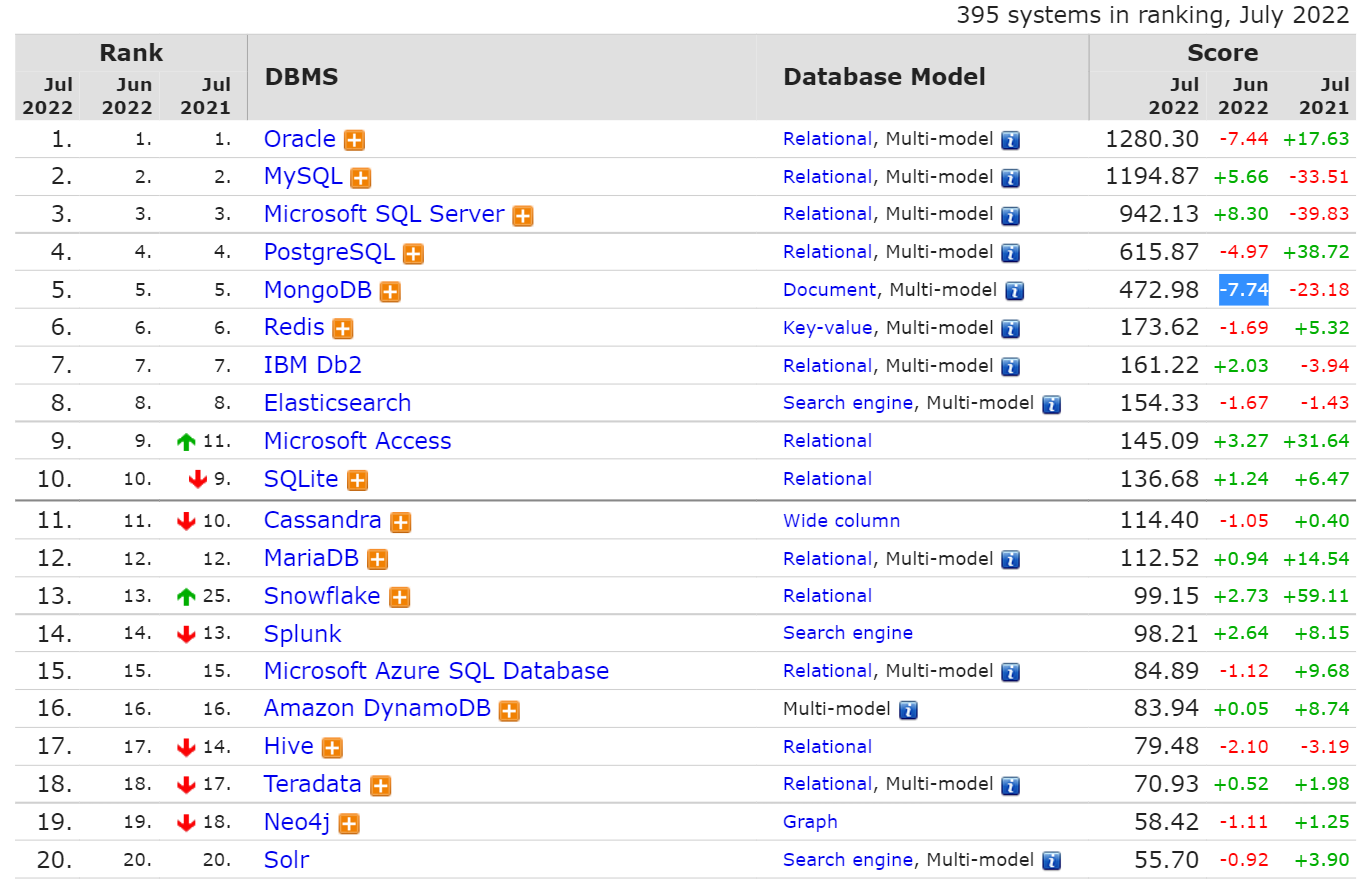

7 月数据库排行榜:MongoDB 和 Oracle 分数下降最多

随机推荐

Alibaba cloud award winning experience: build a highly available system with polardb-x

美团·阿里关于多模态召回的应用实践

使用 NSProxy 实现消息转发

Practice: fabric user certificate revocation operation process

Personalized online cloud database hybrid optimization system | SIGMOD 2022 selected papers interpretation

Building intelligent gray-scale data system from 0 to 1: Taking vivo game center as an example

三星量产3纳米产品引台媒关注:能否短期提高投入产出率是与台积电竞争关键

在 Apache 上配置 WebDAV 服务器

读《认知觉醒》

ASP.NET Core入门一

c#数组补充

6 分钟看完 BGP 协议。

BackgroundWorker用法示例

Deploy halo blog with pagoda

七、软件包管理

《预训练周刊》第52期:屏蔽视觉预训练、目标导向对话

N++ is not reliable

CVPR 2022 | TransFusion:用Transformer进行3D目标检测的激光雷达-相机融合

二分查找的简单理解

Alibaba cloud award winning experience: build a highly available system with polardb-x