Introduction to the experiment

This scenario provides an ECS instance (cloud server) configured with the CentOS 8.5 operating system, on which you install and deploy a PolarDB-X cluster. This tutorial walks you through building a highly available system with PolarDB-X: you simulate node failures by killing containers directly and observe how PolarDB-X recovers automatically.

Experiment preparation

1. Create experimental resources

Before you start the experiment, you need to create the ECS instance resources.

On the lab page, click Create resources.

(Optional) In the left navigation bar of the lab page, click Cloud product resources list to view information about the experiment resources (for example, IP addresses and user information).

Note: Resource creation takes 1 to 3 minutes.

2. Install the environment

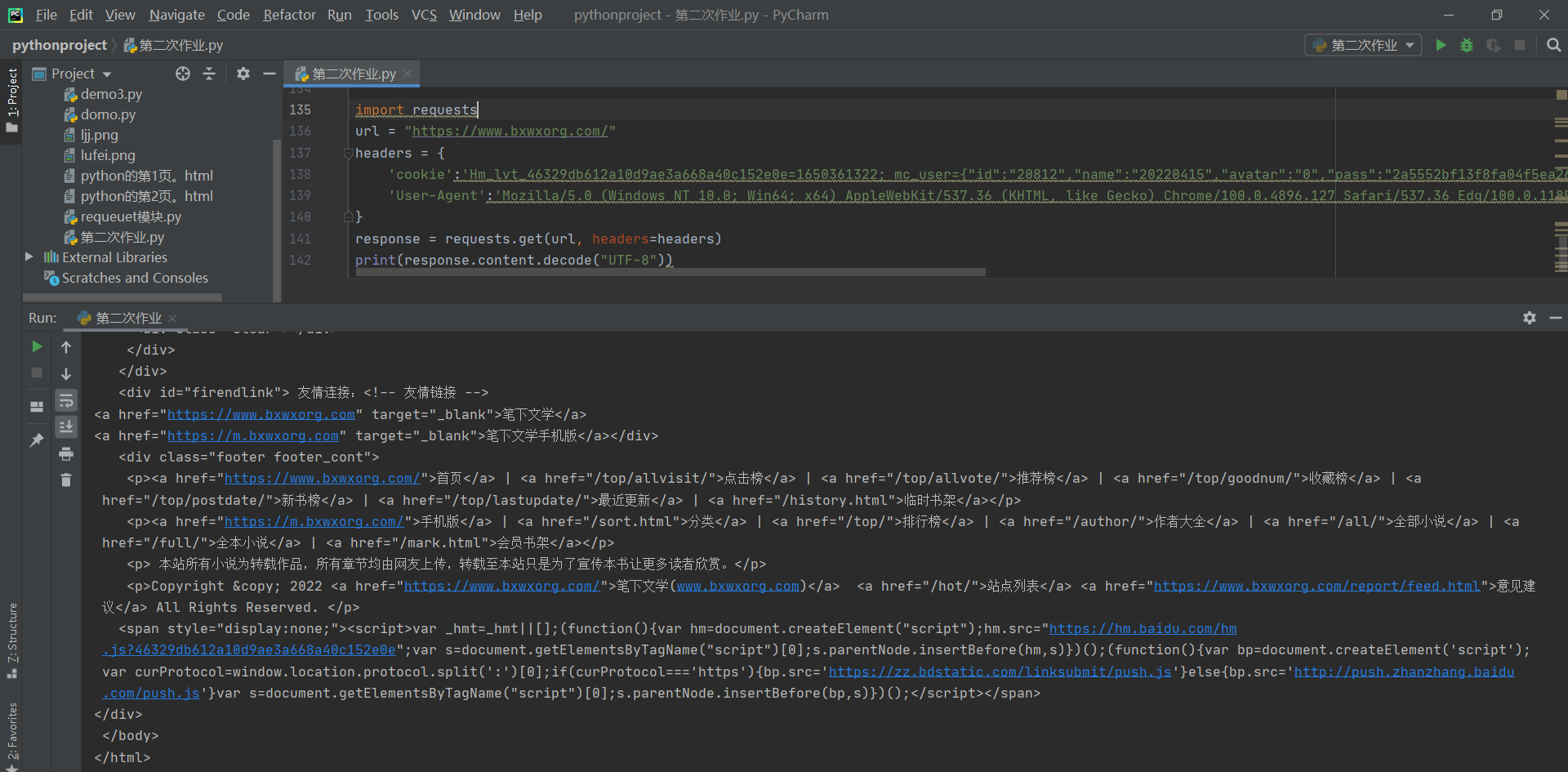

This step guides you through installing Docker, kubectl, minikube, and Helm 3.

- Install Docker.

a. Run the following command to install Docker.

curl -fsSL https://get.docker.com | bash -s docker --mirror Aliyun

b. Run the following command to start Docker.

systemctl start docker

- Install kubectl.

a. Run the following command to download the kubectl binary.

curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl

b. Run the following command to make the file executable.

chmod +x ./kubectl

c. Run the following command to move the file to a system directory.

mv ./kubectl /usr/local/bin/kubectl

- Install minikube.

Run the following commands to download and install minikube.

curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64

sudo install minikube-linux-amd64 /usr/local/bin/minikube

- Install Helm 3.

a. Run the following command to download Helm 3.

wget https://labfileapp.oss-cn-hangzhou.aliyuncs.com/helm-v3.9.0-linux-amd64.tar.gz

b. Run the following command to extract Helm 3.

tar -zxvf helm-v3.9.0-linux-amd64.tar.gz

c. Run the following command to move the helm binary to a system directory.

mv linux-amd64/helm /usr/local/bin/helm

- Install MySQL.

yum install mysql -y

3. Use PolarDB-X Operator to install PolarDB-X

This step guides you through creating a simple Kubernetes cluster, deploying PolarDB-X Operator, and using the Operator to deploy a complete PolarDB-X cluster. For detailed documentation, see "Install PolarDB-X through Kubernetes".

- Use minikube to create a Kubernetes cluster.

minikube is a community-maintained tool for quickly creating Kubernetes test clusters, well suited to testing and learning Kubernetes. Kubernetes clusters created with minikube can run in containers or in virtual machines. This scenario uses CentOS 8.5 as the example for creating a Kubernetes cluster.

Note: If you deploy minikube on another operating system, such as macOS or Windows, some steps may differ slightly.

a. Run the following commands to create an account named galaxykube and add it to the docker group. minikube must be deployed with a non-root account, so a new account is required.

useradd -ms /bin/bash galaxykube

usermod -aG docker galaxykube

b. Run the following command to switch to the galaxykube account.

su galaxykube

c. Run the following command to go to the /home/galaxykube directory.

cd

d. Run the following command to start minikube.

Note: This command uses the Alibaba Cloud minikube image source and a Docker registry mirror (the command below points at the SJTUG mirror) to speed up image pulls.

minikube start --cpus 4 --memory 12288 --image-mirror-country cn --registry-mirror=https://docker.mirrors.sjtug.sjtu.edu.cn --kubernetes-version 1.23.3

When the command completes successfully, minikube is running properly. minikube automatically sets up the kubectl configuration file.

e. Run the following command to view the cluster information with kubectl.

kubectl cluster-info

The command returns information about the cluster.

- Deploy PolarDB-X Operator.

a. Run the following command to create a namespace named polardbx-operator-system.

kubectl create namespace polardbx-operator-system

b. Run the following commands to install PolarDB-X Operator.

helm repo add polardbx https://polardbx-charts.oss-cn-beijing.aliyuncs.com

helm install --namespace polardbx-operator-system polardbx-operator polardbx/polardbx-operator

c. Run the following command to check the status of the PolarDB-X Operator components.

kubectl get pods --namespace polardbx-operator-system

Wait about 2 minutes. Once all components are in the Running state, PolarDB-X Operator has been installed.
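Rather than rerunning the command by hand, the check can be scripted. The sketch below is a hypothetical helper (the name all_running is not part of kubectl) that reads `kubectl get pods` output on stdin and succeeds only when every listed pod reports Running. It assumes the default column layout, in which STATUS is the third column.

```shell
# all_running: read `kubectl get pods` output on stdin and succeed only if
# at least one pod is listed and every pod's STATUS column is "Running".
# Assumption: default table output, with STATUS as the third column.
all_running() {
    awk 'NR > 1 { seen = 1; if ($3 != "Running") bad = 1 }
         END { if (seen && !bad) exit 0; exit 1 }'
}

# Usage (hypothetical polling loop, checks every 5 seconds):
#   until kubectl get pods --namespace polardbx-operator-system | all_running; do
#       sleep 5
#   done
```

The helper only inspects text, so it can be tried on saved output before being pointed at a live cluster.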

- Deploy a PolarDB-X cluster.

a. Run the following command to create polardb-x.yaml.

vim polardb-x.yaml

b. Press i to enter edit mode, copy the following content into the file, press Esc to exit edit mode, then type :wq and press Enter to save and exit.

apiVersion: polardbx.aliyun.com/v1
kind: PolarDBXCluster
metadata:
  name: polardb-x
spec:
  config:
    dn:
      mycnfOverwrite: |-
        print_gtid_info_during_recovery=1
        gtid_mode = ON
        enforce-gtid-consistency = 1
        recovery_apply_binlog=on
        slave_exec_mode=SMART
  topology:
    nodes:
      cdc:
        replicas: 1
        template:
          resources:
            limits:
              cpu: "1"
              memory: 1Gi
            requests:
              cpu: 100m
              memory: 500Mi
      cn:
        replicas: 2
        template:
          resources:
            limits:
              cpu: "2"
              memory: 4Gi
            requests:
              cpu: 100m
              memory: 1Gi
      dn:
        replicas: 1
        template:
          engine: galaxy
          hostNetwork: true
          resources:
            limits:
              cpu: "2"
              memory: 4Gi
            requests:
              cpu: 100m
              memory: 500Mi
      gms:
        template:
          engine: galaxy
          hostNetwork: true
          resources:
            limits:
              cpu: "1"
              memory: 1Gi
            requests:
              cpu: 100m
              memory: 500Mi
  serviceType: ClusterIP
  upgradeStrategy: RollingUpgrade

c. Run the following command to create the PolarDB-X cluster.

kubectl apply -f polardb-x.yaml

d. Run the following command to check the creation status of the PolarDB-X cluster.

kubectl get polardbxCluster polardb-x -o wide -w

Wait about seven minutes. When PHASE shows Running, the PolarDB-X cluster has been deployed.

e. Press Ctrl+C to stop watching the PolarDB-X cluster creation status.

4. Connect to the PolarDB-X cluster

This step guides you through connecting to the PolarDB-X cluster deployed on Kubernetes.

- Run the following command to view the PolarDB-X cluster login password.

kubectl get secret polardb-x -o jsonpath="{.data['polardbx_root']}" | base64 -d - | xargs echo "Password: "

The output shows the PolarDB-X cluster login password.
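Kubernetes stores the values under a Secret's data field base64-encoded, which is why the command above pipes the jsonpath result through base64 -d. As a minimal sketch of just that decoding step, the hypothetical helper below round-trips a made-up value; the password shown is an illustration, not a real credential.

```shell
# decode_secret_value: decode one base64-encoded Secret value passed as $1.
# Assumption: a base64 tool that accepts -d (GNU coreutils, as on CentOS).
decode_secret_value() {
    printf '%s' "$1" | base64 -d
}

# Round trip with a made-up password (not a real credential):
encoded=$(printf '%s' 'example-pass' | base64)
decode_secret_value "$encoded"
echo
```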

- Run the following command to forward the PolarDB-X cluster port to local port 3306.

Note: Before you log in to the Kubernetes-deployed PolarDB-X cluster with the MySQL client, you need to obtain the cluster login password and set up port forwarding.

kubectl port-forward svc/polardb-x 3306

- On the experiment page, click the icon to create a new terminal (terminal 2).

- Run the following command to connect to the PolarDB-X cluster.

Note:

Replace <PolarDB-X Cluster login password > with the PolarDB-X cluster login password you actually obtained.

If you encounter the error "mysql: [Warning] Using a password on the command line interface can be insecure. ERROR 2013 (HY000): Lost connection to MySQL server at 'reading initial communication packet', system error: 0", wait a minute, set up port forwarding again, and then reconnect to the PolarDB-X cluster.

mysql -h127.0.0.1 -P3306 -upolardbx_root -p<PolarDB-X Cluster login password >
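The "wait a minute and reconnect" advice can be sketched as a small retry loop. The retry function below is a hypothetical helper, not part of the mysql client; it reruns any command until it succeeds or the attempt limit is reached. It does not restart port forwarding, so the kubectl port-forward command must still be running in the first terminal.

```shell
# retry: run a command up to MAX times, sleeping DELAY seconds between tries.
# Usage: retry MAX DELAY command [args...]
# Returns 0 as soon as the command succeeds, 1 if every attempt fails.
retry() {
    max=$1
    delay=$2
    shift 2
    attempt=1
    while :; do
        "$@" && return 0
        [ "$attempt" -ge "$max" ] && return 1
        attempt=$((attempt + 1))
        sleep "$delay"
    done
}

# Example (replace <password> with the password obtained earlier;
# -e 'select 1' runs a single statement and exits):
#   retry 5 60 mysql -h127.0.0.1 -P3306 -upolardbx_root -p'<password>' -e 'select 1'
```

Five attempts one minute apart is an arbitrary choice for illustration; adjust both numbers to how long the cluster takes to accept connections.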

5. Start the business workload

This step guides you through simulating business traffic with the Sysbench OLTP scenario.

Prepare the stress-test data.

- Run the following SQL statement to create the stress-test database sysbench_test.

create database sysbench_test;

- Type exit to leave the database.

- Run the following command to switch to the galaxykube account.

su galaxykube

- Run the following command to go to the /home/galaxykube directory.

cd

- Run the following command to create the sysbench-prepare.yaml file.

vim sysbench-prepare.yaml

- Press i to enter edit mode, copy the following content into the file, press Esc to exit edit mode, then type :wq and press Enter to save and exit.

apiVersion: batch/v1
kind: Job
metadata:
  name: sysbench-prepare-data-test
  namespace: default
spec:
  backoffLimit: 0
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: sysbench-prepare
          image: severalnines/sysbench
          env:
            - name: POLARDB_X_USER
              value: polardbx_root
            - name: POLARDB_X_PASSWD
              valueFrom:
                secretKeyRef:
                  name: polardb-x
                  key: polardbx_root
          command: [ 'sysbench' ]
          args:
            - --db-driver=mysql
            - --mysql-host=$(POLARDB_X_SERVICE_HOST)
            - --mysql-port=$(POLARDB_X_SERVICE_PORT)
            - --mysql-user=$(POLARDB_X_USER)
            - --mysql_password=$(POLARDB_X_PASSWD)
            - --mysql-db=sysbench_test
            - --mysql-table-engine=innodb
            - --rand-init=on
            - --max-requests=1
            - --oltp-tables-count=1
            - --report-interval=5
            - --oltp-table-size=160000
            - --oltp_skip_trx=on
            - --oltp_auto_inc=off
            - --oltp_secondary
            - --oltp_range_size=5
            - --mysql_table_options=dbpartition by hash(`id`)
            - --num-threads=1
            - --time=3600
            - /usr/share/sysbench/tests/include/oltp_legacy/parallel_prepare.lua
            - run

- Run the following command to apply sysbench-prepare.yaml and initialize the test data.

kubectl apply -f sysbench-prepare.yaml

- Run the following command to check the progress of the job.

kubectl get jobs

Wait about 1 minute. When the job's COMPLETIONS column shows 1/1, the data has been initialized.

Start the stress-test traffic.

- Run the following command to create the sysbench-oltp.yaml file.

vim sysbench-oltp.yaml

- Press i to enter edit mode, copy the following content into the file, press Esc to exit edit mode, then type :wq and press Enter to save and exit.

apiVersion: batch/v1
kind: Job
metadata:
  name: sysbench-oltp-test
  namespace: default
spec:
  backoffLimit: 0
  template:
    spec:
      restartPolicy: Never
      containers:
        - name: sysbench-oltp
          image: severalnines/sysbench
          env:
            - name: POLARDB_X_USER
              value: polardbx_root
            - name: POLARDB_X_PASSWD
              valueFrom:
                secretKeyRef:
                  name: polardb-x
                  key: polardbx_root
          command: [ 'sysbench' ]
          args:
            - --db-driver=mysql
            - --mysql-host=$(POLARDB_X_SERVICE_HOST)
            - --mysql-port=$(POLARDB_X_SERVICE_PORT)
            - --mysql-user=$(POLARDB_X_USER)
            - --mysql_password=$(POLARDB_X_PASSWD)
            - --mysql-db=sysbench_test
            - --mysql-table-engine=innodb
            - --rand-init=on
            - --max-requests=0
            - --oltp-tables-count=1
            - --report-interval=5
            - --oltp-table-size=160000
            - --oltp_skip_trx=on
            - --oltp_auto_inc=off
            - --oltp_secondary
            - --oltp_range_size=5
            - --mysql-ignore-errors=all
            - --num-threads=8
            - --time=3600
            - /usr/share/sysbench/tests/include/oltp_legacy/oltp.lua
            - run

- Run the following command to apply sysbench-oltp.yaml and start the stress test.

kubectl apply -f sysbench-oltp.yaml

- Run the following command to find the pod in which the stress-test script is running.

kubectl get pods

In the output, the pod whose name starts with 'sysbench-oltp-test-' is the target pod.

- Run the following command to view traffic data such as QPS.

Note: Replace <target POD> in the command with the name of the pod that starts with 'sysbench-oltp-test-'.

kubectl logs -f <target POD>
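To follow only the QPS figure, the log stream can be filtered. The sketch below is a hypothetical helper and rests on an assumption about the log format: that the image prints sysbench 1.0-style interval reports such as "[ 5s ] thds: 8 tps: ... qps: ... lat (ms,95%): ...". If the logs look different, the pattern needs adjusting.

```shell
# extract_qps: read sysbench interval-report lines on stdin and print
# "<elapsed> <qps>" for every line that carries a qps field.
# Assumption: sysbench 1.0-style lines, e.g.
#   [ 5s ] thds: 8 tps: 120.50 qps: 2410.00 (r/w/o: ...) lat (ms,95%): 90.78 ...
extract_qps() {
    sed -n 's/^\[ *\([0-9][0-9]*s\) *].* qps: \([0-9.][0-9.]*\).*/\1 \2/p'
}

# Usage (pipe the pod's log stream through the filter):
#   kubectl logs -f <name of the sysbench-oltp-test- pod> | extract_qps
```

Lines without a qps field, such as the sysbench banner, are dropped, so a drop to zero or a gap in the elapsed-time column is easy to spot while pods are being killed.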

6. Experience PolarDB-X high availability

After the preparation above, you have a running business system built with PolarDB-X and Sysbench OLTP. This step guides you through killing pods to simulate node unavailability caused by physical machine failure or network disconnection, and observing how the business QPS changes.

- On the experiment page, click the icon to create a new terminal (terminal 3).

Kill a CN.

- Run the following command to switch to the galaxykube account.

su galaxykube

- Run the following command to get the CN pod names.

kubectl get pods

In the output, the pods whose names start with 'polardb-x-xxxx-cn-default' are the CN pods.

- Run the following command to delete any one CN pod. Note: Replace <CN POD> in the command with the name of any CN pod that starts with 'polardb-x-xxxx-cn-default'.

kubectl delete pod <CN POD>

- Run the following command to watch the CN pod being recreated automatically.

kubectl get pods

The output shows that the CN pod is being recreated automatically.

After a few dozen seconds, the killed CN pod returns to normal automatically.

- Switch to terminal 2 to view the business QPS after the CN was killed.
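The look-up-then-delete steps above can be sketched as one small helper. The function name first_pod_matching is hypothetical; it reads `kubectl get pods` output and prints the first pod name containing a given fragment, such as "-cn-default" taken from the pod name prefixes in this tutorial.

```shell
# first_pod_matching: read `kubectl get pods` output on stdin and print the
# name of the first pod whose name contains the fragment passed as $1.
# The header row (line 1) is skipped.
first_pod_matching() {
    awk -v pat="$1" 'NR > 1 && index($1, pat) > 0 { print $1; exit }'
}

# Usage (deletes one CN pod, then lists pods to watch it come back):
#   pod=$(kubectl get pods | first_pod_matching -cn-default)
#   kubectl delete pod "$pod"
#   kubectl get pods
```

The same helper works for the other roles by changing the fragment; it always picks the first match, which is sufficient here because any pod of the role may be deleted.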

Kill a DN.

- Switch to terminal 3 and run the following command to get the DN pod names.

kubectl get pods

In the output, the pods whose names start with 'polardb-x-xxxx-dn' are the DN pods.

- Run the following command to delete any one DN pod.

Note:

Replace <DN POD> in the command with the name of any DN pod that starts with 'polardb-x-xxxx-dn'.

Each logical DN node uses a three-replica architecture, so one DN node corresponds to 3 pods, and you can delete any one of them. In addition, the GMS node is a DN with a special role and is also highly available, so you can choose any of its pods to delete.

kubectl delete pod <DN POD>

- Run the following command to watch the DN pod being recreated automatically.

kubectl get pods

The output shows that the DN pod is being recreated automatically.

After a few dozen seconds, the killed DN pod returns to normal automatically.

- Switch to terminal 2 to view the business QPS after the DN was killed.

Kill a CDC.

- Switch to terminal 3 and run the following command to get the CDC pod names.

kubectl get pods

In the output, the pods whose names start with 'polardb-x-xxxx-cdc-default' are the CDC pods.

- Run the following command to delete any one CDC pod.

Note: Replace <CDC POD> in the command with the name of any CDC pod that starts with 'polardb-x-xxxx-cdc-default'.

kubectl delete pod <CDC POD>

- Run the following command to watch the CDC pod being recreated automatically.

kubectl get pods

The output shows that the CDC pod is being recreated automatically.

After a few dozen seconds, the killed CDC pod returns to normal automatically.

- Switch to terminal 2 to view the business QPS after the CDC was killed.

7. Learn more

To learn more about PolarDB-X high availability, see the following articles.

PolarDB-X storage architecture: "Best production practices based on Paxos"

Database architecture talk (2): High availability and consistency

PolarDB-X source code interpretation (extra): How to implement Paxos

Congratulations, you have completed the experiment!
