
Self-taught machine learning series 1: the basic framework of machine learning

2022-06-26 09:09:00 【ML_ python_ get√】

The basic framework of machine learning

1 Basic ideas of machine learning

- The basic machine learning workflow: data acquisition, feature extraction, data transformation, model training, model selection, model evaluation

- Supervised learning: given raw features and labels, mine the rules and learn a pattern that can answer new questions

- Unsupervised learning: look for patterns using only the raw features, without labels

- Reinforcement learning: maximize the return; there is no absolutely correct label, and the goal is not to find structure in the data

1.1 Model selection

- How are model parameters selected? By cross-validation

- For a general regression problem: minimize the mean squared error

- Overfitting: as model complexity increases, the variance increases and the bias decreases, so the mean squared error on new data follows a U-shaped curve

- Cross-validation:

- The simplest approach: hold out a fixed proportion of the training set as a validation set. Those samples do not take part in training, which reduces accuracy

- More commonly, K-fold cross-validation is used: all samples are divided into K parts (K is typically 3 to 20). Each part is used as the validation set in turn, repeating K times until every part has been used for validation.
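
The K-fold procedure above can be sketched in a few lines of plain Python. This is only an illustrative sketch: the helper name `k_fold_indices` is made up here, and it splits the samples in their given order, whereas in practice the indices are usually shuffled first.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; each fold is the validation set once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


folds = list(k_fold_indices(n_samples=10, k=3))
for train_idx, val_idx in folds:
    print("train:", train_idx, "validate:", val_idx)
```

A model would be trained on `train_idx` and scored on `val_idx` for each fold, and the K scores averaged to compare candidate parameter settings.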

1.2 Model evaluation

- Regression problems: mean squared error

- Classification problems: each prediction falls into one of four cases, written as (predicted, actual) with 1 = sick and 0 = not sick: hit (1,1), false alarm (1,0), miss (0,1), correct rejection (0,0)

- Accuracy: (hits + correct rejections) / total. It is misleading when the incidence rate is very low

- Precision: hits / (hits + false alarms)

- Recall (hit rate): hits / (hits + misses)

- False alarm rate: false alarms / (false alarms + correct rejections)

- ROC curve: plots the hit rate against the false alarm rate as the decision threshold varies

- AUC: the area under the ROC curve
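
To see why accuracy alone can mislead when the incidence rate is low, here is a small sketch that computes the four rates from 0/1 predictions. The function names and the toy data (2 sick people out of 100, and a classifier that always answers "not sick") are assumptions made for illustration.

```python
def safe_ratio(numerator, denominator):
    """Return numerator / denominator, or None when the ratio is undefined."""
    return numerator / denominator if denominator else None


def classification_metrics(predicted, actual):
    """Compute accuracy, precision, recall and false alarm rate from 0/1 labels."""
    pairs = list(zip(predicted, actual))  # (predicted, actual)
    hits = pairs.count((1, 1))
    false_alarms = pairs.count((1, 0))
    misses = pairs.count((0, 1))
    correct_rejections = pairs.count((0, 0))
    return {
        "accuracy": safe_ratio(hits + correct_rejections, len(pairs)),
        "precision": safe_ratio(hits, hits + false_alarms),
        "recall": safe_ratio(hits, hits + misses),
        "false_alarm_rate": safe_ratio(false_alarms, false_alarms + correct_rejections),
    }


# Toy data: 2 sick people out of 100, and a classifier that never predicts "sick".
actual = [1] * 2 + [0] * 98
always_healthy = [0] * 100
metrics = classification_metrics(always_healthy, actual)
print(metrics)
```

The accuracy is high because almost everyone is healthy, yet the recall is zero: the classifier finds none of the sick people. Precision is undefined here (reported as `None`) because no positive prediction was ever made.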

2 Common machine learning methods

- Supervised learning: generalized linear models, linear discriminant analysis, support vector machines, decision trees, random forests, neural networks, K-nearest neighbors

- Unsupervised learning: clustering, dimensionality reduction (PCA)

2.1 Generalized linear model

- Simple regression: a single factor

- Multiple regression: multiple factors

- Ridge regression: L2 regularization

- Lasso: L1 regularization

- Logistic regression: for binary classification; an improvement on the linear probability model

- Ordered multi-class classification: for multi-class problems where the dependent variable has a natural order; fit N-1 logistic regressions

- OvR (one vs rest): for each class, split the samples into that class versus the rest, fit N logistic regressions in total, and obtain the probability of each individual class
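
The difference between the L2 penalty (ridge) and the L1 penalty (lasso) is easiest to see in the simplest possible case: one feature and no intercept. The sketch below uses the closed-form solutions for the objectives sum((y - w*x)^2) + lam*w^2 and sum((y - w*x)^2) + lam*|w|. The function names and the toy data are assumptions for illustration only.

```python
def ridge_slope(xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * w^2 for a single feature, no intercept."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)


def lasso_slope(xs, ys, lam):
    """Minimize sum((y - w*x)^2) + lam * |w| via soft thresholding."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    sign = 1.0 if sxy >= 0 else -1.0
    return sign * max(abs(sxy) - lam / 2.0, 0.0) / sxx


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]
for lam in (0.0, 10.0, 200.0):
    print(lam, round(ridge_slope(xs, ys, lam), 4), round(lasso_slope(xs, ys, lam), 4))
```

As the penalty grows, the ridge coefficient shrinks toward zero but never reaches it, while the lasso coefficient becomes exactly zero once the penalty is large enough. That is why lasso is often used for feature selection.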

2.2 Linear discriminant analysis and quadratic discriminant analysis

- Logistic regression is poorly suited to cases where the two classes are far apart (well separated), because its parameter estimates become unstable

- LDA: linear discriminant analysis, an extension of the logistic regression approach. It assumes the samples are normally distributed and estimates the coefficients from sample moments

- QDA: quadratic discriminant analysis. The discriminant function is quadratic, so the decision boundary is a curve

2.3 Support vector machine

- A hyperplane divides the sample space: picture a very large sheet of paper cutting the space into two parts

- This hyperplane is determined by only a finite number of points, called support vectors

- The XOR problem: the output is 1 only for the inputs (1,0) and (0,1), and no straight line can separate the two classes

- Raising the dimension: for example, adding an interaction term x1*x2 as in regression, a Taylor expansion, etc.

- SVM introduces a kernel function to compute the hyperplane: linear kernel, polynomial kernel, Gaussian kernel
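
The XOR case shows concretely why extra features (or kernels) help. In the sketch below the inputs are lifted from (x1, x2) to (x1, x2, x1*x2), and a single hyperplane in the lifted space then classifies all four points correctly. The weights are hand-picked for illustration, not learned by an SVM.

```python
# The four XOR inputs with their expected outputs.
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def lift(x1, x2):
    """Map a 2-D input to 3-D by adding the interaction term x1*x2."""
    return (x1, x2, x1 * x2)


# Hand-picked hyperplane in the lifted space: x1 + x2 - 2*x1*x2 - 0.5 = 0.
WEIGHTS = (1.0, 1.0, -2.0)
BIAS = -0.5


def predict(x1, x2):
    """Classify by which side of the hyperplane the lifted point falls on."""
    score = sum(w * f for w, f in zip(WEIGHTS, lift(x1, x2))) + BIAS
    return 1 if score > 0 else 0


for (x1, x2), target in XOR_DATA:
    print((x1, x2), "predicted:", predict(x1, x2), "expected:", target)
```

A kernel function achieves a similar effect without building the lifted features explicitly.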

2.4 Decision trees and random forests

- Decision tree: at each level a node is split into several child nodes according to some rule; the terminal leaf nodes give the classification result

- Choosing the split feature: pick the feature that maximizes the information gain after the split, where entropy is sum(-p log p)

- Avoiding overfitting: pruning and early stopping of branch growth

- C4.5 algorithm: can only be used for classification; does not support feature combinations

- CART algorithm: each node is split into exactly two child nodes; supports feature combinations; can be used for both classification and regression

- Advantages: fast training, handles non-numeric features, supports nonlinear classification

- Disadvantages: unstable, sensitive to the training samples, prone to overfitting

- Ensemble methods

- Bootstrap: sampling with replacement yields a bootstrap dataset of the same length as the original. Repeat N times and train a weak classifier on each dataset.

- Bagging: built on the bootstrap method; combine multiple weak classifiers by voting or averaging to get the final prediction

- Parallel method: bagging, as in random forests. Row sampling produces the bootstrap datasets, column sampling randomly selects m features, and finally the N decision trees vote to decide the class

- Serial method: boosting, as in AdaBoost and gradient boosted decision trees (GBDT). A weak classifier is trained on the original data, the misclassified samples are given larger weights, and training continues (this reweighting describes AdaBoost; GBDT instead fits each new tree to the remaining errors of the current ensemble)
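
The information-gain criterion used for choosing a decision tree's split feature, with entropy defined as sum(-p log p), can be made concrete with a small sketch. The function names and the four-sample toy dataset are assumptions for illustration; base-2 logarithms are used so that entropy is measured in bits.

```python
import math
from collections import Counter


def entropy(labels):
    """Entropy of a label list: sum of -p * log2(p) over the classes present."""
    total = len(labels)
    return sum(-(c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(feature_values, labels):
    """Entropy before the split minus the size-weighted entropy after it."""
    total = len(labels)
    groups = {}
    for value, label in zip(feature_values, labels):
        groups.setdefault(value, []).append(label)
    after_split = sum(len(g) / total * entropy(g) for g in groups.values())
    return entropy(labels) - after_split


labels = ["yes", "yes", "no", "no"]
feature_a = ["a", "a", "b", "b"]  # separates the classes perfectly
feature_b = ["x", "y", "x", "y"]  # carries no information about the class

print("gain of feature_a:", information_gain(feature_a, labels))
print("gain of feature_b:", information_gain(feature_b, labels))
```

The tree would split on `feature_a`, since it removes all uncertainty about the label, while `feature_b` removes none.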

2.5 Neural networks and deep learning

- The basic idea: a neuron has two states, excited and inhibited. Its dendrites receive stimulation from the preceding neurons, and only when the potential reaches a certain threshold is the neuron activated into the excited state. The electrical signal then travels along the axon and across the synapse to the dendrites of the next neuron, forming a huge network

- Input layer: linear weighting

- Hidden layer: activation functions such as ReLU, sigmoid, tanh

- Output layer: softmax or sigmoid for classification, identity for regression

- Too many layers: parameters become hard to estimate and gradients vanish; convolutional neural networks (CNN) reduce the number of parameters through local connections

- Image recognition: CNN

- Time series problems: recurrent neural networks (RNN) and long short-term memory networks (LSTM)

- Unsupervised learning: generative adversarial networks (GAN)
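
The layer structure described in this section (linear weighting, a hidden-layer activation, and a softmax output for classification) can be sketched as a single forward pass. The weights below are arbitrary made-up numbers, not trained values, and the helper names are assumptions for illustration.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def softmax(zs):
    """Turn raw scores into probabilities; subtracting the max avoids overflow."""
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases, activation):
    """One fully connected layer: weighted sum plus bias, then activation."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]


# Made-up parameters: 2 inputs -> 3 hidden units -> 2 output classes.
W_HIDDEN = [[0.5, -0.2], [0.1, 0.8], [-0.6, 0.3]]
B_HIDDEN = [0.0, 0.1, -0.1]
W_OUT = [[1.0, -1.0, 0.5], [-0.5, 0.7, 0.2]]
B_OUT = [0.0, 0.0]


def forward(x):
    hidden = dense(x, W_HIDDEN, B_HIDDEN, relu)
    logits = dense(hidden, W_OUT, B_OUT, lambda z: z)  # identity before softmax
    return softmax(logits)


probabilities = forward([1.0, 2.0])
print(probabilities)
```

For a regression network the final `softmax` would simply be dropped, leaving the identity output.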

2.6 KNN

- A supervised learning method

- Classification here rests on one assumption: if two samples have similar features, they belong to the same class

- Based on this idea, a new classification rule follows: the class of each point is determined by the classes of its K nearest neighbors

- Choosing K: too small a value overfits, too large a value underfits; choose K by cross-validation
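
The KNN rule above fits in a few lines. This is a minimal sketch assuming Euclidean distance and a simple majority vote; the function name `knn_predict` and the toy points are made up for illustration. It relies on `math.dist`, available in Python 3.8 and later.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k):
    """Return the majority label among the k training points nearest to query."""
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


train_points = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_labels = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(train_points, train_labels, query=(1.5, 1.5), k=3))
print(knn_predict(train_points, train_labels, query=(6.5, 6.5), k=3))
```

With k equal to the size of the training set, every query would get the overall majority class, which is the underfitting extreme mentioned above.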

2.7 Clustering

- Unsupervised learning: divide the samples into K clusters, grouping similar objects into the same cluster.

- K-means

- Randomly pick K points as centroids, find the nearest centroid for each sample point, and assign the point to the corresponding cluster

- Take the mean of each cluster as the new centroid and reassign the sample points to clusters, giving the result of the first iteration

- Repeat the process until the clusters no longer change

- Disadvantages: strongly affected by the choice of K, affected by outliers, slow to converge

- Hierarchical clustering: organize the samples into a hierarchy, either by splitting from the top down or by merging from the bottom up

- Spectral clustering: treat each object as a vertex V of a graph and the similarity between two vertices as the weight of the edge E connecting them, giving an undirected weighted graph G(V,E) based on similarity
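
The K-means loop described in this section (assign each point to its nearest centroid, recompute the centroids, repeat until nothing changes) can be sketched as follows. The function names, the `seed` and `max_iter` parameters, and the toy points are assumptions for illustration; initial centroids are drawn from the data points themselves.

```python
import math
import random


def mean_point(points):
    """Coordinate-wise mean of a list of points."""
    n = len(points)
    return tuple(sum(coord) / n for coord in zip(*points))


def k_means(points, k, seed=0, max_iter=100):
    """Return (centroids, assignment) after iterating until assignments are stable."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # random initial centroids
    assignment = None
    for _ in range(max_iter):
        # Step 1: assign every point to its nearest centroid.
        new_assignment = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_assignment == assignment:
            break  # clusters no longer change
        assignment = new_assignment
        # Step 2: move each centroid to the mean of its cluster.
        for c in range(k):
            members = [p for p, a in zip(points, assignment) if a == c]
            if members:
                centroids[c] = mean_point(members)
    return centroids, assignment


points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, assignment = k_means(points, k=2)
print("centroids:", centroids)
print("assignment:", assignment)
```

On this well-separated toy data the two groups are recovered, though which group gets label 0 or 1 depends on the random initial centroids.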

2.8 Dimensionality reduction

- PCA:

- Assumes that the directions in which the independent variables vary the most also produce the largest response in the dependent variable

- Look for the direction of greatest variation in the feature space and use it as a new feature; the data must be standardized first

- The number of components to keep is chosen by cross-validation

- Partial least squares:

- When the PCA assumption does not hold, use the relationship between the features and the dependent variable to determine the new features

- Regress Y on X and take the regression coefficients, using the significant coefficients as far as possible, to form Z1

- Regress each feature in X on Z1 and take the residuals; the part not explained by Z1 becomes the new features

- Regress Y on the new features to get significant regression coefficients; their linear combination gives Z2

- Repeat the process M times

- Fisher linear discriminant analysis:

- The core idea: make the spread within each class as small as possible and the separation between classes as large as possible

- Compute the centers (means) of the two classes

- Compute the within-class scatter matrix of each class and add them to get the total within-class scatter matrix S

- Compute the between-class scatter matrix Sb

- After projecting onto w (taking w'x), the between-class distance w'Sbw should be as large as possible and the within-class distance w'Sw as small as possible, so maximize the ratio w'Sbw / w'Sw

- Obtain the projected features

- Nonlinear dimensionality reduction

- Locally linear embedding (LLE)

- Isomap, based on geodesic distance

- Laplacian eigenmaps
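
The Fisher linear discriminant steps listed earlier in this section can be followed by hand for two classes in two dimensions. For two classes, the direction that maximizes w'Sbw / w'Sw is proportional to S^-1 (m_a - m_b), where m_a and m_b are the class means, so Sb never needs to be built explicitly. The sketch below uses that result; the function names and the toy data are assumptions for illustration.

```python
def mean_vector(points):
    """Center of a class of 2-D points."""
    n = len(points)
    return [sum(p[0] for p in points) / n, sum(p[1] for p in points) / n]


def scatter_matrix(points, mean):
    """Within-class scatter: sum of (x - mean)(x - mean)' over the class."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for p in points:
        d = [p[0] - mean[0], p[1] - mean[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fisher_direction(class_a, class_b):
    """Projection direction w proportional to S^-1 (m_a - m_b)."""
    m_a, m_b = mean_vector(class_a), mean_vector(class_b)
    s_a, s_b = scatter_matrix(class_a, m_a), scatter_matrix(class_b, m_b)
    s = [[s_a[i][j] + s_b[i][j] for j in range(2)] for i in range(2)]
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    if det == 0:
        raise ValueError("total within-class scatter matrix is singular")
    inv = [[s[1][1] / det, -s[0][1] / det], [-s[1][0] / det, s[0][0] / det]]
    diff = [m_a[0] - m_b[0], m_a[1] - m_b[1]]
    return [
        inv[0][0] * diff[0] + inv[0][1] * diff[1],
        inv[1][0] * diff[0] + inv[1][1] * diff[1],
    ]


def project(points, w):
    """One-dimensional projected feature w'x for each point."""
    return [w[0] * p[0] + w[1] * p[1] for p in points]


class_a = [(1.0, 2.0), (2.0, 3.0), (3.0, 3.0), (2.0, 1.0)]
class_b = [(6.0, 5.0), (7.0, 8.0), (8.0, 6.0), (7.0, 6.0)]
w = fisher_direction(class_a, class_b)
proj_a, proj_b = project(class_a, w), project(class_b, w)
print("w:", w)
print("projected class a:", proj_a)
print("projected class b:", proj_b)
```

After projection the two classes occupy separate intervals on the line, so a single threshold on the projected feature is enough to classify them.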

边栏推荐

- 框架跳转导致定位失败的解决方法

- Application of hidden list menu and window transformation in selenium

- uniapp用uParse实现解析后台的富文本编辑器的内容及修改uParse样式

- ImportError: ERROR: recursion is detected during loading of “cv2“ binary extensions. Check OpenCV in

- Yolov5 advanced III training environment

- Yolov5进阶之三训练环境

- Fast construction of neural network

- 1.25 suggestions and design of machine learning

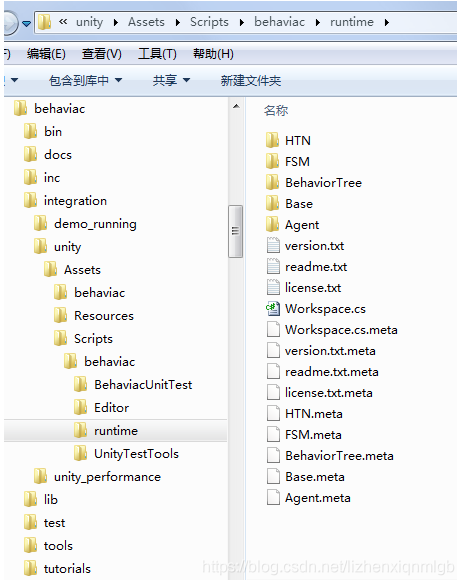

- Introduction to common classes on the runtime side

- 百度小程序富文本解析工具bdParse

猜你喜欢

20220623 Adobe Illustrator入门

如何编译构建

Data warehouse (1) what is data warehouse and what are the characteristics of data warehouse

Fast construction of neural network

框架跳转导致定位失败的解决方法

phpcms v9后台文章列表增加一键推送到百度功能

phpcms v9商城模块(修复自带支付宝接口bug)

Fix the problem that the rich text component of the applet does not support the properties of video cover, autoplay, controls, etc

Reverse crawling verification code identification login (OCR character recognition)

爬虫 对 Get/Post 请求时遇到编码问题的解决方案

随机推荐

攔截器與過濾器的實現代碼

教程1:Hello Behaviac

What is optimistic lock and what is pessimistic lock

How to use the least money to quickly open the Taobao traffic portal?

行为树的基本概念及进阶

行为树XML文件 热加载

Yolov5进阶之二安装labelImg

In depth study paper reading target detection (VII) Chinese version: yolov4 optimal speed and accuracy of object detection

Data warehouse (3) star model and dimension modeling of data warehouse modeling

Machine learning (Part 2)

【程序的编译和预处理】

phpcms小程序插件api接口升级到4.3(新增批量获取接口、搜索接口等)

Load other related resources or configurations (promise application of the applet) before loading the homepage of the applet

Solution to the encoding problem encountered by the crawler when requesting get/post

Phpcms V9 mall module (fix the Alipay interface Bug)

Sqoop merge usage

Chargement à chaud du fichier XML de l'arbre de comportement

自动化测试中,三种常用的等待方式,强制式(sleep) 、 隐式 ( implicitly_wait ) 、显式(expected_conditions)

Application of hidden list menu and window transformation in selenium

PD快充磁吸移動電源方案