
Deep Learning Paper Reading, Object Detection (VII), Chinese-English Version: YOLOv4, "Optimal Speed and Accuracy of Object Detection"

2022-06-26 09:00:00 【Jasper0420】


- Abstract

- 1. Introduction

- 2. Related work

- 3. Methodology

- 4. Experiments

- 4.1. Experimental setup

- 4.2. Influence of different features on Classifier training

- 4.3 Influence of different features on Detector training

- 4.4 Influence of different backbones and pre-trained weightings on Detector training

- 4.5 Influence of different mini-batch size on Detector training

- 5. Results

- 6. Conclusions

Abstract

There are a large number of techniques that are said to improve the accuracy of convolutional neural networks (CNNs). Combinations of these techniques need to be tested in practice on large datasets, and the results need theoretical justification. Some techniques work only on certain models and for certain problems, or only on small-scale datasets, while others, such as batch normalization and residual connections, apply to the majority of models, tasks, and datasets. We assume that such universal techniques include Weighted-Residual-Connections (WRC), Cross-Stage-Partial-connections (CSP), Cross mini-Batch Normalization (CmBN), Self-Adversarial Training (SAT), and the Mish activation. In this paper we use the new techniques WRC, CSP, CmBN, SAT, Mish activation, Mosaic data augmentation, DropBlock regularization, and CIoU loss, and combine some of them to achieve state-of-the-art results: 43.5% AP (65.7% AP50) on the MS COCO dataset at a real-time speed of about 65 FPS on a Tesla V100. Source code: GitHub

1. Introduction

Most CNN-based object detectors are largely applicable only to recommendation systems. For example, searching for free parking spaces with urban video cameras is done by slow but accurate models, whereas car collision warning relies on fast but inaccurate models. Improving the accuracy of real-time object detectors makes it possible to use them not only in hint-generating recommendation systems, but also for stand-alone process management and for reducing human input. Running object detectors in real time on conventional GPUs allows their mass usage at an affordable price. The most accurate modern neural networks do not run in real time and require a large number of GPUs for training with a large mini-batch size. We address these problems by creating a CNN that runs in real time on a conventional GPU and whose training requires only one conventional GPU.

The main objective of this work is to design an object detector that runs fast in production systems and is optimized for parallel computation, rather than one that merely has a low theoretical computation indicator (BFLOP). We want the designed detector to be easy to train and use: anyone who trains and tests with a conventional GPU should be able to obtain real-time, high-quality, and convincing object detection results, as the YOLOv4 results in Figure 1 show. Our contributions are summarized as follows:

- We develop an efficient and powerful object detection model that makes it possible for everyone to train a super fast and accurate object detector with a single 1080 Ti or 2080 Ti GPU.

- We verify the influence of state-of-the-art Bag-of-Freebies and Bag-of-Specials methods on object detector training.

- We modify state-of-the-art methods, including CBN [89], PAN [49], and SAM [85], to make them more efficient and suitable for single-GPU training.

2. Related work

2.1. Object detection models

Modern object detectors usually consist of two parts: a backbone pre-trained on ImageNet, and a head that predicts object classes and bounding boxes. For detectors running on a GPU platform, the backbone can be VGG [68], ResNet [26], ResNeXt [86], or DenseNet [30]. For detectors running on a CPU platform, the backbone can be SqueezeNet [31], MobileNet [28, 66, 27, 74], or ShuffleNet [97, 53]. The head is usually divided into two kinds: one-stage and two-stage object detectors. The most representative two-stage detectors are the R-CNN [19] series, including Fast R-CNN [18], Faster R-CNN [64], R-FCN [9], and Libra R-CNN [58]. A two-stage detector can also be anchor-free, such as RepPoints [87]. The most representative one-stage detectors are YOLO [61, 62, 63], SSD [50], and RetinaNet [45]. In recent years, anchor-free one-stage detectors have also been developed, such as CenterNet [13], CornerNet [37, 38], and FCOS [78]. Detectors developed in recent years often insert some layers between the backbone and the head to collect feature maps from different stages; we call this part the neck of the detector. A neck is usually composed of several bottom-up paths and several top-down paths. Networks equipped with this mechanism include Feature Pyramid Network (FPN) [44], Path Aggregation Network (PAN) [49], BiFPN [77], and NAS-FPN [17]. Besides these models, some researchers focus on directly building a new backbone (DetNet [43], DetNAS [7]) or a whole new model (SpineNet [12], HitDetector [20]) for object detection.

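To sum up, an ordinary object detector is composed of the following parts:

- Input: image, patches, image pyramid

- Backbone: e.g. VGG, ResNet, ResNeXt, DenseNet (GPU); SqueezeNet, MobileNet, ShuffleNet (CPU)

- Neck: additional blocks and path-aggregation blocks, e.g. FPN, PAN, BiFPN, NAS-FPN

- Head: dense prediction (one-stage), e.g. YOLO, SSD, RetinaNet (anchor-based) or CenterNet, CornerNet, FCOS (anchor-free); sparse prediction (two-stage), e.g. Faster R-CNN, R-FCN, Libra R-CNN (anchor-based) or RepPoints (anchor-free)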

2.2. Bag of freebies

Usually, a conventional object detector is trained offline. Researchers therefore like to take advantage of this and develop better training methods that make the object detector more accurate without increasing the inference cost. We call these methods, which only change the training strategy or only increase the training cost, the "bag of freebies." A method that is often adopted and fits this definition is data augmentation. Its purpose is to increase the variability of the input images, so that the designed object detection model is more robust to images obtained from different environments. For example, photometric distortions and geometric distortions are two commonly used data augmentation methods, and both clearly benefit the object detection task. For photometric distortion, we adjust the brightness, contrast, hue, saturation, and noise of an image. For geometric distortion, we add random scaling, cropping, flipping, and rotation.
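
A minimal sketch of one photometric and one geometric distortion is shown below, assuming a toy grayscale image stored as a list of rows and boxes given as (x1, y1, x2, y2) pixel coordinates. The names `adjust_brightness` and `hflip` are hypothetical helpers, not from the paper or any library. The point it illustrates is that geometric distortions must also transform the boxes, while photometric ones leave them unchanged.

```python
# Toy image: list of rows of grayscale values in [0, 255].
# Boxes: (x1, y1, x2, y2) in pixel coordinates.

def adjust_brightness(image, delta):
    """Photometric distortion: shift every pixel by delta and clip to [0, 255].
    Boxes are unaffected."""
    return [[min(255, max(0, p + delta)) for p in row] for row in image]


def hflip(image, boxes):
    """Geometric distortion: mirror the image left-right and remap box x-coordinates."""
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    new_boxes = [(width - x2, y1, width - x1, y2) for (x1, y1, x2, y2) in boxes]
    return flipped, new_boxes


img = [[10, 20, 30, 40],
       [50, 60, 70, 80]]
print(adjust_brightness(img, 200))  # [[210, 220, 230, 240], [250, 255, 255, 255]]
print(hflip(img, [(0, 0, 1, 2)]))   # ([[40, 30, 20, 10], [80, 70, 60, 50]], [(3, 0, 4, 2)])
```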

The data augmentation methods mentioned above are all pixel-wise adjustments, and all original pixel information in the adjusted area is retained. In addition, some researchers working on data augmentation focus on simulating object occlusion, and they have achieved good results in image classification and object detection. For example, random erase [100] and CutOut [11] randomly select a rectangular region in an image and fill it with random values or zeros. Hide-and-seek [69] and grid mask [6] randomly or evenly select multiple rectangular regions in an image and replace all of their pixels with zeros. Applying a similar concept to feature maps gives the DropOut [71], DropConnect [80], and DropBlock [16] methods. Other researchers have proposed using multiple images together for data augmentation. For example, MixUp [92] multiplies two images by different coefficients and superimposes them, then adjusts the label according to these ratios. CutMix [91] covers a rectangular region of one image with a cropped patch from another image, and adjusts the label according to the size of the mixed area. Besides these methods, style transfer GAN [15] is also used for data augmentation, which can effectively reduce the texture bias learned by CNNs.
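
The label arithmetic of MixUp and CutMix can be sketched as follows. This is an illustrative pure-Python version on toy nested-list images with one-hot labels; `mixup` and `cutmix` are hypothetical helper names, and in practice the mixing ratio and the pasted box are sampled randomly (here they are passed in explicitly so the result is easy to check).

```python
def mixup(img_a, img_b, label_a, label_b, lam):
    """Blend two images pixel-wise with ratio lam and mix the labels with the same ratio."""
    mixed = [[lam * a + (1 - lam) * b for a, b in zip(ra, rb)]
             for ra, rb in zip(img_a, img_b)]
    label = [lam * la + (1 - lam) * lb for la, lb in zip(label_a, label_b)]
    return mixed, label


def cutmix(img_a, img_b, label_a, label_b, box):
    """Paste region box=(x1, y1, x2, y2) of img_b into a copy of img_a.
    Labels are weighted by the fraction of the image area taken from img_b."""
    x1, y1, x2, y2 = box
    h, w = len(img_a), len(img_a[0])
    out = [row[:] for row in img_a]
    for y in range(y1, y2):
        for x in range(x1, x2):
            out[y][x] = img_b[y][x]
    frac_b = (x2 - x1) * (y2 - y1) / (h * w)
    label = [(1 - frac_b) * la + frac_b * lb for la, lb in zip(label_a, label_b)]
    return out, label


a = [[0, 0], [0, 0]]
b = [[1, 1], [1, 1]]
print(mixup(a, b, [1, 0], [0, 1], 0.75))
# ([[0.25, 0.25], [0.25, 0.25]], [0.75, 0.25])
print(cutmix(a, b, [1, 0], [0, 1], (0, 0, 1, 2)))
# ([[1, 0], [1, 0]], [0.5, 0.5])
```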

Unlike the methods above, other bag-of-freebies methods are dedicated to the problem that the semantic distribution in a dataset may be biased. A very important aspect of semantic distribution bias is the data imbalance between classes. Two-stage detectors often handle this problem with hard negative example mining [72] or online hard example mining [67]. However, example mining is not applicable to one-stage detectors, because they have a dense prediction architecture. Therefore, Lin et al. [45] proposed focal loss to deal with the data imbalance between classes. Another very important issue is that the one-hot hard representation usually used for labeling can hardly express the degree of association between categories. The label smoothing proposed in [73] converts hard labels into soft labels for training, which makes the model more robust. To obtain better soft labels, Islam et al. [33] introduced the concept of knowledge distillation to design a label refinement network.
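
Label smoothing and focal loss can each be written in a few lines. The sketch below assumes a single sigmoid output for the focal loss; α = 0.25 and γ = 2 are the defaults commonly used with RetinaNet and are assumptions here, not values from this paper. The function names are hypothetical.

```python
import math


def smooth_labels(one_hot, eps=0.1):
    """Label smoothing: keep 1 - eps of the mass on the hard label and spread eps uniformly."""
    k = len(one_hot)
    return [y * (1 - eps) + eps / k for y in one_hot]


def binary_focal_loss(p, y, alpha=0.25, gamma=2.0):
    """Focal loss for one sigmoid output p in (0, 1) with target y in {0, 1}.
    The (1 - p_t) ** gamma factor shrinks the loss of easy, well-classified examples."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


print(smooth_labels([0, 1, 0, 0]))  # approx. [0.025, 0.925, 0.025, 0.025]
print(binary_focal_loss(0.9, 1))    # easy positive: tiny loss
print(binary_focal_loss(0.1, 1))    # hard positive: much larger loss
```

With γ = 0 and α = 0.5 the focal loss reduces to half the ordinary binary cross-entropy, which is a convenient sanity check.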

The last bag of freebies is the objective function of bounding box (BBox) regression. A conventional detector usually uses the mean squared error (MSE) to regress directly on the center point coordinates and the width and height of the BBox, i.e. {x_center, y_center, w, h}, or on the upper-left and lower-right points, i.e. {x_top_left, y_top_left, x_bottom_right, y_bottom_right}. Anchor-based methods estimate the corresponding offsets, for example {x_center_offset, y_center_offset, w_offset, h_offset} and {x_top_left_offset, y_top_left_offset, x_bottom_right_offset, y_bottom_right_offset}. However, directly estimating the coordinate of each BBox point treats these points as independent variables and ignores the integrity of the object itself. To handle this problem better, some researchers recently proposed the IoU loss [90], which considers the coverage of the predicted BBox area and the ground-truth BBox area. The IoU loss computes the IoU between the prediction and the ground truth from all four BBox coordinates, so the coordinates are optimized as one unit rather than independently. Because IoU is scale-invariant, it solves the problem of traditional methods that compute the l1 or l2 loss of {x, y, w, h}, where the loss grows with the scale of the object. Recently, some researchers have continued to improve the IoU loss. For example, the GIoU loss [65] considers the shape and orientation of the object in addition to the coverage area: it finds the smallest BBox C that simultaneously covers the predicted BBox and the ground-truth BBox, and uses it to penalize the IoU, GIoU = IoU − (|C| − |union|) / |C|. The DIoU loss [99] additionally considers the distance between the centers of the boxes, and the CIoU loss [99] simultaneously considers the overlapping area, the distance between center points, and the aspect ratio. CIoU achieves better convergence speed and accuracy on the BBox regression problem.
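
The IoU-family losses can be sketched for a single pair of boxes in (x1, y1, x2, y2) form. This is an illustrative pure-Python version following the published definitions (GIoU subtracts (C − U)/C from the IoU; DIoU adds the center term ρ²/c²; CIoU further adds αv for aspect ratio). It is not the authors' Darknet code, and it assumes boxes with positive width and height.

```python
import math


def _area(b):
    return max(0.0, b[2] - b[0]) * max(0.0, b[3] - b[1])


def _inter_union(a, b):
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    return inter, _area(a) + _area(b) - inter


def _enclosing(a, b):
    """Smallest box C covering both a and b."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def _center_penalty(a, b):
    """rho^2 / c^2: squared center distance over the squared diagonal of C."""
    ex1, ey1, ex2, ey2 = _enclosing(a, b)
    c2 = (ex2 - ex1) ** 2 + (ey2 - ey1) ** 2
    dx = (a[0] + a[2]) / 2 - (b[0] + b[2]) / 2
    dy = (a[1] + a[3]) / 2 - (b[1] + b[3]) / 2
    return (dx * dx + dy * dy) / c2


def iou_loss(pred, gt):
    inter, union = _inter_union(pred, gt)
    return 1.0 - inter / union


def giou_loss(pred, gt):
    inter, union = _inter_union(pred, gt)
    c = _area(_enclosing(pred, gt))
    return 1.0 - (inter / union - (c - union) / c)


def diou_loss(pred, gt):
    return iou_loss(pred, gt) + _center_penalty(pred, gt)


def ciou_loss(pred, gt):
    inter, union = _inter_union(pred, gt)
    iou = inter / union
    # v measures aspect-ratio inconsistency; alpha is its trade-off weight
    v = (4 / math.pi ** 2) * (math.atan((gt[2] - gt[0]) / (gt[3] - gt[1]))
                              - math.atan((pred[2] - pred[0]) / (pred[3] - pred[1]))) ** 2
    alpha = v / ((1.0 - iou) + v) if v > 0 else 0.0
    return 1.0 - iou + _center_penalty(pred, gt) + alpha * v


gt = (0.0, 0.0, 2.0, 2.0)
near, far = (3.0, 0.0, 5.0, 2.0), (6.0, 0.0, 8.0, 2.0)
print(iou_loss(near, gt), iou_loss(far, gt))      # 1.0 1.0: IoU cannot rank two misses
print(giou_loss(near, gt) < giou_loss(far, gt))   # True: GIoU prefers the closer box
```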

2.3. Bag of specials

Plug-in modules and post-processing methods that increase the inference cost only slightly but significantly improve the accuracy of object detection are called the "bag of specials." Generally speaking, these plug-in modules enhance certain attributes of a model, such as enlarging the receptive field, introducing an attention mechanism, or strengthening feature integration, while post-processing is a method for screening the model's prediction results.

Common modules that can be used to enlarge the receptive field are SPP [25], ASPP [5], and RFB [47]. The SPP module originates from Spatial Pyramid Matching (SPM) [39]. SPM splits a feature map into several d × d equal blocks, where d can be {1, 2, 3, ...}, thus forming a spatial pyramid, and then extracts bag-of-words features. SPP integrates SPM into a CNN and uses max-pooling instead of the bag-of-words operation. Since the SPP module proposed by He et al. [25] outputs a one-dimensional feature vector, it cannot be applied in a Fully Convolutional Network (FCN). Therefore, in the design of YOLOv3 [63], Redmon and Farhadi changed the SPP module into the concatenation of max-pooling outputs with kernel size k × k, where k = {1, 5, 9, 13} and the stride equals 1. With this design, a relatively large k × k max-pooling effectively increases the receptive field of the backbone feature. After adding the improved SPP module, YOLOv3-608 improves AP50 on MS COCO by 2.7% at the cost of 0.5% extra computation. The difference in operation between the ASPP [5] module and the improved SPP module is mainly that ASPP replaces the k × k max-pooling with stride 1 by several 3 × 3 dilated convolutions with dilation ratio k and stride 1. The RFB module uses several dilated convolutions with a k × k kernel, dilation ratio k, and stride 1 to obtain a more comprehensive spatial coverage than ASPP. RFB [47] costs only 7% extra inference time to increase the AP50 of SSD on MS COCO by 5.7%.
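
The YOLOv3-style SPP block described above can be sketched as follows: every channel is max-pooled with stride 1 and kernels k ∈ {1, 5, 9, 13}, padded so the spatial size is unchanged, and the results are concatenated along the channel axis. The feature map is a toy C × H × W nested list, and `max_pool_same` and `spp_block` are hypothetical names.

```python
def max_pool_same(channel, k):
    """Stride-1 k x k max pooling with k // 2 padding so the output keeps H x W.
    Padded positions are ignored, which is equivalent to padding with -inf."""
    h, w = len(channel), len(channel[0])
    r = k // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [channel[yy][xx]
                      for yy in range(max(0, y - r), min(h, y + r + 1))
                      for xx in range(max(0, x - r), min(w, x + r + 1))]
            row.append(max(window))
        out.append(row)
    return out


def spp_block(feature_map, kernels=(1, 5, 9, 13)):
    """Pool every channel with each kernel size and concatenate along the channel
    axis, so C input channels become C * len(kernels) output channels."""
    return [max_pool_same(ch, k) for k in kernels for ch in feature_map]


fm = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]]]  # 1 channel, 3 x 3
out = spp_block(fm)
print(len(out))  # 4: one channel per kernel size
print(out[1])    # k = 5 covers the whole 3 x 3 map: [[9, 9, 9], [9, 9, 9], [9, 9, 9]]
```

The k = 1 branch is the identity, so the original features are kept alongside the pooled ones.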

The attention modules often used in object detection are mainly divided into channel-wise attention and point-wise attention, represented by Squeeze-and-Excitation (SE) [29] and the Spatial Attention Module (SAM) [85], respectively. Although the SE module improves the top-1 accuracy of ResNet50 on the ImageNet image classification task by 1% at the cost of only 2% more computation, on a GPU it usually increases inference time by about 10%, so it is more appropriate for mobile devices. SAM, on the other hand, needs only 0.1% extra computation to improve the top-1 accuracy of ResNet50-SE on ImageNet by 0.5%. Best of all, it does not affect the inference speed on a GPU at all.

In terms of feature integration, early practice used skip connections [51] or hyper-columns [22] to integrate low-level physical features into high-level semantic features. As multi-scale prediction methods such as FPN have become popular, many lightweight modules that integrate different feature pyramids have been proposed, including SFAM [98], ASFF [48], and BiFPN [77]. The main idea of SFAM is to use an SE module to perform channel-wise reweighting on multi-scale concatenated feature maps. ASFF uses softmax for point-wise reweighting and then adds feature maps of different scales. BiFPN uses multi-input weighted residual connections to perform scale-wise reweighting and then adds feature maps of different scales.
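
An ASFF-style point-wise reweighting can be sketched as below. It assumes the feature maps from different levels have already been resized to a common resolution, and that the per-level weight logits (predicted by a 1 × 1 convolution in a real network) are passed in directly; `asff_fuse` is a hypothetical name.

```python
import math


def asff_fuse(levels, logits):
    """levels: L feature maps (H x W nested lists) already resized to one scale.
    logits: L weight maps (H x W), one per level.
    At every pixel a softmax over the L logits yields weights that sum to 1,
    and the output is the weighted sum of the L feature values at that pixel."""
    h, w = len(levels[0]), len(levels[0][0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            z = [lg[y][x] for lg in logits]
            m = max(z)  # subtract the max for a numerically stable softmax
            e = [math.exp(v - m) for v in z]
            s = sum(e)
            out[y][x] = sum(ei / s * lv[y][x] for ei, lv in zip(e, levels))
    return out


print(asff_fuse([[[2.0]], [[4.0]]], [[[0.0]], [[0.0]]]))  # [[3.0]]: equal weights average
```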

In deep learning research, some people focus on finding good activation functions. A good activation function lets the gradient propagate more efficiently without adding much extra computational cost. In 2010, Nair and Hinton [56] proposed ReLU to substantially solve the vanishing gradient problem frequently encountered with the traditional tanh and sigmoid activation functions. Subsequently, LReLU [54], PReLU [24], ReLU6 [28], Scaled Exponential Linear Unit (SELU) [35], Swish [59], hard-Swish [27], and Mish [55] were proposed, and they are also used to address the vanishing gradient problem. The main purpose of LReLU and PReLU is to solve the problem that the gradient of ReLU is zero when the output is less than zero. ReLU6 and hard-Swish are specially designed for quantization networks. SELU was proposed for self-normalizing neural networks. Note that both Swish and Mish are continuously differentiable activation functions.
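
For reference, the scalar forms of several of the activations listed above are sketched below in pure Python. The leaky slope of 0.1 is an assumption (it is the value used by Darknet's leaky activation; other frameworks often default to 0.01). Swish uses β = 1 by default, and softplus is computed in an overflow-safe form.

```python
import math


def sigmoid(x):
    # split by sign so math.exp never overflows
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def relu(x):
    return max(0.0, x)


def leaky_relu(x, slope=0.1):
    return x if x > 0 else slope * x


def relu6(x):
    return min(max(0.0, x), 6.0)


def swish(x, beta=1.0):
    return x * sigmoid(beta * x)


def hard_swish(x):
    return x * relu6(x + 3.0) / 6.0


def softplus(x):
    # log(1 + e^x) written as log1p(e^-|x|) + max(x, 0) to avoid overflow
    return math.log1p(math.exp(-abs(x))) + max(x, 0.0)


def mish(x):
    return x * math.tanh(softplus(x))


for v in (-2.0, 0.0, 1.0):
    print(v, leaky_relu(v), swish(v), mish(v))
```

Unlike ReLU, both Swish and Mish let small negative values through and are smooth at zero, which is the continuous-differentiability property noted above.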

The post-processing method commonly used in deep-learning-based object detection is NMS (non-maximum suppression), which filters out BBoxes that badly predict the same object and retains only the candidate BBoxes with higher response. The way NMS tries to improve detection is consistent with the method of optimizing an objective function. The original NMS does not consider context information, so Girshick et al. [19] added the classification confidence score in R-CNN as a reference, and performed greedy NMS in descending order of confidence score. Soft NMS [1] addresses the problem that occlusion of an object may degrade its confidence score in greedy NMS with IoU scoring. DIoU NMS [99] adds center-point distance information to the BBox screening process on top of soft NMS. It is worth mentioning that, since none of the above post-processing methods directly involves the captured image features, post-processing is no longer required in the subsequent development of anchor-free methods.
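
Greedy NMS and its DIoU variant differ only in the overlap criterion: DIoU-NMS subtracts the center-distance term ρ²/c² from the IoU, so overlapping boxes whose centers are far apart are less likely to be suppressed. The sketch below is class-agnostic and assumes boxes in (x1, y1, x2, y2) form; soft NMS, which decays scores instead of discarding boxes, is not shown, and the function names are hypothetical.

```python
def _iou(a, b):
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def _center_penalty(a, b):
    """rho^2 / c^2 term of DIoU: squared center distance over squared enclosing diagonal."""
    cw = max(a[2], b[2]) - min(a[0], b[0])
    ch = max(a[3], b[3]) - min(a[1], b[1])
    dx = (a[0] + a[2] - b[0] - b[2]) / 2
    dy = (a[1] + a[3] - b[1] - b[3]) / 2
    return (dx * dx + dy * dy) / (cw * cw + ch * ch)


def nms(boxes, scores, threshold=0.5, use_diou=False):
    """Greedy NMS: keep the highest-scoring remaining box, drop boxes whose overlap
    with it exceeds threshold, and repeat. Returns indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        survivors = []
        for i in order:
            overlap = _iou(boxes[best], boxes[i])
            if use_diou:
                overlap -= _center_penalty(boxes[best], boxes[i])
            if overlap <= threshold:
                survivors.append(i)
        order = survivors
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(nms(boxes, [0.9, 0.8, 0.7]))  # [0, 2]: box 1 overlaps box 0 with IoU of about 0.68
```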

3. Methodology

Our basic aim is a fast operating speed of the neural network in production systems and optimization for parallel computation, rather than a low theoretical computation indicator (BFLOP). We present two options of real-time neural networks:

- For GPU, we use a small number of groups (1-8) in convolutional layers: CSPResNeXt50 / CSPDarknet53

- For VPU, we use grouped convolution but refrain from using Squeeze-and-Excitation (SE) blocks. This includes the following models: EfficientNet-lite / MixNet [76] / GhostNet [21] / MobileNetV3

3.1 Selection of architecture

Our objective is to find the optimal balance among the input network resolution, the number of convolutional layers, the number of parameters (filter_size² × filters × channels / groups), and the number of layer outputs (filters). For instance, our numerous studies show that CSPResNeXt50 is considerably better than CSPDarknet53 at object classification on the ILSVRC2012 (ImageNet) dataset. Conversely, CSPDarknet53 is better than CSPResNeXt50 at object detection on the MS COCO dataset.
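
The parameter-count formula above can be checked with a small helper. The layer sizes in the example are hypothetical; the calculation shows why grouped convolutions, as used in the VPU-oriented models, divide the number of weights by the group count. Bias and batch-normalization parameters are ignored, as in the formula, and `conv_params` is a hypothetical name.

```python
def conv_params(kernel_size, filters, channels, groups=1):
    """Weights of one conv layer: kernel_size^2 * filters * channels / groups."""
    if channels % groups or filters % groups:
        raise ValueError("channels and filters must be divisible by groups")
    return kernel_size ** 2 * filters * channels // groups


print(conv_params(3, 256, 256))              # 589824: ordinary 3 x 3 conv
print(conv_params(3, 256, 256, groups=32))   # 18432: 32 groups
print(conv_params(3, 256, 256, groups=256))  # 2304: depthwise conv
```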

The next objective is to select additional blocks for increasing the receptive field, and the best method of aggregating parameters from different backbone levels for different detector levels, e.g. FPN, PAN, ASFF, BiFPN.

A reference model that is optimal for classification is not always optimal as a detector. In contrast to a classifier, a detector requires the following:

- Higher input network size (resolution): for detecting multiple small-sized objects

- More layers: for a larger receptive field to cover the increased size of the network input

- More parameters: for greater model capacity to detect multiple objects of different sizes in a single image

Hypothetically speaking, we can assume that a model with a larger receptive field (with more 3 × 3 convolutional layers) and a larger number of parameters should be selected as the backbone. Table 1 shows information on CSPResNeXt50, CSPDarknet53, and EfficientNet B3. CSPResNeXt50 contains only 16 convolutional layers of size 3 × 3, a 425 × 425 receptive field, and 20.6M parameters, while CSPDarknet53 contains 29 convolutional layers of size 3 × 3, a 725 × 725 receptive field, and 27.6M parameters. This theoretical justification, together with our numerous experiments, shows that CSPDarknet53 is the better of the two networks as the backbone for a detector.
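
The link between stacking 3 × 3 layers and the receptive field can be illustrated with the standard recurrence r_out = r_in + (k − 1) · j_in and j_out = j_in · s, where j is the accumulated stride. The sketch assumes a plain chain of layers given as (kernel_size, stride) pairs and ignores dilation and branches; the layer lists are toy examples, not the actual CSPResNeXt50 or CSPDarknet53 configurations.

```python
def receptive_field(layers):
    """Theoretical receptive field of a plain chain of conv/pool layers.
    layers: sequence of (kernel_size, stride) pairs."""
    rf, jump = 1, 1
    for k, s in layers:
        rf += (k - 1) * jump  # each layer widens the field by (k - 1) input-space steps
        jump *= s             # later layers step over `jump` input pixels at a time
    return rf


print(receptive_field([(3, 1)] * 3))              # 7
print(receptive_field([(3, 2)] * 2 + [(3, 1)]))   # 15: strides amplify later 3 x 3 layers
```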

The influence of receptive fields of different sizes is summarized as follows:

- Up to the object size: allows viewing the entire object

- Up to the network size: allows viewing the context around the object

- Exceeding the network size: increases the number of connections between the image point and the final activation

We add the SPP block over CSPDarknet53, since it significantly increases the receptive field, separates out the most significant context features, and causes almost no reduction in network operation speed. We use PANet, instead of the FPN used in YOLOv3, as the method of parameter aggregation from different backbone levels for different detector levels. Finally, we choose the CSPDarknet53 backbone, the SPP additional module, the PANet path-aggregation neck, and the YOLOv3 (anchor-based) head as the architecture of YOLOv4.

In the future, we plan to significantly expand the content of the Bag of Freebies (BoF) for the detector, which theoretically can address some problems and increase detector accuracy, and to check the influence of each feature sequentially in an experimental fashion.

We do not use Cross-GPU Batch Normalization (CGBN or SyncBN) or expensive specialized devices. This allows anyone to reproduce our state-of-the-art results on a conventional graphics processor, e.g. a GTX 1080 Ti or RTX 2080 Ti.

3.2. Selection of BoF and BoS

To improve object detection training, a CNN usually uses the following:

- Activations: ReLU, leaky-ReLU, parametric-ReLU, ReLU6, SELU, Swish, or Mish

- Bounding box regression loss: MSE, IoU, GIoU, CIoU, DIoU

- Data augmentation: CutOut, MixUp, CutMix

- Regularization method: DropOut, DropPath [36], Spatial DropOut [79], or DropBlock

- Normalization of the network activations by their mean and variance: Batch Normalization (BN) [32], Cross-GPU Batch Normalization (CGBN or SyncBN) [93], Filter Response Normalization (FRN) [70], or Cross-Iteration Batch Normalization (CBN) [89]

- Skip-connections: residual connections, weighted residual connections, multi-input weighted residual connections, or Cross stage partial connections (CSP)

As for the training activation function, since PReLU and SELU are more difficult to train, and ReLU6 is specifically designed for quantization networks, we remove these activation functions from the candidate list. For the regularization method, the authors of DropBlock compared their method with other methods in detail, and their regularization method won by a large margin, so we did not hesitate to choose DropBlock. As for the normalization method, since we focus on a training strategy that uses only one GPU, syncBN is not considered.
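
Since DropBlock is the chosen regularizer, here is a minimal sketch of how it builds its mask for one square channel during training. The γ formula follows the DropBlock paper. Sampling block top-left corners only where a full block fits, and the helper name `dropblock`, are choices made for this illustration rather than a specific framework's implementation.

```python
import random


def dropblock(feature, block_size=3, keep_prob=0.9, rng=None):
    """Training-mode DropBlock for one square n x n channel (nested lists).
    Zeroes contiguous block_size x block_size regions and rescales the survivors
    by (total units / kept units)."""
    rng = rng or random.Random()
    n = len(feature)
    # gamma: seed probability chosen so roughly (1 - keep_prob) of the units are dropped
    gamma = ((1.0 - keep_prob) / block_size ** 2) * n ** 2 / (n - block_size + 1) ** 2
    mask = [[1] * n for _ in range(n)]
    # seeds are sampled only where a full block fits inside the map
    for y in range(n - block_size + 1):
        for x in range(n - block_size + 1):
            if rng.random() < gamma:
                for dy in range(block_size):
                    for dx in range(block_size):
                        mask[y + dy][x + dx] = 0
    kept = sum(map(sum, mask))
    if kept == 0:
        return [[0.0] * n for _ in range(n)]
    scale = n * n / kept
    return [[v * m * scale for v, m in zip(row, mrow)]
            for row, mrow in zip(feature, mask)]


out = dropblock([[1.0] * 6 for _ in range(6)], block_size=3, keep_prob=0.8,
                rng=random.Random(0))
```

Dropping whole blocks rather than independent units matters for convolutional features, because neighboring activations are correlated and ordinary dropout lets the information leak through from adjacent pixels.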

3.3. Additional improvements

To make the designed detector more suitable for training on a single GPU, we made the following additional designs and improvements:

- We introduce a new data augmentation method, Mosaic, and Self-Adversarial Training (SAT)

- We select optimal hyperparameters while applying genetic algorithms

- We modify some existing methods to make our design suitable for efficient training and detection: modified SAM, modified PAN, and Cross mini-Batch Normalization (CmBN)

Mosaic is a new data augmentation method that mixes 4 training images. Thus 4 different contexts are mixed, while CutMix mixes only 2 input images. This allows objects to be detected outside their normal context. In addition, batch normalization calculates activation statistics from 4 different images on each layer, which significantly reduces the need for a large mini-batch size.
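
A simplified Mosaic can be sketched as below: the canvas is split at a point (cx, cy) into four quadrants, each quadrant is filled from one image, and each image's boxes are shifted into canvas coordinates and clipped to their quadrant. This is a hypothetical helper under simplifying assumptions: the split point is passed in rather than sampled, each image is at least as large as the canvas, and the random rescaling and cropping of each image done in practice are omitted.

```python
def mosaic(images, boxes_list, size, cx, cy):
    """Combine 4 images into one size x size canvas split at (cx, cy).
    Quadrant order: top-left, top-right, bottom-left, bottom-right.
    Each image contributes the crop (from its own top-left corner) that fits its
    quadrant; boxes (x1, y1, x2, y2, cls) are shifted, clipped, and dropped if empty."""
    regions = [(0, 0, cx, cy), (cx, 0, size, cy), (0, cy, cx, size), (cx, cy, size, size)]
    canvas = [[0] * size for _ in range(size)]
    out_boxes = []
    for img, boxes, (x0, y0, x1, y1) in zip(images, boxes_list, regions):
        for y in range(y0, y1):
            for x in range(x0, x1):
                canvas[y][x] = img[y - y0][x - x0]
        for (bx1, by1, bx2, by2, cls) in boxes:
            # shift from image coordinates to canvas coordinates, then clip to the quadrant
            nx1, ny1 = max(bx1 + x0, x0), max(by1 + y0, y0)
            nx2, ny2 = min(bx2 + x0, x1), min(by2 + y0, y1)
            if nx2 > nx1 and ny2 > ny1:
                out_boxes.append((nx1, ny1, nx2, ny2, cls))
    return canvas, out_boxes


imgs = [[[k] * 4 for _ in range(4)] for k in (1, 2, 3, 4)]
boxes = [[(0, 0, 1, 1, "a")], [(3, 0, 4, 1, "b")], [], [(1, 1, 3, 3, "d")]]
canvas, out = mosaic(imgs, boxes, size=4, cx=2, cy=2)
print(canvas)  # [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
print(out)     # [(0, 0, 1, 1, 'a'), (3, 3, 4, 4, 'd')]; box "b" lies outside its crop
```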

Self-Adversarial Training (SAT) is also a new data augmentation technique that operates in 2 forward-backward stages. In the 1st stage, the neural network alters the original image instead of the network weights. In this way the neural network executes an adversarial attack on itself, altering the original image to create the deception that there is no desired object on the image. In the 2nd stage, the neural network is trained to detect an object on this modified image in the normal way.
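
The two SAT stages can be illustrated on a toy logistic "objectness" model instead of a CNN. The sketch assumes an FGSM-style sign step for stage 1 (the text does not specify how the image is altered); `sat_step`, `score`, and their parameters are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    return (v > 0) - (v < 0)


def score(w, b, x):
    """Toy objectness score of input x under weights w and bias b."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sat_step(w, b, x, y, eps=0.1, lr=0.5):
    # Stage 1: weights frozen; move the input in the direction that increases the
    # binary cross-entropy, d(BCE)/dx_i = (p - y) * w_i (FGSM-style, an assumption)
    p = score(w, b, x)
    x_adv = [xi + eps * sign((p - y) * wi) for wi, xi in zip(w, x)]
    # Stage 2: ordinary training step on the modified input with the original label
    p_adv = score(w, b, x_adv)
    g = p_adv - y  # d(BCE)/dz for a sigmoid output
    new_w = [wi - lr * g * xi for wi, xi in zip(w, x_adv)]
    new_b = b - lr * g
    return new_w, new_b, x_adv, p, p_adv


w, b, x_adv, p, p_adv = sat_step([1.0, -1.0], 0.0, [1.0, 0.0], y=1)
print(p > p_adv)  # True: the stage-1 attack lowered the objectness score
```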

CmBN is a modified version of CBN, as shown in Figure 4, and is defined as Cross mini-Batch Normalization (CmBN). It collects statistics only between the mini-batches within a single batch.
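
The difference in the statistics window can be sketched for one channel. In this hypothetical `CmBNStats` helper, which reflects our reading of Figure 4, sums are accumulated over the mini-batches of the current batch, each mini-batch is normalized with the statistics gathered so far, and the accumulator is reset when a new batch (one weight update) starts. It omits the learnable scale and shift, as well as the compensation for weight changes between iterations that CBN [89] uses.

```python
class CmBNStats:
    """Per-channel statistics accumulated across the mini-batches of one batch."""

    def __init__(self):
        self.reset()

    def reset(self):
        # call at the start of each batch, i.e. after every weight update
        self.n = 0
        self.s = 0.0
        self.sq = 0.0

    def normalize(self, values, eps=1e-5):
        self.n += len(values)
        self.s += sum(values)
        self.sq += sum(v * v for v in values)
        mean = self.s / self.n
        var = self.sq / self.n - mean * mean
        return [(v - mean) / (var + eps) ** 0.5 for v in values]


stats = CmBNStats()
first = stats.normalize([0.0, 2.0])   # statistics of mini-batch 1 only: mean 1, var 1
second = stats.normalize([4.0, 6.0])  # statistics of both mini-batches: mean 3, var 5
```

Plain BN would normalize the second mini-batch with its own mean of 5, whereas the accumulated statistics use all 4 samples seen so far in the batch.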

We modify SAM from spatial-wise attention to point-wise attention, as shown in Figure 5, and replace the shortcut connection of PAN with concatenation, as shown in Figure 6.
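
Both modifications can be sketched on a toy C × H × W nested-list tensor. The point-wise SAM below applies a convolution to the input, a sigmoid, and an element-wise multiplication, without the channel pooling of the original SAM. Using a 1 × 1 convolution is an assumption made to keep the sketch short. The PAN change is shown as channel concatenation instead of addition. All names are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def conv1x1(feature, weights):
    """feature: C_in x H x W nested lists; weights: C_out x C_in. Returns C_out x H x W."""
    h, w = len(feature[0]), len(feature[0][0])
    return [[[sum(wo[c] * feature[c][y][x] for c in range(len(feature)))
              for x in range(w)] for y in range(h)] for wo in weights]


def modified_sam(feature, weights):
    """Point-wise attention: one attention value per channel and pixel, squashed by a
    sigmoid and multiplied element-wise with the input (weights must be C x C)."""
    att = conv1x1(feature, weights)
    return [[[v * sigmoid(a) for v, a in zip(rf, ra)] for rf, ra in zip(cf, ca)]
            for cf, ca in zip(feature, att)]


def concat_channels(a, b):
    """Modified PAN shortcut: concatenate along the channel axis instead of adding."""
    return a + b


f = [[[1.0, 2.0]], [[3.0, 4.0]]]  # 2 channels, 1 x 2 map
print(modified_sam(f, [[0.0, 0.0], [0.0, 0.0]]))  # [[[0.5, 1.0]], [[1.5, 2.0]]]
print(len(concat_channels(f, f)))                 # 4 channels
```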

3.4. YOLOv4

In this section, we describe YOLOv4 in detail. YOLOv4 consists of:

- Backbone: CSPDarknet53 [81]

- Neck: SPP [25], PAN [49]

- Head: YOLOv3 [63]

YOLOv4 uses:

- Bag of Freebies (BoF) for backbone: CutMix and Mosaic data augmentation, DropBlock regularization, class label smoothing

- Bag of Specials (BoS) for backbone: Mish activation, Cross-stage partial connections (CSP), Multi-input weighted residual connections (MiWRC)

- Bag of Freebies (BoF) for detector: CIoU loss, CmBN, DropBlock regularization, Mosaic data augmentation, Self-Adversarial Training, eliminating grid sensitivity, using multiple anchors for a single ground truth, cosine annealing scheduler [52], optimal hyperparameters, random training shapes

- Bag of Specials (BoS) for detector: Mish activation, SPP-block, SAM-block, PAN path-aggregation block, DIoU-NMS

4. Experiments

We test the influence of different training improvement techniques on the accuracy of the classifier on the ImageNet (ILSVRC 2012 val) dataset, and then on the accuracy of the detector on the MS COCO (test-dev 2017) dataset.

4.1. Experimental setup

In the ImageNet image classification experiments, the default hyperparameters are as follows: the number of training steps is 8,000,000; the batch size and mini-batch size are 128 and 32, respectively; the polynomial decay learning rate scheduling strategy is adopted with an initial learning rate of 0.1; the number of warm-up steps is 1000; and momentum and weight decay are set to 0.9 and 0.005, respectively. All BoS experiments use the same hyperparameters as the default setting, and the BoF experiments add an additional 50% training steps. In the BoF experiments, we verify MixUp, CutMix, Mosaic, and blurring data augmentation, and label smoothing regularization. In the BoS experiments, we compare the effects of the LReLU, Swish, and Mish activation functions. All experiments are trained on a 1080 Ti or 2080 Ti GPU.
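
The ImageNet schedule can be sketched as a function of the step. The initial learning rate, total steps, and warm-up steps come from the text; the decay power of 4 and the use of the same power during warm-up are assumptions (the text does not state them), and `poly_lr` is a hypothetical name.

```python
def poly_lr(step, base_lr=0.1, max_steps=8_000_000, warmup_steps=1000,
            power=4.0, warmup_power=4.0):
    """Warm-up followed by polynomial decay.
    power and warmup_power are assumed values, not given in the text."""
    if step < warmup_steps:
        return base_lr * (step / warmup_steps) ** warmup_power
    return base_lr * (1 - step / max_steps) ** power


print(poly_lr(4_000_000))  # 0.00625: halfway through, (1 - 0.5) ** 4 = 0.0625 of base_lr
```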

In the MS COCO object detection experiments, the default hyperparameters are as follows: the number of training steps is 500,500; the step decay learning rate scheduling strategy is adopted with an initial learning rate of 0.01, multiplied by a factor of 0.1 at 400,000 and 450,000 steps; and momentum and weight decay are set to 0.9 and 0.0005, respectively. All architectures use a single GPU to perform multi-scale training with a batch size of 64, while the mini-batch size is 8 or 4, depending on the architecture and GPU memory limitations. Except for the hyperparameter search experiments that use a genetic algorithm, all other experiments use the default setting. The genetic algorithm experiments use YOLOv3-SPP trained with the GIoU loss and search for 300 epochs on the min-val 5k set. For the genetic algorithm experiments we adopt the searched learning rate of 0.00261, momentum of 0.949, IoU threshold of 0.213 for assigning ground truth, and loss normalizer of 0.07. We verify a large number of BoF, including grid sensitivity elimination, Mosaic data augmentation, IoU threshold, genetic algorithm, class label smoothing, cross mini-batch normalization, self-adversarial training, cosine annealing scheduler, dynamic mini-batch size, DropBlock, optimized anchors, and different kinds of IoU losses. We also experiment with various BoS, including Mish, SPP, SAM, RFB, BiFPN, and Gaussian YOLO [8]. For all experiments, we use only one GPU for training, so techniques such as syncBN that optimize multi-GPU training are not used.
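
The MS COCO step schedule and the batch/mini-batch relation can be sketched as follows. The milestones and factor come from the text. Reading the mini-batch setting as gradient accumulation (64 / 8 = 8 forward-backward passes per weight update, as with Darknet's subdivisions) is our interpretation, and the function names are hypothetical.

```python
def step_lr(step, base_lr=0.01, milestones=(400_000, 450_000), factor=0.1):
    """Step decay: multiply the learning rate by factor at each milestone reached."""
    lr = base_lr
    for m in milestones:
        if step >= m:
            lr *= factor
    return lr


def accumulation_steps(batch=64, mini_batch=8):
    """Forward-backward passes whose gradients are summed before one weight update."""
    if batch % mini_batch:
        raise ValueError("batch must be a multiple of mini_batch")
    return batch // mini_batch


print(step_lr(0), step_lr(420_000), step_lr(500_000))  # roughly 0.01, 0.001, 0.0001
print(accumulation_steps(64, 8), accumulation_steps(64, 4))  # 8 16
```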

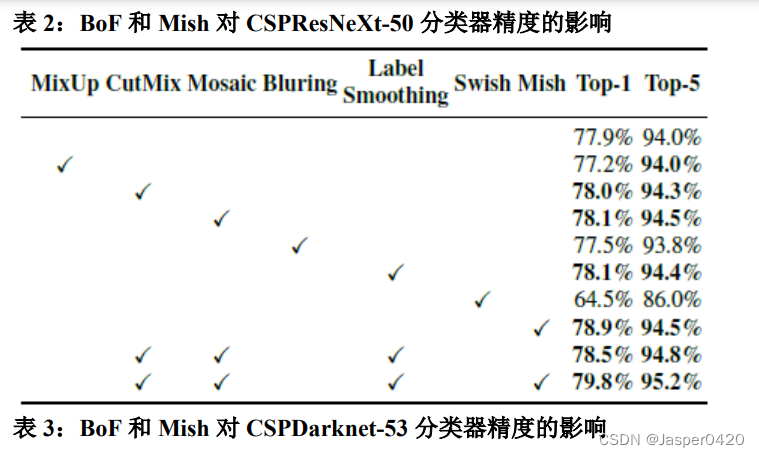

4.2. Influence of different features on Classifier training

First, we study the influence of different features on classifier training: specifically, the influence of class label smoothing; of different data augmentation techniques, namely bilateral blurring, MixUp, CutMix, and Mosaic, as shown in Figure 7; and of different activations, such as Leaky-ReLU (the default), Swish, and Mish.

As shown in Table 2, our experiments show that the classifier's accuracy is improved by introducing features such as CutMix and Mosaic data augmentation, class label smoothing, and the Mish activation. As a result, our BoF-backbone (Bag of Freebies) for classifier training includes CutMix and Mosaic data augmentation and class label smoothing. In addition, we use the Mish activation as a complementary option, as shown in Tables 2 and 3.

4.3 Influence of different features on Detector training

A further study concerns the influence of different Bag-of-Freebies (BoF-detector) on detector training accuracy, as shown in Table 4. We significantly expand the BoF list by studying features that increase detector accuracy without affecting FPS:

- S: Eliminate grid sensitivity. YOLOv3 evaluates object coordinates with the equations b_x = σ(t_x) + c_x and b_y = σ(t_y) + c_y, where c_x and c_y are always whole numbers. Therefore, extremely large absolute values of t_x are required for b_x to approach c_x or c_x + 1. We solve this problem by multiplying the sigmoid by a factor exceeding 1.0, which eliminates the effect of grid cells on which the object is undetectable.

- M: Mosaic data augmentation: using a mosaic of 4 images during training instead of a single image

- IT: IoU threshold: using multiple anchors for a single ground truth if IoU(truth, anchor) > IoU_threshold

- GA: Genetic algorithms: using genetic algorithms to select the optimal hyperparameters during the first 10% of the network training time

- LS: Class label smoothing: using class label smoothing for the sigmoid activation

- CBN: CmBN: using Cross mini-Batch Normalization to collect statistics inside the entire batch, instead of inside a single mini-batch

- CA: Cosine annealing scheduler: altering the learning rate along a sinusoid during training

- DM: Dynamic mini-batch size: automatically increasing the mini-batch size for small resolutions during training with random training shapes

- OA: Optimized anchors: using optimized anchors for training with a 512 × 512 network resolution

- GIoU, CIoU, DIoU, MSE: using different loss algorithms for bounding box regression
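
Three of the listed features can be sketched in a few lines: grid-sensitivity elimination (S), multi-anchor assignment with an IoU threshold (IT), and cosine annealing (CA). The decoding b_x = s · σ(t_x) − (s − 1)/2 + c_x is one common way to apply a factor s > 1.0 and is stated here as an assumption. The threshold 0.213 is the GA-searched value quoted in the setup. The anchor shapes, the best-anchor fallback, and all function names are hypothetical.

```python
import math


def decode_x(t_x, c_x, scale=1.0):
    """scale = 1.0 gives YOLOv3's b_x = sigmoid(t_x) + c_x. scale > 1.0 stretches the
    sigmoid around the cell center so c_x and c_x + 1 are reachable with finite t_x."""
    return scale / (1.0 + math.exp(-t_x)) - (scale - 1.0) / 2.0 + c_x


def wh_iou(a, b):
    """IoU of two (w, h) shapes aligned at the same center, as used for anchor matching."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    return inter / (a[0] * a[1] + b[0] * b[1] - inter)


def assign_anchors(truth_wh, anchors, iou_threshold=0.213):
    """All anchors with IoU(truth, anchor) > threshold; if none pass, the single best
    anchor (this fallback is an assumption)."""
    ious = [wh_iou(truth_wh, a) for a in anchors]
    chosen = [i for i, v in enumerate(ious) if v > iou_threshold]
    return chosen or [max(range(len(anchors)), key=ious.__getitem__)]


def cosine_lr(step, total_steps, lr_max=0.01, lr_min=0.0):
    """Cosine annealing from lr_max at step 0 to lr_min at total_steps."""
    return lr_min + 0.5 * (lr_max - lr_min) * (1 + math.cos(math.pi * step / total_steps))


anchors = [(10, 13), (16, 30), (33, 23)]
print(assign_anchors((12, 14), anchors))       # [0, 1, 2]: all pass the 0.213 threshold
print(assign_anchors((12, 14), anchors, 0.5))  # [0]
```

With scale = 2.0, for example, b_x reaches the cell border c_x at t_x = ln(1/3) ≈ −1.1 instead of requiring t_x → −∞.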

A further study concerns the influence of different Bag-of-Specials (BoS-detector) on detector training accuracy, including PAN, RFB, SAM, Gaussian YOLO (G), and ASFF, as shown in Table 5. In our experiments, the detector achieves the best performance when using SPP, PAN, and SAM.

4.4 Influence of different backbones and pre-trained weightings on Detector training

As shown in Table 6, we further studied how different backbones affect detector accuracy. We note that the model architecture with the best classification accuracy does not always have the best detection accuracy.

First, although CSPResNeXt50 models trained with different features achieve higher classification accuracy than CSPDarknet53 models, the CSPDarknet53 model achieves higher accuracy in object detection.

Second, using BoF and Mish when training the CSPResNeXt50 classifier increases its classification accuracy, but applying these pre-trained weights to detector training reduces detector accuracy. In contrast, using BoF and Mish when training the CSPDarknet53 classifier increases the accuracy of both the classifier and the detector that uses the classifier's pre-trained weights. As a result, CSPDarknet53 is more suitable than CSPResNeXt50 as a detector backbone.

We observe that, owing to various improvements, the CSPDarknet53 model shows a greater ability to increase detector accuracy.

4.5 Influence of different mini-batch size on Detector training

Finally, we analyze the results of models trained with different mini-batch sizes; the results are shown in Table 7. From Table 7, we find that once the BoF and BoS training strategies are added, the mini-batch size has almost no effect on detector performance. This result shows that, with BoF and BoS, expensive GPUs are no longer necessary for training. In other words, anyone can train an excellent detector using only a conventional GPU.

5. Results

Figure 8 compares our model with other state-of-the-art detectors. YOLOv4 lies on the Pareto optimality curve and is superior to the fastest and most accurate detectors in terms of both speed and accuracy.
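"Lying on the Pareto optimality curve" means that no other detector is at least as fast and at least as accurate while being strictly better in one of the two. The sketch below computes that front for (FPS, AP) pairs; the sample points are made-up toy numbers for illustration, not results from Figure 8.

```python
def pareto_front(points):
    """Return the (fps, ap) points not dominated by any other point, sorted by fps.

    A point is dominated if another point is at least as good in both speed
    and accuracy and strictly better in at least one of them.
    """
    front = []
    for i, (f1, a1) in enumerate(points):
        dominated = any(
            f2 >= f1 and a2 >= a1 and (f2 > f1 or a2 > a1)
            for j, (f2, a2) in enumerate(points) if j != i
        )
        if not dominated:
            front.append((f1, a1))
    return sorted(front)

# Toy (FPS, AP) pairs, invented for illustration only.
toy = [(10, 40), (30, 35), (20, 30), (60, 25), (50, 20)]
front = pareto_front(toy)
```

Here (20, 30) is dominated by (30, 35) and (50, 20) by (60, 25), so the front is [(10, 40), (30, 35), (60, 25)].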

Because different methods use GPUs of different architectures to measure inference time, we ran YOLOv4 on commonly used GPUs of the Maxwell, Pascal, and Volta architectures and compared it with other state-of-the-art methods. Table 8 lists the frame-rate comparison on Maxwell GPUs, such as the GTX Titan X (Maxwell) or Tesla M40. Table 9 lists the frame-rate comparison on Pascal GPUs, such as the Titan X (Pascal), Titan Xp, GTX 1080 Ti, or Tesla P100. Table 10 lists the frame-rate comparison on Volta GPUs, such as the Titan Volta or Tesla V100.

6. Conclusions

We offer a state-of-the-art detector that is faster (FPS) and more accurate (MS COCO AP50...95 and AP50) than all available alternative detectors. The detector can be trained and used on a conventional GPU with 8-16 GB of VRAM, which makes its broad use possible. The original concept of one-stage anchor-based detectors has proven its viability. We have verified a large number of features and selected some of them to improve the accuracy of both the classifier and the detector. These features can serve as best practices for future research and development.

边栏推荐

- 小程序实现图片预加载(图片延迟加载)

- How to use leetcode

- [IVI] 15.1.2 system stability optimization (lmkd Ⅱ) psi pressure stall information

- Machine learning (Part 2)

- Construction and verification of mongodb sharding environment (redis final assignment)

- 20220623 getting started with Adobe Illustrator

- 关于小程序tabbar不支持传参的处理办法

- Games104 Lecture 12 游戏引擎中的粒子和声效系统

- Polka lines code recurrence

- Nebula diagram_ Object detection and measurement_ nanyangjx

猜你喜欢

Simulation of parallel structure using webots

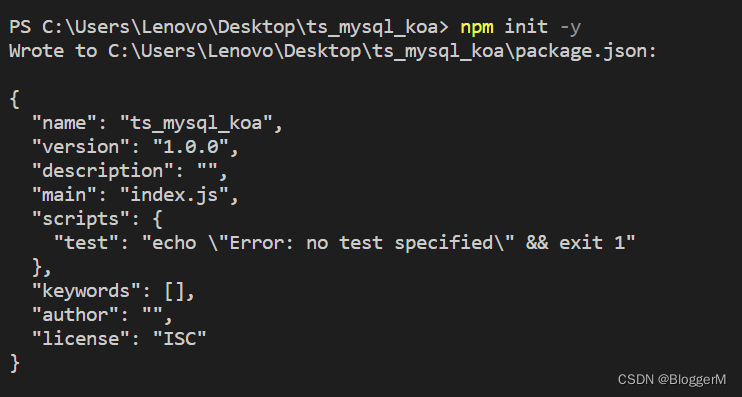

Koa_ mySQL_ Integration of TS

uniapp用uParse实现解析后台的富文本编辑器的内容及修改uParse样式

phpcms小程序插件api接口升级到4.3(新增批量获取接口、搜索接口等)

phpcms v9去掉phpsso模块

Fast construction of neural network

修复小程序富文本组件不支持video视频封面、autoplay、controls等属性问题

![[Matlab GUI] key ID lookup table in keyboard callback](/img/b6/8f62ff4ffe09a5320493cb5d834ff5.png)

[Matlab GUI] key ID lookup table in keyboard callback

Uniapp uses uparse to parse the content of the background rich text editor and modify the uparse style

力扣399【除法求值】【并查集】

随机推荐

1.21 study logistic regression and regularization

【IVI】15.1.2 系统稳定性优化篇(LMKD Ⅱ)PSI 压力失速信息

Implementation code of interceptor and filter

【云原生 | Kubernetes篇】深入万物基础-容器(五)

isinstance()函数用法

In automated testing, there are three commonly used waiting methods: sleep, implicitly\wait, and expected\u conditions

Autoregressive model of Lantern Festival

Object extraction_ nanyangjx

SRv6----IS-IS扩展

Backward usage

Whale conference provides digital upgrade scheme for the event site

Fourier transform of image

Install Anaconda + NVIDIA graphics card driver + pytorch under win10_ gpu

phpcms小程序插件教程网站正式上线

Koa_ mySQL_ Integration of TS

唯品会工作实践 : Json的deserialization应用

Convex optimization of quadruped

phpcms v9商城模块(修复自带支付宝接口bug)

Games104 Lecture 12 游戏引擎中的粒子和声效系统

Corn image segmentation count_ nanyangjx