
Transformers DataCollatorWithPadding class

2022-06-26 14:32:00 【Live up to your youth】

Constructor

```python
DataCollatorWithPadding(tokenizer: PreTrainedTokenizerBase,
                        padding: typing.Union[bool, str, transformers.utils.generic.PaddingStrategy] = True,
                        max_length: typing.Optional[int] = None,
                        pad_to_multiple_of: typing.Optional[int] = None,
                        return_tensors: str = 'pt')
```

In transformers, a data collator packs individual examples from a dataset into a batch. DataCollatorWithPadding is one such collator: while it builds each batch, it dynamically pads the inputs so that every sequence in the batch ends up with a common length.
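To make "dynamic padding" concrete, here is a minimal pure-Python sketch of what a padding collator does to one batch. The function name `pad_batch` and the token ids are made up for illustration, and the pad id `0` is an assumption (it is the `[PAD]` id of bert-base-uncased). This is not the transformers implementation, which delegates to the tokenizer's `pad` method and returns tensors rather than lists.

```python
from typing import Dict, List


def pad_batch(features: List[Dict[str, List[int]]],
              pad_token_id: int = 0) -> Dict[str, List[List[int]]]:
    """Hypothetical helper: right-pad every 'input_ids' list to the longest one in the batch."""
    # The target length is chosen per batch, not once for the whole dataset.
    target = max(len(f["input_ids"]) for f in features)
    input_ids, attention_mask = [], []
    for f in features:
        ids = f["input_ids"]
        n_pad = target - len(ids)
        # Pad tokens go on the right; the mask marks real tokens with 1 and padding with 0.
        input_ids.append(ids + [pad_token_id] * n_pad)
        attention_mask.append([1] * len(ids) + [0] * n_pad)
    return {"input_ids": input_ids, "attention_mask": attention_mask}


batch = pad_batch([{"input_ids": [101, 7592, 102]}, {"input_ids": [101, 102]}])
print(batch["input_ids"])       # [[101, 7592, 102], [101, 102, 0]]
print(batch["attention_mask"])  # [[1, 1, 1], [1, 1, 0]]
```

Because the length is decided per batch, a batch of short sentences stays short instead of being padded to the longest sentence in the entire dataset.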

Parameters:

- tokenizer: the tokenizer used to encode the data; the collator relies on its padding logic to pad each batch.
- padding: either a bool or a string naming a padding strategy. True pads and False does not. As a string, "longest" pads to the longest sequence in the batch (the same as True), "max_length" pads to the length set by the max_length parameter, and "do_not_pad" disables padding (the same as False).
- max_length: the target length used with the "max_length" strategy.
- pad_to_multiple_of: if set, the padded length is rounded up to a multiple of this value.
- return_tensors: the type of the returned data: "pt" for PyTorch tensors, "tf" for TensorFlow tensors, or "np" for NumPy arrays.
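The following sketch shows how the padding strategies and pad_to_multiple_of combine to decide the padded length of a batch. `target_length` is a hypothetical helper, not part of transformers. For simplicity it requires an explicit max_length for the "max_length" strategy and ignores the fact that padding never shortens a sequence that is already longer than the target.

```python
import math
from typing import List, Optional, Union


def target_length(lengths: List[int],
                  padding: Union[bool, str] = True,
                  max_length: Optional[int] = None,
                  pad_to_multiple_of: Optional[int] = None) -> Optional[int]:
    """Hypothetical helper: padded length for a batch, or None if no padding is applied."""
    # Map the boolean shortcuts onto the named strategies.
    if padding is True:
        padding = "longest"
    elif padding is False:
        padding = "do_not_pad"

    if padding == "do_not_pad":
        return None
    if padding == "longest":
        length = max(lengths)
    elif padding == "max_length":
        if max_length is None:
            raise ValueError("this sketch needs max_length for padding='max_length'")
        length = max_length
    else:
        raise ValueError(f"unknown padding strategy: {padding!r}")

    # Round up to the next multiple, e.g. 9 -> 16 when pad_to_multiple_of=8.
    if pad_to_multiple_of is not None:
        length = math.ceil(length / pad_to_multiple_of) * pad_to_multiple_of
    return length


lengths = [5, 9, 7]
print(target_length(lengths))                                  # 9
print(target_length(lengths, "longest", pad_to_multiple_of=8))  # 16
print(target_length(lengths, "max_length", max_length=12))      # 12
print(target_length(lengths, False))                           # None
```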

Usage example

```python
>>> import transformers
>>> import datasets
>>> # The tokenizer must exist before dataset.map uses it
>>> tokenizer = transformers.BertTokenizer.from_pretrained("bert-base-uncased")
>>> dataset = datasets.load_dataset("glue", "cola", split="train")
>>> # No padding here: DataCollatorWithPadding pads each batch when it is built
>>> dataset = dataset.map(lambda data: tokenizer(data["sentence"], truncation=True, max_length=12), batched=True)
>>> dataset
Dataset({
    features: ['sentence', 'label', 'idx', 'input_ids', 'token_type_ids', 'attention_mask'],
    num_rows: 8551
})
>>> data_collator = transformers.DataCollatorWithPadding(tokenizer,
...                                                      padding="max_length",
...                                                      max_length=12,
...                                                      return_tensors="tf")
>>> dataset = dataset.to_tf_dataset(columns=["label", "input_ids"], batch_size=16, shuffle=False, collate_fn=data_collator)
>>> dataset
<PrefetchDataset element_spec={'input_ids': TensorSpec(shape=(None, None), dtype=tf.int64, name=None), 'attention_mask': TensorSpec(shape=(None, None), dtype=tf.int64, name=None), 'labels': TensorSpec(shape=(None,), dtype=tf.int64, name=None)}>
```
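In the output above, the batches contain 'attention_mask' and 'labels' even though only "label" and "input_ids" were selected: padding produces an attention mask, and DataCollatorWithPadding renames a "label" key to "labels", the name transformers models accept for computing the loss. The sketch below imitates that behaviour with padding="max_length" and max_length=12 in plain Python. The function `collate_max_length` and the token ids are hypothetical, the pad id `0` is assumed, and no tensors are created. It also shows that padding never truncates, which is why the example above truncates to 12 tokens during tokenization.

```python
from typing import Any, Dict, List


def collate_max_length(features: List[Dict[str, Any]], max_length: int = 12,
                       pad_token_id: int = 0) -> Dict[str, Any]:
    """Hypothetical helper: pad each example to max_length and rename 'label' to 'labels'."""
    batch: Dict[str, Any] = {"input_ids": [], "attention_mask": []}
    for f in features:
        ids = f["input_ids"]
        # Padding only adds tokens; an example longer than max_length keeps its length.
        n_pad = max(max_length - len(ids), 0)
        batch["input_ids"].append(ids + [pad_token_id] * n_pad)
        batch["attention_mask"].append([1] * len(ids) + [0] * n_pad)
    # Move the 'label' column to 'labels', as DataCollatorWithPadding does.
    if "label" in features[0]:
        batch["labels"] = [f["label"] for f in features]
    return batch


batch = collate_max_length([{"label": 1, "input_ids": [101, 2023, 102]},
                            {"label": 0, "input_ids": [101, 102]}])
print(sorted(batch))                          # ['attention_mask', 'input_ids', 'labels']
print([len(x) for x in batch["input_ids"]])   # [12, 12]
```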

边栏推荐

- Sword finger offer 06.24.35 Linked list

- Bucket of P (segment tree + linear basis)

- vmware部分设置

- Extended hooks

- Experience sharing of mathematical modeling: comparison between China and USA / reference for topic selection / common skills

- 数学建模经验分享:国赛美赛对比/选题参考/常用技巧

- How to convert data in cell cell into data in matrix

- idea快捷键

- ArcGIS batch export layer script

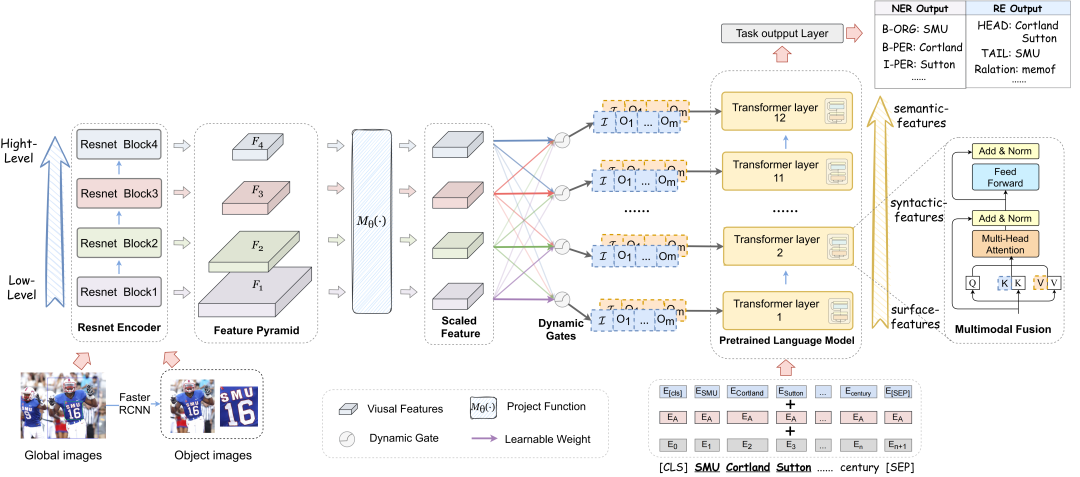

- NAACL2022:(代码实践)好的视觉引导促进更好的特征提取,多模态命名实体识别(附源代码下载)...

猜你喜欢

GDAL multiband synthesis tool

9 articles, 6 interdits! Le Ministère de l'éducation et le Ministère de la gestion des urgences publient et publient conjointement neuf règlements sur la gestion de la sécurité incendie dans les établ

NAACL2022:(代码实践)好的视觉引导促进更好的特征提取,多模态命名实体识别(附源代码下载)...

这才是优美的文件系统挂载方式,亲测有效

Sword finger offer 45.61 Sort (simple)

永远不要使用Redis过期监听实现定时任务!

备战数学建模30-回归分析2

Codeforces Global Round 21A~D

备战数学建模32-相关性分析2

使用宝塔面板部署flask环境

随机推荐

Freefilesync folder comparison and synchronization software

【async/await】--异步编程最终解决方案

How to call self written functions in MATLAB

Correlation analysis related knowledge

Extended hooks

MHA高可用配合及故障切换

One article of the quantification framework backtrader read observer

Linear basis

ThreadLocal giant pit! Memory leaks are just Pediatrics

Introduction to basic knowledge of C language (Daquan) [suggestions collection]

Error when redis is started: could not create server TCP listening socket *: 6379: bind: address already in use - solution

Relevant knowledge of information entropy

2022年最新贵州建筑八大员(机械员)模拟考试题库及答案

Codeforces Global Round 21A~D

服务器创建虚拟环境跑代码

C language | file operation and error prone points

[wc2006] director of water management

Server create virtual environment run code

Sword finger offer 06.24.35 Linked list

Difference between classification and regression