
In-Depth Analysis of MobileNet and Its Variants

2022-06-23 13:39:00 【Xiaobai learns vision】


I have been reading about lightweight networks recently and found this summary very good, so I translated it. It covers the main variants and its diagrams are very clear. I hope it gives you some inspiration!

Introduction

In this article, I outline the building blocks used in efficient CNN models (such as MobileNet and its variants) and explain why they are so efficient. In particular, I give a visual explanation of how convolution is performed in the spatial and channel domains.

Components used in efficient models

Before looking at specific efficient CNN models, let us review the computational cost of the building blocks they use and see how convolution is performed in the spatial and channel domains.

Let H × W be the spatial size of the output feature map, N the number of input channels, K × K the size of the convolution kernel, and M the number of output channels. Then the computational cost of standard convolution is HWNK²M.

The key point is that the cost of standard convolution is proportional to (1) the spatial size H × W of the output feature map, (2) the kernel size K², and (3) the number of input and output channels, N × M.

This cost arises because standard convolution is performed jointly in the spatial domain and the channel domain. CNNs can be sped up by factorizing this convolution, as shown in the figure below.

Convolution

First, I give an intuitive explanation of how standard convolution, with its cost of HWNK²M, operates in the spatial and channel domains.

I draw lines between the input and the output to visualize the dependencies between them. The number of lines roughly indicates the computational cost of the convolution in the spatial and channel domains.

For example, conv3x3, the most common convolution, can be visualized as in the figure above. We can see that the input and output are locally connected in the spatial domain and fully connected in the channel domain.
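To make the HWNK²M count concrete, here is a minimal pure-Python sketch of a direct convolution. It rests on my own assumptions: channels-first nested lists, stride 1, no padding, no bias, and a hypothetical helper name, `conv2d_naive`. It is an illustration, not how frameworks actually implement convolution. The loop nest mirrors the picture: each output value reads every input channel but only a K × K spatial window.

```python
def conv2d_naive(x, weight):
    """Direct 'valid' convolution (cross-correlation, as in CNN layers).

    x:      input of shape [N][H_in][W_in]   (channels first)
    weight: kernels of shape [M][N][K][K]
    Returns (output of shape [M][H][W], number of multiply-accumulates).
    """
    n = len(x)
    h_in, w_in = len(x[0]), len(x[0][0])
    m, k = len(weight), len(weight[0][0])
    h, w = h_in - k + 1, w_in - k + 1           # output spatial size
    out = [[[0.0] * w for _ in range(h)] for _ in range(m)]
    macs = 0
    for o in range(m):
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for c in range(n):              # all input channels: full in the channel domain
                    for di in range(k):         # only a K x K window: local in space
                        for dj in range(k):
                            acc += weight[o][c][di][dj] * x[c][i + di][j + dj]
                            macs += 1
                out[o][i][j] = acc
    return out, macs


# N=2 input channels of size 6x6, M=4 output channels, K=3 -> output is 4x4
x = [[[1.0] * 6 for _ in range(6)] for _ in range(2)]
weight = [[[[1.0] * 3 for _ in range(3)] for _ in range(2)] for _ in range(4)]
out, macs = conv2d_naive(x, weight)
print(macs, 4 * 4 * 2 * 3 * 3 * 4)  # 1152 1152, i.e. HWNK^2M
```

Every factor of HWNK²M appears as one loop, which is why the cost scales linearly in each of them.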

Next, conv1x1 (also called pointwise convolution), shown above, is used to change the number of channels. Because the kernel size is 1×1, its computational cost is HWNM, only 1/9 of the cost of a conv3x3 with the same channels. This convolution is used to "mix" information across channels.
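As a sketch of why conv1x1 only mixes channels, the hypothetical helper below (same channels-first nested-list assumption as before) combines, at each pixel, the N input values into M outputs. Its MAC count is HWNM.

```python
def conv1x1(x, weight):
    """Pointwise convolution on channels-first nested lists.

    x: [N][H][W], weight: [M][N] (one weight per output/input channel pair).
    Returns (output [M][H][W], number of multiply-accumulates).
    """
    n, h, w = len(x), len(x[0]), len(x[0][0])
    m = len(weight)
    out = [[[0.0] * w for _ in range(h)] for _ in range(m)]
    macs = 0
    for o in range(m):
        for i in range(h):
            for j in range(w):
                # Only the N values at the same pixel are combined: no spatial window.
                out[o][i][j] = sum(weight[o][c] * x[c][i][j] for c in range(n))
                macs += n
    return out, macs


# N=3 channels holding the constants 1, 2, 3 on a 4x4 map, mixed into M=2 channels.
x = [[[float(c + 1)] * 4 for _ in range(4)] for c in range(3)]
weight = [[1, 1, 1],    # output 0: sum of the three channels
          [1, -1, 0]]   # output 1: channel 0 minus channel 1
out, macs = conv1x1(x, weight)
print(out[0][0][0], out[1][0][0], macs)  # 6.0 -1.0 96
```

Here 96 = 4 · 4 · 3 · 2 = HWNM. A conv3x3 with the same channels would need nine times as many MACs.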

Grouped Convolution

Grouped convolution is a variant of convolution in which the channels of the input feature map are divided into groups and each group is convolved independently.

Let G be the number of groups. The computational cost of grouped convolution is HWNK²M/G, i.e. 1/G of the cost of standard convolution.

For conv3x3 with G=2, we can see that there are fewer connections in the channel domain than in standard convolution, which means a lower computational cost.

For conv3x3 with G=3, the connections become even sparser.

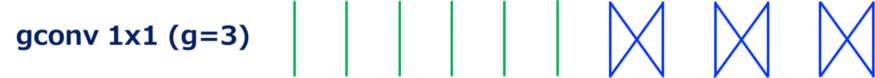

conv1x1 can also be grouped; this is the case of conv1x1 with G=2. This type of convolution is used in ShuffleNet.

The case of conv1x1 with G=3.
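The "number of lines" picture can be reproduced in a few lines of Python. The sketch below makes two assumptions. First, it uses contiguous channel groups. Second, `grouped_connections` and `grouped_conv_macs` are hypothetical names of my own. The code lists which input channels each output channel is connected to and counts the MACs for a given G.

```python
def grouped_connections(n, m, g):
    """Input channels each output channel is connected to in a grouped convolution.

    Channels are split into g contiguous groups; an output channel only sees
    the N/G input channels of its own group.
    """
    if n % g or m % g:
        raise ValueError("N and M must be divisible by G")
    n_per, m_per = n // g, m // g
    return [list(range((o // m_per) * n_per, (o // m_per + 1) * n_per))
            for o in range(m)]


def grouped_conv_macs(h, w, n, m, k, g):
    # Each of the M outputs sums over N/G inputs with K*K taps at H*W positions.
    return h * w * (n // g) * k * k * m   # = HWNK^2M / G


for g in (1, 2, 3):
    lines = sum(len(c) for c in grouped_connections(6, 6, g))
    print(g, lines, grouped_conv_macs(8, 8, 6, 6, 3, g))
# prints: 1 36 20736 / 2 18 10368 / 3 12 6912
```

Both the number of channel-domain connections and the MAC count shrink by exactly a factor of G. Setting k=1 gives the grouped conv1x1 case.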

Depthwise Convolution

In depthwise convolution, each input channel is convolved separately. It can also be defined as a special case of grouped convolution in which the numbers of input and output channels are equal and G equals the number of channels.

As shown above, depthwise convolution greatly reduces the computational cost, to HWNK², by omitting convolution in the channel domain.
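A minimal sketch of depthwise convolution follows. It makes the same assumptions as before: channels-first nested lists, stride 1, no padding, and a hypothetical helper name. Channel c is filtered only by its own K × K kernel, so there is no sum over channels. This is exactly grouped convolution with G = N = M.

```python
def depthwise_conv_naive(x, weight):
    """Depthwise 'valid' convolution: channel c is filtered only by kernel c.

    x: [N][H_in][W_in], weight: [N][K][K]  (one K x K kernel per channel)
    Returns ([N][H][W], MAC count). Equivalent to grouped conv with G = N = M.
    """
    n, h_in, w_in = len(x), len(x[0]), len(x[0][0])
    k = len(weight[0])
    h, w = h_in - k + 1, w_in - k + 1
    out = [[[0.0] * w for _ in range(h)] for _ in range(n)]
    macs = 0
    for c in range(n):                      # no loop over other channels: nothing is mixed
        for i in range(h):
            for j in range(w):
                out[c][i][j] = sum(weight[c][di][dj] * x[c][i + di][j + dj]
                                   for di in range(k) for dj in range(k))
                macs += k * k
    return out, macs


x = [[[float(c + 1)] * 5 for _ in range(5)] for c in range(3)]   # N=3, 5x5 input
weight = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]        # N kernels of 3x3
out, macs = depthwise_conv_naive(x, weight)
print(out[2][0][0], macs)  # 27.0 243  (243 = HWNK^2 with H=W=3, N=3, K=3)
```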

Channel Shuffle

Channel shuffle is an operation (layer) that changes the order of the channels; it is used in ShuffleNet. It is implemented with tensor reshape and transpose operations.

More precisely, let GN′ (= N) denote the number of input channels. The input channel dimension is first reshaped into (G, N′), then (G, N′) is transposed into (N′, G), and finally the result is flattened back to the same shape as the input. Here G is the number of groups of the grouped convolution, which ShuffleNet uses together with the channel shuffle layer.
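The reshape → transpose → flatten steps can be sketched on a plain Python list of channels. Here the channels are represented by labels, and `channel_shuffle` is a hypothetical helper name. A tensor library would apply the same index permutation to the channel axis.

```python
def channel_shuffle(channels, g):
    """Reorder a list of channels as described above.

    channels: list of length G*N' (channel labels or feature maps)
    1) reshape to (G, N'), 2) transpose to (N', G), 3) flatten.
    """
    if len(channels) % g:
        raise ValueError("number of channels must be divisible by G")
    n_prime = len(channels) // g
    grid = [channels[r * n_prime:(r + 1) * n_prime] for r in range(g)]           # (G, N')
    transposed = [[grid[r][col] for r in range(g)] for col in range(n_prime)]    # (N', G)
    return [ch for row in transposed for ch in row]                              # flatten


print(channel_shuffle(list(range(6)), 2))  # [0, 3, 1, 4, 2, 5]
print(channel_shuffle(list(range(6)), 3))  # [0, 2, 4, 1, 3, 5]
```

After the shuffle, consecutive channels come from different groups. The next grouped convolution therefore sees channels from every group.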

Although the cost of channel shuffle cannot be expressed as a number of multiply-add operations (MACs), it should still incur some overhead.

Channel shuffle with G=2. No convolution is performed; only the order of the channels changes.

Channel shuffle with G=3.

Efficient Models

Below, for each efficient CNN model, I explain intuitively why it is efficient and how convolution is performed in the spatial and channel domains.

ResNet (Bottleneck Version)

ResNet, whose residual units use the bottleneck architecture, is a good starting point for comparison with the other models.

As shown above, a residual unit with the bottleneck architecture consists of conv1x1, conv3x3, and conv1x1. The first conv1x1 reduces the number of input channels, which lowers the cost of the subsequent conv3x3. The final conv1x1 restores the number of output channels.
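A quick cost count shows why the first conv1x1 pays off. The sketch below is per output position (H = W = 1) and uses hypothetical helper names. The 256 → 64 reduction is an illustrative choice (it matches the first stage of ResNet-50), and any values work.

```python
def conv_macs(h, w, n_in, n_out, k):
    # Standard convolution cost HWNK^2M for an h x w output.
    return h * w * n_in * k * k * n_out


def bottleneck_macs(h, w, n, reduced, k=3):
    """conv1x1 (n -> reduced), conv3x3 (reduced -> reduced), conv1x1 (reduced -> n)."""
    return (conv_macs(h, w, n, reduced, 1)
            + conv_macs(h, w, reduced, reduced, k)
            + conv_macs(h, w, reduced, n, 1))


print(bottleneck_macs(1, 1, 256, 64))   # 69632
print(conv_macs(1, 1, 256, 256, 3))     # 589824: a single conv3x3 at full width
```

The whole bottleneck unit costs less than an eighth of a single full-width conv3x3.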

ResNeXt

ResNeXt is an efficient CNN model that can be seen as a special case of ResNet in which conv3x3 is replaced by grouped conv3x3. Because grouped convolution is cheap, the channel reduction rate of conv1x1 can be more moderate than in ResNet, which yields better accuracy at the same computational cost.
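The trade-off can be checked numerically with a sketch like the one below. It counts cost per output position with hypothetical helper names. The widths are illustrative assumptions. As I recall them, they match the first stages of ResNet-50 (reduction to 64 channels) and ResNeXt-50 32×4d (reduction to 128 channels with 32 groups). Halving the reduction, from 1/4 to 1/2, costs almost nothing extra once the conv3x3 is grouped.

```python
def conv_macs(h, w, n_in, n_out, k, g=1):
    # Grouped convolution cost HWNK^2M/G; g=1 is standard convolution.
    return h * w * (n_in // g) * k * k * n_out


def bottleneck_macs(n, width, g=1, k=3):
    """Per-position cost (h = w = 1) of conv1x1 -> (grouped) conv3x3 -> conv1x1."""
    return (conv_macs(1, 1, n, width, 1)
            + conv_macs(1, 1, width, width, k, g)
            + conv_macs(1, 1, width, n, 1))


resnet = bottleneck_macs(256, 64)            # 256 -> 64: reduction rate 1/4
resnext = bottleneck_macs(256, 128, g=32)    # 256 -> 128: reduction rate 1/2
print(resnet, resnext)  # 69632 70144
```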

MobileNet (Separable Conv)

MobileNet is a stack of separable convolution modules, each consisting of a depthwise conv and a conv1x1 (pointwise conv).

Separable convolution performs convolution independently in the spatial domain and the channel domain. This factorization significantly reduces the computational cost, from HWNK²M to HWNK² (depthwise) + HWNM (conv1x1) = HWN(K² + M). The cost ratio is therefore (K² + M)/(K²M) = 1/M + 1/K². In general M ≫ K² (e.g. K = 3 and M ≥ 32), so the ratio is dominated by 1/K². For typical channel counts it is roughly 1/8 to 1/9 (about 1/7 at M = 32), approaching 1/9 as M grows.
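The sketch below evaluates the two formulas above for a few channel counts with N = M and K = 3; these are my own illustrative choices, and the helper names are hypothetical. It also prints how much of the remaining cost falls on conv1x1, which anticipates the next point.

```python
def standard_macs(h, w, n, m, k):
    return h * w * n * k * k * m             # HWNK^2M


def separable_macs(h, w, n, m, k):
    depthwise = h * w * n * k * k            # HWNK^2: one K x K filter per channel
    pointwise = h * w * n * m                # HWNM: conv1x1 across channels
    return depthwise + pointwise             # HWN(K^2 + M)


for m in (32, 64, 256, 1024):
    n, k = m, 3
    total = separable_macs(1, 1, n, m, k)
    reduction = standard_macs(1, 1, n, m, k) / total
    pointwise_share = (n * m) / total
    print(f"M={m}: {reduction:.2f}x fewer MACs, conv1x1 share {pointwise_share:.0%}")
```

For K = 3 the reduction factor is 9M/(9 + M). That is about 7.0 at M = 32 and about 8.7 at M = 256. Of the remaining cost, a fraction M/(9 + M) belongs to conv1x1, which exceeds 90% once M reaches 128.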

The important point here is that the computational bottleneck is now conv1x1!

ShuffleNet

The motivation for ShuffleNet is the point made above: conv1x1 is the bottleneck of separable convolution. Although conv1x1 is already efficient and there seems to be no room for improvement, grouped conv1x1 can be used for this purpose!

The figure above illustrates the ShuffleNet module. Its important building block is the channel shuffle layer, which "shuffles" the order of channels between the groups of the grouped convolutions. Without channel shuffle, the outputs of a grouped convolution are never shared across groups, which degrades accuracy.
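Grouped conv1x1 cuts the pointwise cost from HWNM to HWNM/G. The sketch below checks the other half of the argument: information flow across groups. It tracks which original input channels each channel depends on through two grouped conv1x1 layers, with and without a channel shuffle between them. The helper names are hypothetical, and the sketch keeps the channel count fixed. It only models connectivity, not the full ShuffleNet unit.

```python
def grouped_conv1x1_deps(deps, g):
    """Propagate dependency sets through a grouped conv1x1 with g groups.

    deps[c] is the set of original input channels that channel c depends on.
    The channel count is kept fixed; output channel c mixes every channel of
    its own group and nothing else.
    """
    size = len(deps) // g
    out = []
    for c in range(len(deps)):
        grp = c // size
        merged = set()
        for src in range(grp * size, (grp + 1) * size):
            merged |= deps[src]
        out.append(merged)
    return out


def channel_shuffle(x, g):
    # reshape to (G, N'), transpose to (N', G), flatten
    n_prime = len(x) // g
    return [x[r * n_prime + col] for col in range(n_prime) for r in range(g)]


n, g = 6, 2
start = [{c} for c in range(n)]
layer1 = grouped_conv1x1_deps(start, g)
no_shuffle = grouped_conv1x1_deps(layer1, g)
with_shuffle = grouped_conv1x1_deps(channel_shuffle(layer1, g), g)
print(sorted(no_shuffle[0]))    # [0, 1, 2]
print(sorted(with_shuffle[0]))  # [0, 1, 2, 3, 4, 5]
```

Without the shuffle, output channel 0 only ever sees its own group's inputs. With it, every output channel depends on all six inputs after two layers.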

MobileNet-v2

MobileNet-v2 uses a module architecture similar to the ResNet residual unit with the bottleneck architecture. It is a modified version of that residual unit in which conv3x3 is replaced by depthwise convolution.

As you can see above, in contrast to the standard bottleneck architecture, the first conv1x1 increases the channel dimension, then depthwise conv is performed, and the last conv1x1 decreases the channel dimension.

By reordering the building blocks above and comparing the result with MobileNet-v1 (separable conv), we can see how this architecture works. The reordering does not change the overall model architecture, because MobileNet-v2 is a stack of these modules.

In other words, the module above can be regarded as a modified version of separable convolution in which the single conv1x1 of separable convolution is factorized into two conv1x1s. Letting T denote the expansion factor of the channel dimension, the cost of the two conv1x1s is 2HWN²/T, whereas the cost of the conv1x1 in separable convolution is HWN². In [5], T = 6 is used, which reduces the cost of conv1x1 by a factor of 3 (T/2 in general).
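The factor T/2 follows directly from the two formulas. The sketch below works per output position with hypothetical helper names. N = 192 is an illustrative value chosen so that N is divisible by T = 6.

```python
def v1_pointwise_macs(h, w, n):
    # Separable conv (MobileNet-v1 view): one conv1x1 from N to N channels, HWN^2.
    return h * w * n * n


def v2_pointwise_macs(h, w, n, t):
    # Reordered MobileNet-v2 view: conv1x1 N -> N/T, then conv1x1 N/T -> N.
    reduced = n // t
    return h * w * n * reduced + h * w * reduced * n   # 2HWN^2 / T


n, t = 192, 6          # illustrative: 192 = 32 * 6
print(v1_pointwise_macs(1, 1, n) / v2_pointwise_macs(1, 1, n, t))  # 3.0, i.e. T/2
```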

FD-MobileNet

Finally, I introduce Fast-Downsampling MobileNet (FD-MobileNet) [10]. In this model, downsampling is performed in earlier layers than in MobileNet. This simple trick reduces the total computational cost. The reason lies in the conventional downsampling strategy and the cost structure of separable convolution.

Since VGGNet, many models have used the same downsampling strategy: perform downsampling, then double the number of channels in the subsequent layers. For standard convolution, the cost is unchanged after downsampling, because by definition it is HWNK²M and (H/2)(W/2)(2N)K²(2M) = HWNK²M. For separable convolution, the cost decreases after downsampling: it goes from HWN(K² + M) to (H/2)(W/2)(2N)(K² + 2M) = HWN(K²/2 + M). Since only the K² term is halved, the saving is relatively large when M is not large (i.e. in earlier layers).
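The sketch below applies one VGG-style downsampling step (halve H and W, double N and M) to both cost formulas and prints the after/before ratio. The helper names and the two layer sizes are my own illustrative assumptions: an early layer at 112×112 with 32 channels and a late layer at 14×14 with 512 channels.

```python
def standard_macs(h, w, n, m, k):
    return h * w * n * k * k * m          # HWNK^2M


def separable_macs(h, w, n, m, k):
    return h * w * n * (k * k + m)        # HWN(K^2 + M)


def downsampled(cost_fn, h, w, n, m, k):
    # VGG-style step: halve H and W, double the input and output channels.
    return cost_fn(h // 2, w // 2, 2 * n, 2 * m, k)


for h, c in ((112, 32), (14, 512)):        # illustrative early vs late layer
    for name, fn in (("standard", standard_macs), ("separable", separable_macs)):
        before = fn(h, h, c, c, 3)
        after = downsampled(fn, h, h, c, c, 3)
        print(f"{name:9s} H=W={h:3d} N=M={c:3d}: after/before = {after / before:.3f}")
```

For standard convolution the ratio is exactly 1 in both layers. For separable convolution it is about 0.89 in the early layer, an 11% saving, but about 0.99 in the late layer. This is why moving the downsampling earlier pays off.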

The following figure summarizes the whole article.

The good news !

Xiaobai learns visual knowledge about the planet

Open to the outside world

download 1:OpenCV-Contrib Chinese version of extension module

stay 「 Xiaobai studies vision 」 Official account back office reply : Extension module Chinese course , You can download the first copy of the whole network OpenCV Extension module tutorial Chinese version , Cover expansion module installation 、SFM Algorithm 、 Stereo vision 、 Target tracking 、 Biological vision 、 Super resolution processing and other more than 20 chapters .

download 2:Python Visual combat project 52 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :Python Visual combat project , You can download, including image segmentation 、 Mask detection 、 Lane line detection 、 Vehicle count 、 Add Eyeliner 、 License plate recognition 、 Character recognition 、 Emotional tests 、 Text content extraction 、 Face recognition, etc 31 A visual combat project , Help fast school computer vision .

download 3:OpenCV Actual project 20 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :OpenCV Actual project 20 speak , You can download the 20 Based on OpenCV Realization 20 A real project , Realization OpenCV Learn advanced .

Communication group

Welcome to join the official account reader group to communicate with your colleagues , There are SLAM、 3 d visual 、 sensor 、 Autopilot 、 Computational photography 、 testing 、 Division 、 distinguish 、 Medical imaging 、GAN、 Wechat groups such as algorithm competition ( It will be subdivided gradually in the future ), Please scan the following micro signal clustering , remarks :” nickname + School / company + Research direction “, for example :” Zhang San + Shanghai Jiaotong University + Vision SLAM“. Please note... According to the format , Otherwise, it will not pass . After successful addition, they will be invited to relevant wechat groups according to the research direction . Please do not send ads in the group , Or you'll be invited out , Thanks for your understanding ~边栏推荐

- Learning experiences on how to design reusable software from three aspects: class, API and framework

- The two 985 universities share the same president! School: true

- How did Tencent's technology bulls complete the overall cloud launch?

- 爱思唯尔-Elsevier期刊的校稿流程记录(Proofs)(海王星Neptune)(遇到问题:latex去掉章节序号)

- Has aaig really awakened its AI personality after reading the global June issue (Part 1)? Which segment of NLP has the most social value? Get new ideas and inspiration ~

- 快速了解常用的非对称加密算法,再也不用担心面试官的刨根问底

- 20000 words + 30 pictures | MySQL log: what is the use of undo log, redo log and binlog?

- One way linked list implementation -- counting

- AI 参考套件

- 【深入理解TcaplusDB技术】单据受理之事务执行

猜你喜欢

Go寫文件的權限 WriteFile(filename, data, 0644)?

腾讯的技术牛人们,是如何完成全面上云这件事儿的?

Quartus call & design D trigger Simulation & timing wave verification

Broadcast level E1 to aes-ebu audio codec E1 to stereo audio XLR codec

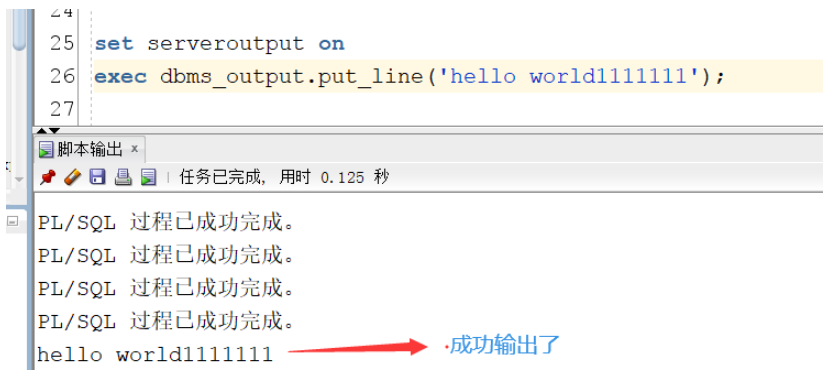

Oracle中dbms_output.put_line怎么使用

Getting started with reverse debugging - learn about PE structure files

Go write file permission WriteFile (filename, data, 0644)?

PHP handwriting a perfect daemon

使用OpenVINOTM预处理API进一步提升YOLOv5推理性能

2022软科大学专业排名出炉!西电AI专业排名超清北,南大蝉联全国第一 !

随机推荐

服务稳定性治理

kubernetes日志监控系统架构详解

How to enable the SMS function of alicloud for crmeb knowledge payment

Go write file permission WriteFile (filename, data, 0644)?

构建英特尔 DevCloud

windows 安装 MySQL

实战监听Eureka client的缓存更新

Strengthen the sense of responsibility and bottom line thinking to build a "safety dike" for flood fighting and rescue

How about stock online account opening and account opening process? Is it safe to open a mobile account?

What is the principle of live CDN in the process of building the source code of live streaming apps with goods?

#云原生征文#深入了解Ingress

You call this shit MQ?

Quarkus+saas multi tenant dynamic data source switching is simple and perfect

Yyds dry inventory solution sword finger offer: judge whether it is a balanced binary tree

Go write permissions to file writefile (FileName, data, 0644)?

Filtre de texte en ligne inférieur à l'outil de longueur spécifiée

2022软科大学专业排名出炉!西电AI专业排名超清北,南大蝉联全国第一 !

ExpressionChangedAfterItHasBeenCheckedError: Expression has changed after it was checked.

POW共识机制

边缘和物联网学术资源