当前位置:网站首页>Record the first data preprocessing process

Record the first data preprocessing process

2022-06-11 06:07:00 【Tcoder-l3est】

Record the first data preprocessing process

List of articles

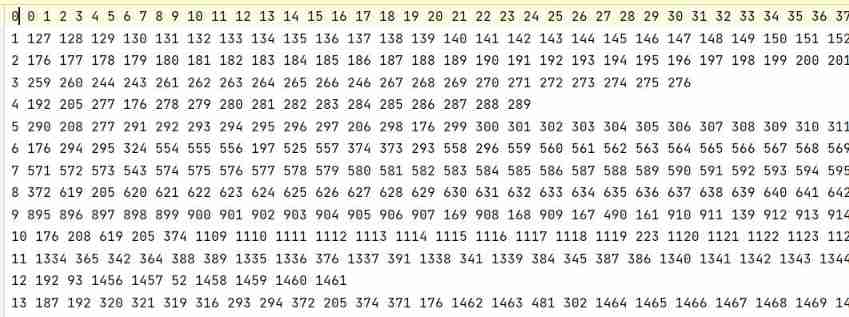

Data description

item_idx and user_idx The product and user name correspond to each other id.train_pair.json It's some binary [u,i] A list of , Represent user u I love products i, It's our training data ( Here you need to construct your own negative example ).valid_pair and test_pair The format inside is the same , Also some binary , A user corresponds to a positive example product and 100 Negative example items , The purpose of our task is to give this user this 101 Sort items , Then look at the sorting position of the sorting items .

Final data target

Be similar to gowalla in train.txt yes

The first in each line is user The following is a positive example item

test.txt in : user - To test items

from list To txt Of remap:

import torch

import numpy as np

from torch.utils.data import Dataset

import json

import string

org_path = "D:\GraphNetworkCF-main\Data\men\\user_idx.json"

des_path = "D:\GraphNetworkCF-main\Data\men\\user_list.txt"

with open(des_path, "a") as fl:

fl.write("org_id remap_id\n")

with open(org_path,'r',encoding='utf8')as fp:

json_datas = json.load(fp)

#print(' This is the... In the file json data :',json_datas)

#print(' This is the data type that reads the file data :', type(json_datas))

length = len(json_datas)

count = 1

for json_data in json_datas:

with open(des_path, "a") as fl:

fl.write(str(json_data)+" "+str(count)+"\n")

count = count + 1

mapping , Converting to an index should be easier to handle than a string

altogether 4807 individual user,43832 individual item

train_pair To user-items preliminary

import torch

import numpy as np

from torch.utils.data import Dataset

import json

import string

import textwrap

import fileinput

import os

org_path_user = "D:\GraphNetworkCF-main\Data\men\\user_list.txt"

org_path_item = "D:\GraphNetworkCF-main\Data\men\\item_list.txt"

org_path_train_pari = "D:\GraphNetworkCF-main\Data\men\\train_pair.json"

des_path = "D:\GraphNetworkCF-main\Data\men\\train.txt"

#coding:utf-8

with open(org_path_user,'r',encoding='utf-8') as f:

user_dic=[]

for line in f.readlines():

line=line.strip('\n') # Remove the newline \n

b=line.split(' ') # Convert each line to a list with a space separator

user_dic.append(b)

user_dic=dict(user_dic)

with open(org_path_item, 'r', encoding='utf-8') as f:

item_dic = []

for line in f.readlines():

line = line.strip('\n') # Remove the newline \n

b = line.split(' ') # Convert each line to a list with a space separator

item_dic.append(b)

item_dic=dict(item_dic)

with open(org_path_train_pari,'r',encoding='utf8')as fp:

json_datas = json.load(fp)

#print(' This is the... In the file json data :',json_datas)

#print(' This is the data type that reads the file data :', type(json_datas))

length = len(json_datas)

#print(length)

# 0 yes user 1 yes item

#print(json_datas[2][0])

now_user_id = -1

for json_data in json_datas:

nxt_user = json_data[0]

nxt_item = json_data[1]

nxt_user_id = user_dic[nxt_user]

item_id = item_dic[nxt_item]

#print(nxt_user_id)

#print(item_id)

if now_user_id != nxt_user_id:

# for the first time

now_user_id = nxt_user_id

with open(des_path, "a") as fl:

fl.write("\n")

fl.write(str(now_user_id) + " " + str(item_id))

else:

with open(des_path, "a") as fl:

fl.write(" " + str(item_id))

Further treatment train.txt

import torch

import numpy as np

from torch.utils.data import Dataset

import json

import string

import textwrap

import fileinput

import os

org_path_train = "D:\GraphNetworkCF-main\Data\men\\test.txt"

des_path = "D:\GraphNetworkCF-main\Data\men\\test_1.txt"

trains = {

}

with open(org_path_train,'r',encoding='utf-8') as f:

line_dic=[]

for line in f.readlines():

if len(line) == 0:break

line=line.strip('\n') # Remove the newline \n

items = [int(i) for i in line.split(' ')] # goods

uid, train_items = items[0], items[1:]

train_items.sort()

trains[uid] = train_items

#print(trains.items())

train = sorted(trains.items(), key=lambda d: d[0])

# print(train)

# print(type(train))

#count = 4807; # 0 - 4806

#print(max(train))

#print(len(train))

# print(type(train[count]))

# print(train[count][1])

length = len(train) # 1 - 4807

with open(des_path, "a") as fl:

for i in range(0,length):

fl.write("\n")

uid = i # 0 - 4806

u_items = train[i][1]

#print(u_items)

fl.write(str(uid)+" ")

for item in u_items:

fl.write(str(item)+" ")

Final effect

边栏推荐

- Super explanation

- How to use perforce helix core with CI build server

- Sqoop installation tutorial

- Cocoatouch framework and building application interface

- "All in one" is a platform to solve all needs, and the era of operation and maintenance monitoring 3.0 has come

- Moteur de modèle de moteur thymeleaf

- FIFO最小深度计算的题目合集

- Review Servlet

- Data quality: the core of data governance

- What is a thread pool?

猜你喜欢

verilog实现双目摄像头图像数据采集并modelsim仿真,最终matlab进行图像显示

Jsonobject jsonarray for parsing

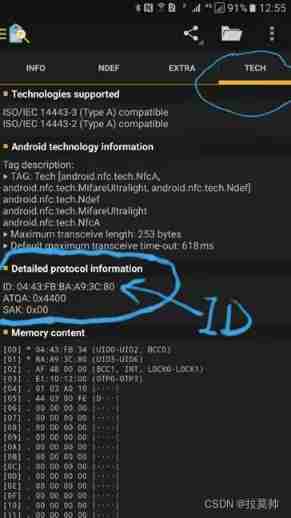

NFC Development -- the method of using NFC mobile phones as access control cards (II)

山东大学项目实训之examineListActivity

Getting started with kotlin

Altiumdesigner2020 import 3D body SolidWorks 3D model

“All in ONE”一个平台解决所有需求,运维监控3.0时代已来

FIFO最小深度计算的题目合集

那个酷爱写代码的少年后来怎么样了——走近华为云“瑶光少年”

Eureka集群搭建

随机推荐

Super explanation

View controller and navigation mode

11. Gesture recognition

Sqli-labs less-01

修复Yum依赖冲突

Chapter 4 of machine learning [series] naive Bayesian model

Goodbye 2021 Hello 2022

MySQL implements over partition by (sorting the data in the group after grouping)

Can Amazon, express, lazada and shrimp skin platforms use the 911+vm environment to carry out production number, maintenance number, supplement order and other operations?

Dichotomy find template

Control your phone with genymotion scratch

Cenos7 builds redis-3.2.9 and integrates jedis

Super (subclass)__ init__ And parent class__ init__ ()

Yoyov5's tricks | [trick8] image sampling strategy -- Sampling by the weight of each category of the dataset

NLP-D46-nlp比赛D15

Which company is better in JIRA organizational structure management?

Mingw-w64 installation instructions

OJDBC在Linux系统下Connection速度慢解决方案

Concepts and differences of parallel computing, distributed computing and cluster (to be updated for beginners)

Growth Diary 01