当前位置:网站首页>特征工程学习笔记

特征工程学习笔记

2022-08-03 12:02:00 【羊咩咩咩咩咩】

针对数据的特征进行操作的一些函数

1.针对字符串编码

读取数据

import pandas as pd

vg_df =pd.read_csv(,encoding='ISO-8859-1')

vg_df[['Name','Platform','Year','Genre','Publisher']].iloc[1:7]LabelEncoder:针对不同的文本属性值转换成数字。

import numpy as np

genre=np.unique(vg_df['Genre'])

from sklearn.preprocessing import LabelEncoder

gle =LabelEncoder()

gle_label =gle.fit_transform(vg_df['Genre'])

genre_mapping = {index:label for index,label in enumerate(gle.classes_)}

gle.classes_可以通过gle.classes_输出训练后的分类。

poke_df =pd.read_csv(,encoding='ISO-8859-1')

poke_df.head()

gle =LabelEncoder()

generation_label =gle.fit_transform(poke_df['Generation'])

poke_df['Generation_label']=generation_label

poke_dfOneHotEncoder:独热编码,对数据进行数值映射操作,独热编码可以将所有可能情况进行展开。

from sklearn.preprocessing import OneHotEncoder

onehot_encoder = OneHotEncoder()

gen_feature_arr = onehot_encoder.fit_transform(poke_df[['Generation']]).toarray()

gen_feature_labels = list(gle.classes_)

gen_features = pd.DataFrame(gen_feature_arr,columns =gen_feature_labels)

poke_df_ohe = pd.concat([poke_df,gen_features],axis=1)

poke_df_ohe或者可以通过pd.get_dummies进行操作

gen_ohe = pd.get_dummies(poke_df['Generation'])

pd.concat([gen_ohe,poke_df],axis=1)

gen_onehot_features = pd.get_dummies(poke_df['Generation'],prefix='one_hot')##prefit为添加前缀

pd.concat([poke_df,gen_onehot_features],axis=1)2.二值与多项式特征

对二值特征进行操作

popsong_df = pd.read_csv(,encoding='utf-8')

popsong_df.head()

watched = np.array(popsong_df['listen_count'])

watched[watched >=1]=1

popsong_df['watched']=watched

popsong_df

from sklearn.preprocessing import Binarizer

bn = Binarizer(threshold=0.9)

pd_watched = bn.transform(popsong_df['listen_count'].values.reshape(-1,1))

popsong_df['pd_watched']=pd_watched

popsong_df.head()可以使用Polynomialfeature对高次方的数据改变成新的特征

poke_df =pd.read_csv(,encoding='utf-8')

atk_df = poke_df[['Attack','Defense']]

atk_df.head()

from sklearn.preprocessing import PolynomialFeatures

pf = PolynomialFeatures(degree=2,interaction_only=False,include_bias=False)

res =pf.fit_transform(atk_df)3.对连续值进行离散化,将数据划分成几个区间

fcc_survey_df =pd.read_csv(,encoding='utf-8')

fcc_survey_df.head()

fcc_survey_df['Age_bin_round']= np.array(np.floor(np.array(fcc_survey_df['Age'])/10.))##np.floor为向下取整

fcc_survey_df[['Age','Age_bin_round']]

quantile_list=[0,.25,.5,.75,1.]

quantiles =fcc_survey_df['Income'].quantile(quantile_list)

quantiles

quantiles_label=['0~25','25~50','50~75','75~100']

fcc_survey_df['income_quantile_range']=pd.qcut(fcc_survey_df['Income'],q=quantile_list)

fcc_survey_df['income_quantile_label'] =pd.qcut(fcc_survey_df['Income'],q=quantile_list,labels = quantiles_label)

fcc_survey_df4.使用对数对时间序列进行操作

fcc_survey_df['income_log'] = np.log((1+fcc_survey_df['Income']))

income_log_mean = np.round(np.mean(fcc_survey_df['income_log']),2)

import datetime

import numpy as np

import pandas as pd

from dateutil.parser import parse

import pytz

time_stamps = ['2015-03-08 10:30:00.360000+00:00', '2017-07-13 15:45:05.755000-07:00',

'2012-01-20 22:30:00.254000+05:30', '2016-12-25 00:30:00.000000+10:00']

df = pd.DataFrame(time_stamps, columns=['Time'])

df

ts_objs = np.array([pd.Timestamp(item) for item in np.array(df.Time)])

df['Year'] = df['TS_obj'].apply(lambda d: d.year)

df['Month'] = df['TS_obj'].apply(lambda d: d.month)

df['Day'] = df['TS_obj'].apply(lambda d: d.day)

df['DayOfWeek'] = df['TS_obj'].apply(lambda d: d.dayofweek)

df['DayOfYear'] = df['TS_obj'].apply(lambda d: d.dayofyear)

df['WeekOfYear'] = df['TS_obj'].apply(lambda d: d.weekofyear)

df['Quarter'] = df['TS_obj'].apply(lambda d: d.quarter)

df[['Time', 'Year', 'Month', 'Day', 'Quarter',

'DayOfWeek', 'DayOfYear', 'WeekOfYear']]文本特征处理

1.建立词袋模型,进行分词操作

import pandas as pd

import numpy as np

import re

import nltk

corpus = ['The sky is blue and beautiful.',

'Love this blue and beautiful sky!',

'The quick brown fox jumps over the lazy dog.',

'The brown fox is quick and the blue dog is lazy!',

'The sky is very blue and the sky is very beautiful today',

'The dog is lazy but the brown fox is quick!'

]

labels = ['weather', 'weather', 'animals', 'animals', 'weather', 'animals']

corpus = np.array(corpus)

corpus_df = pd.DataFrame({'Document': corpus,

'Category': labels})

corpus_df = corpus_df[['Document', 'Category']]

corpus_df

#加载停用词

wpt = nltk.WordPunctTokenizer()

stop_words = nltk.corpus.stopwords.words('english')

def normalize_document(doc):

# 去掉特殊字符

doc = re.sub(r'[^a-zA-Z0-9\s]', '', doc, re.I)

# 转换成小写

doc = doc.lower()

doc = doc.strip()

# 分词

tokens = wpt.tokenize(doc)

# 去停用词

filtered_tokens = [token for token in tokens if token not in stop_words]

# 重新组合成文章

doc = ' '.join(filtered_tokens)

return doc

norm_corpus = normailized_document(corpus)

norm_corpus2.使用文本特征构造方法

tf-idf:不急考虑了词频,同时考虑了词的重要性

from sklearn.feature_extraction.text import TfidfVectorizer

tv = TfidfVectorizer(min_df=0.,max_df=1.,use_idf=True)

tv_matrix = tv.fit_transform(norm_corpus)

tv_matrix=tv_matrix.toarray()

vocab = tv.get_feature_names()

pd.DataFrame(np.round(tv_matrix,2),columns=vocab)相似度特征:将特征转换成树枝数据,然后计算其相似度,这里需要tf-idf值

##文本相似度特征

from sklearn.metrics.pairwise import cosine_similarity

similarity_matrix =consine_similarity(tv_matrix)特征聚类:将数据按堆划分,最后给每一堆一个实际标签。

##特征聚类

from sklearn.cluster import KMeans

km = KMeans(n_cluster=2)

km.fit_transform(similarity_df)

cluster_labels -km.labels_

cluster_labels =pd.DataFrame(cluster_labels,columns=['ClusterLabel'])

pd.concat([corpus_df,cluster_labels],axis=1)建立主题模型:主题模型属于无监督方法,输入就是处理好的语料库,可以得到主题类型以及每一个词的权重。

##主题模型

from sklearn.decomposition import LatentDirichletAllocation

lda =LaatentDirichletAllocation(n_topics=2,max_iter=100,random_state=42)

dt_matrix =lda.fit_transform(tv_matrix)

features =pd.DataFrame(dt_matrix,columns=['T1','T2'])

tt_matrix =lda.components_

for topic_weights in tt_matrix:

topic =[(token,weight) for token,weight in zip(vocab,topic_weights)]

topic =sorted(topic,key =lambda x :-x[1])

topic =[item for item in topic if item[1] >0.6]

print(topuc)建立词向量模型:选对每个词进行初始化操作,赋予了每个词的实际的空间意义。

##词向量模型

from gensim.models import word2vec

wpt = nltk.wordPunctTokenizer()

tokenized_corpus =[wpt.tokenized(documents) for documents in norm_corpus]

feature_size =10##词向量维度

windows_context =10##滑动窗口

min_word_count =1##最小词频

w2v_model =word2vec.WoRD2vEC(tokenized_corpus,size =feature_size,windows =window_context,min_count =min_word_count)

w2v_model.wv['sky']边栏推荐

猜你喜欢

After completing the interview and clearance collection of Alibaba, I successfully won the 15th Offer this year

深度学习中数据到底要不要归一化?实测数据来说明!

劝退背后。

一个扛住 100 亿次请求的红包系统,写得太好了!!

深度学习跟踪DLT (deep learning tracker)

【一起学Rust】Rust学习前准备——注释和格式化输出

4500字归纳总结,一名软件测试工程师需要掌握的技能大全

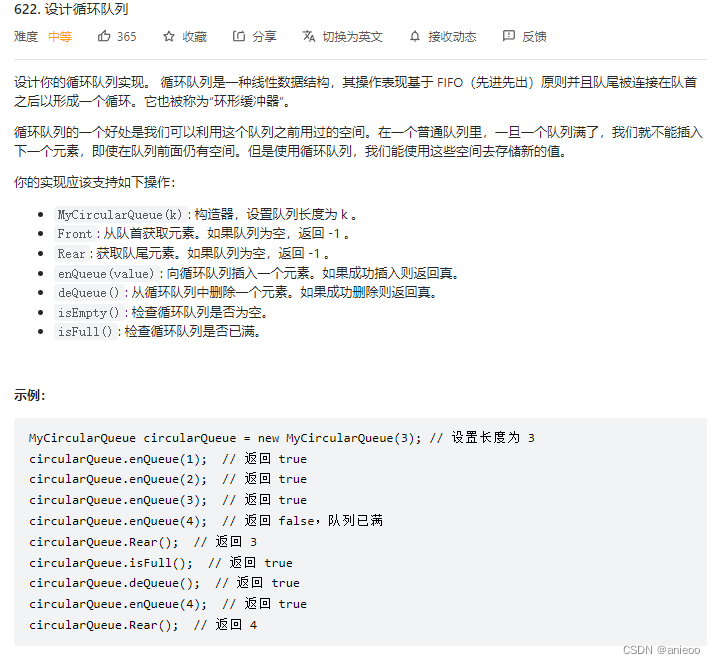

622. 设计循环队列

html+css+php+mysql实现注册+登录+修改密码(附完整代码)

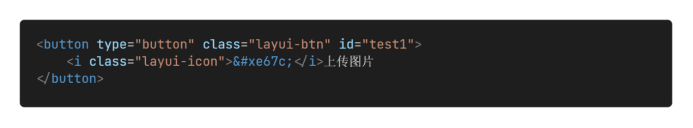

后台图库上传功能

随机推荐

viewstub 的详细用法_pageinfo用法

hystrix 服务熔断和服务降级

【MySQL】数据库进阶之索引内容详解(上篇 索引分类与操作)

【一起学Rust】Rust的Hello Rust详细解析

数据库系统原理与应用教程(073)—— MySQL 练习题:操作题 131-140(十七):综合练习

"Digital Economy Panorama White Paper" Financial Digital User Chapter released!

PC client automation testing practice based on Sikuli GUI image recognition framework

RTP协议分析

从零开始Blazor Server(6)--基于策略的权限验证

bash case usage

第3章 搭建短视频App基础架构

How to do App Automation Testing?Practical sharing of the whole process of App automation testing

Take you understand the principle of CDN technology

最牛逼的集群监控系统,它始终位列第一!

国内数字藏品与国外NFT主要有以下六大方面的区别

笔试题:金额拆分

码率vs.分辨率,哪一个更重要?

622. 设计循环队列

LeetCode——622.设计循环队列

基于英雄联盟的知识图谱问答系统