
Large language models teach agents to evolve: OpenAI reveals the complementary relationship between the two

2022-06-21 21:54:00 【Zhiyuan community】

The work makes four contributions: it proposes ELM, a method for efficiently evolving programs through an LLM; it presents a technique, based on an LLM mutation operator, for improving ELM's search capability over time; it demonstrates ELM in a domain not covered by the LLM's training data; and it verifies that data generated through ELM can be used to produce an enhanced LLM, which offers a new route toward open-endedness.
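The general idea of an evolutionary loop with a pluggable mutation operator can be sketched as follows. This is a minimal illustration, not the ELM implementation: `evolve`, `toy_mutate`, `toy_fitness`, and `TARGET` are hypothetical names, and `toy_mutate` is a random-character placeholder standing in for where an LLM would propose an edited program.

```python
import random
import string
from typing import Callable, List, Optional

TARGET = "hello"  # hypothetical goal, used only by the toy fitness function


def toy_fitness(candidate: str) -> float:
    """Count the positions where the candidate matches TARGET."""
    return float(sum(a == b for a, b in zip(candidate, TARGET)))


_mutation_rng = random.Random(1)  # fixed seed so runs are repeatable


def toy_mutate(parent: str) -> str:
    """Placeholder for an LLM mutation operator: overwrite one random character."""
    i = _mutation_rng.randrange(len(parent))
    new_char = _mutation_rng.choice(string.ascii_lowercase)
    return parent[:i] + new_char + parent[i + 1:]


def evolve(
    seed: str,
    mutate: Callable[[str], str],
    fitness: Callable[[str], float],
    generations: int = 50,
    population_size: int = 8,
    rng: Optional[random.Random] = None,
) -> str:
    """Elitist evolutionary search; returns the fittest individual found."""
    rng = rng or random.Random(0)
    population: List[str] = [seed]
    for _ in range(generations):
        # Propose children by mutating randomly chosen parents.
        children = [mutate(rng.choice(population)) for _ in range(population_size)]
        # Keep the fittest individuals among parents and children,
        # so the best fitness never decreases between generations.
        ranked = sorted(population + children, key=fitness, reverse=True)
        population = ranked[:population_size]
    return population[0]


best = evolve("aaaaa", toy_mutate, toy_fitness, generations=30)
```

Because the mutation operator is passed in as a function, the random placeholder could be swapped for a call to a language model without changing the search loop; how the model is prompted and how candidates are scored are left open here.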

边栏推荐

- Scientific research cartoon | you can learn EEG by looking at the picture. Would you like to try it?

- Overriding Overloading final

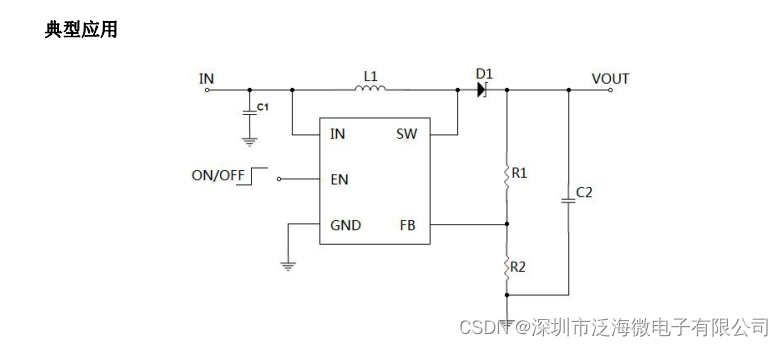

- M3608 boost IC chip high efficiency 2.6a boost dc/dc converter

- There is no sound solution to the loopback when jerryzhi launches Bluetooth [chapter]

- 有哪些将英文文献翻译为中文的网站或软件?

- Jerizhi, processing method for prompting chip information mismatch and error code 10 [chapter]

- 晶合集成通过注册:拟募资95亿 合肥建投与美的是股东

- Jerry's near end tone change problem of opening four channel call [chapter]

- #16迭代器经典案例

- 科研漫畫 | 看圖可以學腦電,來試試?

猜你喜欢

Huawei Hongmeng development lesson 3

类似中国知网但是搜索英文文献的权威网站有哪些?

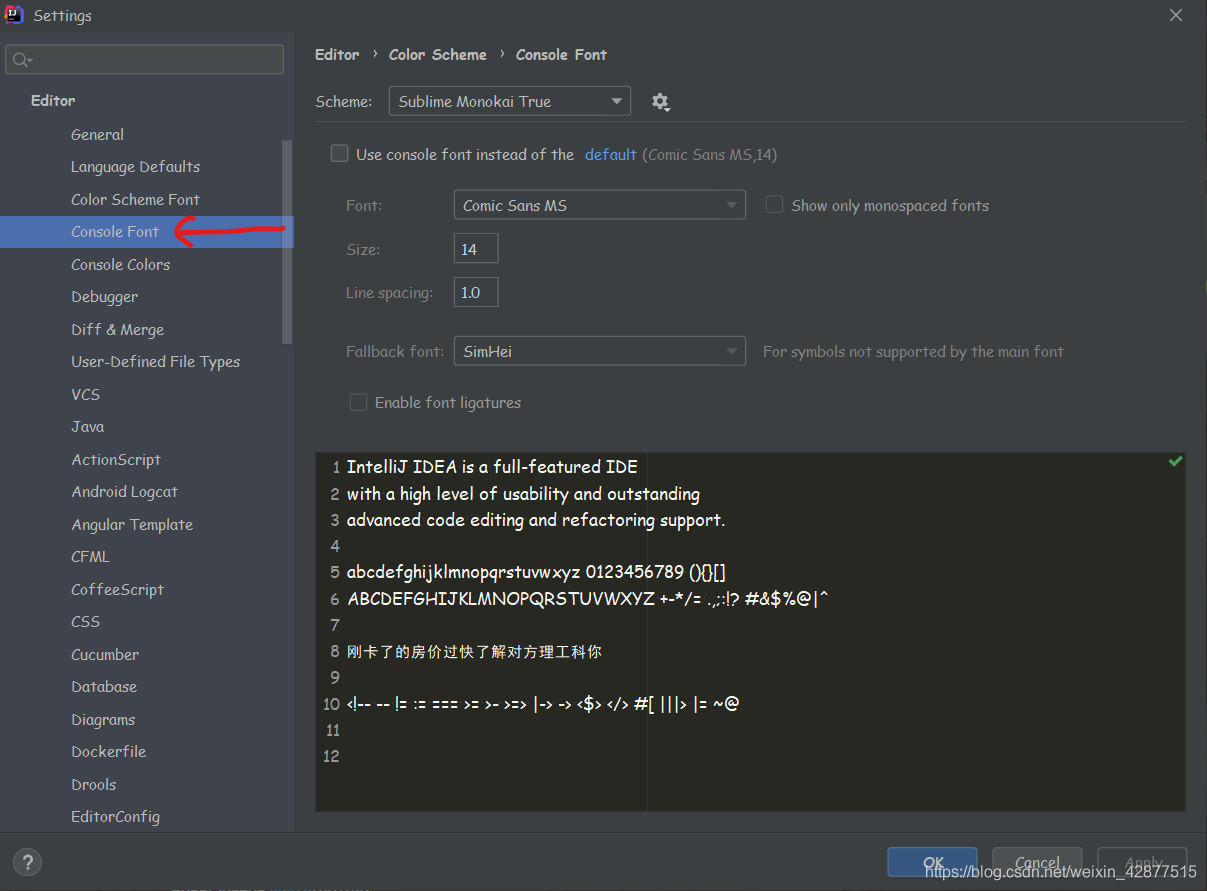

InteliJ-IDEA-高效技巧(一)

How to use the free and easy-to-use reference management software Zotero? Can I support both Chinese and English

Hypebeast, un média à la mode, est sur le point d'être lancé: 530 millions de dollars pour le troisième trimestre

Tc3608h high efficiency 1.2MHz DC-DC booster IC

js中的for.....in函数

撰写学术论文引用文献时,标准的格式是怎样的?

Yx2811 landscape installation driver IC

在AD中安装元件和封装库

随机推荐

挖财赠送的证券账户安全吗?可以开户吗?

Can I open an account for online stock trading? Is it safe

ctfshow 105-127

ADT Spec RI AF CheckRep Safety from Rep Exposure

Xmind8 latest cracking tutorial (useful for personal testing)

Tx9116 Synchronous Boost IC

Jerry's problem of playing songs after opening four channel EQ [chapter]

ACM. HJ35 蛇形矩阵 ●

Uibutton implements left text and right picture

Overriding Overloading final

安全加密简介

类似中国知网但是搜索英文文献的权威网站有哪些?

J - Count the string HDU - 3336 (KMP)

ACM. HJ61 放苹果 ●

盐语Saltalk获800万美元A轮融资:继续扩大团队及市场覆盖

#16迭代器经典案例

Mendeley 安装、配置、使用

7.目标检测

Go language self-study series | golang standard library encoding/xml

Which small directions of cv/nlp are easy to publish papers?