
Building a Vision Transformer in Practice: Finally Meeting the timm Library!

2022-07-25 12:19:00 【MengYa_Dream】

Preface: Without the right tools, everything is a struggle. Something that should have been simple to implement had me going round in circles. Today, let's meet the timm library!

Contents

1. Baidu PaddlePaddle: Learn Vision Transformer from Scratch

2. Resources: Excellent Open-Source Vision Transformer Work

3. How I Found timm: Debugging

4. The timm Library

4.1 Concept

4.2 Using the timm Vision Transformer Flexibly

4.2.1 Parameters in vision_transformer.py

4.2.2 Calling and Building a Vision Transformer with timm

PyTorch's popularity hardly needs mentioning, and everyone knows how popular the Vision Transformer is. Building a Vision Transformer myself was my original goal, but I simply didn't know where to start; the hard part was using the existing pieces flexibly. Today I suddenly realized it isn't as bad as I thought: the clues were already there in my earlier experiments.

First, here are some resources I came across during my early study that walk step by step through Transformer architecture ideas and practice:

1. Baidu PaddlePaddle: Learn Vision Transformer from Scratch

The theory is covered in detail. The code is built on Baidu's PaddlePaddle library, which differs somewhat from PyTorch, but the underlying ideas are the same.

Course: Learn Vision Transformer from Scratch

Paddle-PyTorch API mapping table: paddle vs. pytorch comparison

2. Resources: Excellent Open-Source Vision Transformer Work

Excellent open-source Vision Transformer work: a code reading of the timm library's vision transformer

python timm library - CSDN Blog

3. How I Found timm: Debugging

I had been trying to build my own network model, but it never gave the results I wanted, which was genuinely painful. When reproducing earlier work, there are plenty of reproductions available, but they rarely come with an in-depth explanation. If you only skim the surface, how can you understand the authors' real intent, or how they built on the work of those before them? So I started debugging again and again, learning one breakpoint at a time. As someone who jumped into Python without a background, I have gradually built up a lot of knowledge along the way. Sure enough, as long as you don't give up, there are more solutions than difficulties!

So let me introduce the timm library! While debugging my reproduction, I looked at how the original author's Vision Transformer module actually worked and found that it wasn't built entirely from scratch at all! The tools already exist; you just need to adapt them. Suddenly everything looks brighter, hahaha!


4. The timm Library

4.1 Concept

timm (PyTorch Image Models) is, simply put, a PyTorch library that extends torchvision.models. It targets computer-vision models, mainly for image classification, and all of its models share a common default API (for example, any model can be created by name with timm.create_model).
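timm exposes every model through the same name-based entry point, `timm.create_model`, and registers its model functions with a `@register_model` decorator. To illustrate that pattern without needing PyTorch, here is a pure-Python toy. `_MODEL_REGISTRY`, `register_model`, `list_models` and `create_model` below are my own simplified, hypothetical stand-ins, not timm's implementation, and the "models" are just configuration dicts.

```python
from typing import Any, Callable, Dict, List

# Hypothetical toy registry: a simplified illustration of the
# "create any model by name" pattern, NOT timm's actual source code.
_MODEL_REGISTRY: Dict[str, Callable[..., Dict[str, Any]]] = {}


def register_model(fn: Callable[..., Dict[str, Any]]) -> Callable[..., Dict[str, Any]]:
    """Record a model constructor under its function name."""
    _MODEL_REGISTRY[fn.__name__] = fn
    return fn


def list_models() -> List[str]:
    """Return every registered model name in sorted order."""
    return sorted(_MODEL_REGISTRY)


def create_model(name: str, **kwargs: Any) -> Dict[str, Any]:
    """Look up a constructor by name and forward keyword overrides to it."""
    if name not in _MODEL_REGISTRY:
        raise ValueError(f"Unknown model name: {name!r}")
    return _MODEL_REGISTRY[name](**kwargs)


@register_model
def vit_base_patch16_224(**kwargs: Any) -> Dict[str, Any]:
    # A real library would build an nn.Module here; the toy only returns
    # the configuration so that the example runs without PyTorch.
    config = dict(img_size=224, patch_size=16, embed_dim=768,
                  depth=12, num_heads=12, num_classes=1000)
    config.update(kwargs)  # caller overrides take precedence over defaults
    return config


@register_model
def vit_base_patch16_384(**kwargs: Any) -> Dict[str, Any]:
    config = dict(img_size=384, patch_size=16, embed_dim=768,
                  depth=12, num_heads=12, num_classes=1000)
    config.update(kwargs)
    return config


print(list_models())
print(create_model("vit_base_patch16_384", num_classes=10))
```

The design point is that callers only need a string name plus keyword overrides, so swapping architectures or changing the number of classes does not require importing a specific class.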

Model overview: Model Summaries - Pytorch Image Models (rwightman.github.io)

Model results: Results - Pytorch Image Models (rwightman.github.io)

4.2 Using the timm Vision Transformer Flexibly

4.2.1 Parameters in vision_transformer.py

- img_size: input image size. Default 224; an int or a tuple of ints (height, width).

- patch_size: patch size. Default 16; an int or a tuple of ints.

- in_chans: number of input channels. Default 3, int.

- num_classes: number of classes for the classification head. Default 1000, int.

- embed_dim: Transformer embedding dimension. Default 768, int.

- depth: number of Transformer blocks. Default 12, int.

- num_heads: number of attention heads. Default 12, int.

- mlp_ratio: ratio of the MLP hidden dimension to the embedding dimension. Default 4.0, float.

- qkv_bias: whether the attention module adds a bias when computing q, k and v. Default True, bool.

- qk_scale: if set, overrides the default attention scale of head_dim ** -0.5. Usually None.

- drop_rate: dropout rate. Default 0, float.

- attn_drop_rate: dropout rate inside the attention module. Default 0, float.

- drop_path_rate: stochastic depth (drop path) rate. Default 0, float.

- hybrid_backbone: whether the image passes through a backbone before being split into patches. Default None, in which case the image is converted into patches directly. Otherwise it is an nn.Module: the image first goes through this backbone, and the resulting feature map is then split into patches.

- norm_layer: normalization layer type. Default None (which falls back to nn.LayerNorm), nn.Module type.

- Note: a tuple is another Python data type; like a list, it is an ordered collection of objects, but unlike a list it is immutable.
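To see how these parameters fit together, the sketch below derives the patch count, sequence length, per-head dimension, MLP width and a parameter count from them. It assumes the standard ViT layout used by timm's VisionTransformer (convolutional patch embedding, a class token, a learned position embedding, pre-norm blocks with LayerNorm, a final LayerNorm and a linear head) and leaves out qk_scale, the dropout rates and hybrid_backbone, which either add no parameters or are out of scope here. `vit_summary` is a hypothetical helper of mine, not part of timm.

```python
def vit_summary(img_size=224, patch_size=16, in_chans=3, num_classes=1000,
                embed_dim=768, depth=12, num_heads=12, mlp_ratio=4.0,
                qkv_bias=True):
    """Derive shapes and a parameter count for a standard (non-hybrid) ViT.

    Assumed layout: conv patch embedding, class token, learned position
    embedding, `depth` pre-norm blocks (LayerNorm -> attention,
    LayerNorm -> MLP), a final LayerNorm and a linear classification head.
    Dropout and drop path add no parameters, so they are ignored.
    """
    assert img_size % patch_size == 0, "img_size must be divisible by patch_size"
    assert embed_dim % num_heads == 0, "embed_dim must be divisible by num_heads"

    grid = img_size // patch_size
    num_patches = grid * grid
    seq_len = num_patches + 1              # +1 for the class token
    head_dim = embed_dim // num_heads
    mlp_hidden = int(embed_dim * mlp_ratio)

    def linear(n_in, n_out, bias=True):
        """Weights plus optional bias of a fully connected layer."""
        return n_in * n_out + (n_out if bias else 0)

    layer_norm = 2 * embed_dim             # LayerNorm weight + bias
    # A conv with kernel = stride = patch_size has as many parameters as a
    # linear layer over one flattened patch.
    patch_embed = linear(in_chans * patch_size * patch_size, embed_dim)
    tokens = embed_dim + seq_len * embed_dim   # class token + position embedding
    block = (layer_norm
             + linear(embed_dim, 3 * embed_dim, bias=qkv_bias)  # qkv projection
             + linear(embed_dim, embed_dim)                      # attention output
             + layer_norm
             + linear(embed_dim, mlp_hidden)                     # MLP fc1
             + linear(mlp_hidden, embed_dim))                    # MLP fc2
    head = linear(embed_dim, num_classes) if num_classes > 0 else 0
    params = patch_embed + tokens + depth * block + layer_norm + head

    return dict(num_patches=num_patches, seq_len=seq_len, head_dim=head_dim,
                mlp_hidden=mlp_hidden, params=params)


print(vit_summary())
print(vit_summary(img_size=384)["num_patches"])
```

With the defaults this reports 196 patches (14 × 14), a sequence length of 197, a head dimension of 64, an MLP width of 3072 and 86,567,656 parameters, consistent with the roughly 86M usually quoted for ViT-Base/16. A 384-pixel input yields 576 patches, which is why the 384 variants carry a larger position embedding.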

4.2.2 Calling and Building a Vision Transformer with timm

(1) Import the necessary library:

import timm

(2) Create a model from the timm library (pretrained=True loads the pretrained weights):

model = timm.create_model("vit_deit_base_patch16_384", pretrained=True)

(3) Adjust the model as needed; I will add more on this later if necessary.

边栏推荐

- Ansible

- 【GCN-RS】Region or Global? A Principle for Negative Sampling in Graph-based Recommendation (TKDE‘22)

- scrapy 设置随机的user_agent

- [high concurrency] Why is the simpledateformat class thread safe? (six solutions are attached, which are recommended for collection)

- 【AI4Code最终章】AlphaCode:《Competition-Level Code Generation with AlphaCode》(DeepMind)

- Dr. water 2

- Go garbage collector Guide

- 给生活加点惊喜,做创意生活的原型设计师丨编程挑战赛 x 选手分享

- Fault tolerant mechanism record

- 协程

猜你喜欢

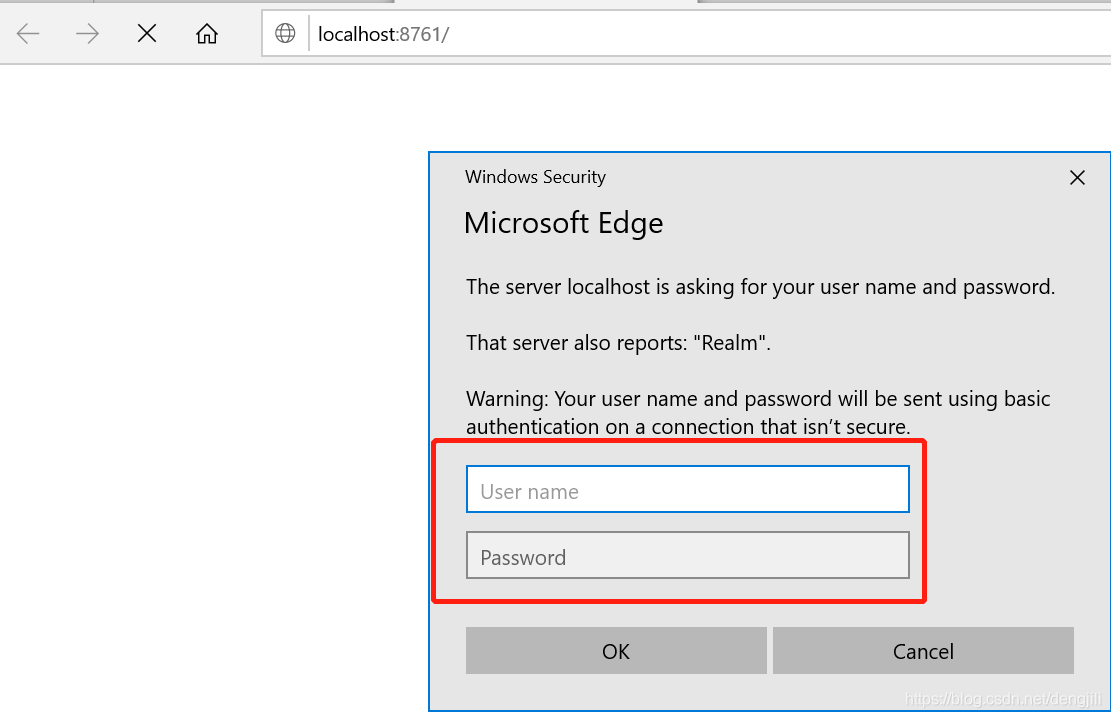

Eureka注册中心开启密码认证-记录

3.2.1 什么是机器学习?

![[GCN multimodal RS] pre training representations of multi modal multi query e-commerce search KDD 2022](/img/9c/0434d40fa540078309249d415b3659.png)

[GCN multimodal RS] pre training representations of multi modal multi query e-commerce search KDD 2022

scrapy 爬虫框架简介

![[dark horse morning post] eBay announced its shutdown after 23 years of operation; Wei Lai throws an olive branch to Volkswagen CEO; Huawei's talented youth once gave up their annual salary of 3.6 mil](/img/d7/4671b5a74317a8f87ffd36be2b34e1.jpg)

[dark horse morning post] eBay announced its shutdown after 23 years of operation; Wei Lai throws an olive branch to Volkswagen CEO; Huawei's talented youth once gave up their annual salary of 3.6 mil

Application and innovation of low code technology in logistics management

Can't delete the blank page in word? How to operate?

Transformer variants (routing transformer, linformer, big bird)

Web programming (II) CGI related

Zuul gateway use

随机推荐

[GCN multimodal RS] pre training representations of multi modal multi query e-commerce search KDD 2022

MySQL exercise 2

mysql的表分区

Eureka注册中心开启密码认证-记录

Implement anti-theft chain through referer request header

【九】坐标格网添加以及调整

【6篇文章串讲ScalableGNN】围绕WWW 2022 best paper《PaSca》

Brpc source code analysis (I) -- the main process of RPC service addition and server startup

[high concurrency] Why is the simpledateformat class thread safe? (six solutions are attached, which are recommended for collection)

氢能创业大赛 | 国家能源局科技司副司长刘亚芳:构建高质量创新体系是我国氢能产业发展的核心

Eureka使用记录

R language ggpubr package ggarrange function combines multiple images and annotates_ Figure function adds annotation, annotation and annotation information for the combined image, adds image labels fo

scrapy 设置随机的user_agent

Heterogeneous graph neural network for recommendation system problems (ackrec, hfgn)

Introduction to redis

selenium使用———安装、测试

Eureka usage record

scrapy爬虫爬取动态网站

【GCN-RS】Are Graph Augmentations Necessary? Simple Graph Contrastive Learning for RS (SIGIR‘22)

Video caption (cross modal video summary / subtitle generation)