[Camera Basics (I)] Working principle and overall structure of a camera

2022-06-24 21:44:00 【Sweet galier】

Camera: working principle and overall device structure

One 、 The basic working principle of the camera

As shown in the figure, light reflected from the scene is collected by the lens (the aperture controls how much light enters, and a motor adjusts the focus) and focused onto the image sensor, where a color filter separates it into the three primary colors. The sensor converts the optical signal into an electrical signal, and analog-to-digital conversion then yields the raw bitstream data.

Question: can we use the raw bitstream data, which contains the image and color information, directly?

Answer: not directly. The format of the delivered data stream is determined by the request that the client sends over the VC interface.

Two 、 Encoding

The camera software layer generally exposes a range of formats and resolutions for the upper layer to choose from. Common formats include YUYV, MJPEG, H264, and NV12:

- YUYV: raw bitstream; every pixel takes 2 bytes.

- MJPEG: compresses the data by a factor of about 7; the underlying raw frames can be either NV12 or YUYV.

- H264: the ratio depends mainly on the configuration. I-frames compress about 7 times, P-frames about 20 times, and B-frames about 50 times. In theory, the more B-frames a stream uses, the more likely it can carry high-resolution, high-frame-rate video.

- NV12: raw bitstream; every pixel takes 1.5 bytes.
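The per-pixel figures for the two raw formats translate directly into frame sizes. The following is a minimal sketch; the function names and the 1920x1080 example resolution are illustrative assumptions, not part of the original article. It also splits an NV12 frame into its two planes (a full-resolution Y plane followed by an interleaved UV plane at half the size), which is where the 1.5 bytes per pixel come from.

```python
# Bytes per pixel for the two raw formats listed above.
BYTES_PER_PIXEL = {"YUYV": 2.0, "NV12": 1.5}


def frame_size_bytes(fmt, width, height):
    """Size of one uncompressed frame in bytes (hypothetical helper)."""
    return int(width * height * BYTES_PER_PIXEL[fmt])


def nv12_plane_sizes(width, height):
    """Return (Y plane bytes, interleaved UV plane bytes) for an NV12 frame."""
    y_plane = width * height          # one luma byte per pixel
    uv_plane = width * height // 2    # one U and one V byte per 2x2 pixel block
    return y_plane, uv_plane


if __name__ == "__main__":
    for fmt in ("YUYV", "NV12"):
        print(fmt, frame_size_bytes(fmt, 1920, 1080), "bytes per 1080p frame")
    print("NV12 planes:", nv12_plane_sizes(1920, 1080))
```

At the same resolution an NV12 frame is three quarters the size of a YUYV frame, because NV12 stores chroma at a quarter of the luma resolution while YUYV stores it at half.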

Three 、 The purpose of encoding

Without encoding, how much bandwidth does one second of an NV12 4K 30 Hz stream need?

Answer: 3840 × 2160 × 1.5 × 30 bytes = 373,248,000 bytes ≈ 356 MB per second.

The camera interface we usually use is USB 2.0, whose theoretical bandwidth is 480 Mbps = 60 MB/s,

which cannot carry a raw NV12 4K 30 Hz preview stream. Codec technology can shrink the data substantially with little or no loss of image quality.
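The same arithmetic can be written as a short script. This is a sketch under the article's own numbers (NV12 at 1.5 bytes per pixel, USB 2.0 at a theoretical 480 Mbps); the function and constant names are made up for illustration. It also derives the minimum compression ratio a codec would need for the stream to fit.

```python
def raw_bandwidth_bytes_per_s(width, height, bytes_per_pixel, fps):
    """Bytes per second of an uncompressed video stream."""
    return width * height * bytes_per_pixel * fps


# USB 2.0 theoretical bandwidth: 480 Mbit/s, i.e. 60,000,000 bytes/s.
USB2_BYTES_PER_S = 480_000_000 / 8

needed = raw_bandwidth_bytes_per_s(3840, 2160, 1.5, 30)
required_ratio = needed / USB2_BYTES_PER_S

if __name__ == "__main__":
    print(f"raw NV12 4K30: {needed:,.0f} bytes/s")
    print(f"USB 2.0 limit: {USB2_BYTES_PER_S:,.0f} bytes/s")
    print(f"compression needed: at least {required_ratio:.2f}x")
```

The required ratio is measured against the theoretical limit only. Real USB 2.0 throughput is lower than 480 Mbps, so in practice more compression is needed than this lower bound suggests.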

Four 、 Transmission

A data transmission protocol for cameras has to be unified, widely adopted, and supported by both manufacturers and developers. USB is bound to have a place among such protocols, and complete-device solutions are basically USB camera solutions.

UVC is the abbreviation of USB Video Class, that is, a video device on the USB interface. A UVC device needs to contain 1 VC (VideoControl) interface and 1 or more VS (VideoStreaming) interfaces.

- VC interface: transfers configuration parameters, such as turning autofocus on and off, white balance, and so on.

- VS interface: transfers the image data stream.
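The "1 VC interface plus 1 or more VS interfaces" rule can be expressed as a small check over a device's interface descriptors. This is a sketch, not a USB stack: it assumes the caller has already collected one `(bInterfaceClass, bInterfaceSubClass)` pair per interface number, and the function name is hypothetical. The numeric codes are the USB video class code and its VideoControl and VideoStreaming subclass codes.

```python
USB_CLASS_VIDEO = 0x0E      # bInterfaceClass for video devices
SC_VIDEOCONTROL = 0x01      # bInterfaceSubClass: VC interface
SC_VIDEOSTREAMING = 0x02    # bInterfaceSubClass: VS interface


def is_uvc_function(interfaces):
    """Check the rule from the text: exactly 1 VC and at least 1 VS interface.

    `interfaces` is an iterable of (class, subclass) pairs, one per
    interface number (alternate settings are assumed to be deduplicated).
    """
    pairs = list(interfaces)
    vc = sum(1 for c, s in pairs if c == USB_CLASS_VIDEO and s == SC_VIDEOCONTROL)
    vs = sum(1 for c, s in pairs if c == USB_CLASS_VIDEO and s == SC_VIDEOSTREAMING)
    return vc == 1 and vs >= 1


if __name__ == "__main__":
    webcam = [(0x0E, 0x01), (0x0E, 0x02), (0x01, 0x01)]  # video plus an audio interface
    print(is_uvc_function(webcam))
    print(is_uvc_function([(0x0E, 0x02)]))               # streaming without control
```

Interfaces of other classes, such as the audio interface of a webcam with a microphone, are simply ignored by the check.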

USB protocol:

UVC protocol:

UVC model:

UVC software architecture:

Summary:

On Linux, applications interact with the USB camera through the UVC protocol. To stay compatible with different interaction protocols, the kernel abstracts a V4L2 driver layer, which makes it easy for upper-layer processes to work with the various protocols.

V4L2 provides a series of commands, as shown in the figure, and upper-layer processes interact with the underlying kernel through ioctl.

In this way, the upper-layer application can obtain the encoded stream produced by the camera device. If the overall solution runs Android, there are some differences from Linux, but further down the stack Android also talks to the kernel through V4L2 ioctl calls.

Five 、 The Android camera framework

As an open-source system, Android (AOSP) has many factors to weigh: downstream hardware manufacturers, application developers, and user privacy and security. Google therefore designed the Android camera architecture in 3 layers.

- CameraProvider: the HAL-layer process, started from init rc at boot.

- CameraServer: the framework-layer process, which mainly maintains the camera service that upper-layer APKs can access.

- The camera runtime layer: serves the top-level APKs and wraps a set of APIs so that developers can call them and develop quickly.

Android camera architecture diagram:

Summary:

Through its layered design, Android gives every upper-layer application the ability to access camera devices independently, and an application can implement camera preview and photo capture with simple API calls.

The raw stream is encoded, and the compressed data is delivered over USB to the kernel. Through the UVC protocol, the kernel interacts with the HAL layer; once the HAL layer has the data, CameraServer interacts with the HAL layer over hwbinder, and the frames are then displayed.

边栏推荐

- Tournament sort

- Slider控制Animator动画播放进度

- Minimum cost and maximum flow (template question)

- 多路转接select

- 虚拟机CentOS7中无图形界面安装Oracle(保姆级安装)

- Please open online PDF carefully

- Slider controls the playback progress of animator animation

- 介绍BootLoader、PM、kernel和系统开机的总体流程

- Wireshark packet capturing skills summarized by myself

- socket(1)

猜你喜欢

C语言-关键字1

使用 Go 编程语言 66 个陷阱:Golang 开发者的陷阱和常见错误指北

去掉录屏提醒(七牛云demo)

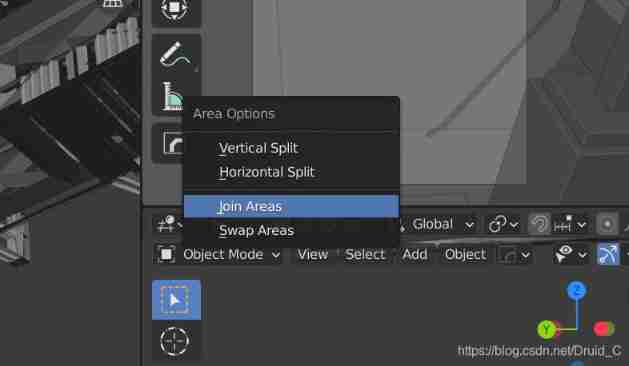

Multi view function in blender

123. the best time to buy and sell shares III

memcached全面剖析–2. 理解memcached的內存存儲

Pod lifecycle in kubernetes

Volcano becomes spark default batch scheduler

123. 买卖股票的最佳时机 III

188. the best time to buy and sell stocks IV

随机推荐

2022国际女性工程师日:戴森设计大奖彰显女性设计实力

为什么生命科学企业都在陆续上云?

Foundations of Cryptography

Intelligent fish tank control system based on STM32 under Internet of things

Rewrite, maplocal and maplocal operations of Charles

XTransfer技术新人进阶秘诀:不可错过的宝藏Mentor

ping: www.baidu. Com: unknown name or service

188. 买卖股票的最佳时机 IV

TCP specifies the source port

[cloud native learning notes] learn about kubernetes configuration list yaml file

[Web Security Basics] some details

Implementing DNS requester with C language

What does CTO (technical director) usually do?

[product design and R & D collaboration tool] Shanghai daoning provides you with blue lake introduction, download, trial and tutorial

PHP script calls command to get real-time output

Apple mobile phone can see some fun ways to install IPA package

PIXIV Gizmo

Graduation summary of phase 6 of the construction practice camp

多路转接select

leetcode_1365