COCO test-dev evaluation code

2022-07-27 00:16:00 【大黑山修道】

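In most cases the COCO test-dev evaluation code is already built into the pose estimation frameworks (HRNet, HigherHRNet, DEKR, SWAHR, and so on). Later, improved methods usually inherit most of a framework's basic functionality and change mainly the model internals or the loss. With such a framework, a single command evaluates a model on the COCO test-dev set.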

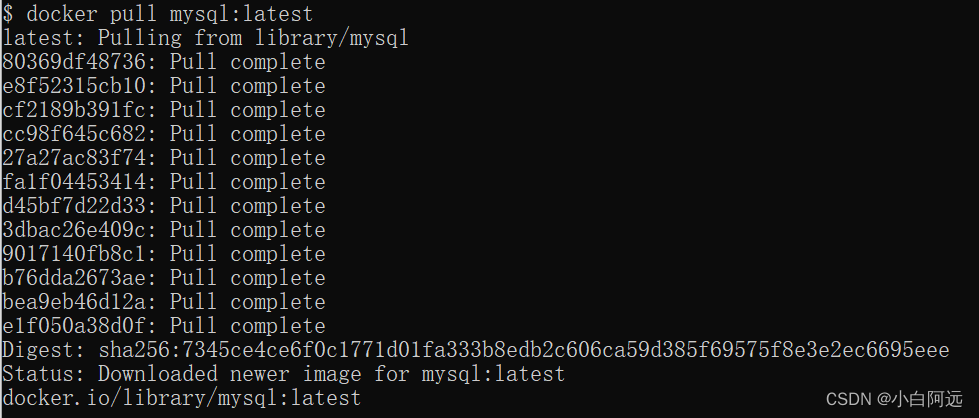

(DEKR)

python tools/valid.py \
    --cfg experiments/coco/w32/w32_4x_reg03_bs10_512_adam_lr1e-3_coco_x140.yaml \
    TEST.MODEL_FILE model/pose_coco/pose_dekr_hrnetw32_coco.pth \
    DATASET.TEST test-dev2017

The last argument selects COCO test-dev as the evaluation set; the default is the COCO val2017 dataset.
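The trailing `KEY VALUE` pairs on the command line override entries of the configuration loaded from the YAML file. The sketch below imitates that behavior on a plain nested dict. `apply_overrides` is a hypothetical helper written only for illustration; it is not part of DEKR or yacs, and the real framework delegates this step to `cfg.merge_from_list(args.opts)`.

```python
def apply_overrides(config, opts):
    """Apply ['SECTION.KEY', value, ...] pairs to a nested dict in place.

    Hypothetical stand-in for the override step; the real code relies on
    yacs (cfg.merge_from_list) instead of this function.
    """
    if len(opts) % 2 != 0:
        raise ValueError('overrides must come in KEY VALUE pairs')
    for dotted_key, value in zip(opts[0::2], opts[1::2]):
        *parents, leaf = dotted_key.split('.')
        node = config
        for name in parents:
            node = node[name]
        if leaf not in node:
            raise KeyError('unknown config key: ' + dotted_key)
        node[leaf] = value
    return config


# Defaults mirror the two entries that the command above overrides.
cfg = {
    'DATASET': {'TEST': 'val2017'},
    'TEST': {'MODEL_FILE': ''},
}
apply_overrides(cfg, [
    'TEST.MODEL_FILE', 'model/pose_coco/pose_dekr_hrnetw32_coco.pth',
    'DATASET.TEST', 'test-dev2017',
])
print(cfg['DATASET']['TEST'])  # test-dev2017
```

Omitting the `DATASET.TEST test-dev2017` pair leaves the default `val2017` in place, which is why the same command without it evaluates on the validation set.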

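The simplest approach: build on an existing framework (recommended):

The COCO dataset has to be accessed through cocoapi, which makes the whole pipeline fairly complex. The simplest route is to build directly on the framework of an existing pose estimation codebase.

If your work takes code such as HRNet or DEKR as the baseline and modifies it, you can usually run the original command unchanged to evaluate on COCO test-dev.

Alternatively, fit your modified network into the original framework, replacing the model, loss, and transforms modules as needed, and then evaluate with the original network's command.

Be careful to distinguish bottom-up from top-down pose estimation; the two kinds of framework differ substantially.

Recommended bottom-up pose estimation framework (DEKR):

GitHub:

https://github.com/HRNet/DEKR

I have not looked much into top-down methods, but HRNet certainly supports them; please search online for the details.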

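Porting the code:

This case is fairly complicated, and I have not tried it myself because there has been little need: adopting the original framework lets you evaluate directly. Even for a network written from scratch, gradually replacing parts of the framework with your own code is probably simpler than porting the evaluation code.

Code source (DEKR):

https://github.com/HRNet/DEKR

Code of the evaluation module: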

    def evaluate(self, cfg, preds, scores, output_dir, tag,
                 *args, **kwargs):
        '''
        Perform evaluation on COCO keypoint task
        :param cfg: cfg dictionary
        :param preds: prediction
        :param output_dir: output directory
        :param args:
        :param kwargs:
        :return:
        '''
        res_folder = os.path.join(output_dir, 'results')
        if not os.path.exists(res_folder):
            os.makedirs(res_folder)
        res_file = os.path.join(
            res_folder, 'keypoints_%s_results.json' % (self.dataset+tag))

        # preds is a list of: image x person x (keypoints)
        # keypoints: num_joints * 4 (x, y, score, tag)
        kpts = defaultdict(list)
        for idx, _kpts in enumerate(preds):
            img_id = self.ids[idx]
            file_name = self.coco.loadImgs(img_id)[0]['file_name']
            for idx_kpt, kpt in enumerate(_kpts):
                area = (np.max(kpt[:, 0]) - np.min(kpt[:, 0])) * \
                    (np.max(kpt[:, 1]) - np.min(kpt[:, 1]))
                kpt = self.processKeypoints(kpt)

                kpts[int(file_name[-16:-4])].append(
                    {
                        'keypoints': kpt[:, 0:3],
                        'score': scores[idx][idx_kpt],
                        'image': int(file_name[-16:-4]),
                        'area': area
                    }
                )

        # rescoring and oks nms
        oks_nmsed_kpts = []
        # image x person x (keypoints)
        for img in kpts.keys():
            # person x (keypoints)
            img_kpts = kpts[img]
            # person x (keypoints)
            # do not use nms, keep all detections
            keep = []
            if len(keep) == 0:
                oks_nmsed_kpts.append(img_kpts)
            else:
                oks_nmsed_kpts.append([img_kpts[_keep] for _keep in keep])

        self._write_coco_keypoint_results(
            oks_nmsed_kpts, res_file
        )

        if 'test' not in self.dataset:
            info_str = self._do_python_keypoint_eval(
                res_file, res_folder
            )
            name_value = OrderedDict(info_str)
            return name_value, name_value['AP']
        else:
            return {'Null': 0}, 0

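If that is not enough, the related modules have to be ported as well, which is very tedious.

Dataset modules needed to evaluate on COCO test-dev:

COCODataset.py (dataset = 'test-dev2017')

The evaluation code is in the evaluate function of CocoDataset.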
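The dataset name decides which annotation file CocoDataset loads: a name containing `test` selects the image-info file, which carries no keypoint labels, and any other name selects the keypoint annotations. A minimal sketch of that rule follows; `annotation_file` is a hypothetical standalone function that mirrors `_get_anno_file_name`, and it leaves out the `rescore` case, which maps to `train2017` in the listing.

```python
import posixpath


def annotation_file(root, split):
    """Return the annotation JSON for a split, mirroring _get_anno_file_name."""
    if 'test' in split:
        # Test splits only provide image metadata, no keypoint labels.
        name = 'image_info_{}.json'.format(split)
    else:
        name = 'person_keypoints_{}.json'.format(split)
    return posixpath.join(root, 'annotations', name)


print(annotation_file('data/coco', 'test-dev2017'))
# data/coco/annotations/image_info_test-dev2017.json
print(annotation_file('data/coco', 'val2017'))
# data/coco/annotations/person_keypoints_val2017.json
```

The same `'test' in self.dataset` check reappears in `evaluate`, where it skips the local COCOeval step and returns the placeholder `{'Null': 0}, 0`.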

# ------------------------------------------------------------------------------

# Copyright (c) Microsoft

# Licensed under the MIT License.

# The code is based on HigherHRNet-Human-Pose-Estimation.

# (https://github.com/HRNet/HigherHRNet-Human-Pose-Estimation)

# Modified by Zigang Geng.

# ------------------------------------------------------------------------------

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

from collections import defaultdict

from collections import OrderedDict

import logging

import os

import os.path

import cv2

import json_tricks as json

import numpy as np

from torch.utils.data import Dataset

import pycocotools

from pycocotools.cocoeval import COCOeval

from utils import zipreader

from utils.rescore import COCORescoreEval

logger = logging.getLogger(__name__)

class CocoDataset(Dataset):
    def __init__(self, cfg, dataset):
        from pycocotools.coco import COCO
        self.root = cfg.DATASET.ROOT
        self.dataset = dataset
        self.data_format = cfg.DATASET.DATA_FORMAT
        self.coco = COCO(self._get_anno_file_name())
        self.ids = list(self.coco.imgs.keys())

        cats = [cat['name']
                for cat in self.coco.loadCats(self.coco.getCatIds())]
        self.classes = ['__background__'] + cats
        logger.info('=> classes: {}'.format(self.classes))
        self.num_classes = len(self.classes)
        self._class_to_ind = dict(zip(self.classes, range(self.num_classes)))
        self._class_to_coco_ind = dict(zip(cats, self.coco.getCatIds()))
        self._coco_ind_to_class_ind = dict(
            [
                (self._class_to_coco_ind[cls], self._class_to_ind[cls])
                for cls in self.classes[1:]
            ]
        )

    def _get_anno_file_name(self):
        # example: root/annotations/person_keypoints_train2017.json
        # image_info_test-dev2017.json
        dataset = 'train2017' if 'rescore' in self.dataset else self.dataset
        if 'test' in self.dataset:
            return os.path.join(
                self.root,
                'annotations',
                'image_info_{}.json'.format(
                    self.dataset
                )
            )
        else:
            return os.path.join(
                self.root,
                'annotations',
                'person_keypoints_{}.json'.format(
                    dataset
                )
            )

    def _get_image_path(self, file_name):
        images_dir = os.path.join(self.root, 'images')
        # The listing assigned `dataset` twice in a row, so the 'test2017'
        # mapping was overwritten; the branches below keep both mappings.
        if 'rescore' in self.dataset:
            dataset = 'train2017'
        elif 'test' in self.dataset:
            dataset = 'test2017'
        else:
            dataset = self.dataset
        if self.data_format == 'zip':
            return os.path.join(images_dir, dataset) + '.zip@' + file_name
        else:
            return os.path.join(images_dir, dataset, file_name)

    def __getitem__(self, index):
        """
        Args:
            index (int): Index

        Returns:
            tuple: Tuple (image, target). target is the object returned by ``coco.loadAnns``.
        """
        coco = self.coco
        img_id = self.ids[index]
        ann_ids = coco.getAnnIds(imgIds=img_id)
        target = coco.loadAnns(ann_ids)
        image_info = coco.loadImgs(img_id)[0]

        file_name = image_info['file_name']

        if self.data_format == 'zip':
            img = zipreader.imread(
                self._get_image_path(file_name),
                cv2.IMREAD_COLOR | cv2.IMREAD_IGNORE_ORIENTATION
            )
        else:
            img = cv2.imread(
                self._get_image_path(file_name),
                cv2.IMREAD_COLOR | cv2.IMREAD_IGNORE_ORIENTATION
            )

        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

        if 'train' in self.dataset:
            return img, [obj for obj in target], image_info
        else:
            return img

    def __len__(self):
        return len(self.ids)

    def __repr__(self):
        fmt_str = 'Dataset ' + self.__class__.__name__ + '\n'
        fmt_str += ' Number of datapoints: {}\n'.format(self.__len__())
        fmt_str += ' Root Location: {}'.format(self.root)
        return fmt_str

    def processKeypoints(self, keypoints):
        tmp = keypoints.copy()
        if keypoints[:, 2].max() > 0:
            p = keypoints[keypoints[:, 2] > 0][:, :2].mean(axis=0)
            num_keypoints = keypoints.shape[0]
            for i in range(num_keypoints):
                tmp[i][0:3] = [
                    float(keypoints[i][0]),
                    float(keypoints[i][1]),
                    float(keypoints[i][2])
                ]

        return tmp

    def evaluate(self, cfg, preds, scores, output_dir, tag,
                 *args, **kwargs):
        '''
        Perform evaluation on COCO keypoint task
        :param cfg: cfg dictionary
        :param preds: prediction
        :param output_dir: output directory
        :param args:
        :param kwargs:
        :return:
        '''
        res_folder = os.path.join(output_dir, 'results')
        if not os.path.exists(res_folder):
            os.makedirs(res_folder)
        res_file = os.path.join(
            res_folder, 'keypoints_%s_results.json' % (self.dataset+tag))

        # preds is a list of: image x person x (keypoints)
        # keypoints: num_joints * 4 (x, y, score, tag)
        kpts = defaultdict(list)
        for idx, _kpts in enumerate(preds):
            img_id = self.ids[idx]
            file_name = self.coco.loadImgs(img_id)[0]['file_name']
            for idx_kpt, kpt in enumerate(_kpts):
                area = (np.max(kpt[:, 0]) - np.min(kpt[:, 0])) * \
                    (np.max(kpt[:, 1]) - np.min(kpt[:, 1]))
                kpt = self.processKeypoints(kpt)

                kpts[int(file_name[-16:-4])].append(
                    {
                        'keypoints': kpt[:, 0:3],
                        'score': scores[idx][idx_kpt],
                        'image': int(file_name[-16:-4]),
                        'area': area
                    }
                )

        # rescoring and oks nms
        oks_nmsed_kpts = []
        # image x person x (keypoints)
        for img in kpts.keys():
            # person x (keypoints)
            img_kpts = kpts[img]
            # person x (keypoints)
            # do not use nms, keep all detections
            keep = []
            if len(keep) == 0:
                oks_nmsed_kpts.append(img_kpts)
            else:
                oks_nmsed_kpts.append([img_kpts[_keep] for _keep in keep])

        self._write_coco_keypoint_results(
            oks_nmsed_kpts, res_file
        )

        if 'test' not in self.dataset:
            info_str = self._do_python_keypoint_eval(
                res_file, res_folder
            )
            name_value = OrderedDict(info_str)
            return name_value, name_value['AP']
        else:
            return {'Null': 0}, 0

    def _write_coco_keypoint_results(self, keypoints, res_file):
        data_pack = [
            {
                'cat_id': self._class_to_coco_ind[cls],
                'cls_ind': cls_ind,
                'cls': cls,
                'ann_type': 'keypoints',
                'keypoints': keypoints
            }
            for cls_ind, cls in enumerate(self.classes) if not cls == '__background__'
        ]

        results = self._coco_keypoint_results_one_category_kernel(data_pack[0])
        logger.info('=> Writing results json to %s' % res_file)
        with open(res_file, 'w') as f:
            json.dump(results, f, sort_keys=True, indent=4)
        try:
            json.load(open(res_file))
        except Exception:
            content = []
            with open(res_file, 'r') as f:
                for line in f:
                    content.append(line)
            content[-1] = ']'
            with open(res_file, 'w') as f:
                for c in content:
                    f.write(c)

    def _coco_keypoint_results_one_category_kernel(self, data_pack):
        cat_id = data_pack['cat_id']
        keypoints = data_pack['keypoints']
        cat_results = []
        num_joints = 17

        for img_kpts in keypoints:
            if len(img_kpts) == 0:
                continue

            _key_points = np.array(
                [img_kpts[k]['keypoints'] for k in range(len(img_kpts))]
            )
            key_points = np.zeros(
                (_key_points.shape[0], num_joints * 3),
                dtype=np.float64  # the np.float alias was removed in NumPy 1.24
            )

            for ipt in range(num_joints):
                key_points[:, ipt * 3 + 0] = _key_points[:, ipt, 0]
                key_points[:, ipt * 3 + 1] = _key_points[:, ipt, 1]
                # keypoints score.
                key_points[:, ipt * 3 + 2] = _key_points[:, ipt, 2]

            for k in range(len(img_kpts)):
                kpt = key_points[k].reshape((num_joints, 3))
                left_top = np.amin(kpt, axis=0)
                right_bottom = np.amax(kpt, axis=0)

                w = right_bottom[0] - left_top[0]
                h = right_bottom[1] - left_top[1]

                cat_results.append({
                    'image_id': img_kpts[k]['image'],
                    'category_id': cat_id,
                    'keypoints': list(key_points[k]),
                    'score': img_kpts[k]['score'],
                    'bbox': list([left_top[0], left_top[1], w, h])
                })

        return cat_results

    def _do_python_keypoint_eval(self, res_file, res_folder):
        coco_dt = self.coco.loadRes(res_file)
        coco_eval = COCOeval(self.coco, coco_dt, 'keypoints')
        coco_eval.params.useSegm = None
        coco_eval.evaluate()
        coco_eval.accumulate()
        coco_eval.summarize()
        stats_names = ['AP', 'Ap .5', 'AP .75',
                       'AP (M)', 'AP (L)', 'AR', 'AR .5', 'AR .75', 'AR (M)', 'AR (L)']

        info_str = []
        for ind, name in enumerate(stats_names):
            info_str.append((name, coco_eval.stats[ind]))

        return info_str

class CocoRescoreDataset(CocoDataset):
    def __init__(self, cfg, dataset):
        CocoDataset.__init__(self, cfg, dataset)

    def evaluate(self, cfg, preds, scores, output_dir, tag,
                 *args, **kwargs):
        res_folder = os.path.join(output_dir, 'results')
        if not os.path.exists(res_folder):
            os.makedirs(res_folder)
        res_file = os.path.join(
            res_folder, 'keypoints_%s_results.json' % (self.dataset+tag))

        kpts = defaultdict(list)
        for idx, _kpts in enumerate(preds):
            img_id = self.ids[idx]
            file_name = self.coco.loadImgs(img_id)[0]['file_name']
            for idx_kpt, kpt in enumerate(_kpts):
                area = (np.max(kpt[:, 0]) - np.min(kpt[:, 0])) * \
                    (np.max(kpt[:, 1]) - np.min(kpt[:, 1]))
                kpt = self.processKeypoints(kpt)

                kpts[int(file_name[-16:-4])].append(
                    {
                        'keypoints': kpt[:, 0:3],
                        'score': scores[idx][idx_kpt],
                        'image': int(file_name[-16:-4]),
                        'area': area
                    }
                )

        oks_nmsed_kpts = []
        for img in kpts.keys():
            img_kpts = kpts[img]
            keep = []
            if len(keep) == 0:
                oks_nmsed_kpts.append(img_kpts)
            else:
                oks_nmsed_kpts.append([img_kpts[_keep] for _keep in keep])

        self._write_coco_keypoint_results(
            oks_nmsed_kpts, res_file
        )

        if 'test' not in self.dataset:
            self._do_python_keypoint_eval(
                cfg.RESCORE.DATA_FILE, res_file, res_folder
            )
        else:
            return {'Null': 0}, 0

    def _do_python_keypoint_eval(self, data_file, res_file, res_folder):
        coco_dt = self.coco.loadRes(res_file)
        coco_eval = COCORescoreEval(self.coco, coco_dt, 'keypoints')
        coco_eval.params.useSegm = None
        coco_eval.evaluate()
        coco_eval.dumpdataset(data_file)
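The step that turns one predicted pose into an entry of the results JSON, spread across `evaluate` and `_coco_keypoint_results_one_category_kernel` above, can be sketched without NumPy as follows. This is an illustrative rewrite under stated assumptions, not the DEKR implementation: `image_id_from_file_name` and `to_coco_result` are hypothetical names, the sample pose is made up, and `category_id=1` assumes the COCO person category.

```python
def image_id_from_file_name(file_name):
    """'000000000139.jpg' -> 139, using the same slice as the evaluate method."""
    return int(file_name[-16:-4])


def to_coco_result(file_name, keypoints, score, category_id=1):
    """Build one result entry from a list of (x, y, score) triples."""
    xs = [float(p[0]) for p in keypoints]
    ys = [float(p[1]) for p in keypoints]
    # Flatten to [x1, y1, s1, x2, y2, s2, ...] as the results file expects.
    flat = [float(v) for p in keypoints for v in p[:3]]
    return {
        'image_id': image_id_from_file_name(file_name),
        'category_id': category_id,  # assumed: 1 is the COCO person category
        'keypoints': flat,
        'score': float(score),
        # Box spanned by the keypoints: [x_min, y_min, width, height].
        'bbox': [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)],
    }


# Made-up pose with 17 joints, for illustration only.
pose = [(10.0 + i, 20.0 + 2 * i, 0.9) for i in range(17)]
entry = to_coco_result('000000000139.jpg', pose, score=0.8)
print(entry['image_id'], len(entry['keypoints']), entry['bbox'])
# 139 51 [10.0, 20.0, 16.0, 32.0]
```

Note that the image id is recovered from the last 12 digits of the file name, so this scheme only works for files that follow the COCO 2017 naming pattern.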

COCOKeypoints.py:

# ------------------------------------------------------------------------------

# Copyright (c) Microsoft

# Licensed under the MIT License.

# The code is based on HigherHRNet-Human-Pose-Estimation.

# (https://github.com/HRNet/HigherHRNet-Human-Pose-Estimation)

# Modified by Zigang Geng.

# ------------------------------------------------------------------------------

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import logging

import numpy as np

import torch

import pycocotools

from .COCODataset import CocoDataset

logger = logging.getLogger(__name__)

class CocoKeypoints(CocoDataset):
    def __init__(self, cfg, dataset, heatmap_generator=None, offset_generator=None, transforms=None):
        super().__init__(cfg, dataset)
        self.num_joints = cfg.DATASET.NUM_JOINTS
        self.num_joints_with_center = self.num_joints+1

        self.sigma = cfg.DATASET.SIGMA
        self.center_sigma = cfg.DATASET.CENTER_SIGMA
        self.bg_weight = cfg.DATASET.BG_WEIGHT

        self.heatmap_generator = heatmap_generator
        self.offset_generator = offset_generator
        self.transforms = transforms

        self.ids = [
            img_id
            for img_id in self.ids
            if len(self.coco.getAnnIds(imgIds=img_id, iscrowd=None)) > 0
        ]

    def __getitem__(self, idx):
        img, anno, image_info = super().__getitem__(idx)

        mask = self.get_mask(anno, image_info)

        anno = [
            obj for obj in anno
            if obj['iscrowd'] == 0 or obj['num_keypoints'] > 0
        ]
        joints, area = self.get_joints(anno)

        if self.transforms:
            img, mask_list, joints_list, area = self.transforms(
                img, [mask], [joints], area
            )

        heatmap, ignored = self.heatmap_generator(
            joints_list[0], self.sigma, self.center_sigma, self.bg_weight)
        mask = mask_list[0]*ignored

        offset, offset_weight = self.offset_generator(
            joints_list[0], area)

        return img, heatmap, mask, offset, offset_weight

    def cal_area_2_torch(self, v):
        w = torch.max(v[:, :, 0], -1)[0] - torch.min(v[:, :, 0], -1)[0]
        h = torch.max(v[:, :, 1], -1)[0] - torch.min(v[:, :, 1], -1)[0]
        return w * w + h * h

    def get_joints(self, anno):
        num_people = len(anno)
        area = np.zeros((num_people, 1))
        joints = np.zeros((num_people, self.num_joints_with_center, 3))

        for i, obj in enumerate(anno):
            joints[i, :self.num_joints, :3] = \
                np.array(obj['keypoints']).reshape([-1, 3])

            area[i, 0] = self.cal_area_2_torch(
                torch.tensor(joints[i:i+1,:,:]))
            if obj['area'] < 32**2:
                joints[i, -1, 2] = 0
                continue
            joints_sum = np.sum(joints[i, :-1, :2], axis=0)
            num_vis_joints = len(np.nonzero(joints[i, :-1, 2])[0])
            if num_vis_joints <= 0:
                joints[i, -1, :2] = 0
            else:
                joints[i, -1, :2] = joints_sum / num_vis_joints
            joints[i, -1, 2] = 1

        return joints, area

    def get_mask(self, anno, img_info):
        m = np.zeros((img_info['height'], img_info['width']))

        for obj in anno:
            if obj['iscrowd']:
                rle = pycocotools.mask.frPyObjects(
                    obj['segmentation'], img_info['height'], img_info['width'])
                m += pycocotools.mask.decode(rle)
            elif obj['num_keypoints'] == 0:
                rles = pycocotools.mask.frPyObjects(
                    obj['segmentation'], img_info['height'], img_info['width'])
                for rle in rles:
                    m += pycocotools.mask.decode(rle)

        return m < 0.5

cfg: the default Python file

default.py

# ------------------------------------------------------------------------------

# Copyright (c) Microsoft

# Licensed under the MIT License.

# The code is based on HigherHRNet-Human-Pose-Estimation.

# (https://github.com/HRNet/HigherHRNet-Human-Pose-Estimation)

# Modified by Zigang Geng.

# ------------------------------------------------------------------------------

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import os

from yacs.config import CfgNode as CN

_C = CN()

_C.OUTPUT_DIR = ''

_C.NAME = 'regression'

_C.LOG_DIR = ''

_C.DATA_DIR = ''

_C.GPUS = (0,)

_C.WORKERS = 4

_C.PRINT_FREQ = 20

_C.AUTO_RESUME = False

_C.PIN_MEMORY = True

_C.RANK = 0

_C.VERBOSE = True

_C.DIST_BACKEND = 'nccl'

_C.MULTIPROCESSING_DISTRIBUTED = True

# Cudnn related params

_C.CUDNN = CN()

_C.CUDNN.BENCHMARK = True

_C.CUDNN.DETERMINISTIC = False

_C.CUDNN.ENABLED = True

# common params for NETWORK

_C.MODEL = CN()

_C.MODEL.NAME = 'hrnet_dekr'

_C.MODEL.INIT_WEIGHTS = True

_C.MODEL.PRETRAINED = ''

_C.MODEL.NUM_JOINTS = 17

_C.MODEL.SPEC = CN(new_allowed=True)

_C.LOSS = CN()

_C.LOSS.WITH_HEATMAPS_LOSS = True

_C.LOSS.HEATMAPS_LOSS_FACTOR = 1.0

_C.LOSS.WITH_OFFSETS_LOSS = True

_C.LOSS.OFFSETS_LOSS_FACTOR = 1.0

# DATASET related params

_C.DATASET = CN()

_C.DATASET.ROOT = ''

_C.DATASET.DATASET = 'coco_kpt'

_C.DATASET.DATASET_TEST = ''

_C.DATASET.NUM_JOINTS = 17

_C.DATASET.MAX_NUM_PEOPLE = 30

_C.DATASET.TRAIN = 'train2017'

_C.DATASET.TEST = 'val2017'

_C.DATASET.DATA_FORMAT = 'jpg'

# training data augmentation

_C.DATASET.MAX_ROTATION = 30

_C.DATASET.MIN_SCALE = 0.75

_C.DATASET.MAX_SCALE = 1.25

_C.DATASET.SCALE_TYPE = 'short'

_C.DATASET.MAX_TRANSLATE = 40

_C.DATASET.INPUT_SIZE = 512

_C.DATASET.OUTPUT_SIZE = 128

_C.DATASET.FLIP = 0.5

# heatmap generator

_C.DATASET.SIGMA = 2.0

_C.DATASET.CENTER_SIGMA = 4.0

_C.DATASET.BG_WEIGHT = 0.1

# offset generator

_C.DATASET.OFFSET_RADIUS = 4

# train

_C.TRAIN = CN()

_C.TRAIN.LR_FACTOR = 0.1

_C.TRAIN.LR_STEP = [90, 110]

_C.TRAIN.LR = 0.001

_C.TRAIN.OPTIMIZER = 'adam'

_C.TRAIN.MOMENTUM = 0.9

_C.TRAIN.WD = 0.0001

_C.TRAIN.NESTEROV = False

_C.TRAIN.GAMMA1 = 0.99

_C.TRAIN.GAMMA2 = 0.0

_C.TRAIN.BEGIN_EPOCH = 0

_C.TRAIN.END_EPOCH = 140

_C.TRAIN.RESUME = False

_C.TRAIN.CHECKPOINT = ''

_C.TRAIN.IMAGES_PER_GPU = 32

_C.TRAIN.SHUFFLE = True

# testing

_C.TEST = CN()

# size of images for each device

_C.TEST.IMAGES_PER_GPU = 32

_C.TEST.FLIP_TEST = True

_C.TEST.SCALE_FACTOR = [1]

_C.TEST.MODEL_FILE = ''

_C.TEST.POOL_THRESHOLD1 = 300

_C.TEST.POOL_THRESHOLD2 = 200

_C.TEST.NMS_THRE = 0.15

_C.TEST.NMS_NUM_THRE = 10

_C.TEST.KEYPOINT_THRESHOLD = 0.01

_C.TEST.DECREASE = 1.0

_C.TEST.MATCH_HMP = False

_C.TEST.ADJUST_THRESHOLD = 0.05

_C.TEST.MAX_ABSORB_DISTANCE = 75

_C.TEST.GUASSIAN_KERNEL = 6

_C.TEST.LOG_PROGRESS = True

_C.RESCORE = CN()

_C.RESCORE.VALID = True

_C.RESCORE.GET_DATA = False

_C.RESCORE.END_EPOCH = 20

_C.RESCORE.LR = 0.001

_C.RESCORE.HIDDEN_LAYER = 256

_C.RESCORE.BATCHSIZE = 1024

_C.RESCORE.MODEL_FILE = 'model/rescore/final_rescore_coco_kpt.pth'

_C.RESCORE.DATA_FILE = 'data/rescore_data/rescore_dataset_train_coco_kpt'

def update_config(cfg, args):
    cfg.defrost()
    cfg.merge_from_file(args.cfg)
    cfg.merge_from_list(args.opts)

    if not os.path.exists(cfg.DATASET.ROOT):
        cfg.DATASET.ROOT = os.path.join(
            cfg.DATA_DIR, cfg.DATASET.ROOT
        )

    cfg.MODEL.PRETRAINED = os.path.join(
        cfg.DATA_DIR, cfg.MODEL.PRETRAINED
    )

    if cfg.TEST.MODEL_FILE:
        cfg.TEST.MODEL_FILE = os.path.join(
            cfg.DATA_DIR, cfg.TEST.MODEL_FILE
        )

    cfg.freeze()


if __name__ == '__main__':
    import sys
    with open(sys.argv[1], 'w') as f:
        print(_C, file=f)

cfg: the YAML file

AUTO_RESUME: True
DATA_DIR: ''
GPUS: (0,)
LOG_DIR: log
OUTPUT_DIR: output
PRINT_FREQ: 100
VERBOSE: False
CUDNN:
  BENCHMARK: True
  DETERMINISTIC: False
  ENABLED: True
DATASET:
  DATASET: coco_kpt
  DATASET_TEST: coco
  DATA_FORMAT: zip
  FLIP: 0.5
  INPUT_SIZE: 512
  OUTPUT_SIZE: 128
  MAX_NUM_PEOPLE: 30
  MAX_ROTATION: 30
  MAX_SCALE: 1.5
  SCALE_TYPE: 'short'
  MAX_TRANSLATE: 40
  MIN_SCALE: 0.75
  NUM_JOINTS: 17
  ROOT: 'data/coco'
  TEST: val2017
  TRAIN: train2017
  OFFSET_RADIUS: 4
  SIGMA: 2.0
  CENTER_SIGMA: 4.0
  BG_WEIGHT: 0.1
LOSS:
  WITH_HEATMAPS_LOSS: True
  HEATMAPS_LOSS_FACTOR: 1.0
  WITH_OFFSETS_LOSS: True
  OFFSETS_LOSS_FACTOR: 0.03
MODEL:
  SPEC:
    FINAL_CONV_KERNEL: 1
    PRETRAINED_LAYERS: ['*']
    STAGES:
      NUM_STAGES: 3
      NUM_MODULES:
      - 1
      - 4
      - 3
      NUM_BRANCHES:
      - 2
      - 3
      - 4
      BLOCK:
      - BASIC
      - BASIC
      - BASIC
      NUM_BLOCKS:
      - [4, 4]
      - [4, 4, 4]
      - [4, 4, 4, 4]
      NUM_CHANNELS:
      - [32, 64]
      - [32, 64, 128]
      - [32, 64, 128, 256]
      FUSE_METHOD:
      - SUM
      - SUM
      - SUM
    HEAD_HEATMAP:
      BLOCK: BASIC
      NUM_BLOCKS: 1
      NUM_CHANNELS: 32
      DILATION_RATE: 1
    HEAD_OFFSET:
      BLOCK: ADAPTIVE
      NUM_BLOCKS: 2
      NUM_CHANNELS_PERKPT: 15
      DILATION_RATE: 1
  INIT_WEIGHTS: True
  NAME: hrnet_dekr
  NUM_JOINTS: 17
  PRETRAINED: 'model/imagenet/hrnet_w32-36af842e.pth'
TEST:
  FLIP_TEST: True
  IMAGES_PER_GPU: 1
  MODEL_FILE: ''
  SCALE_FACTOR: [1]
  NMS_THRE: 0.05
  NMS_NUM_THRE: 8
  KEYPOINT_THRESHOLD: 0.01
  ADJUST_THRESHOLD: 0.05
  MAX_ABSORB_DISTANCE: 75
  GUASSIAN_KERNEL: 6
  DECREASE: 0.9
RESCORE:
  VALID: True
  MODEL_FILE: 'model/rescore/final_rescore_coco_kpt.pth'
TRAIN:
  BEGIN_EPOCH: 0
  CHECKPOINT: ''
  END_EPOCH: 140
  GAMMA1: 0.99
  GAMMA2: 0.0
  IMAGES_PER_GPU: 10
  LR: 0.001
  LR_FACTOR: 0.1
  LR_STEP: [90, 120]
  MOMENTUM: 0.9
  NESTEROV: False
  OPTIMIZER: adam
  RESUME: False
  SHUFFLE: True
  WD: 0.0001
WORKERS: 4

Evaluation entry point: valid.py

# ------------------------------------------------------------------------------

# Copyright (c) Microsoft

# Licensed under the MIT License.

# The code is based on HigherHRNet-Human-Pose-Estimation.

# (https://github.com/HRNet/HigherHRNet-Human-Pose-Estimation)

# Modified by Zigang Geng.

# ------------------------------------------------------------------------------

from __future__ import absolute_import

from __future__ import division

from __future__ import print_function

import argparse

import os

import sys

import stat

import pprint

import torch

import torch.backends.cudnn as cudnn

import torch.nn.parallel

import torch.optim

import torch.utils.data

import torch.utils.data.distributed

import torchvision.transforms

import torch.multiprocessing

from tqdm import tqdm

import _init_paths

import models

from config import cfg

from config import update_config

from core.inference import get_multi_stage_outputs

from core.inference import aggregate_results

from core.nms import pose_nms

from core.match import match_pose_to_heatmap

from dataset import make_test_dataloader

from utils.utils import create_logger

from utils.transforms import resize_align_multi_scale

from utils.transforms import get_final_preds

from utils.transforms import get_multi_scale_size

from utils.rescore import rescore_valid

torch.multiprocessing.set_sharing_strategy('file_system')

def parse_args():
    parser = argparse.ArgumentParser(description='Test keypoints network')
    # general
    parser.add_argument('--cfg',
                        help='experiment configure file name',
                        required=True,
                        type=str)

    parser.add_argument('opts',
                        help="Modify config options using the command-line",
                        default=None,
                        nargs=argparse.REMAINDER)

    args = parser.parse_args()
    return args


# markdown format output
def _print_name_value(logger, name_value, full_arch_name):
    names = name_value.keys()
    values = name_value.values()
    num_values = len(name_value)
    logger.info(
        '| Arch ' +
        ' '.join(['| {}'.format(name) for name in names]) +
        ' |'
    )
    logger.info('|---' * (num_values+1) + '|')

    if len(full_arch_name) > 15:
        full_arch_name = full_arch_name[:8] + '...'
    logger.info(
        '| ' + full_arch_name + ' ' +
        ' '.join(['| {:.3f}'.format(value) for value in values]) +
        ' |'
    )


def main():
    args = parse_args()
    update_config(cfg, args)

    logger, final_output_dir, _ = create_logger(
        cfg, args.cfg, 'valid'
    )

    logger.info(pprint.pformat(args))
    logger.info(cfg)

    # cudnn related setting
    cudnn.benchmark = cfg.CUDNN.BENCHMARK
    torch.backends.cudnn.deterministic = cfg.CUDNN.DETERMINISTIC
    torch.backends.cudnn.enabled = cfg.CUDNN.ENABLED

    model = eval('models.'+cfg.MODEL.NAME+'.get_pose_net')(
        cfg, is_train=False
    )

    if cfg.TEST.MODEL_FILE:
        logger.info('=> loading model from {}'.format(cfg.TEST.MODEL_FILE))
        model.load_state_dict(torch.load(cfg.TEST.MODEL_FILE), strict=True)
    else:
        model_state_file = os.path.join(
            final_output_dir, 'model_best.pth.tar'
        )
        logger.info('=> loading model from {}'.format(model_state_file))
        model.load_state_dict(torch.load(model_state_file))

    model = torch.nn.DataParallel(model, device_ids=cfg.GPUS).cuda()
    model.eval()

    data_loader, test_dataset = make_test_dataloader(cfg)
    transforms = torchvision.transforms.Compose([
        torchvision.transforms.ToTensor(),
        torchvision.transforms.Normalize(
            mean=[0.485, 0.456, 0.406],
            std=[0.229, 0.224, 0.225]
        )
    ])

    all_reg_preds = []
    all_reg_scores = []

    pbar = tqdm(total=len(test_dataset)) if cfg.TEST.LOG_PROGRESS else None
    for i, images in enumerate(data_loader):
        assert 1 == images.size(0), 'Test batch size should be 1'
        image = images[0].cpu().numpy()

        # size at scale 1.0
        base_size, center, scale = get_multi_scale_size(
            image, cfg.DATASET.INPUT_SIZE, 1.0, 1.0
        )

        with torch.no_grad():
            heatmap_sum = 0
            poses = []

            for scale in sorted(cfg.TEST.SCALE_FACTOR, reverse=True):
                image_resized, center, scale_resized = resize_align_multi_scale(
                    image, cfg.DATASET.INPUT_SIZE, scale, 1.0
                )

                image_resized = transforms(image_resized)
                image_resized = image_resized.unsqueeze(0).cuda()

                heatmap, posemap = get_multi_stage_outputs(
                    cfg, model, image_resized, cfg.TEST.FLIP_TEST
                )
                heatmap_sum, poses = aggregate_results(
                    cfg, heatmap_sum, poses, heatmap, posemap, scale
                )

            heatmap_avg = heatmap_sum/len(cfg.TEST.SCALE_FACTOR)
            poses, scores = pose_nms(cfg, heatmap_avg, poses)

            if len(scores) == 0:
                all_reg_preds.append([])
                all_reg_scores.append([])
            else:
                if cfg.TEST.MATCH_HMP:
                    poses = match_pose_to_heatmap(cfg, poses, heatmap_avg)

                final_poses = get_final_preds(
                    poses, center, scale_resized, base_size
                )
                if cfg.RESCORE.VALID:
                    scores = rescore_valid(cfg, final_poses, scores)
                all_reg_preds.append(final_poses)
                all_reg_scores.append(scores)

        if cfg.TEST.LOG_PROGRESS:
            pbar.update()

    sv_all_preds = [all_reg_preds]
    sv_all_scores = [all_reg_scores]
    sv_all_name = [cfg.NAME]

    if cfg.TEST.LOG_PROGRESS:
        pbar.close()

    for i in range(len(sv_all_preds)):
        print('Testing '+sv_all_name[i])
        preds = sv_all_preds[i]
        scores = sv_all_scores[i]
        if cfg.RESCORE.GET_DATA:
            test_dataset.evaluate(
                cfg, preds, scores, final_output_dir, sv_all_name[i]
            )
            print('Generating dataset for rescorenet successfully')
        else:
            name_values, _ = test_dataset.evaluate(
                cfg, preds, scores, final_output_dir, sv_all_name[i]
            )

            if isinstance(name_values, list):
                for name_value in name_values:
                    _print_name_value(logger, name_value, cfg.MODEL.NAME)
            else:
                _print_name_value(logger, name_values, cfg.MODEL.NAME)


if __name__ == '__main__':
    main()
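When the dataset name contains `test`, `evaluate` skips the local COCOeval step and returns `{'Null': 0}`, so the table logged at the end of valid.py carries a placeholder rather than a real metric; the useful output of such a run is the results JSON written to the `results` folder. The sketch below reproduces the table formatting for that case. `format_name_value` is a hypothetical variant of `_print_name_value` that returns the lines instead of logging them.

```python
def format_name_value(name_value, full_arch_name):
    """Return the three Markdown table lines that _print_name_value logs."""
    names = list(name_value.keys())
    values = list(name_value.values())
    if len(full_arch_name) > 15:
        full_arch_name = full_arch_name[:8] + '...'
    header = '| Arch ' + ' '.join('| {}'.format(n) for n in names) + ' |'
    rule = '|---' * (len(names) + 1) + '|'
    row = ('| ' + full_arch_name + ' ' +
           ' '.join('| {:.3f}'.format(v) for v in values) + ' |')
    return [header, rule, row]


# What evaluate returns for a split whose name contains 'test'.
lines = format_name_value({'Null': 0}, 'hrnet_dekr')
print('\n'.join(lines))
# | Arch | Null |
# |---|---|
# | hrnet_dekr | 0.000 |
```

Seeing `Null 0.000` after a test-dev run is therefore expected behavior of the listing, not a sign that inference failed.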
