当前位置:网站首页>从概率论基础出发推导卡尔曼滤波

从概率论基础出发推导卡尔曼滤波

2022-06-11 20:51:00 【找不到服务器1703】

本文从联合正态分布的一些性质出发推导标量和向量形式的卡尔曼滤波。下面的一些性质出于方便考虑给起了名字,不一定是正式的学术命名。

ps:有几个性质我没证出来,欢迎指正。

正式证明还没写完。

公式与符号说明

离散系统

x ( k ) = A x ( k − 1 ) + B u ( k − 1 ) + v ( k − 1 ) y ( k ) = C x ( k ) + W ( k ) \begin{aligned} & x(k)=Ax(k-1)+Bu(k-1)+v(k-1) \\ & y(k)=Cx(k)+W(k) \end{aligned} x(k)=Ax(k−1)+Bu(k−1)+v(k−1)y(k)=Cx(k)+W(k)

其中 V , W V,W V,W为零均值高斯白噪声的协方差矩阵。

卡尔曼滤波器递推公式如下

x ^ ( k ∣ k − 1 ) = A x ^ ( k − 1 ) + B u ( k − 1 ) x ^ ( k ) = x ^ ( k ∣ k − 1 ) + K ( k ) [ y ( k ) − C x ^ ( k ∣ k − 1 ) ] P ( k ∣ k − 1 ) = A P ( k − 1 ) A T + V P ( k ) = [ I − K ( k ) C ] P ( k ∣ k − 1 ) K ( k ) = P ( k ∣ k − 1 ) C T [ C P ( k ∣ k − 1 ) C T + W ] − 1 \begin{aligned} & \hat{x}(k|k-1)=A\hat{x}(k-1)+Bu(k-1) \\ & \hat{x}(k)=\hat{x}(k|k-1)+K(k)[y(k)-C\hat{x}(k|k-1)] \\ & P(k|k-1)=AP(k-1)A^{\text{T}}+V \\ & P(k)=[I-K(k)C]P(k|k-1) \\ & K(k)=P(k|k-1)C^{\text{T}}[CP(k|k-1)C^{\text{T}}+W]^{-1} \\ \end{aligned} x^(k∣k−1)=Ax^(k−1)+Bu(k−1)x^(k)=x^(k∣k−1)+K(k)[y(k)−Cx^(k∣k−1)]P(k∣k−1)=AP(k−1)AT+VP(k)=[I−K(k)C]P(k∣k−1)K(k)=P(k∣k−1)CT[CP(k∣k−1)CT+W]−1

- Y ( k ) = [ y ( 0 ) , y ( 1 ) , ⋯ , y ( k ) ] \mathbf{Y}(k)=[y(0),y(1),\cdots,y(k)] Y(k)=[y(0),y(1),⋯,y(k)]表示前 k k k个时刻的观测数据

- x ^ ( k ) = E [ x ( k ) ∣ Y ( k ) ] \hat{x}(k)=\text{E}[x(k)|Y(k)] x^(k)=E[x(k)∣Y(k)]表示根据前 k k k个时刻的观测数据预测 x ( k ) x(k) x(k)

- x ^ ( k ∣ k − 1 ) \hat{x}(k|k-1) x^(k∣k−1)表示根据前 k − 1 k-1 k−1个时刻的观测数据预测 x ( k ) x(k) x(k)

- P ( k ) P(k) P(k)表示估计误差

- P ( k ∣ k − 1 ) P(k|k-1) P(k∣k−1)表示预测误差

几个性质及部分证明

贝叶斯最小均方误差估计量(Bmse)

观测到 x x x后 θ \theta θ的最小均方误差估计量 θ ^ \hat{\theta} θ^为

θ ^ = E ( θ ∣ x ) \hat{\theta}=\text{E}(\theta|x) θ^=E(θ∣x)

证明的主要思路是求 θ ^ \hat{\theta} θ^使得最小均方误差最小。证明:

Bmse ( θ ^ ) = E [ ( θ − θ ^ ) 2 ] = ∬ ( θ − θ ^ ) 2 p ( x , θ ) d x d θ = ∫ [ ∫ ( θ − θ ^ ) 2 p ( θ ∣ x ) d θ ] p ( x ) d x J = ∫ ( θ − θ ^ ) 2 p ( θ ∣ x ) d θ ∂ J ∂ θ ^ = − 2 ∫ θ p ( θ ∣ x ) d θ + 2 θ ^ ∫ p ( θ ∣ x ) d θ = 0 θ ^ = 2 ∫ θ p ( θ ∣ x ) d θ 2 ∫ p ( θ ∣ x ) d θ = E ( θ ∣ x ) \begin{aligned} \text{Bmse}(\hat{\theta}) &= \text{E}[(\theta-\hat{\theta})^2] \\ &= \iint(\theta-\hat{\theta})^2p(x,\theta)\text{d}x\text{d}\theta \\ &=\int\left[\int(\theta-\hat{\theta})^2p(\theta|x)\text{d}\theta\right] p(x)\text{d}x \\ J &= \int(\theta-\hat{\theta})^2p(\theta|x)\text{d}\theta \\ \frac{\partial J}{\partial\hat{\theta}} &= -2\int\theta p(\theta|x)\text{d}\theta +2\hat{\theta}\int p(\theta|x)\text{d}\theta=0 \\ \hat{\theta} &= \frac{2\displaystyle\int\theta p(\theta|x)\text{d}\theta} {2\displaystyle\int p(\theta|x)\text{d}\theta}=\text{E}(\theta|x) \\ \end{aligned} Bmse(θ^)J∂θ^∂Jθ^=E[(θ−θ^)2]=∬(θ−θ^)2p(x,θ)dxdθ=∫[∫(θ−θ^)2p(θ∣x)dθ]p(x)dx=∫(θ−θ^)2p(θ∣x)dθ=−2∫θp(θ∣x)dθ+2θ^∫p(θ∣x)dθ=0=2∫p(θ∣x)dθ2∫θp(θ∣x)dθ=E(θ∣x)

零均值应用定理(标量形式)

设 x x x和 y y y为联合正态分布的随机变量,则

E ( y ∣ x ) = E y + Cov ( x , y ) D x ( x − E x ) D ( y ∣ x ) = D y − Cov 2 ( x , y ) D x \begin{aligned} & \text{E}(y|x)=\text{E}y+\frac{\text{Cov}(x,y)}{\text{D}x}(x-\text{E}x) \\ & \text{D}(y|x)=\text{D}y-\frac{\text{Cov}^2(x,y)}{\text{D}x} \\ \end{aligned} E(y∣x)=Ey+DxCov(x,y)(x−Ex)D(y∣x)=Dy−DxCov2(x,y)

两式可另外写作

y ^ − E y D y = ρ x − E x D x D ( y ∣ x ) = D y ( 1 − ρ 2 ) \begin{aligned} & \frac{\hat{y}-\text{E}y}{\sqrt{\text{D}y}} =\rho\frac{x-\text{E}x}{\sqrt{\text{D}x}} \\ & \text{D}(y|x)=\text{D}y(1-\rho^2) \\ \end{aligned} Dyy^−Ey=ρDxx−ExD(y∣x)=Dy(1−ρ2)

证明:

y ^ = a x + b J = E ( y − y ^ ) 2 = E [ y 2 − 2 y ( a x + b ) + ( a x + b ) 2 ] = y 2 − 2 E x y ⋅ a − 2 E y ⋅ b + E x 2 ⋅ a 2 + 2 E x ⋅ a b + b 2 d J = ( − 2 E x y + 2 E x 2 + 2 b E x ) d a + ( − 2 E y + 2 a E x + 2 b ) d b ∂ J ∂ a = − 2 E x y + 2 E x 2 + 2 b E x = 0 ∂ J ∂ b = − 2 E y + 2 a E x + 2 b = 0 b = E x y − E x 2 E x a = E y − b E x = E x E y − E x y + E x 2 ( E x ) 2 y ^ = a x + b = \begin{aligned} \hat{y} &= ax+b \\ J &= \text{E}(y-\hat{y})^2 \\ &= \text{E}[y^2-2y(ax+b)+(ax+b)^2] \\ &= y^2-2\text{E}xy\cdot a-2\text{E}y\cdot b +\text{E}x^2\cdot a^2+2\text{E}x\cdot ab+b^2 \\ \text{d}J &= (-2\text{E}xy+2\text{E}x^2+2b\text{E}x)\text{d}a +(-2\text{E}y+2a\text{E}x+2b)\text{d}b \\ \frac{\partial J}{\partial a} &= -2\text{E}xy+2\text{E}x^2+2b\text{E}x = 0 \\ \frac{\partial J}{\partial b} &= -2\text{E}y+2a\text{E}x+2b = 0 \\ b &= \frac{\text{E}xy-\text{E}x^2}{\text{E}x} \\ a &= \frac{\text{E}y-b}{\text{E}x} = \frac{\text{E}x\text{E}y-\text{E}xy+\text{E}x^2}{(\text{E}x)^2} \\ \hat{y} &= ax+b = \\ \end{aligned} y^JdJ∂a∂J∂b∂Jbay^=ax+b=E(y−y^)2=E[y2−2y(ax+b)+(ax+b)2]=y2−2Exy⋅a−2Ey⋅b+Ex2⋅a2+2Ex⋅ab+b2=(−2Exy+2Ex2+2bEx)da+(−2Ey+2aEx+2b)db=−2Exy+2Ex2+2bEx=0=−2Ey+2aEx+2b=0=ExExy−Ex2=ExEy−b=(Ex)2ExEy−Exy+Ex2=ax+b=

该定理只对包括正态分布在内的满足线性关系的随机变量有效(具体什么地方满足线性暂时没搞清楚)。例如,对两个联合均匀分布

f ( x , y ) = 2 , 0 < x < 1 , 0 < y < x f ( x , y ) = 3 , 0 < x < 1 , x 2 < y < x \begin{aligned} & f(x,y)=2,\quad 0<x<1,0<y<x \\ & f(x,y)=3,\quad 0<x<1,x^2<y<\sqrt{x} \end{aligned} f(x,y)=2,0<x<1,0<y<xf(x,y)=3,0<x<1,x2<y<x

第一个成立,第二个由于非线性的存在而不成立,也就是说 y y y在数据 x x x下的线性贝叶斯估计量不是最佳估计量,两者分别为

y ^ = E y + Cov ( x , y ) D x ( x − E x ) = 133 x + 9 153 y ^ = E ( y ∣ x ) = x + x 2 2 \begin{aligned} & \hat{y}=\text{E}y+\frac{\text{Cov}(x,y)}{\text{D}x}(x-\text{E}x) =\frac{133x+9}{153} \\ & \hat{y}=\text{E}(y|x)=\frac{\sqrt{x}+x^2}{2} \\ \end{aligned} y^=Ey+DxCov(x,y)(x−Ex)=153133x+9y^=E(y∣x)=2x+x2

零均值应用定理(向量形式)

x \boldsymbol{x} x和 y \boldsymbol{y} y为联合正态分布的随机向量, x \boldsymbol{x} x是m×1, y \boldsymbol{y} y是n×1,分块协方差矩阵

C = [ C x x C x y C y x C y y ] \mathbf{C}=\left[\begin{matrix} \mathbf{C}_{xx} & \mathbf{C}_{xy} \\ \mathbf{C}_{yx} & \mathbf{C}_{yy} \end{matrix}\right] C=[CxxCyxCxyCyy]

则

E ( y ∣ x ) = E ( y ) + C y x C x x − 1 ( x − E ( x ) ) \text{E}(\boldsymbol{y}|\boldsymbol{x})=\text{E}(\boldsymbol{y}) +\mathbf{C}_{yx}\mathbf{C}_{xx}^{-1}(\boldsymbol{x}-\text{E}(\boldsymbol{x})) E(y∣x)=E(y)+CyxCxx−1(x−E(x))

其中 C x y C_{xy} Cxy表示 Cov ( x , y ) \text{Cov}(x,y) Cov(x,y)。证明:

y ^ = A x + b J = E ( y − y ^ ) ⊤ ( y − y ^ ) = E ( y ⊤ y − y ^ ⊤ y − y ⊤ y ^ + y ^ ⊤ y ^ ) d J = dE ( − y ^ ⊤ y − y ⊤ y ^ + y ^ ⊤ y ^ ) = dE [ tr ( − y ^ ⊤ y − y ⊤ y ^ + y ^ ⊤ y ^ ) ] = ⋯ A = C x x − 1 C x y b = E y − A E x y ^ = C x x − 1 C x y x + E y − C x x − 1 C x y E x = E y + C x x − 1 C x y ( x − E x ) \begin{aligned} \hat{y} &= Ax+b \\ J &= \text{E}(y-\hat{y})^{\top}(y-\hat{y}) \\ &= \text{E}(y^{\top}y-\hat{y}^{\top}y-y^{\top}\hat{y}+\hat{y}^{\top}\hat{y}) \\ \text{d}J &= \text{dE}(-\hat{y}^{\top}y-y^{\top}\hat{y}+\hat{y}^{\top}\hat{y}) \\ &= \text{dE}[\text{tr}(-\hat{y}^{\top}y-y^{\top}\hat{y}+\hat{y}^{\top}\hat{y})] \\ &= \cdots \\ A &= C_{xx}^{-1}C_{xy} \\ b &= \text{E}y-A\text{E}x \\ \hat{y} &= C_{xx}^{-1}C_{xy}x+\text{E}y-C_{xx}^{-1}C_{xy}\text{E}x \\ &= \text{E}y+C_{xx}^{-1}C_{xy}(x-\text{E}x) \\ \end{aligned} y^JdJAby^=Ax+b=E(y−y^)⊤(y−y^)=E(y⊤y−y^⊤y−y⊤y^+y^⊤y^)=dE(−y^⊤y−y⊤y^+y^⊤y^)=dE[tr(−y^⊤y−y⊤y^+y^⊤y^)]=⋯=Cxx−1Cxy=Ey−AEx=Cxx−1Cxyx+Ey−Cxx−1CxyEx=Ey+Cxx−1Cxy(x−Ex)

投影定理(正交原理)

当利用数据样本的线性组合来估计一个随机变量的时候,当估计值与真实值的误差和每一个数据样本正交时,该估计值是最佳估计量,即数据样本 x x x与最佳估计量 y ^ \hat{y} y^满足

E [ ( y − y ^ ) ⊤ x ( n ) ] = 0 n = 0 , 1 , ⋯ , N − 1 \text{E}[(y-\hat{y})^\top x(n)]=0\quad n=0,1,\cdots,N-1 E[(y−y^)⊤x(n)]=0n=0,1,⋯,N−1

零均值的随机变量满足内积空间中的性质。定义变量的长度 ∣ ∣ x ∣ ∣ = E x 2 ||x||=\sqrt{\text{E}x^2} ∣∣x∣∣=Ex2,变量 x x x和 y y y的内积 ( x , y ) (x,y) (x,y)定义为 E ( x y ) \text{E}(xy) E(xy),两个变量的夹角定义为相关系数 ρ \rho ρ。当 E ( x y ) = 0 \text{E}(xy)=0 E(xy)=0时称变量 x x x和 y y y正交。

均值不为零时,定义变量的长度 ∣ ∣ x ∣ ∣ = D x ||x||=\sqrt{\text{D}x} ∣∣x∣∣=Dx,变量 x x x和 y y y的内积 ( x , y ) (x,y) (x,y)定义为 Cov ( x y ) \text{Cov}(xy) Cov(xy),两个变量的夹角定义为相关系数 ρ \rho ρ。均值不为零的情况是我自己的猜测,很多资料都没有详细说明,但卡尔曼滤波的推导里全是均值非零的。

将 x x x和 y y y对应成数据的形式,即 x ( 0 ) x(0) x(0)是 x x x, x ( 1 ) x(1) x(1)是 y y y, x ^ ( 1 ∣ 0 ) \hat{x}(1|0) x^(1∣0)是 y ^ \hat{y} y^,得到

E [ ( x ( 1 ) − x ^ ( 1 ∣ 0 ) ) ⊤ x ( 0 ) ] = 0 \text{E}[(x(1)-\hat{x}(1|0))^\top x(0)]=0 E[(x(1)−x^(1∣0))⊤x(0)]=0

其中

x ~ ( k ∣ k − 1 ) = x ( k ) − x ^ ( k ∣ k − 1 ) \widetilde{x}(k|k-1) = x(k)-\hat{x}(k|k-1) x(k∣k−1)=x(k)−x^(k∣k−1)

称为新息(innovation),与旧数据 x ( 0 ) x(0) x(0)正交。

标量形式证明:

E [ x ( y ^ − y ) ] = E [ x E y + Cov ( x , y ) D x ( x 2 − x E x ) − x y ] = E x E y + Cov ( x , y ) D x ( E x 2 − ( E x ) 2 ) − E x y = Cov ( x , y ) + E x E y − E x y = 0 \begin{aligned} \text{E}[x(\hat{y}-y)] &= \text{E}[x\text{E}y +\frac{\text{Cov}(x,y)}{\text{D}x}(x^2-x\text{E}x)-xy] \\ &= \text{E}x\text{E}y +\frac{\text{Cov}(x,y)}{\text{D}x}(\text{E}x^2-(\text{E}x)^2)-\text{E}xy \\ &= \text{Cov}(x,y)+\text{E}x\text{E}y-\text{E}xy=0 \end{aligned} E[x(y^−y)]=E[xEy+DxCov(x,y)(x2−xEx)−xy]=ExEy+DxCov(x,y)(Ex2−(Ex)2)−Exy=Cov(x,y)+ExEy−Exy=0

向量形式证明:

E [ x ⊤ ( y ^ − y ) ] = E [ x ⊤ E y + x ⊤ C x x − 1 C x y ( x − E x ) ] = ⋯ = 0 \begin{aligned} \text{E}[x^\top(\hat{y}-y)] &= \text{E}[x^\top\text{E}y+x^\top C_{xx}^{-1}C_{xy}(x-\text{E}x)] \\ &= \cdots \\ &= 0 \end{aligned} E[x⊤(y^−y)]=E[x⊤Ey+x⊤Cxx−1Cxy(x−Ex)]=⋯=0

由于 E ( y − y ^ ) = 0 \text{E}(y-\hat{y})=0 E(y−y^)=0,所以均值非零时正交条件也恰好成立。由图可得投影定理的另一个公式

E [ ( y − y ^ ) y ^ ] = 0 \text{E}[(y-\hat{y})\hat{y}]=0 E[(y−y^)y^]=0

证明:

E [ y ^ ( y ^ − y ) ] = E [ ( E y + k x − k E x ) ( E y + k x − k E x − y ) ] = ( E y ) 2 + k E x E y − k E x E y − ( E y ) 2 + k E x E y + k 2 E x 2 − k 2 ( E x ) 2 − k E x y − k E x E y − k 2 ( E x ) 2 + k 2 ( E x ) 2 + k E x E y = k 2 D x − k Cov ( x , y ) = [ Cov ( x , y ) ] 2 [ D x ] 2 D x − Cov ( x , y ) D x Cov ( x , y ) = 0 \begin{aligned} & \text{E}[\hat{y}(\hat{y}-y)] \\ =& \text{E}[(\text{E} y+k x-k \text{E} x)(\text{E} y+k x-k \text{E} x-y)] \\ =& (\text{E}y)^{2}+k\text{E}x\text{E}y-k\text{E}x\text{E}y-(\text{E}y)^{2}\\ &+ k\text{E}x\text{E}y+k^{2}Ex^2-k^{2}(\text{E}x)^{2}-k\text{E}xy \\ &- k\text{E}x\text{E}y-k^{2}(\text{E}x)^{2}+k^{2}(\text{E}x)^{2}+k\text{E}x\text{E}y \\ =& k^{2}\text{D}x-k\text{Cov}(x,y) \\ =& \frac{[\text{Cov}(x,y)]^2}{[\text{D}x]^2}\text{D}x-\frac{\text{Cov}(x,y)}{\text{D}x}\text{Cov}(x,y) \\ =&0 \end{aligned} =====E[y^(y^−y)]E[(Ey+kx−kEx)(Ey+kx−kEx−y)](Ey)2+kExEy−kExEy−(Ey)2+kExEy+k2Ex2−k2(Ex)2−kExy−kExEy−k2(Ex)2+k2(Ex)2+kExEyk2Dx−kCov(x,y)[Dx]2[Cov(x,y)]2Dx−DxCov(x,y)Cov(x,y)0

期望可加性

E [ y 1 + y 2 ∣ x ] = E [ y 1 ∣ x ] + E [ y 2 ∣ x ] \text{E}[y_1+y_2|x]=\text{E}[y_1|x]+\text{E}[y_2|x] E[y1+y2∣x]=E[y1∣x]+E[y2∣x]

独立条件可加性

若 x 1 x_1 x1和 x 2 x_2 x2独立,则

E [ y ∣ x 1 , x 2 ] = E [ y ∣ x 1 ] + E [ y ∣ x 2 ] − E y \text{E}[y|x_1,x_2]=\text{E}[y|x_1]+\text{E}[y|x_2]-\text{E}y E[y∣x1,x2]=E[y∣x1]+E[y∣x2]−Ey

证明:

令 x = [ x 1 ⊤ , x 2 ⊤ ] ⊤ x=[x_1^\top,x_2^\top]^\top x=[x1⊤,x2⊤]⊤,则

C x x − 1 = [ C x 1 x 1 C x 1 x 2 C x 2 x 1 C x 2 x 2 ] − 1 = [ C x 1 x 1 − 1 O O C x 2 x 2 − 1 ] C y x = [ C y x 1 C y x 2 ] E ( y ∣ x ) = E y + C y x C x x − 1 ( x − E x ) = E y + [ C y x 1 C y x 2 ] [ C x 1 x 1 − 1 O O C x 2 x 2 − 1 ] [ x 1 − E x 1 x 2 − E x 2 ] = E [ y ∣ x 1 ] + E [ y ∣ x 2 ] − E y \begin{aligned} C_{xx}^{-1} &= \left[\begin{matrix} C_{x_1x_1} & C_{x_1x_2} \\ C_{x_2x_1} & C_{x_2x_2} \end{matrix}\right]^{-1} = \left[\begin{matrix} C_{x_1x_1}^{-1} & O \\ O & C_{x_2x_2}^{-1} \end{matrix}\right] \\ C_{yx} &= \left[\begin{matrix} C_{yx_1} & C_{yx_2} \end{matrix}\right] \\ \text{E}(y|x) &= \text{E}y+C_{yx}C_{xx}^{-1}(x-\text{E}x) \\ &= \text{E}y+\left[\begin{matrix} C_{yx_1} & C_{yx_2} \end{matrix}\right] \left[\begin{matrix} C_{x_1x_1}^{-1} & O \\ O & C_{x_2x_2}^{-1} \end{matrix}\right] \left[\begin{matrix} x_1-\text{E}x_1 \\ x_2-\text{E}x_2 \end{matrix}\right] \\ &= \text{E}[y|x_1]+\text{E}[y|x_2]-\text{E}y \end{aligned} Cxx−1CyxE(y∣x)=[Cx1x1Cx2x1Cx1x2Cx2x2]−1=[Cx1x1−1OOCx2x2−1]=[Cyx1Cyx2]=Ey+CyxCxx−1(x−Ex)=Ey+[Cyx1Cyx2][Cx1x1−1OOCx2x2−1][x1−Ex1x2−Ex2]=E[y∣x1]+E[y∣x2]−Ey

非独立条件可加性(新息定理)

若 x 1 x_1 x1和 x 2 x_2 x2不独立,则根据投影定理取 x 2 x_2 x2与 x 1 x_1 x1独立的分量 x ~ 2 \widetilde{x}_2 x2,满足

E [ y ∣ x 1 , x 2 ] = E [ y ∣ x 1 , x ~ 2 ] = E [ y ∣ x 1 ] + E [ y ∣ x ~ 2 ] − E y \text{E}[y|x_1,x_2] =\text{E}[y|x_1,\widetilde{x}_2] =\text{E}[y|x_1]+\text{E}[y|\widetilde{x}_2]-\text{E}y E[y∣x1,x2]=E[y∣x1,x2]=E[y∣x1]+E[y∣x2]−Ey

其中 x ~ 2 = x 2 − x ^ 2 = x 2 − E ( x 1 ∣ x 2 ) \widetilde{x}_2=x_2-\hat{x}_2=x_2-\text{E}(x_1|x_2) x2=x2−x^2=x2−E(x1∣x2),由投影定理, x 1 x_1 x1与 x ~ 2 \widetilde{x}_2 x2独立, x ~ 2 \widetilde{x}_2 x2称为新息。

证明(下面的每个式子是先求部分后求整体,为便于理解可以从下往上看):

E ( y ∣ x 1 , x 2 ) = E y + [ C y x 1 C y x 2 ] D x 1 D x 2 − C x 1 x 2 2 [ D x 2 − C x 1 x 2 − C x 1 x 2 D x 1 ] [ x 1 − E x 1 x 2 − E x 2 ] Cov ( y , x ^ 2 ) = E [ y ( E x 2 + Cov ( x 2 , x 1 ) D x 1 ( x 1 − E x 1 ) ) ] − E y E x ^ 2 = E y E x 2 + Cov ( x 2 , x 1 ) D x 1 ( E x 1 y − E x 1 E y ) − E y E x 2 = C x 1 x 2 C y x 1 D x 1 Cov ( x 2 , x ^ 2 ) = E x 2 x ^ 2 − E x 2 E x ^ 2 = E [ x 2 ( E x 2 + Cov ( x 2 , x 1 ) D x 1 ( x 1 − E x 1 ) ) ] − ( E x 2 ) 2 = C x 1 x 2 2 D x 1 D x ^ 2 = D ( E x 2 + Cov ( x 2 , x 1 ) D x 1 ( x 1 − E x 1 ) ) = C x 1 x 2 2 ( D x 1 ) 2 D x 1 = C x 1 x 2 2 D x 1 D x ~ 2 = D x 2 + D x ^ 2 − 2 Cov ( x 2 , x ^ 2 ) = D x 2 − C x 1 x 2 2 D x 1 E [ y ∣ x 1 ] + E [ y ∣ x ~ 2 ] − E y = E y + C x 1 y D x 1 ( x 1 − E x 1 ) + Cov ( y , x ~ 2 ) D x ~ 2 ( x ~ 2 − E x ~ 2 ) = ⋯ + Cov ( y , x 2 ) − Cov ( y , x ^ 2 ) D x ~ 2 ( x 2 − x ^ 2 ) = ⋯ + C y x 2 − C x 1 x 2 C y x 1 D x 1 D x 2 − C x 1 x 2 2 D x 1 ( x 2 − E 2 − Cov ( x 2 , x 1 ) D x 1 ( x 1 − E 1 ) ) = ⋯ + C y x 2 D x 1 − C x 1 x 2 C y x 1 D x 1 D x 2 − C x 1 x 2 2 ( x 2 − E 2 − C x 1 x 2 D x 1 ( x 1 − E 1 ) ) = E y + A ( x 1 − E x 1 ) + B ( x 2 − E 2 ) \begin{aligned} \text{E}(y|x_1,x_2) &= \text{E}y +\frac{\left[\begin{matrix} C_{yx_1} & C_{yx_2} \end{matrix}\right]} {\text{D}x_1\text{D}x_2-C^2_{x_1x_2}} \left[\begin{matrix} \text{D}x_2 & -C_{x_1x_2} \\ -C_{x_1x_2} & \text{D}x_1 \end{matrix}\right] \left[\begin{matrix} x_1-\text{E}x_1 \\ x_2-\text{E}x_2 \end{matrix}\right] \\ \text{Cov}(y,\hat{x}_2) &= \text{E}[y\left(\text{E}x_2 +\frac{\text{Cov}(x_2,x_1)}{\text{D}x_1}(x_1-\text{E}x_1)\right)] -\text{E}y\text{E}\hat{x}_2 \\ &= \text{E}y\text{E}x_2+\frac{\text{Cov}(x_2,x_1)}{\text{D}x_1} (\text{E}x_1y-\text{E}x_1\text{E}y)-\text{E}y\text{E}x_2 \\ &= \frac{C_{x_1x_2}C_{yx_1}}{\text{D}x_1} \\ \text{Cov}(x_2,\hat{x}_2) &= \text{E}x_2\hat{x}_2-\text{E}x_2\text{E}\hat{x}_2 \\ &= \text{E}[x_2(\text{E}x_2+\frac{\text{Cov}(x_2,x_1)}{\text{D}x_1} (x_1-\text{E}x_1))]-(\text{E}x_2)^2 \\ &= \frac{C_{x_1x_2}^2}{\text{D}x_1} \\ \text{D}\hat{x}_2 &= \text{D}(\text{E}x_2+\frac{\text{Cov}(x_2,x_1)}{\text{D}x_1} (x_1-\text{E}x_1)) \\ &= \frac{C_{x_1x_2}^2}{(\text{D}x_1)^2}\text{D}x_1 =\frac{C_{x_1x_2}^2}{\text{D}x_1} \\ \text{D}\widetilde{x}_2 &= \text{D}x_2+\text{D}\hat{x}_2 -2\text{Cov}(x_2,\hat{x}_2) \\ &= \text{D}x_2-\frac{C_{x_1x_2}^2}{\text{D}x_1} \\ \text{E}[y|x_1]+\text{E}[y|\widetilde{x}_2]-\text{E}y &= \text{E}y +\frac{C_{x_1y}}{\text{D}x_1}(x_1-\text{E}x_1) +\frac{\text{Cov}(y,\widetilde{x}_2)}{\text{D}\widetilde{x}_2} (\widetilde{x}_2-\text{E}\widetilde{x}_2) \\ &= \cdots+\frac{\text{Cov}(y,x_2)-\text{Cov}(y,\hat{x}_2)}{\text{D}\widetilde{x}_2} (x_2-\hat{x}_2) \\ &= \cdots+\frac{C_{yx_2}-\frac{C_{x_1x_2}C_{yx_1}}{\text{D}x_1}} {\text{D}x_2-\frac{C_{x_1x_2}^2}{\text{D}x_1}}(x_2-\text{E}_2 -\frac{\text{Cov}(x_2,x_1)}{\text{D}x_1}(x_1-\text{E}_1)) \\ &= \cdots+\frac{C_{yx_2}\text{D}x_1-C_{x_1x_2}C_{yx_1}} {\text{D}x_1\text{D}x_2-C_{x_1x_2}^2}(x_2-\text{E}_2 -\frac{C_{x_1x_2}}{\text{D}x_1}(x_1-\text{E}_1)) \\ &= \text{E}y+A(x_1-\text{E}x_1) +B(x_2-\text{E}_2) \\ \end{aligned} E(y∣x1,x2)Cov(y,x^2)Cov(x2,x^2)Dx^2Dx2E[y∣x1]+E[y∣x2]−Ey=Ey+Dx1Dx2−Cx1x22[Cyx1Cyx2][Dx2−Cx1x2−Cx1x2Dx1][x1−Ex1x2−Ex2]=E[y(Ex2+Dx1Cov(x2,x1)(x1−Ex1))]−EyEx^2=EyEx2+Dx1Cov(x2,x1)(Ex1y−Ex1Ey)−EyEx2=Dx1Cx1x2Cyx1=Ex2x^2−Ex2Ex^2=E[x2(Ex2+Dx1Cov(x2,x1)(x1−Ex1))]−(Ex2)2=Dx1Cx1x22=D(Ex2+Dx1Cov(x2,x1)(x1−Ex1))=(Dx1)2Cx1x22Dx1=Dx1Cx1x22=Dx2+Dx^2−2Cov(x2,x^2)=Dx2−Dx1Cx1x22=Ey+Dx1Cx1y(x1−Ex1)+Dx2Cov(y,x2)(x2−Ex2)=⋯+Dx2Cov(y,x2)−Cov(y,x^2)(x2−x^2)=⋯+Dx2−Dx1Cx1x22Cyx2−Dx1Cx1x2Cyx1(x2−E2−Dx1Cov(x2,x1)(x1−E1))=⋯+Dx1Dx2−Cx1x22Cyx2Dx1−Cx1x2Cyx1(x2−E2−Dx1Cx1x2(x1−E1))=Ey+A(x1−Ex1)+B(x2−E2)

其中

B = C y x 2 D x 1 − C x 1 x 2 C y x 1 D x 1 D x 2 − C x 1 x 2 2 A = C x 1 y D x 1 − B C x 1 x 2 D x 1 \begin{aligned} B &= \frac{C_{yx_2}\text{D}x_1-C_{x_1x_2}C_{yx_1}} {\text{D}x_1\text{D}x_2-C_{x_1x_2}^2} \\ A &= \frac{C_{x_1y}}{\text{D}x_1}-B\frac{C_{x_1x_2}}{\text{D}x_1} \end{aligned} BA=Dx1Dx2−Cx1x22Cyx2Dx1−Cx1x2Cyx1=Dx1Cx1y−BDx1Cx1x2

在另一个式子 E ( y ∣ x 1 , x 2 ) \text{E}(y|x_1,x_2) E(y∣x1,x2)中,

A = C y x 1 D x 2 − C y x 2 C x 1 x 2 D x 1 D x 2 − C x 1 x 2 2 B = C y x 2 D x 1 − C y x 1 C x 1 x 2 D x 1 D x 2 − C x 1 x 2 2 \begin{aligned} A &= \frac{C_{yx_1}\text{D}x_2-C_{yx_2}C_{x_1x_2}} {\text{D}x_1\text{D}x_2-C^2_{x_1x_2}} \\ B &= \frac{C_{yx_2}\text{D}x_1-C_{yx_1}C_{x_1x_2}} {\text{D}x_1\text{D}x_2-C^2_{x_1x_2}} \\ \end{aligned} AB=Dx1Dx2−Cx1x22Cyx1Dx2−Cyx2Cx1x2=Dx1Dx2−Cx1x22Cyx2Dx1−Cyx1Cx1x2

两式相等。

高斯白噪声中的直流电平

这个例子可以作为铺垫,有助于理解卡尔曼滤波各个公式的来源,比如 x ( k ) x(k) x(k)和 x ( k − 1 ) x(k-1) x(k−1)之间为什么还要有一个 x ^ ( k ∣ k − 1 ) \hat{x}(k|k-1) x^(k∣k−1)等。考虑模型

x ( k ) = A + w ( k ) x(k)=A+w(k) x(k)=A+w(k)

其中 A A A是待估计参数, w ( k ) w(k) w(k)是均值为0、方差为 σ 2 \sigma^2 σ2的高斯白噪声, x ( k ) x(k) x(k)是观测。可以得到 x ^ ( 0 ) = x ( 0 ) \hat{x}(0)=x(0) x^(0)=x(0),然后根据 x ( 0 ) x(0) x(0)和 x ( 1 ) x(1) x(1)预测 k = 1 k=1 k=1时刻的值 E [ x ( 1 ) ∣ x ( 1 ) , x ( 0 ) ] \text{E}[x(1)|x(1),x(0)] E[x(1)∣x(1),x(0)]时,需要用到联合正态分布的条件可加性,但由于 x ( 1 ) x(1) x(1)和 x ( 0 ) x(0) x(0)不独立,需要使用投影定理计算出两个独立的变量 x ( 0 ) x(0) x(0)与 x ~ ( 1 ∣ 0 ) \widetilde{x}(1|0) x(1∣0),进而计算 x ^ ( 1 ) \hat{x}(1) x^(1),即

x ^ ( 1 ) = E [ x ( 1 ) ∣ x ( 1 ) , x ( 0 ) ] = E [ x ( 1 ) ∣ x ( 0 ) , x ~ ( 1 ∣ 0 ) ] = E [ x ( 1 ) ∣ x ( 0 ) ] + E [ x ( 1 ) ∣ x ~ ( 1 ∣ 0 ) ] − E x ( 1 ) \begin{aligned} \hat{x}(1) &= \text{E}[x(1)|x(1),x(0)] \\ &= \text{E}[x(1)|x(0),\widetilde{x}(1|0)] \\ &= \text{E}[x(1)|x(0)]+\text{E}[x(1)|\widetilde{x}(1|0)]-\text{E}x(1) \end{aligned} x^(1)=E[x(1)∣x(1),x(0)]=E[x(1)∣x(0),x(1∣0)]=E[x(1)∣x(0)]+E[x(1)∣x(1∣0)]−Ex(1)

其中 E [ x ( 1 ) ∣ x ( 0 ) ] = x ^ ( 1 ∣ 0 ) \text{E}[x(1)|x(0)]=\hat{x}(1|0) E[x(1)∣x(0)]=x^(1∣0),

x ^ ( 1 ∣ 0 ) = E x ( 1 ) + Cov ( x ( 1 ) , x ( 0 ) ) D x ( 0 ) ( x ( 0 ) − E x ( 0 ) ) = A + E ( A + w ( 1 ) ) ( A + w ( 0 ) ) − E ( A + w ( 1 ) ) E ( A + w ( 0 ) ) E ( A + w ( 1 ) ) 2 − [ E ( A + w ( 1 ) ) ] 2 ( x ( 0 ) − A ) = A \begin{aligned} \hat{x}(1|0) &= \text{E}x(1)+\frac{\text{Cov}(x(1),x(0))} {\text{D}x(0)}(x(0)-\text{E}x(0)) \\ &= A+\frac{\text{E}(A+w(1))(A+w(0))-\text{E}(A+w(1))\text{E}(A+w(0))} {\text{E}(A+w(1))^2-[\text{E}(A+w(1))]^2}(x(0)-A) \\ &= A \end{aligned} x^(1∣0)=Ex(1)+Dx(0)Cov(x(1),x(0))(x(0)−Ex(0))=A+E(A+w(1))2−[E(A+w(1))]2E(A+w(1))(A+w(0))−E(A+w(1))E(A+w(0))(x(0)−A)=A

此时式中就出现了 x ^ ( k ∣ k − 1 ) \hat{x}(k|k-1) x^(k∣k−1),和另一个未知式 E [ x ( 1 ) ∣ x ~ ( 1 ∣ 0 ) ] \text{E}[x(1)|\widetilde{x}(1|0)] E[x(1)∣x(1∣0)]。由零均值应用定理,

E [ x ( 1 ) ∣ x ~ ( 1 ∣ 0 ) ] = E x ( 0 ) + Cov ( x ( 1 ) , x ~ ( 1 ∣ 0 ) ) D x ~ ( 1 ∣ 0 ) ( x ~ ( 1 ∣ 0 ) − E x ~ ( 1 ∣ 0 ) ) \text{E}[x(1)|\widetilde{x}(1|0)] = \text{E}x(0)+\frac{\text{Cov}(x(1),\widetilde{x}(1|0))} {\text{D}\widetilde{x}(1|0)}(\widetilde{x}(1|0)-\text{E}\widetilde{x}(1|0)) E[x(1)∣x(1∣0)]=Ex(0)+Dx(1∣0)Cov(x(1),x(1∣0))(x(1∣0)−Ex(1∣0))

其中

x ~ ( 1 ∣ 0 ) = x ( 1 ) − x ^ ( 1 ∣ 0 ) E x ~ ( 1 ∣ 0 ) = E ( x − x ^ ) = 0 D x ~ ( 1 ∣ 0 ) = E ( x ( 1 ) − x ^ ( 1 ∣ 0 ) ) 2 = E ( A + w ( 1 ) ) 2 = a 2 P ( 0 ) + σ 2 = P ( 1 ∣ 0 ) \begin{aligned} \widetilde{x}(1|0) &= x(1)-\hat{x}(1|0) \\ \text{E}\widetilde{x}(1|0) &= \text{E}(x-\hat{x}) = 0 \\ \text{D}\widetilde{x}(1|0) &= \text{E}(x(1)-\hat{x}(1|0))^2 \\ &= \text{E}(A+w(1))^2 \\ &= a^2P(0)+\sigma^2 \\ &= P(1|0) \end{aligned} x(1∣0)Ex(1∣0)Dx(1∣0)=x(1)−x^(1∣0)=E(x−x^)=0=E(x(1)−x^(1∣0))2=E(A+w(1))2=a2P(0)+σ2=P(1∣0)

卡尔曼滤波正式推导

标量形式

x ^ ( k ∣ k − 1 ) = E [ x ( k ) ∣ Y ( k − 1 ) ] = E [ a x ( k − 1 ) + b u ( k − 1 ) + v ( k − 1 ) ∣ Y ( k − 1 ) ] = a E [ x ( k − 1 ) ∣ Y ( k − 1 ) ] + b E [ u ( k − 1 ) ∣ Y ( k − 1 ) ] + E [ v ( k − 1 ) ∣ Y ( k − 1 ) ] = a x ^ ( k − 1 ) + b u ( k − 1 ) x ~ ( k ∣ k − 1 ) = x ( k ) − x ^ ( k ∣ k − 1 ) = ( a x ( k − 1 ) + b u ( k − 1 ) + v ( k − 1 ) ) − ( a x ^ ( k − 1 ) + b u ( k − 1 ) ) = a x ~ ( k − 1 ) + v ( k − 1 ) (1) \begin{aligned} \hat{x}(k|k-1) &= \text{E}[x(k)|Y(k-1)] \\ &= \text{E}[ax(k-1)+bu(k-1)+v(k-1)|Y(k-1)] \\ &= a\text{E}[x(k-1)|Y(k-1)]+b\text{E}[u(k-1)|Y(k-1)]+\text{E}[v(k-1)|Y(k-1)] \\ &= a\hat{x}(k-1)+bu(k-1) \\ \widetilde{x}(k|k-1) &= x(k)-\hat{x}(k|k-1) \\ &= (ax(k-1)+bu(k-1)+v(k-1))-(a\hat{x}(k-1)+bu(k-1)) \\ &= a\widetilde{x}(k-1)+v(k-1) \end{aligned} \tag{1} x^(k∣k−1)x(k∣k−1)=E[x(k)∣Y(k−1)]=E[ax(k−1)+bu(k−1)+v(k−1)∣Y(k−1)]=aE[x(k−1)∣Y(k−1)]+bE[u(k−1)∣Y(k−1)]+E[v(k−1)∣Y(k−1)]=ax^(k−1)+bu(k−1)=x(k)−x^(k∣k−1)=(ax(k−1)+bu(k−1)+v(k−1))−(ax^(k−1)+bu(k−1))=ax(k−1)+v(k−1)(1)

已知 x ^ ( k ) = E [ x ( k ) ∣ Y ( k ) ] \hat{x}(k)=\text{E}[x(k)|Y(k)] x^(k)=E[x(k)∣Y(k)],且因为 x ~ ( k − 1 ) \widetilde{x}(k-1) x(k−1)包含 x ( k ) x(k) x(k), x ( k ) x(k) x(k)又包含 y ( k ) y(k) y(k),所以数据集 Y ( k ) Y(k) Y(k)等价于数据集 Y ( k − 1 ) Y(k-1) Y(k−1)、 x ~ ( k ∣ k − 1 ) \widetilde{x}(k|k-1) x(k∣k−1),于是由条件可加性,

x ^ ( k ) = E [ x ( k ) ∣ Y ( k − 1 ) , x ~ ( k − 1 ) ] = E [ x ( k ) ∣ Y ( k − 1 ) ] + E [ x ( k ) ∣ x ~ ( k ∣ k − 1 ) ] − E x ( k ) = x ^ ( k ∣ k − 1 ) + E [ x ( k ) ∣ x ~ ( k ∣ k − 1 ) ] − E x ( k ) \begin{aligned} \hat{x}(k) &= \text{E}[x(k)|Y(k-1),\widetilde{x}(k-1)] \\ &= \text{E}[x(k)|Y(k-1)]+\text{E}[x(k)|\widetilde{x}(k|k-1)]-\text{E}x(k) \\ &= \hat{x}(k|k-1)+\text{E}[x(k)|\widetilde{x}(k|k-1)]-\text{E}x(k) \end{aligned} x^(k)=E[x(k)∣Y(k−1),x(k−1)]=E[x(k)∣Y(k−1)]+E[x(k)∣x(k∣k−1)]−Ex(k)=x^(k∣k−1)+E[x(k)∣x(k∣k−1)]−Ex(k)

由零均值应用定理,

E [ x ( k ) ∣ x ~ ( k ∣ k − 1 ) ] = E x ( k ) + Cov ( x ( k ) , x ~ ( k ∣ k − 1 ) ) D x ~ ( k ∣ k − 1 ) ( x ~ ( k ∣ k − 1 ) − E x ~ ( k ∣ k − 1 ) ) \text{E}[x(k)|\widetilde{x}(k|k-1)] = \text{E}x(k)+\frac{\text{Cov}(x(k),\widetilde{x}(k|k-1))} {\text{D}\widetilde{x}(k|k-1)}(\widetilde{x}(k|k-1)-\text{E}\widetilde{x}(k|k-1)) E[x(k)∣x(k∣k−1)]=Ex(k)+Dx(k∣k−1)Cov(x(k),x(k∣k−1))(x(k∣k−1)−Ex(k∣k−1))

其中

E x ~ ( k ∣ k − 1 ) = E ( x − x ^ ) = 0 D x ~ ( k ∣ k − 1 ) = E ( a x ~ ( k − 1 ) + v ( k − 1 ) ) 2 = a 2 P ( k − 1 ) + V = P ( k ∣ k − 1 ) (3) \begin{aligned} \text{E}\widetilde{x}(k|k-1) &= \text{E}(x-\hat{x}) = 0 \\ \text{D}\widetilde{x}(k|k-1) &= \text{E}(a\widetilde{x}(k-1)+v(k-1))^2 \\ &= a^2P(k-1)+V \\ &= P(k|k-1) \tag{3} \end{aligned} Ex(k∣k−1)Dx(k∣k−1)=E(x−x^)=0=E(ax(k−1)+v(k−1))2=a2P(k−1)+V=P(k∣k−1)(3)

令

M ( k ) = Cov ( x ( k ) , x ~ ( k ∣ k − 1 ) ) D x ~ ( k ∣ k − 1 ) M(k)=\frac{\text{Cov}(x(k),\widetilde{x}(k|k-1))} {\text{D}\widetilde{x}(k|k-1)} M(k)=Dx(k∣k−1)Cov(x(k),x(k∣k−1))

则

E [ x ( k ) ∣ x ~ ( k ∣ k − 1 ) ] = E x ( k ) + M ( k ) ( x ( k ) − x ^ ( k ∣ k − 1 ) ) x ^ ( k ) = E [ x ( k ) ∣ Y ( k − 1 ) , x ~ ( k − 1 ) ] = E [ x ( k ) ∣ Y ( k − 1 ) ] + E [ x ( k ) ∣ x ~ ( k ∣ k − 1 ) ] − E x ( k ) = x ^ ( k ∣ k − 1 ) + M ( k ) ( x ( k ) − x ^ ( k ∣ k − 1 ) ) \begin{aligned} \text{E}[x(k)|\widetilde{x}(k|k-1)] &= \text{E}x(k)+M(k)(x(k)-\hat{x}(k|k-1)) \\ \hat{x}(k) &= \text{E}[x(k)|Y(k-1),\widetilde{x}(k-1)] \\ &= \text{E}[x(k)|Y(k-1)]+\text{E}[x(k)|\widetilde{x}(k|k-1)]-\text{E}x(k) \\ &= \hat{x}(k|k-1)+M(k)(x(k)-\hat{x}(k|k-1)) \end{aligned} E[x(k)∣x(k∣k−1)]x^(k)=Ex(k)+M(k)(x(k)−x^(k∣k−1))=E[x(k)∣Y(k−1),x(k−1)]=E[x(k)∣Y(k−1)]+E[x(k)∣x(k∣k−1)]−Ex(k)=x^(k∣k−1)+M(k)(x(k)−x^(k∣k−1))

由投影定理 E [ x ^ ( x − x ^ ) ] = 0 \text{E}[\hat{x}(x-\hat{x})]=0 E[x^(x−x^)]=0计算 M ( k ) M(k) M(k),

M ( k ) = Cov ( x ( k ) , x ~ ( k ∣ k − 1 ) ) D x ~ ( k ∣ k − 1 ) = E [ x ( k ) ( x ( k ) − x ^ ( k ∣ k − 1 ) ) ] − E x ( k ) E x ~ ( k ∣ k − 1 ) P ( k ∣ k − 1 ) = E [ ( x ( k ) − x ^ ( k ∣ k − 1 ) ) ( x ( k ) − x ^ ( k ∣ k − 1 ) ) ] P ( k ∣ k − 1 ) \begin{aligned} M(k) &= \frac{\text{Cov}(x(k),\widetilde{x}(k|k-1))} {\text{D}\widetilde{x}(k|k-1)} \\ &= \frac{\text{E}[x(k)(x(k)-\hat{x}(k|k-1))] -\text{E}x(k)\text{E}\widetilde{x}(k|k-1)}{P(k|k-1)} \\ &= \frac{\text{E}[(x(k)-\hat{x}(k|k-1))(x(k)-\hat{x}(k|k-1))]}{P(k|k-1)} \\ \end{aligned} M(k)=Dx(k∣k−1)Cov(x(k),x(k∣k−1))=P(k∣k−1)E[x(k)(x(k)−x^(k∣k−1))]−Ex(k)Ex(k∣k−1)=P(k∣k−1)E[(x(k)−x^(k∣k−1))(x(k)−x^(k∣k−1))]

5个方程分别为,

(1)预测方程

(2)修正方程

(3)最小预测均方误差

向量形式

x ^ ( k ∣ k − 1 ) = E [ x ( k ) ∣ Y ( k − 1 ) ] = E [ A x ( k − 1 ) + B u ( k − 1 ) + v ( k − 1 ) ∣ Y ( k − 1 ) ] = A E [ x ( k − 1 ) ∣ Y ( k − 1 ) ] + B E [ u ( k − 1 ) ∣ Y ( k − 1 ) ] + E [ v ( k − 1 ) ∣ Y ( k − 1 ) ] = A x ^ ( k − 1 ) + B u ( k − 1 ) \begin{aligned} \hat{x}(k|k-1) &= \text{E}[x(k)|Y(k-1)] \\ &= \text{E}[Ax(k-1)+Bu(k-1)+v(k-1)|Y(k-1)] \\ &= A\text{E}[x(k-1)|Y(k-1)]+B\text{E}[u(k-1)|Y(k-1)]+\text{E}[v(k-1)|Y(k-1)] \\ &= A\hat{x}(k-1)+Bu(k-1) \\ \end{aligned} x^(k∣k−1)=E[x(k)∣Y(k−1)]=E[Ax(k−1)+Bu(k−1)+v(k−1)∣Y(k−1)]=AE[x(k−1)∣Y(k−1)]+BE[u(k−1)∣Y(k−1)]+E[v(k−1)∣Y(k−1)]=Ax^(k−1)+Bu(k−1)

参考

- 孙增圻. 计算机控制理论与应用[M]. 清华大学出版社, 2008.

- StevenM.Kay, 罗鹏飞. 统计信号处理基础[M]. 电子工业出版社, 2014.

- 赵树杰, 赵建勋. 信号检测与估计理论[M]. 电子工业出版社, 2013.

- 卡尔曼滤波的推导过程详解

边栏推荐

- 周刊02|不瞒你说,我其实是MIT的学生

- UDP、TCP

- 黑圆圈显示实现

- 频域滤波器

- 新品发布:LR-LINK联瑞推出首款25G OCP 3.0 网卡

- On scale of canvas recttransform in ugui

- 机器视觉工控机PoE图像采集卡应用解析

- [nk] deleted number of 100 C Xiaohong in Niuke practice match

- Usage methods and cases of PLSQL blocks, cursors, functions, stored procedures and triggers of Oracle Database

- 【指标体系】最新数仓指标体系建模方法

猜你喜欢

26. 定时器

Weekly 02 | pour être honnête, je suis un étudiant du MIT

修改本地微信小程序的AppID

7905 and TL431 negative voltage regulator circuit - regulator and floating circuit relative to the positive pole of the power supply

Usage methods and cases of PLSQL blocks, cursors, functions, stored procedures and triggers of Oracle Database

UDP、TCP

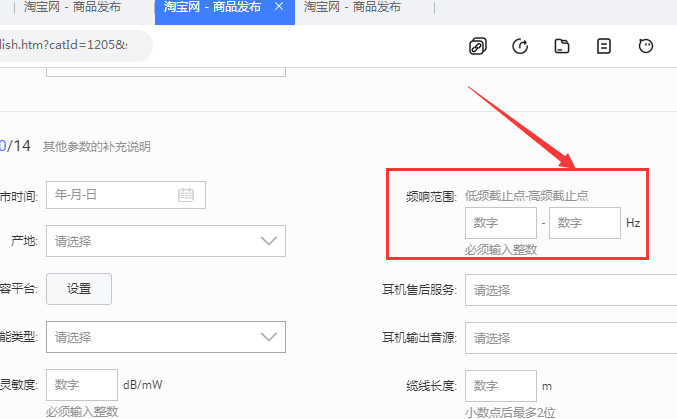

输入值“18-20000hz”错误,设置信息不完整,请选择单位

What is the essence and process of SCM development? Chengdu Automation Development Undertaking

File upload vulnerability - simple exploitation 2 (Mozhe college shooting range)

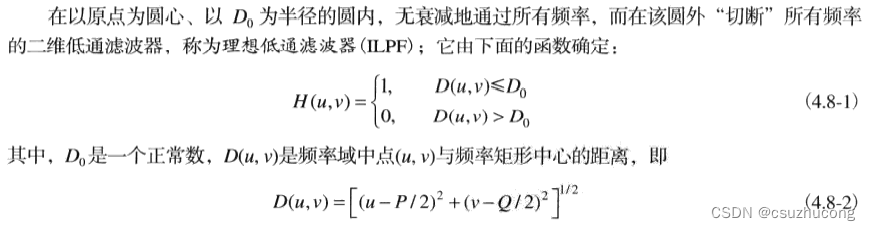

频域滤波器

随机推荐

2022-2028 current situation and future development trend of fuel cell market for cogeneration application in the world and China

29. location對象

On scale of canvas recttransform in ugui

Docker installing MySQL

STL container nested container

浅谈UGUI中Canvas RectTransform的Scale

银泰百货与淘宝天猫联合打造绿色潮玩展,助力“碳中和”

重投农业,加码技术服务,拼多多底盘进一步夯实

How to add text on the border in bar code software

Product information | Poe network card family makes a collective appearance, the perfect partner of machine vision!

新品发布:国产单电口千兆网卡正式量产!

27. this pointing problem

Using the flask framework to write the bezel

The input value "18-20000hz" is incorrect. The setting information is incomplete. Please select a company

Mysql add 新增多个新字段并指定字段位置

Title does not display after toolbar replaces actionbar

moderlarts第一次培训

Systematically study the recommendation system from a global perspective to improve competitiveness in actual combat (Chapter 8)

29. location object

[computer exemption] the Internet of things and ubiquitous intelligence research center of Harbin Institute of technology recruits 2023 graduate students (Master, doctoral and direct doctoral) from un