Lightweight Pyramid Networks for Image Deraining

2022-07-04 01:19:00 【Programmer base camp】

Authors: SmartDSP Laboratory, Department of Communications, Xiamen University (project website)

Subject: a lightweight pyramid network for removing rain from images

Abstract

- Existing deraining networks have a large number of trainable parameters, which makes them hard to deploy on mobile devices.

- Domain-specific knowledge is used to simplify the learning process:

- The Gaussian-Laplacian image pyramid decomposition is introduced, so the learning task at each pyramid level reduces to a shallow network with few parameters.

- Recursive and residual network modules are used, so the network reaches state-of-the-art deraining results with fewer than 8,000 parameters.

Introduction

Rain is a common weather condition. It impairs human vision and also computer vision systems such as autonomous driving and surveillance. Because rain refracts and scatters light, objects in the image are easily blurred and occluded by rain streaks, and dense rain in a heavy downpour makes this worse. Current computer vision systems generally assume clean, clear input images, so model accuracy degrades easily in rainy conditions. Efficient and effective deraining algorithms are therefore important for deploying many applications.

Related work

Video deraining: spatio-temporal information from adjacent frames can be exploited, for example by averaging intensities across adjacent frames to remove rain from a static background. Approaches include:

- Working in the Fourier domain, with Gaussian mixture models, low-rank approximation, or matrix completion

- Dividing the rain into sparse and dense regions, then applying an algorithm based on matrix decomposition

- Patch-based Gaussian mixture models

Single-image deraining: no adjacent-frame information is available, so the task is harder than video deraining. Approaches include:

- Kernels, low-rank approximation, dictionary learning

- Kernel regression and non-local means filtering

- Decomposing the image into high-frequency and low-frequency parts, then separating and removing rain from the high-frequency part with dictionary learning based on sparse coding

- Self-learning on the high-frequency part to remove rain

- Discriminative sparse coding: by forcing the coefficient vector of the rain layer to be sparse, the objective function separates the background from the rain

- Mixture models and local gradient descent

- Gaussian mixture models (GMMs): the background layer is learned from natural images, the rain layer from rainy images

- Alternating direction method of multipliers (ADMM)

- Deep learning methods based on convolutional neural networks

Contribution of this paper

- LPNet is proposed. The model has fewer than 8K parameters, which makes it easier to deploy on mobile devices than earlier deep neural networks.

- Domain-specific knowledge is used to simplify the learning process.

- For the first time, a Laplacian pyramid is used to decompose the degraded (rainy) image into different network levels.

- Recursive blocks and residual blocks are used to build a sub-network for each level, which reconstructs the Gaussian pyramid of the derained image.

- A dedicated loss function is chosen for each level according to its physical characteristics.

- The method achieves state-of-the-art deraining results and also transfers well to other computer vision tasks.

The LPNet network model

Motivation

- Rain streaks are entangled with object edges and the background, so learning directly in the image domain is difficult. Learning from the high-frequency information is easier, because the high-frequency part contains mainly rain streaks and object edges, without interference from the image background.

- Earlier work implemented this idea by using a guided filter to extract the high-frequency information as network input, removing the rain there, and then fusing the result with the background. For thin rain streaks, however, the high-frequency information is hard to extract.

- Building on this decomposition idea, a lightweight pyramid network is proposed that simplifies training and reduces the number of parameters.

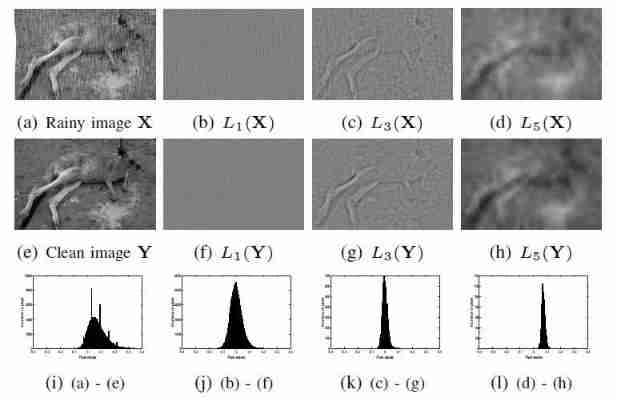

Stage I: the Laplacian pyramid

The Laplacian pyramid is derived from the Gaussian pyramid: each Gaussian level is smoothed and downsampled, the result is upsampled again, and that upsampled image is subtracted from the level it came from, which leaves the high-frequency residual (see "The relationship between the Laplacian pyramid and the Gaussian pyramid").

$$L_n(X) = G_n(X) - \mathrm{upsample}(G_{n+1}(X))$$

where $G_n(X)$ is the Gaussian pyramid and $L_n(X)$ is the Laplacian pyramid.
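The decomposition above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: it uses the 5-tap kernel quoted in the "Parameter setting" section, while the reflect padding, the zero-insertion upsampling with a gain of 4, the single-channel input, and all function names are assumptions made for the example.

```python
import numpy as np

# 5-tap smoothing kernel quoted in the "Parameter setting" section.
KERNEL = np.array([0.0625, 0.25, 0.375, 0.25, 0.0625])


def blur(img, kernel=KERNEL):
    """Separable blur of a 2-D array (reflect padding is an assumption)."""
    pad = len(kernel) // 2
    out = np.pad(img, pad, mode="reflect")
    # Filter along rows, then along columns; 'valid' undoes the padding.
    out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 1, out)
    out = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), 0, out)
    return out


def downsample(img):
    """Blur, then keep every second row and column."""
    return blur(img)[::2, ::2]


def upsample(img, shape):
    """Insert zeros up to `shape`, then blur; the gain of 4 restores the mean."""
    up = np.zeros(shape)
    up[::2, ::2] = img
    return 4.0 * blur(up)


def laplacian_pyramid(img, levels=5):
    """Return (gaussian, laplacian) lists; index 0 is the finest level (n = 1)."""
    gaussian = [img]
    for _ in range(levels - 1):
        gaussian.append(downsample(gaussian[-1]))
    # L_n = G_n - upsample(G_{n+1})
    laplacian = [g - upsample(g_next, g.shape)
                 for g, g_next in zip(gaussian[:-1], gaussian[1:])]
    laplacian.append(gaussian[-1])  # top level: L_N = G_N
    return gaussian, laplacian


rng = np.random.default_rng(0)
image = rng.random((64, 64))  # random stand-in for a rainy image
gaussian, laplacian = laplacian_pyramid(image)
print([level.shape for level in laplacian])
```

Because each Laplacian level stores exactly what the upsampled coarser level is missing, adding the two back together recovers the Gaussian level, which is what the reconstruction step in Stage II relies on.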

Advantages of choosing the classic Laplacian pyramid:

- The background is removed, so the network only has to handle high-frequency information.

- Learning at each level can exploit sparsity.

- The mapping learned at each level is closer to an identity mapping.

- It is cheap to compute on a GPU.

Stage II: sub-network structure

Every level uses the same network structure; only the number of convolution kernels is adjusted to the characteristics of each level. The sub-networks rely mainly on residual learning and recursive blocks.

- Feature extraction

$$H_{n,0} = \sigma(W_{n}^{0} * L_n(X) + b_{n}^{0})$$

- Recursive module

Recursive blocks: parameters are shared across several recursive blocks to reduce the number of trainable parameters. Each block applies three convolutions:

$$\begin{aligned} F_{n,t}^{1} &= \sigma(W_{n}^{1} * H_{n,t-1} + b_{n}^{1}), \\ F_{n,t}^{2} &= \sigma(W_{n}^{2} * F_{n,t}^{1} + b_{n}^{2}), \\ F_{n,t}^{3} &= W_{n}^{3} * F_{n,t}^{2} + b_{n}^{3}, \end{aligned}$$

where $F^{\{1,2,3\}}$ are intermediate features, and $W^{\{1,2,3\}}$ and $b^{\{1,2,3\}}$ are the parameters shared among the blocks.

To ease forward propagation and backpropagation, a residual connection adds the input of the recursive module, $H_{n,0}$, to the output of each block:

$$H_{n,t} = \sigma(F_{n,t}^{3} + H_{n,0})$$

- Gaussian pyramid reconstruction:

$$L_n(Y) = (W_{n}^{4} * H_{n,T} + b_{n}^{4}) + L_n(X)$$

This is the output Laplacian pyramid. The corresponding Gaussian pyramid is then reconstructed as:

$$\begin{aligned} G_N(Y) &= \max(0, L_N(Y)), \\ G_n(Y) &= \max(0, L_n(Y) + \mathrm{upsample}(G_{n+1}(Y))) \end{aligned}$$

Every output level must be non-negative, so $x = \max(0, x)$, that is, the ReLU function, is applied.

The final derained image is $G_1(Y)$.
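The three steps above (feature extraction, shared recursive blocks, reconstruction) can be sketched as a NumPy forward pass. This is a hedged sketch, not the authors' implementation: the 3x3 kernel size, the leaky ReLU with slope 0.2 used for $\sigma$, the value T = 5, the random untrained weights, the nearest-neighbour upsampling, and all function names are assumptions made for illustration. Only the formulas and the kernel counts [16, 8, 4, 2, 1] come from the text.

```python
import numpy as np


def conv2d(x, w, b):
    """3x3 'same' convolution with zero padding.

    x: (C_in, H, W), w: (C_out, C_in, 3, 3), b: (C_out,).
    """
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            # Contract the input-channel axis for kernel tap (i, j).
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out + b[:, None, None]


def sigma(x, slope=0.2):
    """Activation; a leaky ReLU is assumed here."""
    return np.where(x > 0, x, slope * x)


def make_params(k, channels, rng):
    """Random (W, b) pairs for W^0..W^4 of one level with k kernels."""
    shapes = [(k, channels), (k, k), (k, k), (k, k), (channels, k)]
    return [(0.1 * rng.standard_normal((o, c, 3, 3)), np.zeros(o)) for o, c in shapes]


def subnet(lap_x, params, T=5):
    """Map L_n(X) to L_n(Y) for one pyramid level."""
    (w0, b0), (w1, b1), (w2, b2), (w3, b3), (w4, b4) = params
    h0 = sigma(conv2d(lap_x, w0, b0))      # H_{n,0}
    h = h0
    for _ in range(T):                     # W^1..W^3 are reused in every block
        f1 = sigma(conv2d(h, w1, b1))
        f2 = sigma(conv2d(f1, w2, b2))
        f3 = conv2d(f2, w3, b3)
        h = sigma(f3 + h0)                 # H_{n,t}
    return conv2d(h, w4, b4) + lap_x       # L_n(Y)


def upsample(x, shape):
    """Nearest-neighbour stand-in for the kernel-based upsampling."""
    return np.repeat(np.repeat(x, 2, axis=1), 2, axis=2)[:, :shape[1], :shape[2]]


def reconstruct(lap_y):
    """lap_y[0] is level n = 1; returns [G_1(Y), ..., G_N(Y)]."""
    g = [np.maximum(0.0, lap_y[-1])]       # G_N(Y)
    for lap in reversed(lap_y[:-1]):
        g.insert(0, np.maximum(0.0, lap + upsample(g[0], lap.shape)))
    return g


rng = np.random.default_rng(0)
kernels = [16, 8, 4, 2, 1]                 # kernels per level, low to high
lap_x = [rng.standard_normal((3, 64 >> n, 64 >> n)) for n in range(5)]
lap_y = [subnet(x, make_params(k, 3, rng)) for x, k in zip(lap_x, kernels)]
gauss_y = reconstruct(lap_y)
derained = gauss_y[0]                      # G_1(Y)
print(derained.shape)
```

Note that the loop over T reuses the same three weight tensors, so increasing T deepens the sub-network without adding parameters, which is the point of the recursive design.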

Loss Function

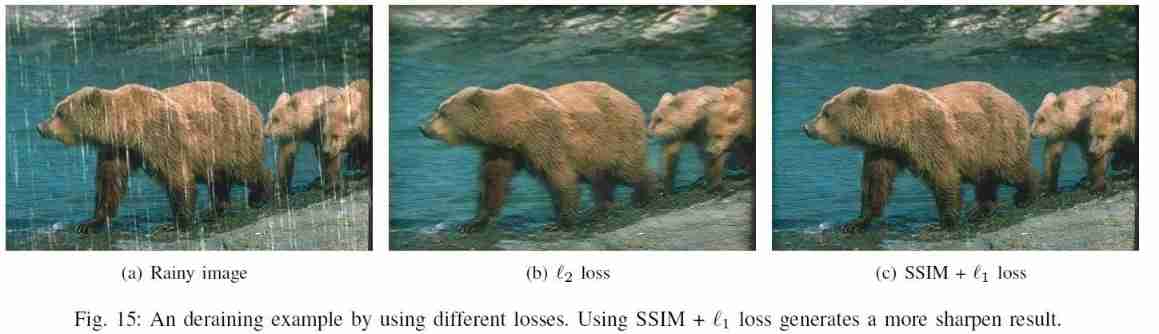

MSE (L2): because of the squared penalty, training behaves poorly at image edges and the generated image is over-smoothed. Different losses are therefore used at different network levels, to match the characteristics of each level:

- L1 + SSIM: finer image details and rain streaks live in the lower pyramid levels (see Fig. 3), so SSIM is used to train those sub-networks and preserve more high-frequency information.

- L1: larger object structures and smooth background regions live in the higher pyramid levels, so the L1 loss alone is used to update those network parameters.

$$L = \frac{1}{M}\sum_{i=1}^{M}\left\{\sum_{n=1}^{N}L^{l1}(G_n(Y^i),G_n(Y_{GT}^{i})) + \sum_{n=1}^{2}L^{SSIM}(G_n(Y^i),G_n(Y_{GT}^{i}))\right\}$$
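A rough sketch of this loss in NumPy is shown below. Several things are assumptions rather than details from the text: the SSIM term is taken to be 1 - SSIM, the SSIM itself is computed from whole-image statistics instead of the usual sliding window (a simplification to keep the example short), the L1 term is a per-pixel mean, and the function names are hypothetical.

```python
import numpy as np


def l1_loss(pred, target):
    """Mean absolute error between two arrays."""
    return float(np.mean(np.abs(pred - target)))


def ssim_global(x, y, data_range=1.0):
    """SSIM from whole-image statistics (simplification: no sliding window)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return float(((2 * mx * my + c1) * (2 * cov + c2))
                 / ((mx ** 2 + my ** 2 + c1) * (vx + vy + c2)))


def lpnet_loss(pred_pyr, gt_pyr, ssim_levels=2):
    """Loss for one image pair; pyramids are lists with index 0 = level n = 1."""
    # L1 on every Gaussian level, n = 1..N.
    loss = sum(l1_loss(p, g) for p, g in zip(pred_pyr, gt_pyr))
    # SSIM term (assumed to be 1 - SSIM) on the two finest levels only.
    loss += sum(1.0 - ssim_global(p, g)
                for p, g in zip(pred_pyr[:ssim_levels], gt_pyr[:ssim_levels]))
    return loss


def batch_loss(pred_batch, gt_batch):
    """Average over the M pairs in a mini-batch."""
    return float(np.mean([lpnet_loss(p, g) for p, g in zip(pred_batch, gt_batch)]))


rng = np.random.default_rng(0)
gt = [rng.random((64 >> n, 64 >> n)) for n in range(5)]
noisy = [g + 0.05 * rng.standard_normal(g.shape) for g in gt]
print(lpnet_loss(gt, gt), lpnet_loss(noisy, gt))
```

A perfect prediction gives a loss of (numerically) zero, and any deviation on the two finest levels is penalised twice, once by L1 and once by the SSIM term.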

Removing the Batch Normalization layers

- BN layers are added to make the feature maps follow a Gaussian (normal) distribution during training.

- But the data at the lower Laplacian pyramid levels is sparse (see the histograms in Fig. 3) and relatively easy to handle, so the BN constraint is not needed.

- With the BN layers removed, the model is more flexible and has fewer parameters.

Parameter setting

Fixed smooth kernel

[0.0625,0.25,0.375,0.25,0.0625]It is used for the construction of Laplace pyramid and the reconstruction of Gauss pyramid

Such as

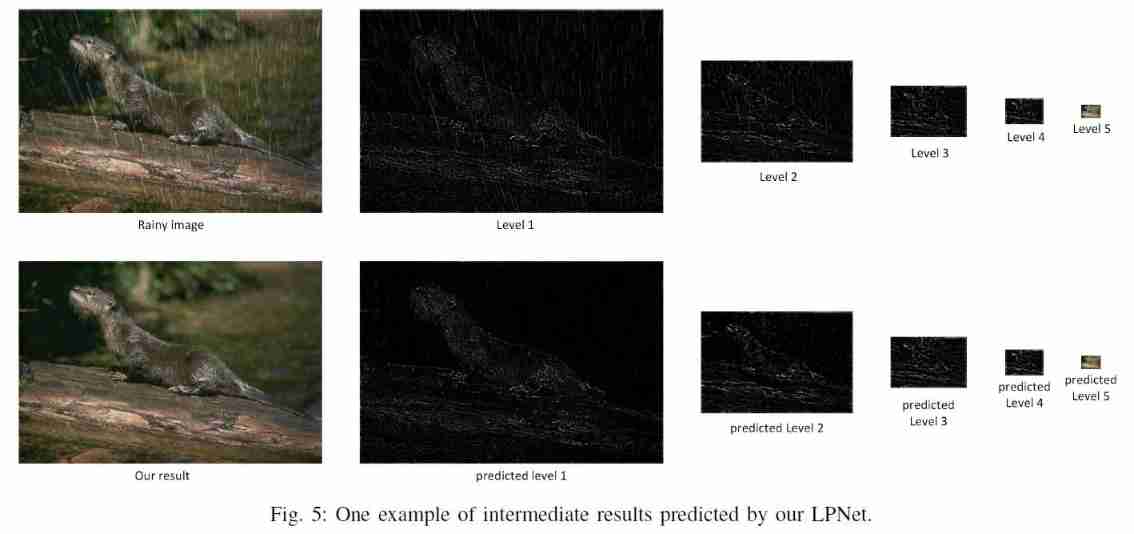

Fig3, Rain is at a lower level , Higher level is closer to feature mapping , So the higher level only needs less training parameters , So from low level to high level , The number of network cores decreases[16, 8, 4, 2, 1]. On the top floor , The image is small and smooth , Rain still exists in the high-frequency part , But its learning is more like simple Global contrast adaptive learning .Such as

Fig5, By means ofRainy imgandOur ResultEach level of , You can see it : Rain is still at a low level , However, the top level is almost the same . so , It is necessary to remove redundant cores at higher levels

Experimental parameters

- dataset: synthetic, 1,800 heavy-rain images + 200 light-rain images

- optimizer: Adam

- mini-batch size: 10

- learning rate: 0.001

- epochs: 3

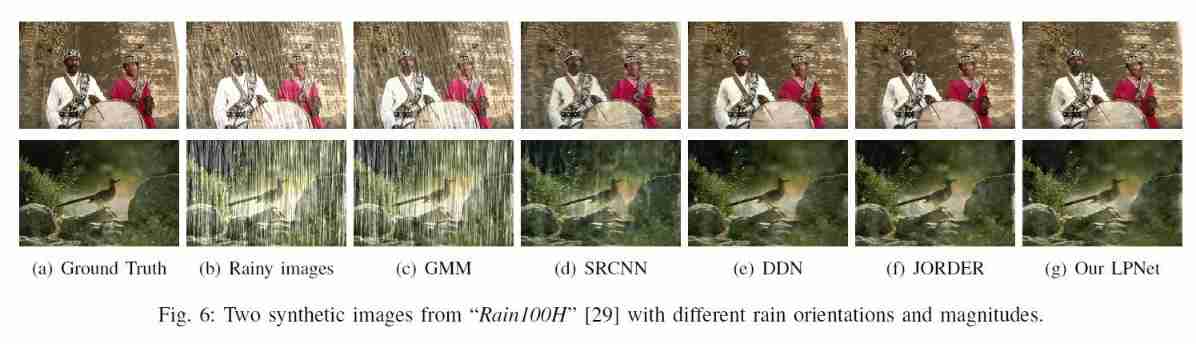

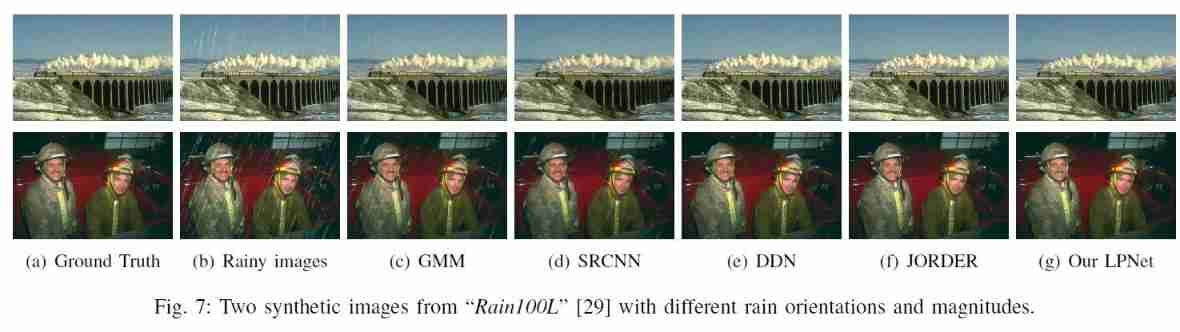

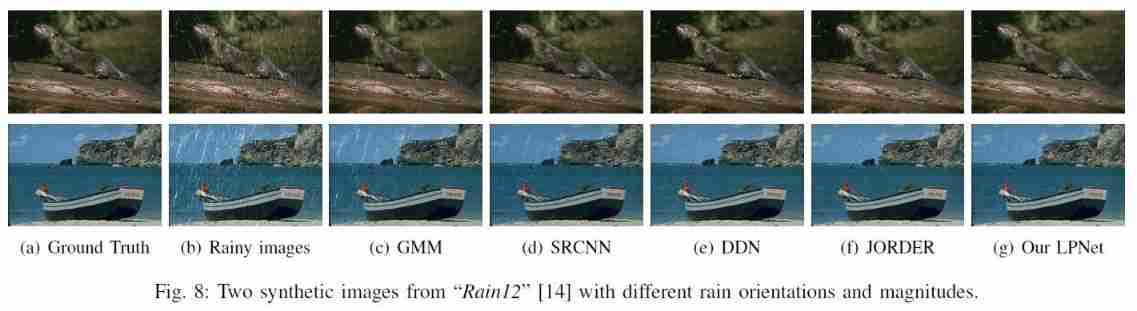

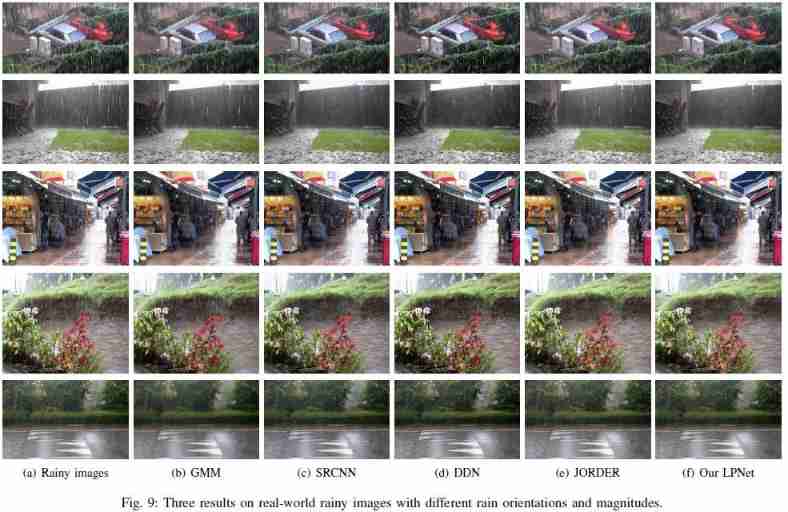

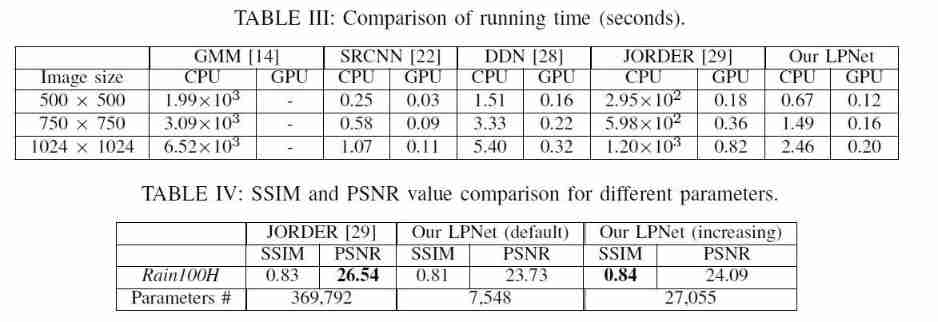

Experimental results

Several models are compared on synthetic rainy images (heavy rain and light rain), on real-world rainy images, and on dehazing. The main criteria are a visual comparison of the output images and a quantitative comparison (subjective evaluation scores, PSNR, SSIM).
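For reference, PSNR follows the standard definition, 10 times the base-10 logarithm of the squared peak value divided by the mean squared error. The sketch below assumes images scaled to [0, 1]; the function name and the toy arrays are illustrative only and are not taken from the paper's evaluation code.

```python
import numpy as np


def psnr(pred, target, data_range=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, data_range]."""
    pred = np.asarray(pred, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


clean = np.full((8, 8), 0.5)
noisy = clean + 0.1          # constant error of 0.1, so MSE is about 0.01
print(psnr(noisy, clean))    # about 20 dB
```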

Other contents of the paper

The parts above are the core idea of the paper. Other points are mentioned but are not central:

- LPNet performs better when given more network parameters, but they were not added, in order to keep the network lightweight.

- Skip connections help forward propagation and backpropagation.

- The SSIM loss is based on local image features (local contrast, luminance, detail) and agrees better with the human visual system.

- LPNet also applies to other computer vision tasks, such as denoising and dehazing.

- Because the network is lightweight, it can serve as a preprocessing step for other computer vision tasks: for object detection on rainy days, run the LPNet deraining network first.

An interesting observation: the visual quality is better, but the PSNR is lower.

边栏推荐

- String hash, find the string hash value after deleting any character, double hash

- OS interrupt mechanism and interrupt handler

- 查询效率提升10倍!3种优化方案,帮你解决MySQL深分页问题

- 不得不会的Oracle数据库知识点(四)

- Beijing invites reporters and media

- 长文综述:大脑中的熵、自由能、对称性和动力学

- mysql使用视图报错,EXPLAIN/SHOW can not be issued; lacking privileges for underlying table

- Technical practice online fault analysis and solutions (Part 1)

- Which insurance products can the elderly buy?

- C import Xls data method summary IV (upload file de duplication and database data De duplication)

猜你喜欢

![CesiumJS 2022^ 源码解读[8] - 资源封装与多线程](/img/d2/99932660298b4a4cddd7e5e69faca1.png)

CesiumJS 2022^ 源码解读[8] - 资源封装与多线程

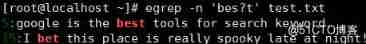

Regular expression of shell script value

1-redis architecture design to use scenarios - four deployment and operation modes (Part 1)

51 MCU external interrupt

2-redis architecture design to use scenarios - four deployment and operation modes (Part 2)

Gee: create a new feature and set corresponding attributes

Weekly open source project recommendation plan

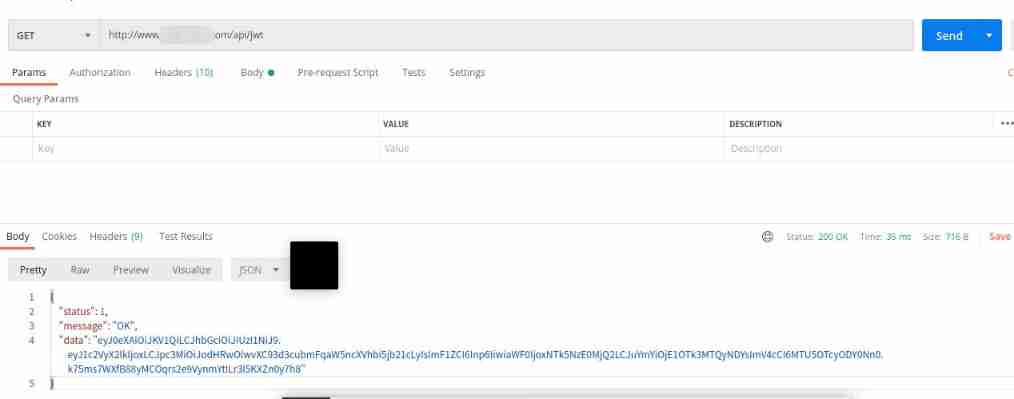

Thinkphp6 integrated JWT method and detailed explanation of generation, removal and destruction

老姜的特点

MySQL introduction - functions (various function statistics, exercises, details, tables)

随机推荐

Unity Shader入门精要读书笔记 第三章 Unity Shader基础

Analysis and solution of lazyinitializationexception

MySQL uses the view to report an error, explain/show can not be issued; lacking privileges for underlying table

The super fully automated test learning materials sorted out after a long talk with a Tencent eight year old test all night! (full of dry goods

中电资讯-信贷业务数字化转型如何从星空到指尖?

Fundamentals of machine learning: feature selection with lasso

51 single chip microcomputer timer 2 is used as serial port

Related configuration commands of Huawei rip

Summary of common tools and technical points of PMP examination

数据库表外键的设计

How to use AHAS to ensure the stability of Web services?

What is the GPM scheduler for go?

A-Frame虚拟现实开发入门

Oracle database knowledge points that cannot be learned (III)

Pratique technique | analyse et solution des défaillances en ligne (Partie 1)

HackTheBox-baby breaking grad

Summary of common tools and technical points of PMP examination

Day05 表格

Logical operator, displacement operator

PMP 考试常见工具与技术点总结