
10 tf.data

2022-06-26 15:58:00 【X1996_】

These are my study notes for this section; the material is not very well organized.

tf.data is TensorFlow's API for data input.

Topics: the Dataset class and its related operations, and saving and reading TFRecord files.

All of the code was written in a notebook.

Data processing


Dataset class

Reading NumPy data with the Dataset class

import tensorflow as tf

import numpy as np

import matplotlib.pyplot as plt

mnist = np.load("mnist.npz")

x_train, y_train = mnist['x_train'],mnist['y_train']

# Add a channel dimension at the end

x_train = np.expand_dims(x_train, axis=-1)

mnist_dataset = tf.data.Dataset.from_tensor_slices((x_train, y_train))
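To see what `from_tensor_slices` produces, here is a minimal sketch that uses a synthetic array in place of `mnist.npz`. The zero-filled images and the names `x`, `y`, and `ds` are assumptions made for illustration only.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in for MNIST: 10 grayscale 28x28 images with integer labels.
x = np.zeros((10, 28, 28), dtype=np.uint8)
y = np.arange(10, dtype=np.int64)
x = np.expand_dims(x, axis=-1)  # shape becomes (10, 28, 28, 1)

ds = tf.data.Dataset.from_tensor_slices((x, y))

# from_tensor_slices slices along the first axis,
# so each element is a single (image, label) pair.
image, label = next(iter(ds))
image_shape = tuple(image.shape)
first_label = int(label)
num_elements = int(ds.cardinality())
print(image_shape, first_label, num_elements)
```

Because slicing happens along the first axis, the two arrays must have the same length in that dimension.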

Reading data from Pandas

import pandas as pd

df = pd.read_csv('heart.csv')

df['thal'] = pd.Categorical(df['thal'])

df['thal'] = df.thal.cat.codes

target = df.pop('target')

dataset = tf.data.Dataset.from_tensor_slices((df.values, target.values))

`thal` and `target` are columns in the CSV file; they act like key names.

Building a data pipeline from a Python generator

img_gen = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255, rotation_range=20)

flowers = './flower_photos/flower_photos/'

def Gen():
    gen = img_gen.flow_from_directory(flowers)
    for (x, y) in gen:
        yield (x, y)

ds = tf.data.Dataset.from_generator(
    Gen,
    output_types=(tf.float32, tf.float32)
    # output_shapes=([32,256,256,3], [32,5])
)

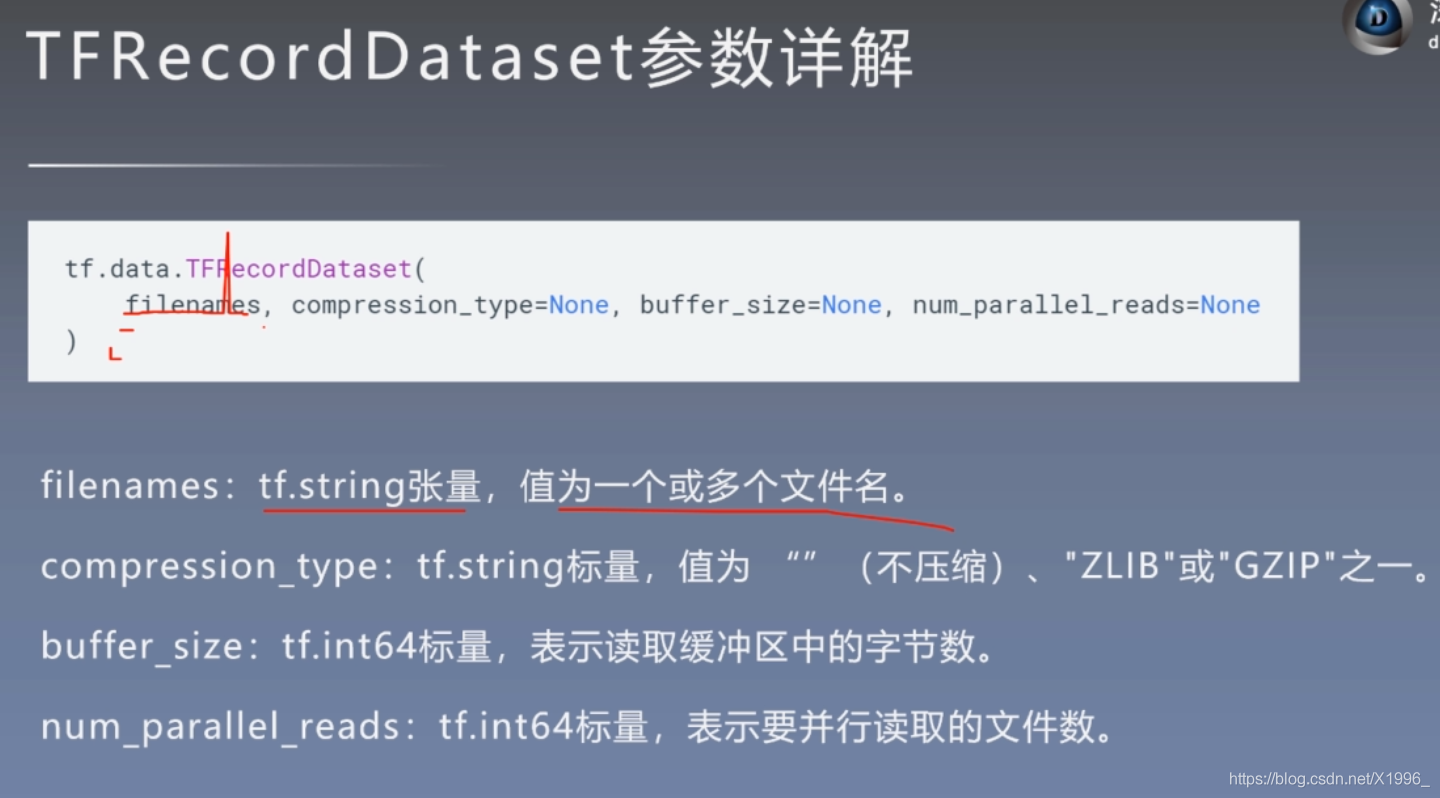
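The same pattern can be tried without any image folder. The sketch below uses a hypothetical toy generator (`toy_gen` is not from the original notebook) and declares element shapes and dtypes with `output_signature`, which newer TensorFlow 2 releases accept in place of `output_types` and `output_shapes`.

```python
import numpy as np
import tensorflow as tf

def toy_gen():
    # Hypothetical generator: yields three (features, label) pairs and then stops.
    for i in range(3):
        yield np.full((2, 2), i, dtype=np.float32), np.int64(i)

ds = tf.data.Dataset.from_generator(
    toy_gen,
    output_signature=(
        tf.TensorSpec(shape=(2, 2), dtype=tf.float32),
        tf.TensorSpec(shape=(), dtype=tf.int64),
    ),
)

labels = [int(label) for _, label in ds]
print(labels)
```

Note that the iterator returned by `flow_from_directory` loops over the data indefinitely, so a dataset built from it never ends by itself; something like `take(n)` is needed to bound it.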

TFRecordDataset class

feature_description = {
    # Define the Feature structure: tell the decoder the type of each Feature
    'image': tf.io.FixedLenFeature([], tf.string),
    'label': tf.io.FixedLenFeature([], tf.int64),
}

def _parse_example(example_string):  # decode each serialized tf.train.Example in the TFRecord file
    feature_dict = tf.io.parse_single_example(example_string, feature_description)
    feature_dict['image'] = tf.io.decode_jpeg(feature_dict['image'])  # decode the JPEG image
    feature_dict['image'] = tf.image.resize(feature_dict['image'], [256, 256]) / 255.0
    return feature_dict['image'], feature_dict['label']

batch_size = 32
train_dataset = tf.data.TFRecordDataset("sub_train.tfrecords")  # read the TFRecord file
# Decode each record into an (image, label) pair
train_dataset = train_dataset.map(_parse_example)

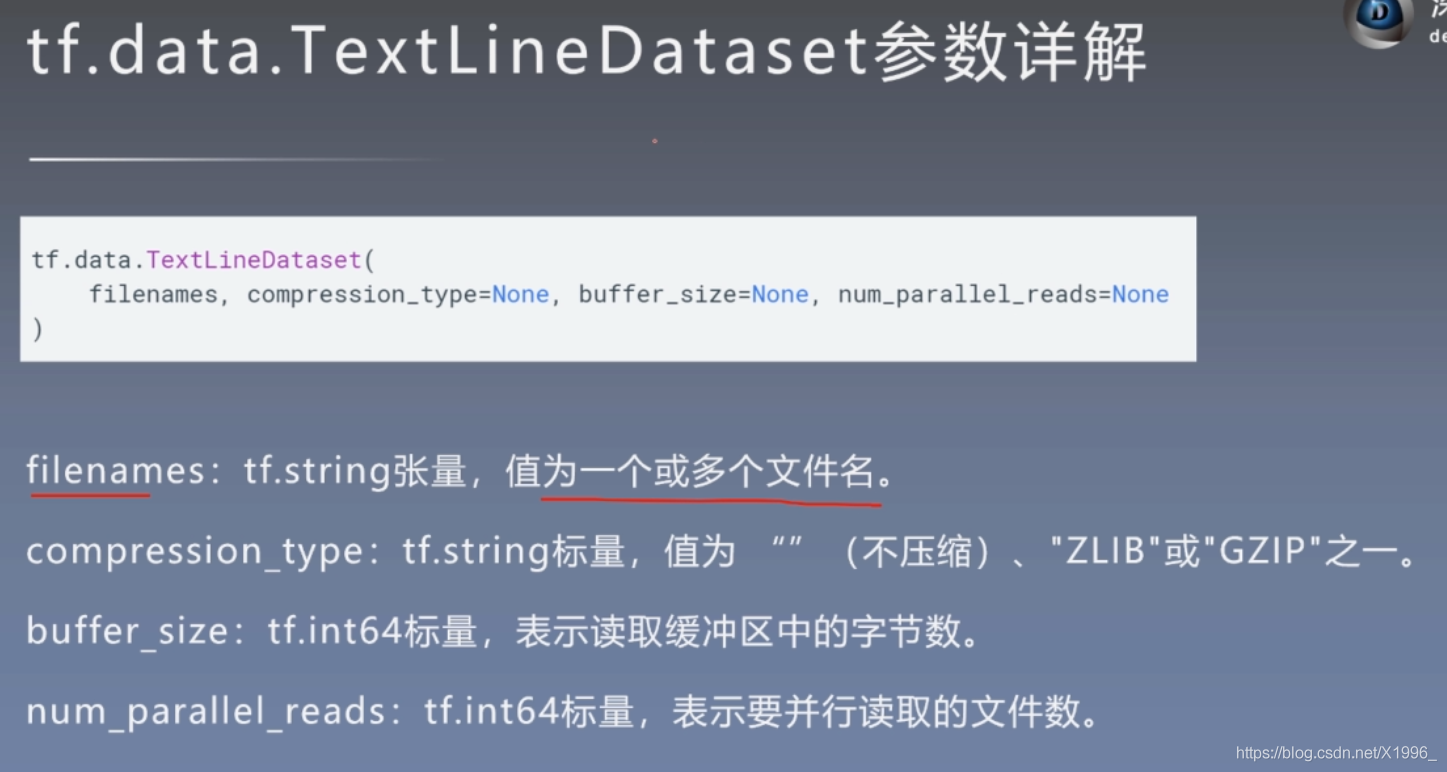
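To check that a feature description matches what was written, a small round trip helps. This is only a sketch: it writes three toy records to a temporary file (the byte strings and the file name `toy.tfrecords` are made up) and parses them back using the same two feature keys as above.

```python
import os
import tempfile

import tensorflow as tf

path = os.path.join(tempfile.mkdtemp(), "toy.tfrecords")

# Write three records, each holding raw bytes and an integer label.
with tf.io.TFRecordWriter(path) as writer:
    for i in range(3):
        feature = {
            "image": tf.train.Feature(bytes_list=tf.train.BytesList(value=[b"img%d" % i])),
            "label": tf.train.Feature(int64_list=tf.train.Int64List(value=[i])),
        }
        example = tf.train.Example(features=tf.train.Features(feature=feature))
        writer.write(example.SerializeToString())

feature_description = {
    "image": tf.io.FixedLenFeature([], tf.string),
    "label": tf.io.FixedLenFeature([], tf.int64),
}

def parse(example_string):
    parsed = tf.io.parse_single_example(example_string, feature_description)
    return parsed["image"], parsed["label"]

records = [(img.numpy(), int(lbl)) for img, lbl in tf.data.TFRecordDataset(path).map(parse)]
print(records)
```

With real images, the bytes would be the encoded JPEG file contents and the parse function would additionally decode and resize them.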

TextLineDataset class

titanic_lines = tf.data.TextLineDataset(['train.csv','eval.csv'])

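def data_func(line):
    line = tf.strings.split(line, sep=",")
    return line

# Note: skip(1) drops only the first line of the combined stream,
# so the header line of eval.csv is still read as data.
titanic_data = titanic_lines.skip(1).map(data_func)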
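When several CSV files each carry their own header, one possible approach is to skip the header per file with `flat_map`. The sketch below assumes two tiny temporary files (`a.csv` and `b.csv`, invented for this example) and compares a single `skip(1)` on the combined stream with a per-file skip.

```python
import os
import tempfile

import tensorflow as tf

# Create two small CSV files, each with its own header line.
tmp = tempfile.mkdtemp()
paths = []
for name, row in [("a.csv", "1,x"), ("b.csv", "2,y")]:
    p = os.path.join(tmp, name)
    with open(p, "w") as f:
        f.write("id,val\n" + row + "\n")
    paths.append(p)

# A single skip(1) removes only the very first line of the combined stream.
naive = [line.numpy().decode() for line in tf.data.TextLineDataset(paths).skip(1)]

# Skipping inside flat_map removes the header of every file.
lines = tf.data.Dataset.from_tensor_slices(paths).flat_map(
    lambda p: tf.data.TextLineDataset(p).skip(1)
)
fields = lines.map(lambda line: tf.strings.split(line, sep=","))
rows = [[v.decode() for v in t.numpy()] for t in fields]
print(naive)
print(rows)
```

`flat_map` processes the files one after another in order; `interleave` could be used instead if reading the files in parallel matters more than order.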

Dataset class operations

flat_map

zip

concatenate

Read from multiple files


flat_map

a = tf.data.Dataset.range(1, 6) # ==> [ 1, 2, 3, 4, 5 ]

# Map each element to a dataset that repeats it 6 times, then flatten the results in order.

b=a.flat_map(lambda x: tf.data.Dataset.from_tensors(x).repeat(6))

zip

a = tf.data.Dataset.range(1, 4) # ==> [ 1, 2, 3 ]

b = tf.data.Dataset.range(4, 7) # ==> [ 4, 5, 6 ]

ds = tf.data.Dataset.zip((a, b))

concatenate

# Concatenate: append the elements of b after those of a

a = tf.data.Dataset.range(1, 4) # ==> [ 1, 2, 3 ]

b = tf.data.Dataset.range(4, 7) # ==> [ 4, 5, 6 ]

ds = a.concatenate(b)
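To make the difference between the three operations concrete, the following sketch materializes each result as a Python list. It reuses the same ranges as above but repeats each element only twice in `flat_map` to keep the output short.

```python
import tensorflow as tf

a = tf.data.Dataset.range(1, 4)  # 1, 2, 3
b = tf.data.Dataset.range(4, 7)  # 4, 5, 6

# zip pairs elements position by position.
zipped = [(int(x), int(y)) for x, y in tf.data.Dataset.zip((a, b))]

# concatenate appends b after a.
concatenated = [int(x) for x in a.concatenate(b)]

# flat_map turns each element into a dataset and flattens the results in order.
repeated = [int(x) for x in a.flat_map(
    lambda x: tf.data.Dataset.from_tensors(x).repeat(2))]

print(zipped)
print(concatenated)
print(repeated)
```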

performance optimization

prefetch Method

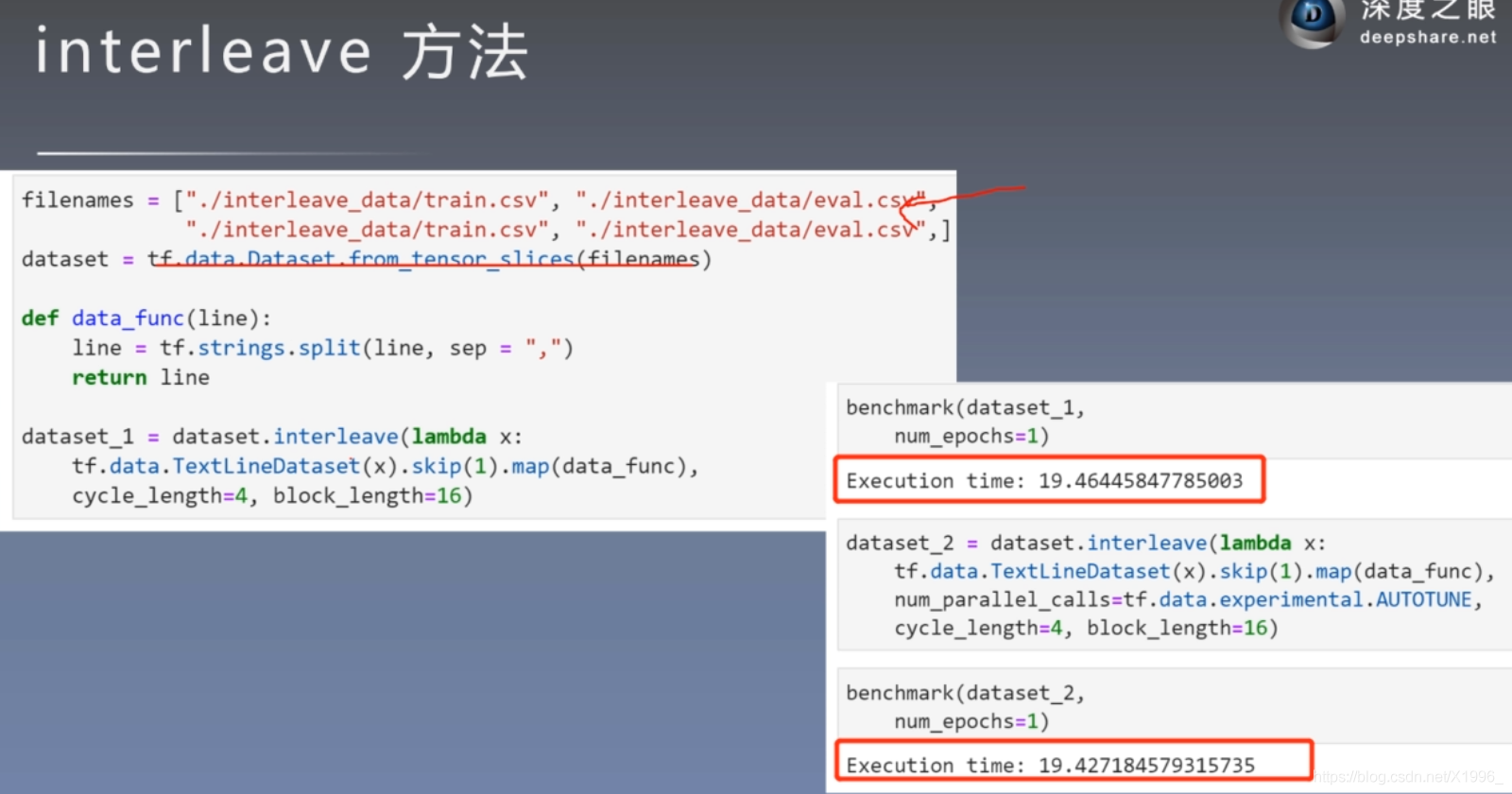

interleave Method

map Method

cache Method

I don't understand these well yet. I'll fill this part in later, once I've had to use them and understand them better.

I'm still very much a beginner.
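As a rough illustration of how these four methods are commonly chained, here is a sketch on a toy integer dataset. The dataset, the doubling function, and the `cycle_length` and `block_length` values are all assumptions chosen to keep the output easy to follow, not tuned settings.

```python
import tensorflow as tf

AUTOTUNE = tf.data.experimental.AUTOTUNE

ds = tf.data.Dataset.range(8)
# map: run the transformation on several elements in parallel.
ds = ds.map(lambda x: x * 2, num_parallel_calls=AUTOTUNE)
# cache: keep the mapped elements in memory after the first full pass.
ds = ds.cache()
# prefetch: prepare the next batch while the current one is being consumed.
ds = ds.batch(4).prefetch(AUTOTUNE)
batches = [batch.numpy().tolist() for batch in ds]

# interleave: pull from several inner datasets in turn
# (here 2 at a time, 1 element from each per turn).
inter = tf.data.Dataset.range(1, 3).interleave(
    lambda x: tf.data.Dataset.from_tensors(x).repeat(3),
    cycle_length=2,
    block_length=1,
)
interleaved = [int(x) for x in inter]

print(batches)
print(interleaved)
```

A common ordering is to place `cache` after expensive but deterministic steps and `prefetch` at the very end of the pipeline.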

An example: cats vs. dogs

import tensorflow as tf

import os

# Define image path

data_dir = './datasets'

train_cats_dir = data_dir + '/train/cats/'

train_dogs_dir = data_dir + '/train/dogs/'

test_cats_dir = data_dir + '/valid/cats/'

test_dogs_dir = data_dir + '/valid/dogs/'

# os.listdir(train_cats_dir) returns all file names in that folder

train_cat_filenames = tf.constant([train_cats_dir + filename for filename in os.listdir(train_cats_dir)])

train_dog_filenames = tf.constant([train_dogs_dir + filename for filename in os.listdir(train_dogs_dir)])

train_filenames = tf.concat([train_cat_filenames, train_dog_filenames], axis=-1)

# Labels: cat = 0, dog = 1
train_labels = tf.concat([
    tf.zeros(train_cat_filenames.shape, dtype=tf.int32),
    tf.ones(train_dog_filenames.shape, dtype=tf.int32)],
    axis=-1)

def _decode_and_resize(filename, label):
    image_string = tf.io.read_file(filename)  # read the raw file
    image_decoded = tf.image.decode_jpeg(image_string)  # decode the JPEG image
    image_resized = tf.image.resize(image_decoded, [256, 256]) / 255.0
    return image_resized, label

# Build the training set

batch_size = 32

train_dataset = tf.data.Dataset.from_tensor_slices((train_filenames, train_labels))

# Decode and resize the images in parallel
train_dataset = train_dataset.map(
    map_func=_decode_and_resize,
    num_parallel_calls=tf.data.experimental.AUTOTUNE)

# Fill a buffer with the first buffer_size elements and sample randomly from it; each sampled element is replaced by the next element from the dataset

train_dataset = train_dataset.shuffle(buffer_size=23000)

train_dataset = train_dataset.repeat(count=1)

train_dataset = train_dataset.batch(batch_size)

train_dataset = train_dataset.prefetch(tf.data.experimental.AUTOTUNE)

# Build the test dataset

test_cat_filenames = tf.constant([test_cats_dir + filename for filename in os.listdir(test_cats_dir)])

test_dog_filenames = tf.constant([test_dogs_dir + filename for filename in os.listdir(test_dogs_dir)])

test_filenames = tf.concat([test_cat_filenames, test_dog_filenames], axis=-1)

test_labels = tf.concat([

tf.zeros(test_cat_filenames.shape, dtype=tf.int32),

tf.ones(test_dog_filenames.shape, dtype=tf.int32)],

axis=-1)

test_dataset = tf.data.Dataset.from_tensor_slices((test_filenames, test_labels))

test_dataset = test_dataset.map(_decode_and_resize)

test_dataset = test_dataset.batch(batch_size)
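The buffer behaviour described in the comment on `shuffle` can be modelled in a few lines of plain Python. This is a simplified sketch of the idea, not TensorFlow's actual implementation; `buffered_shuffle` and its `seed` parameter are hypothetical names.

```python
import random

def buffered_shuffle(items, buffer_size, seed=0):
    """Yield items in a randomized order using a fixed-size buffer."""
    rng = random.Random(seed)
    buffer = []
    for item in items:
        if len(buffer) < buffer_size:
            # Still filling the buffer with the first buffer_size elements.
            buffer.append(item)
            continue
        # Emit a random buffered element and put the new item in its slot.
        idx = rng.randrange(buffer_size)
        yield buffer[idx]
        buffer[idx] = item
    # Input exhausted: emit whatever is left, in random order.
    rng.shuffle(buffer)
    yield from buffer

out = list(buffered_shuffle(range(10), buffer_size=3))
print(out)
```

The sketch shows why `buffer_size` matters: with a buffer of 1 the order is unchanged, and only a buffer at least as large as the dataset gives a uniform shuffle, which is presumably why the pipeline above uses 23000.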

class CNNModel(tf.keras.models.Model):
    def __init__(self):
        super(CNNModel, self).__init__()
        self.conv1 = tf.keras.layers.Conv2D(32, 3, activation='relu')
        self.maxpool1 = tf.keras.layers.MaxPooling2D()
        self.conv2 = tf.keras.layers.Conv2D(32, 5, activation='relu')
        self.maxpool2 = tf.keras.layers.MaxPooling2D()
        self.flatten = tf.keras.layers.Flatten()
        self.d1 = tf.keras.layers.Dense(64, activation='relu')
        self.d2 = tf.keras.layers.Dense(2, activation='softmax')  # sigmoid and softmax

    def call(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.flatten(x)
        x = self.d1(x)
        x = self.d2(x)
        return x

# softmax output -> CategoricalCrossentropy

# sigmoid output -> tf.keras.losses.BinaryCrossentropy

learning_rate = 0.001

model = CNNModel()

loss_object = tf.keras.losses.SparseCategoricalCrossentropy()

# The labels are not one-hot encoded, hence the Sparse variant of the loss

optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)

train_loss = tf.keras.metrics.Mean(name='train_loss')

train_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')

test_loss = tf.keras.metrics.Mean(name='test_loss')

test_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='test_accuracy')
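The choice between the sparse and non-sparse loss only depends on the label format. As a small check under made-up numbers (the probabilities below are invented), both losses give the same value when the integer labels are converted to one-hot vectors.

```python
import tensorflow as tf

# Hypothetical softmax outputs for two samples and two classes.
probs = tf.constant([[0.9, 0.1], [0.2, 0.8]])
sparse_labels = tf.constant([0, 1])               # integer class ids
onehot_labels = tf.one_hot(sparse_labels, depth=2)  # [[1, 0], [0, 1]]

sparse_loss = float(
    tf.keras.losses.SparseCategoricalCrossentropy()(sparse_labels, probs))
onehot_loss = float(
    tf.keras.losses.CategoricalCrossentropy()(onehot_labels, probs))

print(sparse_loss, onehot_loss)
```

Since the labels in this example are plain 0/1 integers, the sparse variant avoids the extra one-hot conversion.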

@tf.function
def train_step(images, labels):
    with tf.GradientTape() as tape:
        predictions = model(images)
        loss = loss_object(labels, predictions)
    gradients = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))

    train_loss(loss)
    train_accuracy(labels, predictions)

def test_step(images, labels):
    predictions = model(images)
    t_loss = loss_object(labels, predictions)

    test_loss(t_loss)
    test_accuracy(labels, predictions)

EPOCHS = 10

for epoch in range(EPOCHS):
    # Reset the metrics at the start of each epoch
    train_loss.reset_states()
    train_accuracy.reset_states()
    test_loss.reset_states()
    test_accuracy.reset_states()

    for images, labels in train_dataset:
        train_step(images, labels)

    for test_images, test_labels in test_dataset:
        test_step(test_images, test_labels)

    template = 'Epoch {}, Loss: {}, Accuracy: {}, Test Loss: {}, Test Accuracy: {}'
    print(template.format(epoch + 1,
                          train_loss.result(),
                          train_accuracy.result() * 100,
                          test_loss.result(),
                          test_accuracy.result() * 100))

Saving and reading TFRecord files


import tensorflow as tf

import os

data_dir = './datasets'

train_cats_dir = data_dir + '/train/cats/'

train_dogs_dir = data_dir + '/train/dogs/'

train_tfrecord_file = data_dir + '/train/train.tfrecords'

test_cats_dir = data_dir + '/valid/cats/'

test_dogs_dir = data_dir + '/valid/dogs/'

test_tfrecord_file = data_dir + '/valid/test.tfrecords'

train_cat_filenames = [train_cats_dir + filename for filename in os.listdir(train_cats_dir)]

train_dog_filenames = [train_dogs_dir + filename for filename in os.listdir(train_dogs_dir)]

train_filenames = train_cat_filenames + train_dog_filenames

train_labels = [0] * len(train_cat_filenames) + [1] * len(train_dog_filenames)  # label cats as 0 and dogs as 1

with tf.io.TFRecordWriter(train_tfrecord_file) as writer:
    for filename, label in zip(train_filenames, train_labels):
        with open(filename, 'rb') as f:
            image = f.read()  # read the image file into memory as a bytes object
        feature = {
            # Build the dictionary of tf.train.Feature values
            'image': tf.train.Feature(bytes_list=tf.train.BytesList(value=[image])),  # the image is stored as bytes
            'label': tf.train.Feature(int64_list=tf.train.Int64List(value=[label]))  # the label is stored as an int64
        }
        example = tf.train.Example(features=tf.train.Features(feature=feature))  # build the Example from the dictionary
        writer.write(example.SerializeToString())  # serialize the Example and write it to the TFRecord file

#### Test set

test_cat_filenames = [test_cats_dir + filename for filename in os.listdir(test_cats_dir)]

test_dog_filenames = [test_dogs_dir + filename for filename in os.listdir(test_dogs_dir)]

test_filenames = test_cat_filenames + test_dog_filenames

test_labels = [0] * len(test_cat_filenames) + [1] * len(test_dog_filenames)  # label cats as 0 and dogs as 1

with tf.io.TFRecordWriter(test_tfrecord_file) as writer:
    for filename, label in zip(test_filenames, test_labels):
        with open(filename, 'rb') as f:
            image = f.read()  # read the image file into memory as a bytes object
        feature = {
            # Build the dictionary of tf.train.Feature values
            'image': tf.train.Feature(bytes_list=tf.train.BytesList(value=[image])),  # the image is stored as bytes
            'label': tf.train.Feature(int64_list=tf.train.Int64List(value=[label]))  # the label is stored as an int64
        }
        example = tf.train.Example(features=tf.train.Features(feature=feature))  # build the Example from the dictionary
        serialized = example.SerializeToString()  # serialize the Example
        writer.write(serialized)  # write it to the TFRecord file

# Read the TFRecord files

train_dataset = tf.data.TFRecordDataset(train_tfrecord_file) # Read TFRecord file

feature_description = {
    # Define the Feature structure: tell the decoder the type of each Feature
    'image': tf.io.FixedLenFeature([], tf.string),
    'label': tf.io.FixedLenFeature([], tf.int64),
}

def _parse_example(example_string):  # decode each serialized tf.train.Example in the TFRecord file
    feature_dict = tf.io.parse_single_example(example_string, feature_description)
    feature_dict['image'] = tf.io.decode_jpeg(feature_dict['image'])  # decode the JPEG image
    feature_dict['image'] = tf.image.resize(feature_dict['image'], [256, 256]) / 255.0
    return feature_dict['image'], feature_dict['label']

train_dataset = train_dataset.map(_parse_example)

batch_size = 32

train_dataset = train_dataset.shuffle(buffer_size=23000)

train_dataset = train_dataset.batch(batch_size)

train_dataset = train_dataset.prefetch(tf.data.experimental.AUTOTUNE)

test_dataset = tf.data.TFRecordDataset(test_tfrecord_file) # Read TFRecord file

test_dataset = test_dataset.map(_parse_example)

test_dataset = test_dataset.batch(batch_size)

class CNNModel(tf.keras.models.Model):
    def __init__(self):
        super(CNNModel, self).__init__()
        self.conv1 = tf.keras.layers.Conv2D(32, 3, activation='relu')
        self.maxpool1 = tf.keras.layers.MaxPooling2D()
        self.conv2 = tf.keras.layers.Conv2D(32, 5, activation='relu')
        self.maxpool2 = tf.keras.layers.MaxPooling2D()
        self.flatten = tf.keras.layers.Flatten()
        self.d1 = tf.keras.layers.Dense(64, activation='relu')
        self.d2 = tf.keras.layers.Dense(2, activation='softmax')

    def call(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.flatten(x)
        x = self.d1(x)
        x = self.d2(x)
        return x

learning_rate = 0.001

model = CNNModel()

loss_object = tf.keras.losses.SparseCategoricalCrossentropy()

optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)

train_loss = tf.keras.metrics.Mean(name='train_loss')

train_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')

test_loss = tf.keras.metrics.Mean(name='test_loss')

test_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='test_accuracy')

#batch

@tf.function
def train_step(images, labels):
    with tf.GradientTape() as tape:
        predictions = model(images)
        loss = loss_object(labels, predictions)
    gradients = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))

    train_loss(loss)  # update the running mean of the loss
    train_accuracy(labels, predictions)  # update the running accuracy

@tf.function
def test_step(images, labels):
    predictions = model(images)
    t_loss = loss_object(labels, predictions)

    test_loss(t_loss)
    test_accuracy(labels, predictions)

EPOCHS = 10

for epoch in range(EPOCHS):
    # Reset the metrics at the start of each epoch
    train_loss.reset_states()
    train_accuracy.reset_states()
    test_loss.reset_states()
    test_accuracy.reset_states()

    for images, labels in train_dataset:
        train_step(images, labels)  # one update per mini-batch

    for test_images, test_labels in test_dataset:
        test_step(test_images, test_labels)

    template = 'Epoch {}, Loss: {}, Accuracy: {}, Test Loss: {}, Test Accuracy: {}'
    print(template.format(epoch + 1,
                          train_loss.result(),
                          train_accuracy.result() * 100,
                          test_loss.result(),
                          test_accuracy.result() * 100))
