Implementing multi-GPU distributed training with Horovod in Amazon SageMaker Pipe mode

2020-11-07 20:15:00 【InfoQ】

Today, a variety of techniques let us train deep learning models with only a small amount of data, including transfer learning for image classification, few-shot learning, and even one-shot learning; language models can likewise be fine-tuned from pre-trained BERT or GPT-2 models. In some applications, however, large amounts of training data are still needed. For example, if your images are completely different from those in the ImageNet dataset, or your language corpus covers only a specific domain rather than general text, transfer learning is unlikely to deliver the desired model performance. As a deep learning researcher, you may also need to try new ideas or approaches from scratch. In these cases, we have to train large deep learning models on large datasets; without the best training approach, the whole process can take days, weeks, or even months.

In this article, we look at how to run multi-GPU training on a single Amazon SageMaker instance, and discuss how to implement efficient multi-GPU and multi-node distributed training on Amazon SageMaker.
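As a rough illustration of the single-instance, multi-GPU setup described above, the sketch below builds the keyword arguments one might pass to a SageMaker framework estimator. It is not taken from the original article. It assumes SageMaker Python SDK v2 parameter names (`instance_type`, `instance_count`, `input_mode`, `distribution`), and the helper name `horovod_estimator_kwargs` is hypothetical. The code only builds a dictionary, so it runs without the SDK installed.

```python
from typing import Any, Dict


def horovod_estimator_kwargs(instance_type: str,
                             instance_count: int,
                             gpus_per_instance: int) -> Dict[str, Any]:
    """Build estimator keyword arguments for Horovod training in Pipe mode.

    Hypothetical helper; assumes SageMaker Python SDK v2 parameter names.
    """
    if instance_count < 1 or gpus_per_instance < 1:
        raise ValueError("instance_count and gpus_per_instance must be >= 1")
    return {
        "instance_type": instance_type,
        "instance_count": instance_count,
        # Stream training data from S3 instead of downloading it first.
        "input_mode": "Pipe",
        # Launch the training script through MPI, one Horovod worker per GPU.
        "distribution": {
            "mpi": {
                "enabled": True,
                "processes_per_host": gpus_per_instance,
            }
        },
    }


# Example: one ml.p3.8xlarge instance, which has 4 GPUs.
kwargs = horovod_estimator_kwargs("ml.p3.8xlarge", 1, 4)
total_workers = (kwargs["instance_count"]
                 * kwargs["distribution"]["mpi"]["processes_per_host"])
print(total_workers)  # 4
```

With the SDK installed, a dictionary like this could be unpacked into an estimator such as `sagemaker.tensorflow.TensorFlow`, together with the other arguments it requires (training script, IAM role, framework version). Setting `instance_count` above 1 would extend the same configuration to multi-node training.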

Original article: https://www.infoq.cn/article/0867pYEmzviBfvZxW37k. Reproduction without the author's permission is prohibited.

Copyright notice

This article was created by [InfoQ]. Please include a link to the original when reposting. Thank you.

边栏推荐

- Using LWA and lync to simulate external test edge free single front end environment

- [original] the impact of arm platform memory and cache on the real-time performance of xenomai

- websocket+probuf.原理篇

- Mac新手必备小技巧

- Awk implements SQL like join operation

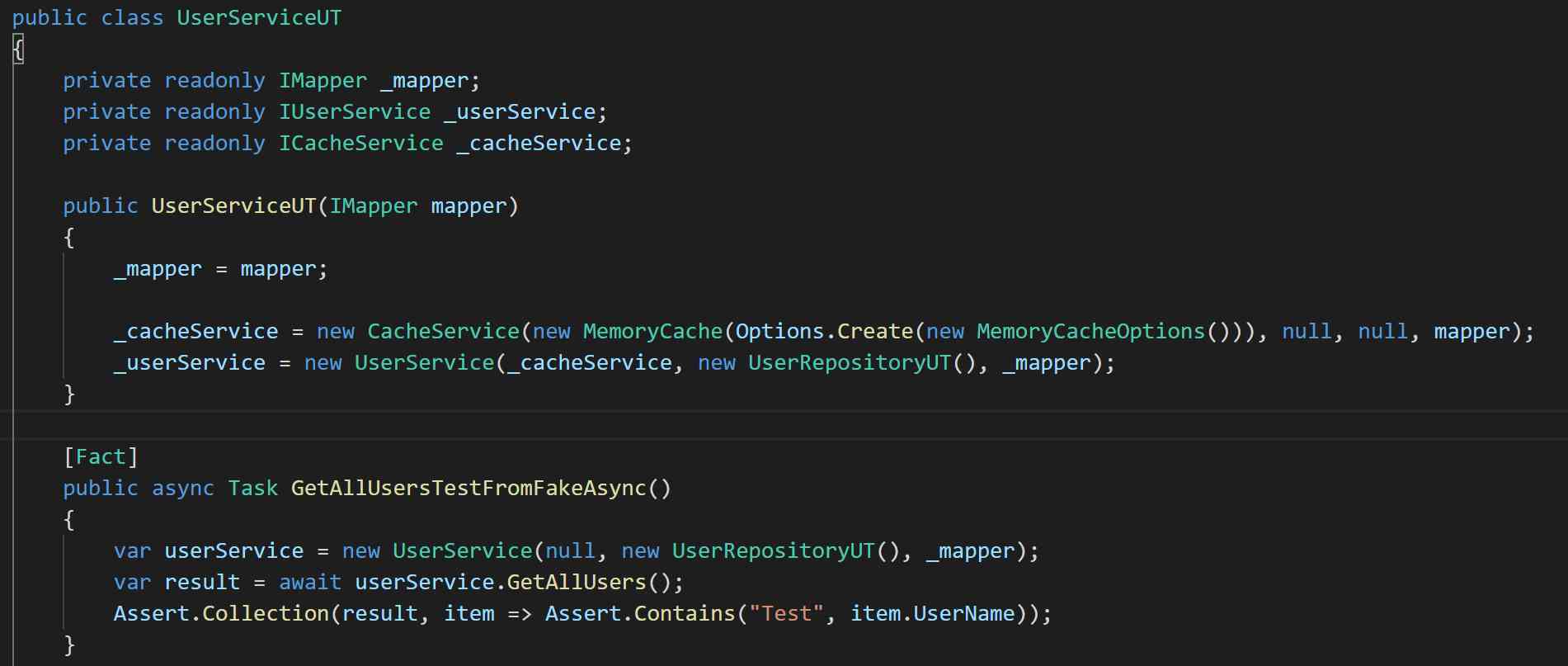

- 使用 Xunit.DependencyInjection 改造测试项目

- Huawei HCIA notes

- PHP安全:变量的前世今生

- HMS Core推送服务,助力电商App开展精细化运营

- 如何应对事关业务生死的数据泄露和删改?

猜你喜欢

How to learn technology efficiently

Classroom exercises

OpenCV計算機視覺學習(10)——影象變換(傅立葉變換,高通濾波,低通濾波)

The JS solution cannot be executed after Ajax loads HTML

If you want to forget the WiFi network you used to connect to your Mac, try this!

Why do we need software engineering -- looking at a simple project

使用 Xunit.DependencyInjection 改造测试项目

Talk about sharing before paying

课堂练习

chrome浏览器跨域Cookie的SameSite问题导致访问iframe内嵌页面异常

随机推荐

使用LWA和Lync模拟外部测试无边缘单前端环境

Chinese sub forum of | 2020 PostgreSQL Asia Conference: Pan Juan

MongoDB下,启动服务时,出现“服务没有响应控制功能”解决方法

The CPU does this without the memory

bgfx编译教程

CPU瞒着内存竟干出这种事

AFO记

PHP security: the past and present of variables

The JS solution cannot be executed after Ajax loads HTML

不懂数据库索引的底层原理?那是因为你心里没点b树

A kind of super parameter optimization technology hyperopt

C language I blog assignment 03

[original] the impact of arm platform memory and cache on the real-time performance of xenomai

Exclusive interview with Yue Caibo

三步一坑五步一雷,高速成长下的技术团队怎么带?

留给快手的时间不多了

How to learn technology efficiently

统计文本中字母的频次(不区分大小写)

Come on in! Take a few minutes to see how reentrantreadwritelock works!

想要忘记以前连接到Mac的WiFi网络,试试这个方法!