Abstract: This article is compiled from a talk given by Mu Chunjin, a big data technology expert at China Unicom, in the platform construction track of Flink Forward Asia 2021. The main topics are:

- Background of the real-time computing platform

- Evolution and practice of the real-time computing platform

- Flink-based cluster governance

- Future plans

FFA 2021 Live playback & speech PDF download

I. Background of the real-time computing platform

First, some background on the real-time computing platform. Business systems in the telecom industry are very complex, so there are many data sources. The platform currently ingests more than 30 data sources, which is still only a small share of all the data types available. Even so, our data volume has reached the trillion-record level, with about 600 TB of new data every day, and both the number and the size of the data sources we ingest keep growing. The platform's users come from China Unicom Group's 31 provincial companies and its subsidiaries across the country, and a large number of users subscribe to rules around holidays in particular. To obtain data, users subscribe on the platform. We encapsulate the data sources into standardized scenarios; at present there are 26 standardized scenarios supporting more than 5,000 rule subscriptions.

Subscription scenarios fall into three categories.

- User behavior scenarios, 14 in total: for example, location scenarios (how long a user stays after entering an area), terminal login (which network the user is attached to, 4G or 5G), as well as roaming, voice, product ordering and other scenarios;

- User usage scenarios, 6 in total: for example, how much traffic a user has consumed, the account balance, and whether service has been suspended for arrears;

- User touchpoint scenarios, about 10 in total: for example, service handling, top-ups and payments, and new subscribers joining the network.

A real-time computing platform has very demanding latency requirements. It takes about 5 to 20 seconds for data to travel from where it is generated into our system, and normal processing inside the system adds roughly another 3 to 10 seconds. The maximum delay we tolerate is 5 minutes, so the end-to-end latency of the platform has to be monitored.

Data that matches a user-defined scenario must be delivered at least once. This requirement is strict, nothing may be dropped, and Flink satisfies it well. Data accuracy must reach 95%. A copy of everything the platform distributes in real time is also saved to HDFS. Every day we sample some subscriptions, generate the same data offline according to the same rules, and compare the two for data quality. If the difference is too large, we track down the cause so that the quality of subsequent data is assured.

This talk is mainly about an in-depth Flink practice at a telecom enterprise: how to use Flink to better support our requirements.

A general-purpose platform cannot support our special scenarios. Take the location scenario as an example: we draw multiple electronic fences (geofences) on the map. When a user enters a fence, stays in it for a certain period of time, and also matches the user characteristics set by the subscriber, such as gender, age, occupation and income, the data is distributed.
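The fence-plus-dwell-plus-attributes condition described above can be sketched in a few lines. This is only an illustration under stated assumptions: the circular fence shape, the `Fence`, `Rule` and `DwellMatcher` names, and the in-memory dictionaries standing in for Flink keyed state are all hypothetical and not taken from the talk.

```python
from dataclasses import dataclass
from math import hypot
from typing import Dict


@dataclass(frozen=True)
class Fence:
    """Hypothetical circular geofence; real fences may be arbitrary polygons."""
    fence_id: str
    x: float
    y: float
    radius: float

    def contains(self, x: float, y: float) -> bool:
        return hypot(x - self.x, y - self.y) <= self.radius


@dataclass(frozen=True)
class Rule:
    """One subscription: a fence, a minimum dwell time and required attributes."""
    rule_id: str
    fence: Fence
    min_dwell_s: int
    required: Dict[str, str]


class DwellMatcher:
    """Tracks when each user entered the fence (a stand-in for keyed state)."""

    def __init__(self, rule: Rule, profiles: Dict[str, Dict[str, str]]):
        self.rule = rule
        self.profiles = profiles      # user_id -> attribute tags
        self.entered_at = {}          # user_id -> timestamp of entry
        self.fired = set()            # users already distributed for this stay

    def on_event(self, user_id: str, ts: int, x: float, y: float) -> bool:
        """Return True exactly once per stay, when dwell and attributes match."""
        if not self.rule.fence.contains(x, y):
            # Leaving the fence clears the entry time and re-arms the rule.
            self.entered_at.pop(user_id, None)
            self.fired.discard(user_id)
            return False
        start = self.entered_at.setdefault(user_id, ts)
        if user_id in self.fired or ts - start < self.rule.min_dwell_s:
            return False
        profile = self.profiles.get(user_id, {})
        if all(profile.get(k) == v for k, v in self.rule.required.items()):
            self.fired.add(user_id)
            return True
        return False
```

In this sketch a user is reported once per continuous stay; whether the real platform emits once or repeatedly is not stated in the talk.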

Our platform can be regarded as a real-time scenario hub: it cleans data in real time and encapsulates it into complex scenarios that support combinations of multiple conditions. It provides standard real-time capabilities in a centralized way, stays close to the business, and offers a simple, low-threshold way to connect. The business side calls a standardized interface and, after authentication by the gateway, can subscribe to our scenarios. Once the subscription succeeds, Kafka connection information and the data schema are returned to the subscriber. The filter conditions of the subscription are matched against the data stream, and matching data is distributed through Kafka. That is how our real-time computing platform interacts with downstream systems.

II. Evolution and practice of the real-time computing platform

Before 2020, our platform was built on Kafka + Spark Streaming, on a third-party platform purchased from a vendor. We ran into many problems and bottlenecks, and it could hardly meet our day-to-day needs. Many enterprises, ourselves included, are now going through digital transformation, and the share of systems developed in-house keeps rising. Together with pressure from requirements, an in-house, flexibly customizable and controllable system became urgent. So in 2020 we started working with Flink and built a Flink-based real-time computing platform. In the process we came to appreciate the appeal of open source, and we intend to take a more active part in the community in the future.

Our previous platform had many problems. First, it was a third-party black box: we used the vendor's platform, depended too heavily on external systems, the load on those external systems became very high under heavy concurrency, and we could not flexibly customize it for our own needs. Second, the load on Kafka was especially high: each rule subscription corresponded to multiple topics, so the number of topics and partitions grew linearly with the number of rules, and latency was relatively high. Third, each subscription scenario corresponded to multiple real-time streams, each consuming memory and CPU, so more scenarios meant more resource consumption and excessive resource load. Fourth, the supported volume was small: the number of subscriptions a scenario could support was limited, and when subscriptions surged, for example during the Spring Festival, we often had to firefight and could not keep up with growing demand. Finally, monitoring was too coarse: it could not be flexibly customized, end-to-end monitoring was impossible, and much troubleshooting had to be done by hand.

In response to these problems, we developed a real-time computing platform entirely in-house on Flink, customizing each scenario according to its characteristics to make the most efficient use of resources. Using Flink also let us reduce external dependencies, lower program complexity and improve performance. Flexible customization optimizes resource usage, so the same volume of demand is served with far fewer resources. To keep system latency low, we also added end-to-end monitoring, including monitoring of data backlog, latency and data interruption.

The architecture of the platform is fairly simple. It runs in Flink on YARN mode, its only external dependency is HBase, and data is both ingested from and sent out through Kafka.

The Flink cluster is built as a standalone cluster with 550 dedicated servers and is not co-located with offline computing, because stability requirements are high: it has to process about 1.5 trillion records per day, close to 600 TB of new data.

The main reason we customize scenarios so deeply is the data volume: one scenario has many subscriptions, and each subscription has different conditions. When a record is read from Kafka, it is matched against multiple rules, and after matching it is distributed to the topic that corresponds to each matching rule. So no matter how many subscriptions there are, the data is read from Kafka only once, which reduces the consumption load on Kafka.
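A minimal sketch of this "read once, match many rules, fan out" idea follows. It assumes rules are simple field-equality conditions; the rule layout, the `route` helper and the topic names are hypothetical, and a real job would write to Kafka instead of returning topic names.

```python
from typing import Any, Dict, List

Record = Dict[str, Any]


def matches(record: Record, conditions: Dict[str, Any]) -> bool:
    """A rule matches when every condition field equals the record's value."""
    return all(record.get(field) == value for field, value in conditions.items())


def route(record: Record, rules: List[Dict[str, Any]]) -> List[str]:
    """Evaluate one consumed record against all rules; return the target topics."""
    return [rule["topic"] for rule in rules if matches(record, rule["where"])]


# Hypothetical subscriptions for a single scenario.
RULES = [
    {"topic": "sub_1001", "where": {"network": "5G"}},
    {"topic": "sub_1002", "where": {"network": "5G", "province": "Beijing"}},
    {"topic": "sub_1003", "where": {"roaming": True}},
]
```

The record is consumed a single time and the per-rule cost is an in-memory comparison, so adding subscriptions adds matching work but no extra reads from the source topic.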

A mobile phone attaches to a base station when making a call or using data. Records from the same base station are compressed by a time window or a fixed message count, for example a three-second window, or a trigger once 1,000 messages have accumulated, so the number of messages received downstream drops by an order of magnitude. Next comes fence matching, where the pressure on the external system scales with the number of base stations rather than the number of messages. We then make full use of Flink state: when a user enters and stays in a fence, that information is stored in state, with RocksDB as the state backend, which reduces external dependencies and simplifies the system. In addition, we associate tags at the 100-million scale without relying on any external system. Only after data compression, fence matching, entry and dwell detection, and tag association does the actual rule matching begin.
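The count-or-time trigger used for compression can be illustrated as follows. This is a simplified sketch: the `StationBatcher` class is hypothetical, and the time trigger is only checked when a new message arrives, whereas a Flink job would normally register a timer so that a quiet base station still flushes its buffer.

```python
from typing import Dict, List, Optional, Tuple


class StationBatcher:
    """Buffers messages per base station; flushes on count or elapsed time."""

    def __init__(self, max_count: int = 1000, max_wait_s: float = 3.0):
        self.max_count = max_count
        self.max_wait_s = max_wait_s
        # station_id -> (timestamp of first buffered message, buffered messages)
        self.buffers: Dict[str, Tuple[float, List[str]]] = {}

    def add(self, station_id: str, ts: float, msg: str) -> Optional[Tuple[str, List[str]]]:
        """Add one message; return (station_id, batch) when a trigger fires."""
        first_ts, msgs = self.buffers.setdefault(station_id, (ts, []))
        msgs.append(msg)
        if len(msgs) >= self.max_count or ts - first_ts >= self.max_wait_s:
            del self.buffers[station_id]
            return station_id, msgs
        return None
```

Downstream operators then receive one batch per base station per trigger instead of one message per user event.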

After a user subscribes to a scenario, the subscription rule is synchronized to the real-time computing platform through Flink CDC, which keeps latency low. Because entry and dwell information for the whole population is kept in state, the RocksDB state backend holds a fairly large amount of data, and we troubleshoot problems by analyzing the state data, for example to check whether a user is inside a fence.

We customized a hash algorithm to associate tags at the 100-million scale without relying on external systems.

Under heavy concurrency, if every user record had to query an external system for tag information, the pressure on that system would be enormous, especially at China Unicom's data volume, and building such an external system would be expensive. These tags are offline tags and the data is relatively stable, for example updated daily or monthly. So we apply a custom hash algorithm to users. For example, a mobile phone number is assigned by the hash algorithm to the task instance task_0, whose index is 0. Offline computation then uses the same hash algorithm to place that phone number's tags into the tag file numbered 0, 0_tag.

In its open method, the task_0 instance obtains its own index, index=0, builds the tag file name 0.tag from it, and loads that file into its own memory. When task_0 receives a phone number, it can fetch that number's tag data from local memory. This avoids wasting memory on redundant copies, improves system performance and reduces external dependencies.

When tags are updated, the open method also loads the new tags automatically and refreshes them in memory.
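The co-partitioning idea can be sketched as below. The talk does not disclose the actual hash function, so `zlib.crc32` is used purely as a stand-in for any hash that is stable across the offline job and the streaming job. Tag files are simulated with in-memory dictionaries, and `bucket_of`, `split_tags` and `TagTask` are hypothetical names.

```python
import zlib
from typing import Dict, List, Optional


def bucket_of(phone: str, parallelism: int) -> int:
    """Stable hash shared by the offline split and the online routing."""
    return zlib.crc32(phone.encode("utf-8")) % parallelism


def split_tags(tags: Dict[str, str], parallelism: int) -> List[Dict[str, str]]:
    """Offline step: write each phone's tags into the bucket its task will own."""
    buckets: List[Dict[str, str]] = [dict() for _ in range(parallelism)]
    for phone, tag in tags.items():
        buckets[bucket_of(phone, parallelism)][phone] = tag
    return buckets


class TagTask:
    """Online step: one parallel task instance that holds only its own bucket."""

    def __init__(self, index: int):
        self.index = index
        self.local_tags: Dict[str, str] = {}

    def open(self, buckets: List[Dict[str, str]]) -> None:
        # Load only the bucket whose number equals this task's index.
        self.local_tags = dict(buckets[self.index])

    def lookup(self, phone: str) -> Optional[str]:
        return self.local_tags.get(phone)
```

Because the stream is routed with the same function as the offline split, every phone number reaches the one task that holds its tags, and each tag is held in memory exactly once across the job.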

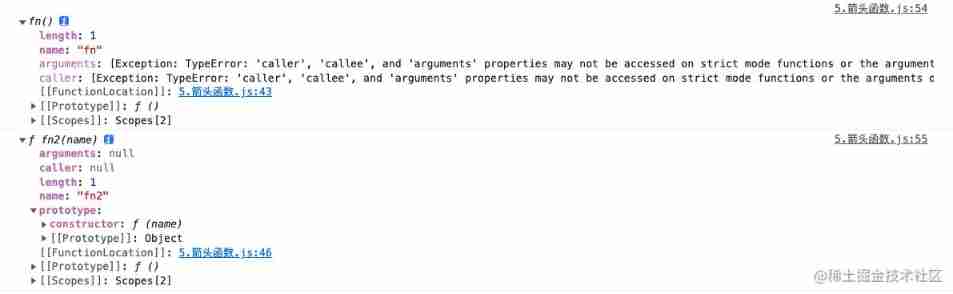

The figure above shows the end-to-end latency monitoring we built. Because our business has strict latency requirements, we stamp each event (here, each message) with timestamps, for example the times at which it enters and leaves Kafka. For operator latency monitoring, we compute the delay from the stamped time and the current time. This is not computed for every incoming message; it is done by sampling.
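A sampled latency probe of this kind might look like the following sketch. The sampling policy (every N-th record) and the `SampledLatency` name are assumptions; the talk only states that latency is computed on a sample rather than on every message.

```python
from typing import List, Optional


class SampledLatency:
    """Computes latency (now minus stamped time) for every N-th record only."""

    def __init__(self, every_n: int = 100):
        self.every_n = every_n
        self.seen = 0
        self.samples_ms: List[int] = []

    def observe(self, stamped_ms: int, now_ms: int) -> Optional[int]:
        """Return the latency if this record was sampled, otherwise None."""
        self.seen += 1
        if self.seen % self.every_n != 0:
            return None
        latency = now_ms - stamped_ms
        self.samples_ms.append(latency)
        return latency

    def max_ms(self) -> Optional[int]:
        return max(self.samples_ms, default=None)
```

Sampling keeps the monitoring overhead roughly constant per operator regardless of throughput, at the cost of possibly missing short latency spikes between samples.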

Backlog and interruptions are monitored as well, by collecting Kafka offsets and comparing successive samples. There is also data delay monitoring, which computes the delay from the event time and the current time and so exposes data delays in upstream systems.
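The before-and-after offset comparison can be expressed as a small pure function. The input layout (partition mapped to log-end offset and committed offset) and the `diagnose` name are assumptions for illustration; collecting the offsets from Kafka is left out.

```python
from typing import Dict, Tuple

# partition -> (log end offset, committed consumer offset)
Snapshot = Dict[str, Tuple[int, int]]


def diagnose(prev: Snapshot, curr: Snapshot) -> Dict[str, Dict[str, object]]:
    """Compare two offset samples and flag backlog and interrupted input."""
    report: Dict[str, Dict[str, object]] = {}
    for partition, (end, committed) in curr.items():
        lag = max(end - committed, 0)  # records produced but not yet consumed
        if partition in prev:
            prev_end, prev_committed = prev[partition]
            no_new_data = end == prev_end            # producer side went quiet
            stalled = lag > 0 and committed == prev_committed  # consumer stuck
        else:
            no_new_data = False
            stalled = False
        report[partition] = {"lag": lag, "no_new_data": no_new_data, "stalled": stalled}
    return report
```

A log-end offset that stops advancing suggests an upstream interruption, while a committed offset that stops advancing with non-zero lag suggests a stuck consumer.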

The figures above show end-to-end latency monitoring and backpressure monitoring. End-to-end latency normally stays between 2 and 6 seconds, which is in line with our expectations, because the conditions for location scenarios are fairly complex. We also monitor backpressure: by watching the input channel utilization of each operator, we locate the operator that is producing backpressure. In the second figure, for example, there is severe backpressure that lasts for some time. In that case we need to pinpoint the specific operator and find the cause in order to keep system latency low.

The figure above shows how we collect the offset of every partition of every topic in the Kafka cluster, together with the consumption position of every consumer, in order to locate interruptions and backlog.

First, a source fetches the topic list and the consumer group list and distributes them downstream. Downstream operators can then collect the offset values in a distributed fashion, which again takes advantage of Flink. Finally, the results are written to ClickHouse for analysis.

Day-to-day Flink monitoring covers the following categories:

- Flink job monitoring, with alerts integrated into SkyEye, China Unicom's unified alerting platform;

- Job running status and checkpoint duration;

- Operator latency, backpressure, traffic and record counts;

- TaskManager CPU and memory usage, and JVM GC metrics.

III. Flink-based cluster governance

We also built our cluster governance platform on Flink. The background is that our clusters have more than 10,000 nodes in total. The largest single cluster has 900 nodes, there are more than 40 clusters altogether, the single-replica data volume has reached 100 PB, 600,000 jobs run every day, and the NameNode of the largest single cluster holds up to 150 million files.

With the company's business growing rapidly, data requirements are becoming more complex, the computing power required keeps increasing, clusters keep getting larger and there are more and more data products. As a result, the Hadoop clusters face great challenges:

- The large number of files puts heavy pressure on the NameNode and affects the stability of the storage system.

- There are many small files, so more files have to be scanned to read the same amount of data, which causes more NameNode RPCs.

- There are many empty files, which also means more files to scan and more RPCs.

- The average file size is small, which from a macro perspective also indicates a large number of small files.

- Files are produced continuously in production, so the output files of jobs need to be tuned.

- There is a lot of cold data and no cleanup mechanism, which wastes storage.

- Resource load is high and expansion is too costly; even after expansion, capacity does not last long.

- Jobs take a long time and delay product delivery.

- Jobs consume a lot of resources and occupy too many CPU cores and too much memory.

- Jobs suffer from data skew, which makes execution time very long.

To meet these challenges, we built a Flink-based cluster governance architecture. It collects resource queue information, parses the NameNode metadata file (FsImage), and collects job information from the compute engines. On top of that it builds, for the clusters, an HDFS profile, a job profile, data lineage, redundant computation analysis, an RPC profile and a resource profile.

Resource profile: we analyze multiple resource queues across multiple clusters at the same time, collecting metrics such as IO at minute granularity, so that resource usage trends of whole clusters and of individual queues can be viewed in real time.

Storage profile: we analyze distributed storage across clusters globally and along multiple dimensions in a non-intrusive way, for example where the files are, where the small files are and where the empty files are. For cold data distribution, we go down to the partition directories of each database and table.

Job profile: we collect, in real time, the jobs of different compute engines across all product lines and all clusters. Along the time, queue and job submission source dimensions, and looking at duration, resource consumption, data skew, high throughput, high RPC and so on, we find problem jobs and filter out the ones that need optimization.

Data lineage: by analyzing SQL statements at the 100,000 scale from the production environment, we draw a non-intrusive, global and highly accurate data lineage. For any period it provides table-level and account-level call frequency, table dependencies, changes in production line processing flows, the impact scope of processing failures, and insight into garbage tables.

In addition, we built profiles for user operation auditing and for metadata.

The figure above is the storage dashboard of cluster governance. It shows macro indicators such as the total number of files, the number of empty files and empty folders, the number of cold directories, the amount of cold data and the proportion of small files. We also analyze cold data, for example how much data was last accessed in a given month, which shows how cold data is distributed over time, and we show which tenants own the files smaller than 10 MB, 50 MB and 100 MB. Beyond these indicators, small files can be pinpointed down to the database, table and partition.
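The per-tenant small-file breakdown can be sketched as a simple aggregation over file metadata. The input here is a hypothetical list of `(tenant, size_in_bytes)` pairs; in practice such records would come from parsing the NameNode FsImage. The bucket boundaries follow the 10 MB, 50 MB and 100 MB thresholds mentioned above, treated here as non-overlapping ranges.

```python
from collections import Counter
from typing import Iterable, Tuple

MB = 1024 * 1024


def size_bucket(size_bytes: int) -> str:
    """Classify a file as empty, under 10/50/100 MB, or large."""
    if size_bytes == 0:
        return "empty"
    for limit in (10, 50, 100):
        if size_bytes < limit * MB:
            return "<%dMB" % limit
    return ">=100MB"


def profile(files: Iterable[Tuple[str, int]]) -> Counter:
    """Count files per (tenant, size bucket)."""
    counts: Counter = Counter()
    for tenant, size in files:
        counts[(tenant, size_bucket(size))] += 1
    return counts
```

Sorting the resulting counter by the small buckets gives a ranked list of tenants to approach about file compaction.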

The figure above shows the effect of cluster governance. The length of time during which resource load sits at 100% has dropped noticeably, the number of files has fallen by more than 60%, and RPC load has also been greatly reduced. This saves tens of millions in costs every year, resolves a long-standing resource shortage and reduces the number of machines needed for expansion.

IV. Future plans

At present we do not have a complete management platform for real-time streams, and monitoring is fairly scattered, so building a general-purpose management and monitoring platform is imperative.

Faced with growing demand, deep customization saves resources and increases the scale we can support, but its development efficiency is not ideal. For scenarios with small data volumes, we are also considering using Flink SQL to build a general-purpose platform in order to improve development efficiency.

Finally, we will continue to explore the use of Flink in data lakes.
