当前位置:网站首页>Ten minutes will take you in-depth understanding of multithreading. Multithreading on lock optimization (I)

Ten minutes will take you in-depth understanding of multithreading. Multithreading on lock optimization (I)

2022-07-03 22:15:00 【Peach blossom key God】

One 、 Some suggestions to improve lock performance

Lock competition will inevitably lead to the decline of the overall performance of the program . To minimize this side effect , Here are some suggestions for using locks , Hope to help you write a higher performance program .

1、 Reduce lock holding time

For applications that use locks for concurrency control , In the lock race , The lock holding time of a single thread is directly related to the system performance . If the thread holds the lock longer , So relatively , The more competitive the locks are . You can imagine , If required 100 Individuals fill in their own identity information , But just give them a pen , So if everyone holds a pen for a long time , Overall, it will take a long time . If there is really only one pen to share with 100 Personal use , So it's better for everyone to spend as little time as possible holding the pen , Be sure to write with a pen when you think about it , Never think about how to fill in this form with a pen . Program development is similar , We should try to reduce the occupancy time of a lock as much as possible , To reduce the possibility of mutual exclusion between threads . Take the following code snippet as an example :

public synchronized void syncMethod(){

othercode1();

mutextMethod();

othercode2();

}

stay syncMethod() In the method , Assuming that only the mutextMethod() Methods need to be synchronized , and othercode1( Methods and othercode2() Method does not need to do synchronization control . If othercode1() and othercode2() They are heavyweight methods , It will take a long time CPU Time . If the concurrency is large , Using this scheme to synchronize the whole method , Will result in a large number of waiting threads . Because a thread , Get the internal lock when entering the method , Only after all tasks have been performed , To release the lock .

A more optimized solution is , Synchronize only if necessary , This can significantly reduce the time for threads to hold locks , Improve system throughput .

public void syncMethod2 (){

othercode1();

synchronized (this){

mutextMethod ();

}

othercode2();

}

In the improved code, only for mutextMethod() The method synchronizes , The lock takes a relatively short time , So we can have a higher degree of parallelism . This kind of technology is in JDK Can also be easily found in the source code package , For example, dealing with regular expressions Pattern class .

public Matcher matcher(CharSequence input) {

if (!compiled){

synchronized (this){

if (!compiled)

compile();

}

}

Matcher m= new Matcher (this, input);

return m;

}

matcher() Method conditional lock application , Only when the expression is not compiled , Local locking . This way of handling greatly improves matcher( The efficiency and reliability of the method .

Be careful : Reducing the holding time of locks helps to reduce the possibility of lock conflicts , And then improve the concurrent ability of the system .

2、 Reduce lock granularity

Reducing lock granularity is also an effective way to reduce lock contention in multithreading . The typical use scenario of this technology is ConcurrentHashMap The realization of the class .

about HashMap Come on , The two most important methods are get() and put(). One of the most natural ideas is , To the whole HashMap Lock to get a thread safe object , But do it , Locking granularity is too large . about ConcurrentHashMap class , It further subdivides several small HashMap, Called paragraph (SEGMENT). By default , One ConcurrentHashMap Classes can be subdivided into 16 Segments .

If you need to ConcurrentHashMap Class to add a new table entry , It's not the whole thing HashMap Lock , But first according to hashcode Get which segment the table item should be stored in , Then lock the section , And finish put() Method of operation . In a multithreaded environment , If multiple threads are running at the same time put() Method of operation , As long as the added table items are not stored in the same segment , Real parallelism can be achieved between threads .

Because the default is 16 Segments , therefore , If you're lucky ,ConcurrentHashMap Class can accept 16 Two threads are inserted at the same time ( If it's all inserted in different segments ), So as to greatly improve its throughput . The following code shows put() Method operation process . The first 5~6 The line code is based on key Get the serial number of the corresponding segment . And then at the 9 OK, get the paragraph , Then insert the data into the given segment .

public v put(K key,v value) {

Segment<K,V> S;

if (value -= null)

throw new NullPointerException();

int hash = hash (key);

int j =(hash >>> segmentShift) & segmentMask;

if((s= (Segment<K, V>)UNSAFE.getObject

(segments,(j<<SSHIFT)+SBASE)) -= null)s =ensureSegment(j);

return s.put (key, hash,value, false);

}

however , Reducing lock granularity brings a new problem , That is, when the system needs to acquire a global lock , It will consume more resources . Still with ConcurrentHashMap Class, for example , Although put() The method separates the lock well , But when trying to visit ConcurrentHashMap Class , You need to get all the locks at the same time to implement it smoothly . such as ConcurrentHashMap Class size() Method , It will return ConcurrentHashMap Number of valid table entries for class , namely ConcurrentHashMap The sum of all valid entries of the class . To get this information, you need to get all the child locks , therefore , Its size() Part of the code for the method is as follows :

sum= 0;

for (int i = 0; i<segments.length; ++i)

// Lock all segments

segments[i].lock();

for (int i =0; i< segments.length; ++i)

// Count the total

sum +=segments[i].count;

for (int i =0; i<segments.length; ++i)

// Release all locks

segments[i].unlock();

You can see that when you calculate the total , First get the lock of all segments and then sum them up . however ,ConcurrentHashMap Class size() Methods don't always work like this , in fact ,size() Method will first use a lock free way to sum , If you fail, you will try this locking method . But anyway , In the case of high concurrency ConcurrentHashMap Class size() The performance of the method is still worse than that of the synchronous one HashMap.

therefore , Only when it's similar to size() Method to get global information is not frequently called , This method of reducing lock granularity can improve the throughput of the system in a real sense .

Be careful : Reducing lock granularity , It means narrowing the scope of the locked object , So as to reduce the possibility of lock conflict , And then improve the concurrent ability of the system .

3、 Replace exclusive lock with read-write separate lock

We have mentioned before , Use the read-write split lock ReadWriteLock It can improve the performance of the system . It is a special case of reducing lock granularity to use read-write split lock instead of exclusive lock . If we say that reducing the lock granularity is achieved by dividing the data structure , Then the read-write separation lock is the division of system function points .

In the situation of reading more and writing less , Read write locks are good for system performance . Because if the system only uses exclusive lock when reading and writing data , So between read and write operations 、 Read operation and read operation 、 There is no real concurrency between write operations and write operations , And need to wait for each other . The read operation itself does not affect the integrity and consistency of the data . therefore , In theory , In most cases , You can allow multiple threads to read at the same time , Read write lock is to achieve this function . Because we are in 3 Read write locks have been introduced in this chapter , So it's not going to be repeated here .

Be careful : In the case of more read and less write, using read-write lock can effectively improve the concurrency ability of the system .

4、 Lock separation

If the idea of read-write lock is further extended , It's lock separation . The read-write lock depends on the function of the read-write operation , Effective lock separation . According to the functional characteristics of the application , Using a similar idea of separation , You can also separate exclusive locks . A typical case is java.util.concurrent.LinkedBlockingQueue The implementation of the .

stay LinkedBlockingQueue In the implementation of ,take() Functions and put() Function to get data from the queue and add data to the queue . Although both functions modify the current queue , But because of LinkedBlockingQueue It's based on linked lists , So the two operations act on the front end and the end of the queue, respectively , In theory , There is no conflict between the two .

If exclusive lock is used , It is required to obtain the exclusive lock of the current queue when two operations are in progress , that take() Methods and put() Methods can't really be concurrent , At run time , They wait for each other to release lock resources . under these circumstances , Lock competition will be relatively fierce , This will affect the performance of the program in high concurrency .

therefore , stay JDK In the implementation of , Not in this way , Instead, it's separated with two different locks take() Methods and put) Method operation .

/** Lock held by take, poll, etc */

private final ReentrantLock takeLock = new ReentrantLock();//take() Methods need to hold takeLock/**ait queue for waiting takes * /

private final Condition notEmpty - takeLock.newCondition ();/** Lock held by put,offer,etc*/

private final ReentrantLock putLock = new ReentrantLock();//put() Methods need to hold putLock./**wait queue for waiting puts */

private final Condition notFull= putLock.newCondition();

The above code fragment defines takeLock and putLock, They are in take() Methods and put() Method used in . therefore ,take() Methods and put() Methods are independent of each other , There is no lock competition between them , Only need take() Methods and take() Between methods 、put() Methods and put() The methods are different from each other takeLock and putLock Compete . thus , Weakens the possibility of lock competition .

take() The method is implemented as follows , The author gives detailed comments in the code , Therefore, it will not be further explained in the text .

public E take()throws InterruptedException{

Ex;

int c - -1;

final AtomicInteger count = this.count;

final ReentrantLock takeLock = this.takeLock;takeLock. lockInterruptibly();

// Two threads cannot fetch data at the same time

try {

try {

while (count.get( =0)

// If no data is currently available , Then wait all the time

notEmpty.await ();

// wait for put() Notification of method operation

] catch (InterruptedException ie){

notEmpty.signal ();

// Notify other non interrupted threads

throw ie;

x=extract();

// Get the first data

C= count.getAndDecrement (O;

// Quantity reduction 1, Atomic manipulation , Because it will be with put ()// Functions access at the same time count. Be careful : Variable c yes //count reduce 1 The value of the former

if(c >1)

notEmpty.signal ();

// Inform others take() Method of operation

} finally {

takeLock.unlock();

// Release the lock

if(c -= capacity)

signalNotFul1(0);

// notice put() Method of operation , There is free space

return x;

function put() The implementation is as follows .

public void put(Ee) throws InterruptedException{

if (e -= null)throw new NullPointerException();int c= -1;

final ReentrantLock putLock = this.putLock;final AtomicInteger count = this.count;putLock.lockInterruptiblyO;

// You cannot have two threads running at the same time put() Method

try {

try {

while (count.get( -=capacity)

// If the queue is full

notFull.await(;

// wait for

}catch (InterruptedException ie) {

notFull.signal();

// Notify non interrupted threads

throw ie;

insert(e);

// insert data

C=count.getAndIncrement ();

// Total number of updates , Variable c yes count Add 1 The value of the former

if (c+1< capacity)

notFull.signal ();

// There is enough space , Notify other threads

}finally{

putLock.unlock();

// Release the lock

if (c ==0)

signalNotEmpty();// After successful insertion , notice take () Method to get data

}

adopt takeLock and putLock Two locks ,LinkedBlockingQueue The separation of fetching data and writing data is realized , Make them both concurrent operations in a real sense .

4、 Lock coarsening

Usually , In order to ensure the effective concurrency among multiple threads , Each thread will be required to hold the lock for as short a time as possible , That is, after the use of public resources , The lock should be released immediately . That's the only way , Other threads waiting on this lock can get resources to execute tasks as soon as possible . however , Everything has a degree , If the same lock is constantly requested 、 Synchronization and release , It also consumes valuable resources of the system , It's not good for performance optimization .

So , When a virtual machine encounters a series of operations that continuously request and release the same lock , It will integrate all the lock operations into one request of the lock , So as to reduce the number of synchronization of lock requests , This operation is called lock coarsening .

public void demoMethod () {

synchronized(lock){

//do sth.

)

// Do other work that doesn't need to be synchronized , But it can be done soon synchronized(lock){

//do sth.

The above code snippet will be integrated into the following form ;

public void demoMethod({

// Integration into a lock request synchronized (lock){

//do sth.

// Do other work that doesn't need to be synchronized , But it can be done soon

)

In the development process , We should also consciously roughen the lock in a reasonable situation , Especially when a lock is requested within a loop . Here is an example of a lock request in a loop , under these circumstances , It means that every cycle has the operation of applying for and releasing the lock . But in this case , Obviously there is no need .

for(int i=0;i<CIRCLE;i++){

synchronized (lock){

}}

therefore , A more reasonable approach would be to request a lock only once in the outer layer :

synchronized (lock){

for(int i=0;i<CIRCLE;i++){

)

)

Be careful : Performance optimization is a trade-off process for each resource point according to the real situation of the runtime . The idea of lock coarsening is the opposite of reducing lock holding time , But on different occasions , They don't have the same effect , Therefore, it is necessary to make a trade-off according to the actual situation .

Excerpt from JAVA High concurrency programming , Recommend

边栏推荐

- JS Demo calcule combien de jours il reste de l'année

- Farmersworld farmers world, no faith, how to talk about success?

- Miscellaneous things that don't miss the right business

- Morning flowers and evening flowers

- Sow of PMP

- SDNU_ ACM_ ICPC_ 2022_ Winter_ Practice_ 4th [individual]

- Conditional statements of shell programming

- Minio deployment

- Compréhension de la technologie gslb (Global Server load balance)

- Development mode and Prospect of China's IT training industry strategic planning trend report Ⓣ 2022 ~ 2028

猜你喜欢

How PHP drives mongodb

常用sql集合

Yyds dry inventory hcie security Day12: concept of supplementary package filtering and security policy

Some 5000+ likes, the development notes of a director of cosmic factory, leaked

string

The latest analysis of crane driver (limited to bridge crane) in 2022 and the test questions and analysis of crane driver (limited to bridge crane)

Cesium terrain clipping draw polygon clipping

![Intimacy communication -- [repair relationship] - use communication to heal injuries](/img/c2/f10405e3caf570dc6bd124d65b2e93.jpg)

Intimacy communication -- [repair relationship] - use communication to heal injuries

320. Energy Necklace (ring, interval DP)

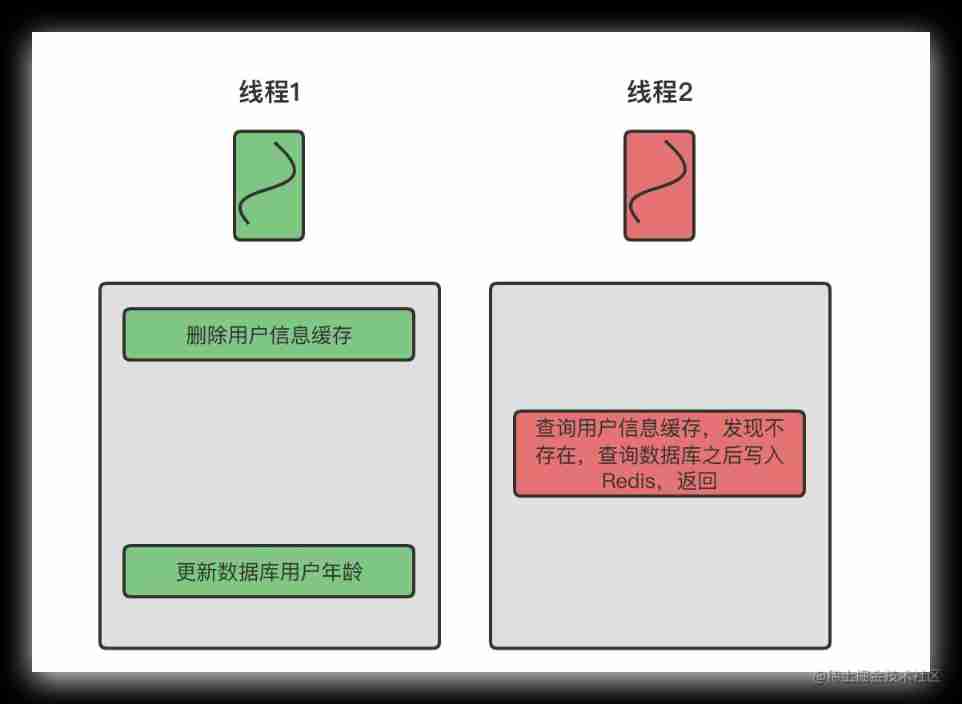

Data consistency between redis and database

随机推荐

Implementation principle of inheritance, encapsulation and polymorphism

Development mode and Prospect of China's IT training industry strategic planning trend report Ⓣ 2022 ~ 2028

Nacos common configuration

How PHP gets all method names of objects

WFC900M-Network_ Card/Qualcomm-Atheros-AR9582-2T-2R-MIMO-802.11-N-900M-high-power-Mini-PCIe-Wi-Fi-Mod

Rest参考

DR882-Qualcomm-Atheros-QCA9882-2T2R-MIMO-802.11ac-Mini-PCIe-Wi-Fi-Module-5G-high-power

(POJ - 2912) rochambau (weighted concurrent search + enumeration)

string

DR-NAS26-Qualcomm-Atheros-AR9582-2T-2R-MIMO-802.11-N-5GHz-high-power-Mini-PCIe-Wi-Fi-Module

Ansible common usage scenarios

Data consistency between redis and database

On my first day at work, this API timeout optimization put me down!

Decompile and modify the non source exe or DLL with dnspy

2022 free examination questions for safety management personnel of hazardous chemical business units and reexamination examination for safety management personnel of hazardous chemical business units

(5) User login - services and processes - History Du touch date stat CP

Redis single thread and multi thread

Unique in China! Alibaba cloud container service enters the Forrester leader quadrant

6.0 kernel driver character driver

LeetCode 1647. Minimum deletion times of unique character frequency