
Linux Redis cache avalanche, breakdown, penetration

2022-08-04 08:08:00 【1 2 3 Chasing dreams together】

1. Cache avalanche

1.1 Causes

1. The cache expiration times were poorly designed, so a large share of the cached data expires at the same moment. The requests for all of that data then hit the database at once, putting the full load on it.

2. The Redis cache instance fails and goes down, so no request can be served from the cache.

1.2 Solutions

1. Hotspot data expiring at the same time

When the avalanche is caused by a large amount of data expiring at once, the following measures can be used:

- Staggered expiration: do not give hotspot keys the same expiration time; add a random offset to each key's expiration time so the keys do not all expire together;

- Never expire hotspot data: set no expiration time, and update the cache explicitly whenever the data changes;

- Service degradation: the service handles different kinds of data differently:

  - When the business accesses non-core data, it directly returns predefined information, a null value, or an error;

  - When the business accesses core data, it may still query the cache, and on a cache miss it can read from the database.
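As a minimal sketch of staggered expiration, the helper below adds a random offset to a base TTL so that keys loaded together expire at different times. The name `ttl_with_jitter` and the 3600 s base with a 600 s jitter window are illustrative assumptions, not values from the original.

```python
import random
from typing import Optional


def ttl_with_jitter(base_seconds: int, max_jitter_seconds: int,
                    rng: Optional[random.Random] = None) -> int:
    """Return a TTL in [base_seconds, base_seconds + max_jitter_seconds]."""
    rng = rng or random.Random()
    # randint is inclusive on both ends, so the offset is 0..max_jitter_seconds.
    return base_seconds + rng.randint(0, max_jitter_seconds)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed only to make the demo repeatable
    ttls = [ttl_with_jitter(3600, 600, rng) for _ in range(1000)]
    # Instead of 1,000 keys expiring in the same second, their expirations
    # are spread over a 10-minute window.
    print(min(ttls), max(ttls), len(set(ttls)))
```

With the redis-py client, the result would be passed as the `ex` argument of `set`, e.g. `r.set(key, value, ex=ttl_with_jitter(3600, 600))`.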

2. Redis instance downtime

Option 1: Circuit breaking or request rate limiting

We monitor the load metrics of the servers hosting Redis and the database instance. If we find that Redis has gone down and database load is climbing as a result, we can trigger a circuit breaker that suspends the application's access to the cache service: the cache client returns immediately instead of sending requests to Redis, and normal access resumes once Redis has recovered.
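The circuit-breaker idea can be sketched as a small in-process wrapper around each cache call. This is a minimal illustration under assumptions, not the original author's mechanism: the class names, the consecutive-failure threshold, and the cooldown-then-probe policy are all hypothetical choices, and the class is not thread-safe.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised instead of calling the cache while the circuit is open."""


class CircuitBreaker:
    """Opens after `failure_threshold` consecutive failures, fails fast while
    open, and lets one probe call through once `cooldown_seconds` have passed.
    Not thread-safe; a real service would guard the state with a lock."""

    def __init__(self, failure_threshold: int = 5, cooldown_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock
        self.consecutive_failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None and self.clock() - self.opened_at < self.cooldown_seconds:
            raise CircuitOpenError("cache circuit is open")  # skip Redis entirely
        try:
            result = fn()
        except Exception:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.failure_threshold:
                self.opened_at = self.clock()  # open, or re-open after a failed probe
            raise
        self.consecutive_failures = 0  # success closes the circuit
        self.opened_at = None
        return result


if __name__ == "__main__":
    breaker = CircuitBreaker(failure_threshold=3, cooldown_seconds=30)

    def read_from_redis() -> str:
        raise ConnectionError("Redis instance is down")  # simulated outage

    for attempt in range(5):
        try:
            breaker.call(read_from_redis)
        except CircuitOpenError:
            print(attempt, "circuit open: degraded response returned immediately")
        except ConnectionError:
            print(attempt, "cache call failed")
```

The injectable `clock` is there only so the cooldown can be tested without sleeping.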

However, circuit breaking has a large impact on the business application, so we can also use request rate limiting to reduce that impact.

For example, when the business system is running normally, the request entry point admits at most 10,000 requests per second; the cache handles 9,000 of them and the remaining 1,000 go to the database.

Once an avalanche occurs, the number of requests reaching the database suddenly jumps to 10,000 per second. At that point we can turn on rate limiting at the front-end request entry point, admitting only 1,000 requests per second and rejecting the rest outright. This keeps a flood of concurrent requests away from the database.
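The 1,000-requests-per-second entry limit in this example can be sketched with a token bucket. The original does not say which limiting algorithm it uses; the token bucket, the `TokenBucket` name, and starting with a full bucket are assumptions made for illustration.

```python
import time
from typing import Callable


class TokenBucket:
    """Token-bucket rate limiter: refills `rate` tokens per second up to
    `capacity`; each admitted request consumes one token."""

    def __init__(self, rate: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity  # start full
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add the tokens earned since the last call, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # rejected: this request never reaches the cache or database


if __name__ == "__main__":
    limiter = TokenBucket(rate=1000, capacity=1000)
    admitted = sum(limiter.allow() for _ in range(10_000))
    print(f"admitted {admitted} of 10000 burst requests")
```

A token bucket admits a short burst up to `capacity` and then holds the long-run rate at `rate`; a fixed one-second counter would also match the text, but allows up to twice the limit across a window boundary.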

Option 2: Prevent it in advance

Build a highly available Redis cache cluster from master and replica nodes. If the master node fails, a replica can be promoted to master and continue serving the cache (Redis Sentinel, for example, can perform this failover automatically), which avoids a cache avalanche caused by a cache instance going down.

2. Cache penetration

2.1 Causes

Cache penetration means that the data a user requests exists neither in the cache nor in the database, so every request for it queries the database and comes back empty. If a malicious attacker keeps requesting data that does not exist in the system, the database comes under heavy pressure and can be overwhelmed.

2.2 Solutions

- Validation at the interface layer: user authentication and parameter validation (whether the request parameters are valid, whether the requested field can exist at all, and so on);

- Cache a null or default value: when penetration occurs, store a null value or a default value in Redis for the missing key (for example, a default inventory of 0). Later requests for that key are answered by Redis instead of the database, which keeps the database running normally. This approach has two drawbacks:

  - If many different keys are penetrated, the cached empty objects take up valuable memory. Setting a short expiration time on the empty objects limits this.

  - Until a cached empty object expires, the cache can disagree with the database: if the record is created in the database in the meantime, readers still get the null value.

- Bloom filter: quickly determine whether a piece of data might exist without querying the database. A Bloom filter can report false positives but never false negatives, so a request for a key it reports as absent can be rejected without touching the database, which reduces database load.
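The two data-side defenses above can be combined in one read path. This is a minimal sketch under stated assumptions: a Python dict stands in for both Redis and the database, the Bloom filter uses SHA-256 with double hashing, and the names `BloomFilter`, `ProductReader`, `value_ttl`, and `null_ttl` are hypothetical. In production the filter would more likely live server-side, for example via the RedisBloom module's `BF.ADD` / `BF.EXISTS` commands.

```python
import hashlib
import time
from typing import Callable, Dict, List, Optional, Tuple


class BloomFilter:
    """Answers "definitely absent" or "possibly present"."""

    def __init__(self, num_bits: int, num_hashes: int) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)  # all bits start at 0

    def _positions(self, item: str) -> List[int]:
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1
        # Double hashing: derive k bit positions from two base hashes.
        return [(h1 + i * h2) % self.num_bits for i in range(self.num_hashes)]

    def add(self, item: str) -> None:
        for p in self._positions(item):
            self.bits[p // 8] |= 1 << (p % 8)

    def might_contain(self, item: str) -> bool:
        return all(self.bits[p // 8] & (1 << (p % 8)) for p in self._positions(item))


class ProductReader:
    """Cache-aside read path guarded by a Bloom filter and null-value caching."""

    def __init__(self, db: Dict[str, int], bloom: BloomFilter,
                 value_ttl: float = 3600.0, null_ttl: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.db = db  # stand-in for the real database
        self.bloom = bloom
        self.value_ttl = value_ttl
        self.null_ttl = null_ttl
        self.clock = clock
        # Stand-in for Redis: key -> (value or None, absolute expiry time).
        self.cache: Dict[str, Tuple[Optional[int], float]] = {}
        self.db_queries = 0

    def get(self, key: str) -> Optional[int]:
        # 1. A "definitely absent" answer from the Bloom filter is final.
        if not self.bloom.might_contain(key):
            return None
        # 2. Cache hit, including a cached None that marks a known-missing key.
        entry = self.cache.get(key)
        if entry is not None and entry[1] > self.clock():
            return entry[0]
        # 3. Cache miss: query the database once and cache whatever comes back.
        self.db_queries += 1
        value = self.db.get(key)
        ttl = self.null_ttl if value is None else self.value_ttl
        self.cache[key] = (value, self.clock() + ttl)
        return value
```

The layers cover each other's gaps: a standard Bloom filter cannot delete entries, so a key whose record was removed still passes the filter, and the short-lived cached null is what stops repeated queries for it.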

3. Cache breakdown

3.1 Causes

For a hot key, a large number of requests arrive at the very moment its cache entry expires. Because the cached copy is gone, all of these requests fall through to the database, causing a sudden spike in database queries and load that puts the database at risk of being overwhelmed.

3.2 Solutions

1. Add a mutex lock. When a flood of requests arrives just after the hot key expires, only the first request acquires the lock. It queries the database, writes the result back to Redis, and releases the lock. The other requests block until the lock is released and then read the value directly from the cache.
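The mutex approach can be sketched in a single process, with a dict standing in for Redis and `threading.Lock` standing in for the lock. Across several application instances the lock would instead have to be a distributed one, commonly taken in Redis with `SET lock-key token NX PX <ms>`; that mapping, the `HotKeyCache` name, and the single lock shared by all keys are simplifying assumptions. The cache starts empty to model the moment the hot key has just expired.

```python
import threading
import time
from typing import Callable, Dict


class HotKeyCache:
    """Cache-aside read where a mutex ensures only one caller rebuilds an
    expired key; the others wait and then read the rebuilt value."""

    def __init__(self, load_from_db: Callable[[str], str]) -> None:
        self.load_from_db = load_from_db
        self.cache: Dict[str, str] = {}       # stand-in for Redis
        self.rebuild_lock = threading.Lock()  # stand-in for a distributed lock

    def get(self, key: str) -> str:
        value = self.cache.get(key)
        if value is not None:
            return value  # fast path: cache hit, no lock taken
        with self.rebuild_lock:
            # Double-check: another caller may have rebuilt the key while we waited.
            value = self.cache.get(key)
            if value is None:
                value = self.load_from_db(key)
                self.cache[key] = value
            return value


if __name__ == "__main__":
    db_calls = []

    def slow_db(key: str) -> str:
        db_calls.append(key)  # list.append is atomic in CPython
        time.sleep(0.05)      # simulate a slow query
        return f"value-of-{key}"

    cache = HotKeyCache(slow_db)
    threads = [threading.Thread(target=cache.get, args=("hot",)) for _ in range(100)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    print(f"database queried {len(db_calls)} time(s) for 100 concurrent requests")
```

The second check inside the lock is what prevents the waiting requests from each querying the database again once they get the lock. A per-key lock would avoid serializing rebuilds of unrelated keys.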

2. Make the hot data never expire, or run a background thread that keeps renewing the hot data continuously.
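The background-renewal option can be sketched as a daemon thread that periodically reloads a fixed set of hot keys, so their cached copies are never left to expire. The `HotKeyRefresher` name, the fixed key list, and the dict standing in for Redis keys stored without a TTL are illustrative assumptions.

```python
import threading
import time
from typing import Callable, Dict, Iterable


class HotKeyRefresher:
    """Keeps a fixed set of hot keys warm by reloading them from the database
    in a background thread, so the cached copies never expire."""

    def __init__(self, keys: Iterable[str], load_from_db: Callable[[str], str],
                 interval_seconds: float) -> None:
        self.keys = list(keys)
        self.load_from_db = load_from_db
        self.interval_seconds = interval_seconds
        self.cache: Dict[str, str] = {}  # stand-in for Redis keys set without a TTL
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def refresh_once(self) -> None:
        for key in self.keys:
            self.cache[key] = self.load_from_db(key)

    def _run(self) -> None:
        # wait() returns False on timeout (time to refresh) and True once stop() is called.
        while not self._stop.wait(self.interval_seconds):
            self.refresh_once()

    def start(self) -> None:
        self.refresh_once()  # warm the cache before the first request arrives
        self._thread.start()

    def stop(self) -> None:
        self._stop.set()
        self._thread.join()


if __name__ == "__main__":
    loads = []

    def load(key: str) -> str:
        loads.append(key)
        return f"{key}@load{len(loads)}"

    refresher = HotKeyRefresher(["hot:item"], load, interval_seconds=0.01)
    refresher.start()
    time.sleep(0.05)
    refresher.stop()
    print(refresher.cache, f"{len(loads)} loads")
```

The trade-off matches the text: readers never see a miss for these keys, but the cached value can lag the database by up to one refresh interval.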

边栏推荐

- 金仓数据库KingbaseES客户端编程接口指南-JDBC(10. JDBC 读写分离最佳实践)

- 从底层看 Redis 的五种数据类型

- 使用requests post请求爬取申万一级行业指数行情

- 华为设备配置VRRP与路由联动监视上行链路

- 电脑系统数据丢失了是什么原因?找回方法有哪些?

- The sorting algorithm including selection, bubble, and insertion

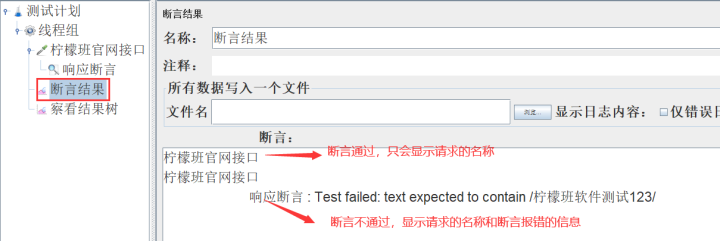

- unittest使用简述

- 高等代数_证明_两个矩阵乘积为0,则两个矩阵的秩之和小于等于n

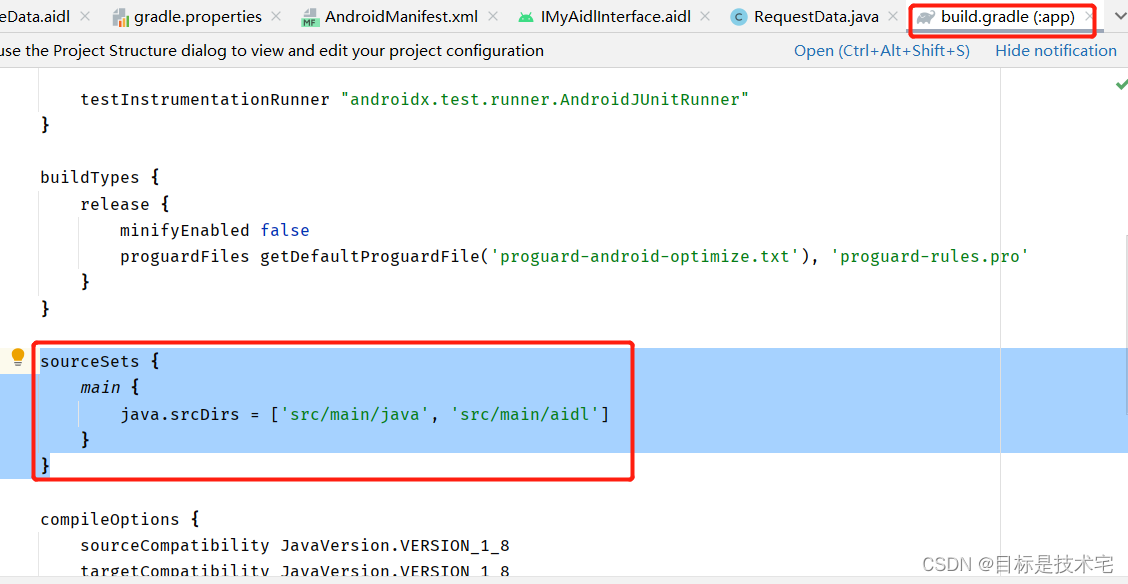

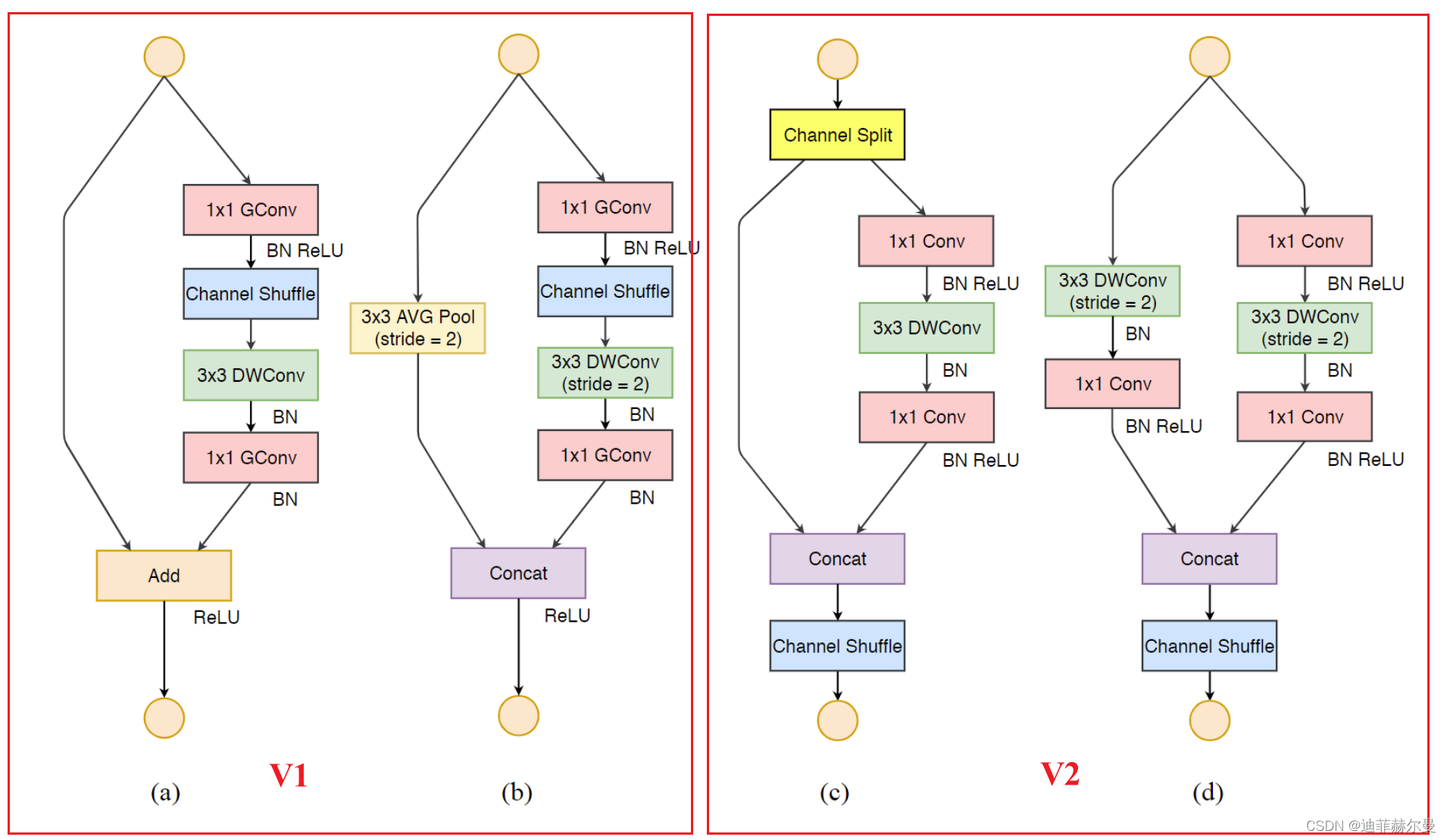

- Yolov5更换主干网络之《旷视轻量化卷积神经网络ShuffleNetv2》

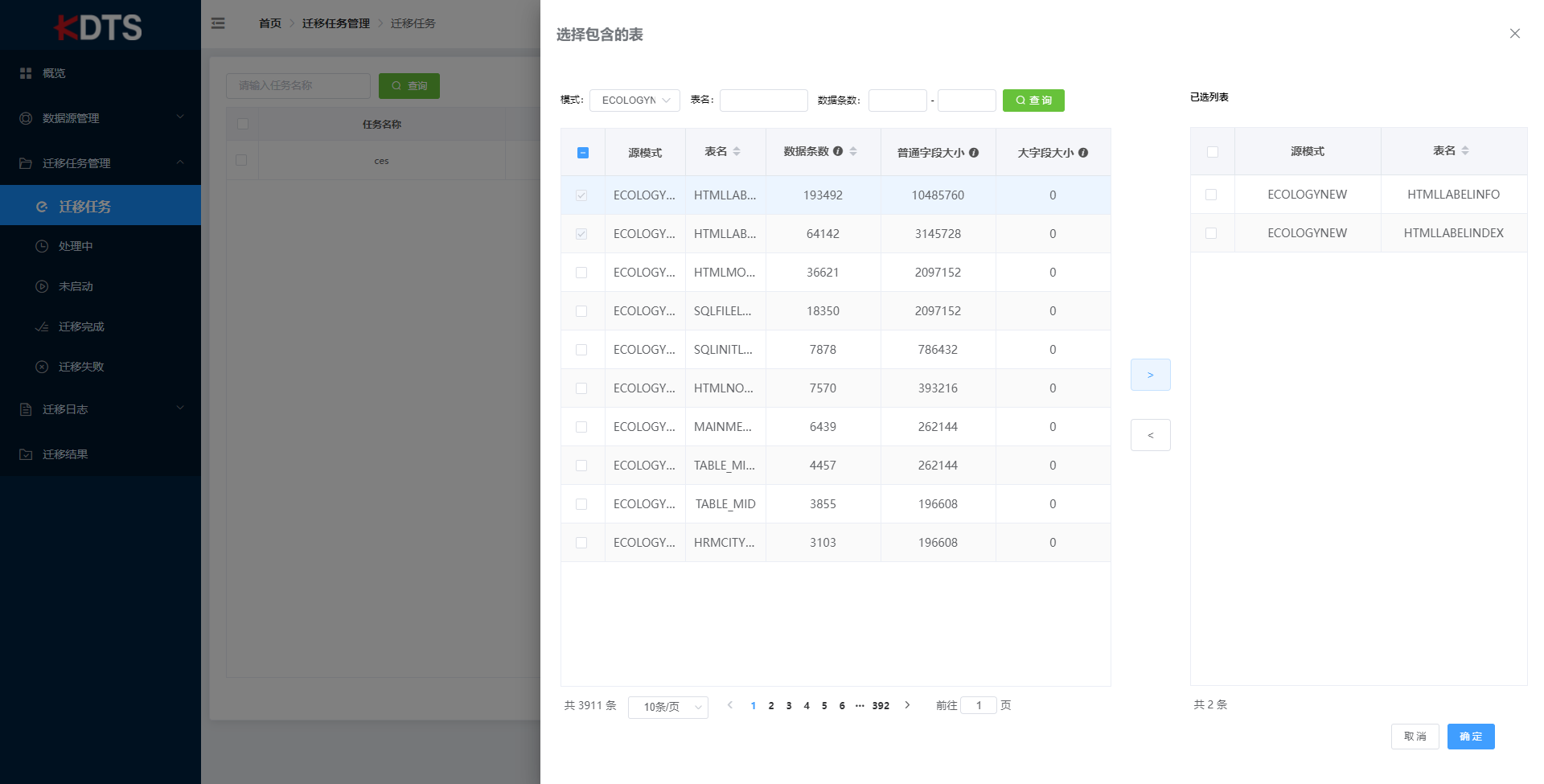

- 金仓数据库 KDTS 迁移工具使用指南 (7. 部署常见问题)

猜你喜欢

随机推荐

MySQL 8.0.29 详细安装(windows zip版)

【虚幻引擎UE】UE5实现WEB和UE通讯思路

金仓数据库 KDTS 迁移工具使用指南 (5. SHELL版使用说明)

[Paper Notes] - Low Illumination Image Enhancement - Supervised - RetinexNet - 2018-BMVC

【UE虚幻引擎】UE5三步骤实现AI漫游与对话行为

华为设备配置VRRP与NQA联动监视上行链路

【电脑录制屏】如何使用bandicam录游戏 设置图文教程

金仓数据库KingbaseES客户端编程接口指南-JDBC(5. JDBC 查询结果集处理)

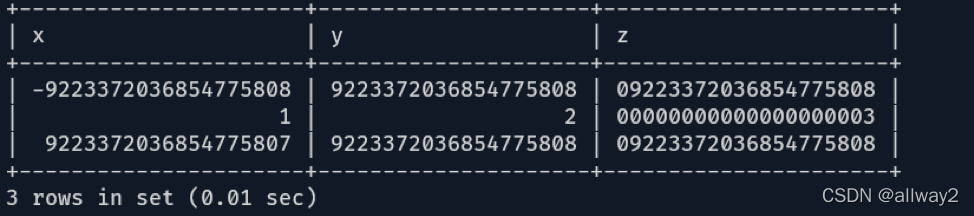

大佬们,mysql里text类型的字段,FlinkCDC需要特殊处理吗 就像处理bigint uns

js-第一个出现两次的字母

通过GBase 8c Platform安装数据库集群时报错

[想要访问若依后台]若依框架报错401请求访问:error认证失败,无法访问系统资源

[Computer recording screen] How to use bandicam to record the game setting graphic tutorial

金仓数据库的单节点如何转集群?

Redis分布式锁的应用

IDEA引入类报错:“The file size (2.59 MB) exceeds the configured limit (2.56MB)

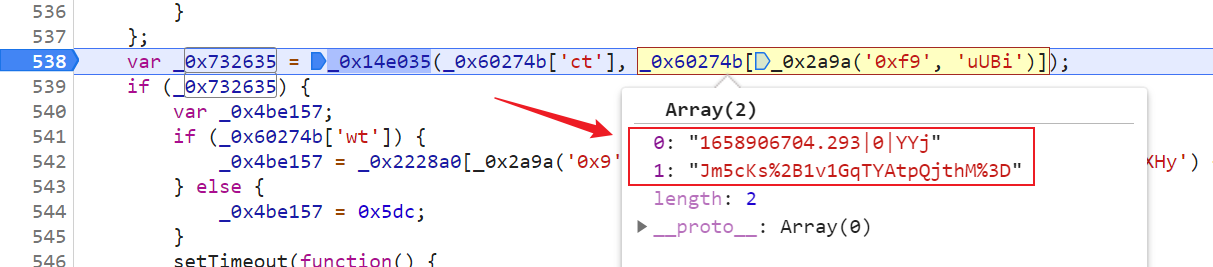

【JS 逆向百例】某网站加速乐 Cookie 混淆逆向详解

New Questions in Module B of Secondary Vocational Network Security Competition

Cross-species regulatory sequence activity prediction

使用requests post请求爬取申万一级行业指数行情