
A brief description of activation functions

2022-07-01 05:37:00 【frostjsy】

1、The role of activation functions

1. A single-layer perceptron without an activation function is a linear classifier, so it cannot solve problems that are not linearly separable.

2. Stacking several such perceptrons still gives a linear classifier, because a composition of linear (affine) maps is itself linear (affine). The combination therefore still cannot solve nonlinear problems.

3. Activation functions add nonlinearity. This increases the expressive power of a neural network and lets it solve problems that linear models cannot.
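Point 2 can be checked with a few lines of plain Python. The weights below are hypothetical values chosen only for illustration. Two stacked layers with no activation function in between produce exactly the same outputs as a single layer with W = W2·W1 and b = W2·b1 + b2, so stacking adds no expressive power until a nonlinearity is inserted.

```python
# Hypothetical weights for illustration only; any values would show the same effect.
W1 = [[1.0, -2.0], [0.5, 3.0]]
b1 = [0.1, -0.2]
W2 = [[2.0, 1.0]]
b2 = [0.3]


def matvec(W, x):
    """Matrix-vector product W @ x for lists of lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def matmul(A, B):
    """Matrix product A @ B for lists of lists."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def vadd(u, v):
    return [a + c for a, c in zip(u, v)]


def two_layers(x):
    # Layer 1 followed by layer 2, with no activation function in between
    return vadd(matvec(W2, vadd(matvec(W1, x), b1)), b2)


# Collapse both layers into one: W = W2 @ W1, b = W2 @ b1 + b2
W = matmul(W2, W1)
b = vadd(matvec(W2, b1), b2)


def one_layer(x):
    return vadd(matvec(W, x), b)


for x in ([1.0, 2.0], [-3.0, 0.5], [0.0, 0.0]):
    print(two_layers(x), one_layer(x))  # same values, up to floating-point rounding
```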

2、Common activation functions

1. sigmoid

f(x) = 1/(1 + e^(-x))

Characteristics: The output range of sigmoid is (0, 1), which makes it suitable as the output for a predicted probability. Its gradient is smooth, which avoids jumps in the output values, and the function is differentiable everywhere, so the slope of the sigmoid curve can be computed at any point.

Shortcomings: In deep neural networks, backpropagating gradients through sigmoid layers can cause exploding and vanishing gradients. Exploding gradients are unlikely, but vanishing gradients are fairly likely, because the derivative of sigmoid lies in (0, 0.25]. The output of the function is not zero-centered: it is always greater than 0, which reduces the efficiency of weight updates. Sigmoid also requires an exponential operation, which is relatively slow to compute.
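The (0, 0.25] range of the derivative follows from sigmoid'(x) = sigmoid(x)·(1 - sigmoid(x)). The pure-Python sketch below computes both; the helper names are illustrative, not from any library. It uses the usual sign-split form of sigmoid so that math.exp never overflows. During backpropagation each sigmoid layer contributes one such factor, so with small weights the gradient after n layers shrinks roughly like 0.25^n.

```python
import math


def sigmoid(x):
    # Split on the sign of x so math.exp only ever sees a non-positive argument
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x):
    # d/dx sigmoid(x) = sigmoid(x) * (1 - sigmoid(x)); the maximum, 0.25, is at x = 0
    s = sigmoid(x)
    return s * (1.0 - s)


print(sigmoid_grad(0.0))  # 0.25
print(sigmoid_grad(5.0))  # already well below 0.01
print(0.25 ** 10)         # best-case product of 10 such factors, about 9.5e-07
```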

2. tanh

f(x) = 2/(1 + e^(-2x)) - 1

Characteristics: tanh is the hyperbolic tangent function. Its curve is similar to that of sigmoid, but it has some advantages over sigmoid: its output range is (-1, 1) and the whole function is centered at 0. In the tanh graph, negative inputs are mapped strongly to negative values, and inputs near zero are mapped to values near zero.

Shortcomings: tanh solves sigmoid's problem of non-zero-centered output, but the vanishing gradient problem and the cost of the exponential operation remain.

Note: In typical binary classification problems, tanh is used in the hidden layers and sigmoid in the output layer. This is not a fixed rule, however, and should be adjusted to the specific problem.
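The tanh formula can be rewritten as tanh(x) = 2·sigmoid(2x) - 1. This sketch, with hypothetical helper names, checks that identity against Python's math.tanh and evaluates the derivative 1 - tanh(x)^2. The derivative peaks at 1 at x = 0, four times sigmoid's 0.25, but it still decays toward 0 for large |x|, which is why the vanishing gradient problem remains. The plain sigmoid formula used here is fine for moderate inputs but can overflow for very negative ones.

```python
import math


def sigmoid(x):
    # Plain formula; adequate for the moderate inputs used below
    return 1.0 / (1.0 + math.exp(-x))


def tanh_from_formula(x):
    # f(x) = 2 / (1 + e^(-2x)) - 1, i.e. 2 * sigmoid(2x) - 1
    return 2.0 * sigmoid(2.0 * x) - 1.0


def tanh_grad(x):
    # d/dx tanh(x) = 1 - tanh(x)^2, which peaks at 1 when x = 0
    t = math.tanh(x)
    return 1.0 - t * t


for x in [-3.0, -0.5, 0.0, 0.5, 3.0]:
    print(x, tanh_from_formula(x), math.tanh(x), tanh_grad(x))
```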

3. ReLU (dead ReLU)

f(x) = max(0, x)

Characteristics:

ReLU simply takes the maximum of 0 and its input. It is not differentiable at x = 0, but a subgradient can be used there. Although ReLU is simple, it is an important development of recent years and has the following advantages:

1) It solves the vanishing gradient problem (for positive inputs).

2) It is very fast to compute: it only needs to check whether the input is greater than 0.

3) It converges much faster than sigmoid and tanh.

Shortcomings:

1) The output of ReLU is not zero-centered.

2) The Dead ReLU problem: some neurons may never be activated, so their parameters are never updated. There are two main causes: (1) a very unlucky parameter initialization, which is rare; (2) a learning rate that is too high, so that parameter updates during training are too large and push the network into this state. Remedies include Xavier initialization, avoiding an overly large learning rate, or using an algorithm that adapts the learning rate automatically, such as Adagrad.
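A minimal sketch of ReLU, its subgradient, and a dead unit in plain Python. At x = 0 any value in [0, 1] is a valid subgradient; this sketch picks 0, a common convention. The weight and bias are hypothetical values chosen so that the pre-activation w·x + b is negative for every input, which makes every gradient with respect to w zero.

```python
def relu(x):
    return max(0.0, x)


def relu_subgrad(x):
    # Gradient is 1 for x > 0 and 0 for x < 0; at x = 0 any value in [0, 1]
    # is a valid subgradient, and this sketch picks 0.
    return 1.0 if x > 0 else 0.0


# Hypothetical unit whose pre-activation w * x + b is negative for all inputs below
w, b = 0.5, -10.0
inputs = [-2.0, 0.0, 1.0, 3.0, 5.0]

# dL/dw = upstream * relu'(w * x + b) * x, with the upstream gradient taken as 1.0
grads_w = [relu_subgrad(w * x + b) * x for x in inputs]
print(grads_w)  # every entry is zero, so gradient descent never moves w (or b)
```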

4. Leaky ReLU (PReLU)

f(x) = max(αx, x)

To solve the Dead ReLU problem, it was proposed to set the negative half of ReLU to αx instead of 0, usually with α = 0.01. Another intuitive idea is a parameter-based approach, Parametric ReLU (PReLU): f(x) = max(αx, x), where α is learned by backpropagation.
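The two variants differ only in where α comes from: Leaky ReLU fixes it, PReLU learns it. The sketch below uses illustrative names; the initial α = 0.25, the learning rate, and the upstream gradient are assumptions. It computes the PReLU gradient with respect to α, which is x for negative inputs and 0 otherwise, and applies one plain SGD step. Note that max(αx, x) equals the piecewise form only when 0 < α < 1.

```python
def leaky_relu(x, alpha=0.01):
    # Same as max(alpha * x, x) as long as 0 < alpha < 1
    return x if x > 0 else alpha * x


def prelu_grads(x, alpha):
    """(df/dx, df/dalpha) for PReLU f(x) = x if x > 0 else alpha * x."""
    if x > 0:
        return 1.0, 0.0
    return alpha, x


# One hypothetical SGD step on alpha, for a single negative input and an
# assumed upstream gradient dL/df = 1.0.
alpha, lr, upstream = 0.25, 0.1, 1.0
x = -2.0
_, dalpha = prelu_grads(x, alpha)
alpha -= lr * upstream * dalpha
print(leaky_relu(-1.0), alpha)
```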

5. ELU

ELU was also proposed to address the problems of ReLU. It keeps all the basic advantages of ReLU, does not suffer from the dead ReLU problem, and its mean output is close to 0, so it is approximately zero-centered.
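The text above does not give the formula; ELU is commonly defined as f(x) = x for x > 0 and α(e^x - 1) for x ≤ 0, with α = 1 as a typical default. Here is a plain-Python sketch under that definition, with illustrative helper names. The negative branch levels off at -α instead of being cut to 0, so the gradient α·e^x is small but never exactly zero, and negative outputs pull the mean toward 0.

```python
import math


def elu(x, alpha=1.0):
    # x for x > 0; alpha * (e^x - 1) for x <= 0, which levels off at -alpha
    return x if x > 0 else alpha * math.expm1(x)


def elu_grad(x, alpha=1.0):
    # 1 for x > 0; alpha * e^x (equal to elu(x) + alpha) for x <= 0,
    # which is mathematically never 0
    return 1.0 if x > 0 else alpha * math.exp(x)


xs = [-3.0, -1.0, -0.1, 0.5, 2.0]
print([round(elu(x), 4) for x in xs])
print([round(elu_grad(x), 4) for x in xs])
# Negative outputs pull the mean toward 0 compared with ReLU on the same inputs
print(sum(elu(x) for x in xs) / len(xs), sum(max(0.0, x) for x in xs) / len(xs))
```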

6. softmax

f(x)_i = e^(x_i) / Σ_j e^(x_j)

Characteristics:

Softmax differs from the ordinary max function: max outputs only the largest value, whereas softmax gives smaller values smaller probabilities instead of discarding them outright. It can be thought of as a probabilistic, or "soft", version of the argmax function.

The denominator of softmax combines all of the original output values, which means the probabilities produced by softmax depend on one another.

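Shortcomings:

The non-differentiability at zero and the zero gradient for negative inputs (which leave weights un-updated and produce dead neurons) describe ReLU, not softmax; softmax is smooth and differentiable everywhere. A practical issue with softmax is numerical: computing e^(x_i) directly overflows for large inputs, which is why implementations usually subtract max_j x_j from every input first.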
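Subtracting the largest input before exponentiating is the standard way to keep softmax numerically safe. The common factor e^(-max) cancels between numerator and denominator, so the probabilities are unchanged, but no exponent is positive. A minimal pure-Python sketch (the function name is illustrative):

```python
import math


def softmax(xs):
    # Subtracting the max does not change the result (it cancels in the ratio)
    # but keeps every exponent <= 0, so math.exp cannot overflow.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([1.0, 2.0, 3.0])
print([round(p, 4) for p in probs])  # smaller inputs keep smaller, non-zero probabilities
print(softmax([1000.0, 1001.0, 1002.0]) == probs)  # True
```

Inputs shifted by a constant give identical probabilities, so [1000, 1001, 1002] works even though math.exp(1000) on its own would raise OverflowError.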

7. Swish

f(x) = x · sigmoid(x)

Characteristics:

The design of Swish was inspired by the use of the sigmoid function for gating in LSTMs and highway networks. Swish uses the same value as both the gate and the gated input, which simplifies the gating mechanism; this is called self-gating. The advantage of self-gating is that it requires only a single scalar input, whereas ordinary gating requires multiple scalar inputs. This property lets self-gated activation functions such as Swish easily replace activation functions that take a single scalar as input (such as ReLU) without changing the hidden capacity or the number of parameters.

Swish is a relatively new activation function with the formula f(x) = x · sigmoid(x). It is unbounded above, bounded below, smooth, and non-monotonic, and all of these properties contribute to the behavior of Swish and similar activation functions. In the experiments of the Swish paper, the authors took models and hyperparameters designed for ReLU and simply replaced the ReLU activation with Swish. Even with this simple, non-optimized substitution, Swish consistently outperformed ReLU and other activation functions, and the authors expect further gains when both the model and the hyperparameters are designed for Swish. Because Swish is simple and similar to ReLU, replacing ReLU with it in any network is a one-line code change.
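The following plain-Python sketch checks the properties listed above: non-monotonic, bounded below, unbounded above. The optional beta parameter reflects the x·sigmoid(βx) form from the Swish paper; with β = 1 it is the formula above, also known as SiLU. The helper names are illustrative.

```python
import math


def sigmoid(x):
    # Sign-split form so math.exp never overflows
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def swish(x, beta=1.0):
    # f(x) = x * sigmoid(beta * x); beta = 1 gives f(x) = x * sigmoid(x)
    return x * sigmoid(beta * x)


# Non-monotonic: moving left from x = -1 to x = -5, the output rises back toward 0
print(swish(-1.0), swish(-5.0))
# Bounded below (minimum near x = -1.28) and unbounded above
print(min(swish(i / 100.0) for i in range(-1000, 1)), swish(50.0))
```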

8. Maxout

Maxout is a layer of a deep learning network, like a pooling layer or a convolution layer. A maxout layer can be used as the activation function layer of the network: each output unit takes the maximum over several linear pieces of its input.

See: "TensorFlow implementation of a maxout network" (Jianshu).
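Each maxout unit outputs the maximum of k affine functions of its input, which is why it can act as an activation layer. The sketch below evaluates a single unit in plain Python, with illustrative names and hand-picked weights. It shows that with k = 2 pieces a unit reproduces ReLU and the absolute value exactly; with learned weights and more pieces it can approximate other convex functions.

```python
def maxout_unit(x, weights, biases):
    """Output of one maxout unit: the max over k affine pieces w_j . x + b_j.

    weights: k weight vectors, each the same length as x
    biases:  k scalar biases
    """
    return max(
        sum(w * xi for w, xi in zip(w_j, x)) + b_j
        for w_j, b_j in zip(weights, biases)
    )


def relu_like(v):
    # k = 2 pieces: max(1 * v + 0, 0 * v + 0) = max(v, 0)
    return maxout_unit([v], weights=[[1.0], [0.0]], biases=[0.0, 0.0])


def abs_like(v):
    # k = 2 pieces: max(v, -v) = |v|
    return maxout_unit([v], weights=[[1.0], [-1.0]], biases=[0.0, 0.0])


for v in [-2.0, 0.0, 3.0]:
    print(v, relu_like(v), abs_like(v))
```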

9. softplus

f(x) = ln(1 + e^x)

Characteristics: The derivative of softplus is the sigmoid function. Softplus is similar to ReLU but smoother, and like ReLU it provides one-sided suppression. Its range is (0, +inf).
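Evaluating ln(1 + e^x) literally overflows for large x, so this sketch uses the algebraically identical form max(x, 0) + ln(1 + e^(-|x|)). It then checks, with a central finite difference, that the derivative of softplus is sigmoid. The step size h and the helper names are assumptions made for illustration.

```python
import math


def softplus(x):
    # ln(1 + e^x) rewritten as max(x, 0) + ln(1 + e^(-|x|)) so exp never overflows
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sigmoid(x):
    # Identity: sigmoid(x) = (1 + tanh(x / 2)) / 2, which also avoids overflow
    return 0.5 * (1.0 + math.tanh(0.5 * x))


h = 1e-5  # finite-difference step (an arbitrary small value)
for x in [-4.0, 0.0, 2.5]:
    numeric = (softplus(x + h) - softplus(x - h)) / (2.0 * h)
    print(x, numeric, sigmoid(x))  # the two columns agree to many decimal places

print(softplus(1000.0))  # 1000.0, no OverflowError
```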

10. softsign

f(x) = x/(1 + |x|)

The softsign function is another alternative to tanh. Like tanh, softsign is antisymmetric, zero-centered, and differentiable, and it returns values between -1 and 1. Its flatter curve and more slowly decaying derivative suggest that it can learn more efficiently and mitigates the vanishing gradient problem better than tanh. On the other hand, computing the derivative of softsign is more cumbersome than for tanh.
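The difference in how the two derivatives decay can be seen directly. softsign'(x) = 1/(1 + |x|)^2 falls off polynomially, whereas tanh'(x) = 1 - tanh(x)^2 falls off exponentially. Here is a small pure-Python comparison (helper names are illustrative):

```python
import math


def softsign(x):
    return x / (1.0 + abs(x))


def softsign_grad(x):
    # d/dx x / (1 + |x|) = 1 / (1 + |x|)^2: polynomial decay
    return 1.0 / (1.0 + abs(x)) ** 2


def tanh_grad(x):
    # d/dx tanh(x) = 1 - tanh(x)^2: exponential decay
    return 1.0 - math.tanh(x) ** 2


for x in [0.0, 1.0, 3.0, 10.0]:
    print(x, softsign(x), softsign_grad(x), tanh_grad(x))
```

At x = 3 the softsign derivative is 1/16 = 0.0625, while the tanh derivative is already below 0.01.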

11. GELU

f(x) = x·P(X ≤ x) = x·Φ(x)

Φ(x) is the cumulative distribution function of the normal distribution. One can simply use the standard normal distribution N(0, 1), or use a parameterized normal distribution N(μ, σ) and learn μ and σ during training.

The GELU paper (Hendrycks and Gimpel) gives the following approximation:

GELU(x) = x·Φ(x) ≈ 0.5x(1 + tanh[sqrt(2/π)(x + 0.044715x^3)])
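The exact GELU and the tanh approximation can be compared in plain Python, since the standard normal CDF can be written with the error function as Φ(x) = 0.5·(1 + erf(x/√2)). This sketch uses illustrative helper names; it only evaluates the two formulas on a grid and is not tied to any framework.

```python
import math

SQRT_2_OVER_PI = math.sqrt(2.0 / math.pi)


def gelu_exact(x):
    # x * Phi(x), with the standard normal CDF Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    return x * 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_tanh(x):
    # Tanh approximation: 0.5x(1 + tanh[sqrt(2/pi)(x + 0.044715x^3)])
    return 0.5 * x * (1.0 + math.tanh(SQRT_2_OVER_PI * (x + 0.044715 * x ** 3)))


xs = [i / 2.0 for i in range(-6, 7)]  # -3.0, -2.5, ..., 3.0
max_err = max(abs(gelu_exact(x) - gelu_tanh(x)) for x in xs)
print(max_err)
```

On [-3, 3] the two differ by well under 1e-3, which is why the approximation can usually stand in for the exact form.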

In neural network modeling, nonlinearity is an important property of the model. At the same time, to improve generalization, stochastic regularization is added, for example dropout (which randomly sets some outputs to 0 and is in effect a disguised random nonlinear activation). Stochastic regularization and nonlinear activation are usually treated as two separate things, although in fact a neuron's output is determined jointly by both.

GELU introduces the idea of stochastic regularization into the activation itself. It gives a probabilistic description of a neuron's input, which is intuitively more natural, and in the paper's experiments it performed better than both ReLU and ELU.

Advantages:

- It appears to be the current best choice in NLP, especially for Transformer models;

- It can avoid the vanishing gradient problem.

Shortcomings:

- Although it was proposed in 2016, it is still a fairly novel activation function in practical applications.

3、TensorFlow implementations of the activation functions

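import tensorflow as tf

1. sigmoid

y = tf.sigmoid(x)

2. tanh

y = tf.tanh(x)

3. ReLU

y = tf.nn.relu(x)

4. Leaky ReLU (PReLU)

y = tf.nn.leaky_relu(x, alpha=0.01)  # TensorFlow's default alpha is 0.2
y = tf.keras.layers.PReLU()(x)  # PReLU: alpha is a trainable weight of the layer

5. ELU

y = tf.nn.elu(x)

6. softmax

y = tf.nn.softmax(x)

7. Swish

y = tf.nn.swish(x)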

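8. Maxout

def maxout(x, k, m):
    # Maxout layer: m output units, each the max over k affine pieces.
    # Note: this sketch creates new tf.Variables every time it is called;
    # wrap it in a tf.keras.layers.Layer if the weights must be reused and trained.
    d = x.get_shape().as_list()[-1]  # input feature dimension
    W = tf.Variable(tf.random.normal(shape=[d, m, k]))
    b = tf.Variable(tf.random.normal(shape=[m, k]))
    z = tf.tensordot(x, W, axes=1) + b  # shape [..., m, k]
    z = tf.reduce_max(z, axis=-1)  # max over the k pieces -> [..., m]
    return z

9. softplus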

y=tf.nn.softplus(x)

10. softsign

y=tf.nn.softsign(x)

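11. GELU

def gelu(input_tensor):
    # Exact GELU: x * Phi(x), where Phi is the standard normal CDF.
    # (TensorFlow 2.4+ also provides tf.nn.gelu.)
    cdf = 0.5 * (1.0 + tf.math.erf(input_tensor / tf.sqrt(2.0)))
    return input_tensor * cdf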


边栏推荐

- Actual combat: basic use of Redux

- Tar command

- Leetcode top 100 question 2 Add two numbers

- 3D建模與處理軟件簡介 劉利剛 中國科技大學

- Set set detailed explanation

- Use and principle of Park unpark

- 轻松上手Fluentd,结合 Rainbond 插件市场,日志收集更快捷

- 【问题思考总结】为什么寄存器清零是在用户态进行的?

- vsCode函数注解/文件头部注解快捷键

- Ssm+mysql second-hand trading website (thesis + source code access link)

猜你喜欢

Application of industrial conductive slip ring

CockroachDB: The Resilient Geo-Distributed SQL Database 论文阅读笔记

Leetcode top 100 questions 1 Sum of two numbers

Explanation of characteristics of hydraulic slip ring

数字金额加逗号;js给数字加三位一逗号间隔的两种方法;js数据格式化

Fluentd is easy to use. Combined with the rainbow plug-in market, log collection is faster

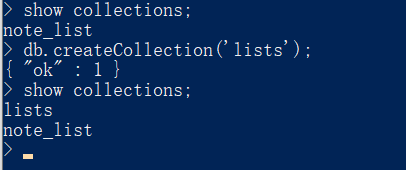

Chapitre d'apprentissage mongodb: Introduction à la première leçon après l'installation

LevelDB源码分析之LRU Cache

mysql 将毫秒数转为时间字符串

Precautions for use of conductive slip ring

随机推荐

Explanation of characteristics of hydraulic slip ring

json数据比较器

What things you didn't understand when you were a child and didn't understand until you grew up?

0xc000007b应用程序无法正常启动解决方案(亲测有效)

QT waiting box production

Mongodb學習篇:安裝後的入門第一課

ssm+mysql二手交易网站(论文+源码获取链接)

CockroachDB 分布式事务源码分析之 TxnCoordSender

Use and principle of Park unpark

And search: the suspects (find the number of people related to the nth person)

Practice of combining rook CEPH and rainbow, a cloud native storage solution

Set集合详细讲解

Use and principle of AQS related implementation classes

Ssgssrcsr differences

tese_Time_2h

Causes of short circuit of conductive slip ring and Countermeasures

Usage and principle of synchronized

Thread process foundation of JUC

QT等待框制作

Rainbow combines neuvector to practice container safety management