AI accelerators explained: why is this the golden age of AI accelerators?


2022-07-04 03:06:00 [Computer Vision Research Institute]

Selected from Medium

Author: Adi Fuchs

Compiled by Synced (Heart of the Machine)

Synced editorial team

In the previous article, former Apple engineer and Princeton PhD Adi Fuchs explained the motivation behind the birth of AI accelerators. In this article, we follow the author's line of thought to review the entire history of processor development and see why AI accelerators have become the focus of the industry.


This is the second post in a series, and here we reach the crux of the whole series. When pitching a new company or project, venture capitalists and executives often ask a basic question: "Why now?"

To answer this question, we need to briefly review the history of processors and look at the major changes that have taken place in the field in recent years.

What is a processor?

Simply put, the processor is the part of a computer system responsible for the actual number crunching. It receives user input data (represented as numbers) and generates new data according to the user's request, that is, it performs the set of arithmetic operations the user wants. The processor uses its arithmetic units to produce the results; this is what we mean by running a program.
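To make "running a program" concrete, here is a minimal sketch in Python of a toy processor: a few named registers holding the user's numbers and an arithmetic unit that applies one operation per instruction. The `(op, dst, a, b)` instruction format, the register names and the `run` function are all hypothetical; real instruction sets are far richer.

```python
# A toy "processor": named registers plus an arithmetic unit (ALU) that executes
# a program, i.e. a list of (op, destination, source_a, source_b) instructions.
# The instruction format and op names are hypothetical, chosen for illustration.
import operator

ALU = {
    "add": operator.add,
    "sub": operator.sub,
    "mul": operator.mul,
}

def run(program, registers):
    """Execute each instruction in order and return the final register file."""
    regs = dict(registers)  # copy so the caller's input data is left untouched
    for op, dst, a, b in program:
        regs[dst] = ALU[op](regs[a], regs[b])
    return regs

# Compute (x + y) * x for user inputs x = 3, y = 4.
program = [
    ("add", "t0", "x", "y"),    # t0 = x + y
    ("mul", "out", "t0", "x"),  # out = t0 * x
]
result = run(program, {"x": 3, "y": 4})
print(result["out"])  # 21
```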

In the 1980s, processors were commercialized in personal computers. They gradually became an indispensable part of daily life, found in laptops, mobile phones, and the global computing infrastructure that connects billions of users to the cloud and data centers. With the growing popularity of compute-intensive applications and the emergence of huge amounts of new user data, contemporary computing systems must meet an ever-growing demand for processing power. Therefore, we always need better processors. For a long time, "better" meant "faster", but today it can also mean "more efficient": taking the same time while using less energy and leaving a smaller carbon footprint.

Processor evolution

The evolution of computer systems is one of humanity's most remarkable engineering achievements. It took us about 50 years to get here: the smartphone in an ordinary person's pocket has about a million times the computing power of the room-sized computers used in the Apollo moon landing missions. The key to this evolution lies in the semiconductor industry and in how it improved the speed, power, and cost of processors.

Intel 4004: the first commercial microprocessor, released in 1971.

Processors are built from electronic components called "transistors". Transistors are logic switches that serve as the building blocks for everything from primitive logic (such as AND, OR, and NOT) to complex arithmetic (floating-point addition, sine functions) and memory (such as ROM and DRAM). Over the years, transistors have kept shrinking.

In 1965, Gordon Moore observed that the number of transistors in an integrated circuit doubles every year (a rate later revised to every 18-24 months). He predicted that this trend would continue for at least ten years. Although some argue that it is less a "law" than an "industry trend", it did hold for about 50 years, making it one of the longest-lasting man-made trends in history.
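The compounding behind this trend can be written as N(t) = N0 · 2^(t/T), where T is the doubling period. The Python sketch below assumes a starting point of roughly 2,300 transistors (the figure commonly cited for the Intel 4004) and compares 18- and 24-month doubling periods over 50 years; the function name and inputs are illustrative, not data from the article.

```python
# Project transistor counts under Moore's-law-style doubling.
# Assumption (not from the article): start from the Intel 4004's roughly
# 2,300 transistors and compare two doubling periods over 50 years.

def projected_transistors(n0, years, doubling_months):
    """N(t) = N0 * 2 ** (t / T), with t and T both expressed in months."""
    return n0 * 2 ** (years * 12 / doubling_months)

for months in (18, 24):
    n = projected_transistors(2_300, 50, months)
    print(f"doubling every {months} months -> {n:.3g} transistors after 50 years")
```

Over five decades, the six-month difference in doubling period alone changes the projection by a factor of about 300.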

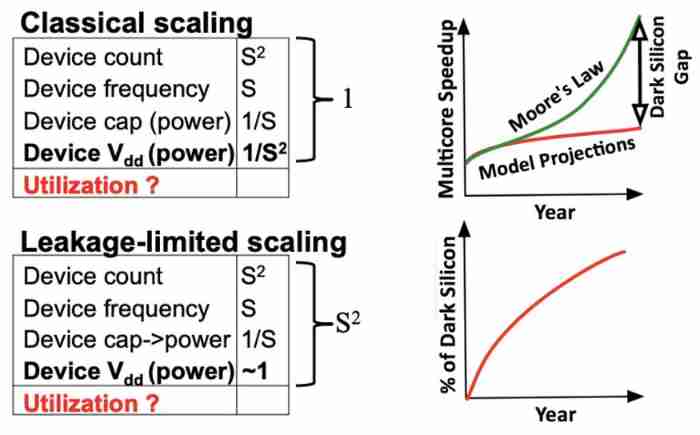

The electrical characteristics of transistor scaling.

Besides Moore's law, there is a less famous but equally important law called "Dennard scaling", proposed by Robert Dennard in 1974. While Moore's law predicted that transistors would shrink year after year, Dennard asked: "Apart from fitting more transistors on a single chip, what are the practical benefits of having smaller transistors?" His observation was that when a transistor shrinks by a factor of k, its operating voltage and current shrink as well. In addition, because electrons travel shorter distances, the resulting transistor is k times faster, and, most importantly, its power drops to 1/k^2. So, all in all, we can pack k^2 times as many transistors into the same area, our logic runs about k times faster, and yet the chip's power consumption does not increase.
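The scaling argument above can be checked with the standard dynamic-power model $P = C V^2 f$ (an assumption of this sketch; the article does not state a formula, and leakage is ignored). Shrinking dimensions by $k$ scales capacitance $C$ by $1/k$, voltage $V$ by $1/k$, frequency $f$ by $k$, and the area per transistor $A$ by $1/k^2$:

```latex
\begin{aligned}
P' &= C' V'^2 f' = \frac{C}{k}\cdot\frac{V^2}{k^2}\cdot k f = \frac{C V^2 f}{k^2} = \frac{P}{k^2},\\
\frac{P'}{A'} &= \frac{P/k^2}{A/k^2} = \frac{P}{A},\\
P'_{\text{chip}} &= k^2 \cdot P' = k^2 \cdot \frac{P}{k^2} = P.
\end{aligned}
```

Power density is unchanged, so the same chip area holds $k^2$ times as many transistors, each about $k$ times faster, at the same total power.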

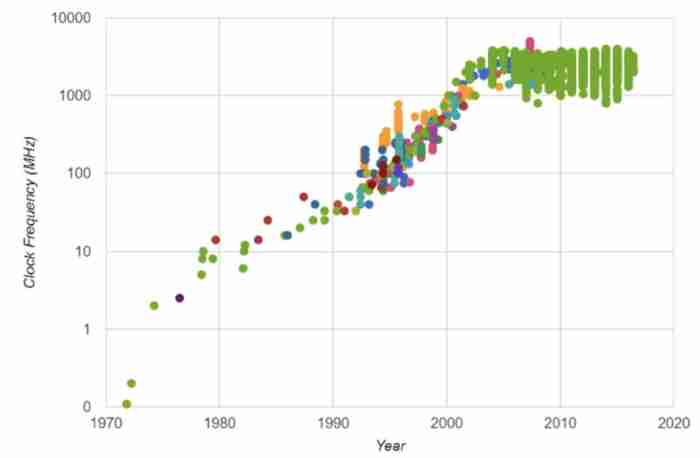

The first stage of processor development: the frequency era (1970-2000)

Evolution of microprocessor clock frequency.

In the early days, the microprocessor industry focused mainly on CPUs, because CPUs were the workhorses of computer systems at the time. Microprocessor vendors made full use of the scaling laws. Specifically, they aimed to raise CPU frequency, because faster transistors let the processor perform the same computations at a higher rate (higher frequency = more computations per second). This is a somewhat simplified view; processors went through many architectural innovations, but in the end, frequency contributed a great deal to early performance gains: from the Intel 4004's 0.5 MHz, to the 486's 50 MHz, the Pentium's 500 MHz, and the Pentium 4 series' 3-4 GHz.
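One common way to see why frequency mattered so much is the textbook relation CPU time = instructions × CPI / frequency, where CPI is the average number of clock cycles per instruction. The sketch below holds a hypothetical workload of 1e9 instructions and a CPI of 1 fixed (real CPI differed greatly between these chips) so that only the clock rates quoted above vary; 3.5 GHz stands in for the Pentium 4's 3-4 GHz range.

```python
# CPU time = instruction count * cycles per instruction (CPI) / clock frequency.
# The 1e9-instruction workload and the CPI of 1 are assumptions for
# illustration; the frequencies are the ones quoted in the text.

def cpu_time_seconds(instructions, cpi, freq_hz):
    return instructions * cpi / freq_hz

workload = 1_000_000_000
for name, freq in [("Intel 4004", 0.5e6), ("486", 50e6),
                   ("Pentium", 500e6), ("Pentium 4", 3.5e9)]:
    print(f"{name:>10}: {cpu_time_seconds(workload, 1.0, freq):,.2f} s")
```

Holding everything but frequency constant isolates the effect the text describes: each tenfold increase in clock rate cuts run time tenfold.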

Evolution of power density.

Around 2000, Dennard scaling began to break down. Specifically, as frequency increased, voltage stopped falling at the same rate, so power density began to rise. Had this trend continued, chip heating would have become impossible to ignore, and sufficiently powerful cooling solutions were not mature. Vendors could therefore no longer rely on raising CPU frequency to gain performance and had to find other ways.
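Extending the Dennard calculation shows why a stalled voltage is a problem. Under the same assumed dynamic-power model P = C·V²·f, the hypothetical `power_density_ratio` function below compares one scaling step with and without voltage scaling; k = 1.4 roughly corresponds to one classic process node (about a 0.7x linear shrink).

```python
# Relative power density after one scaling step of factor k, using the
# dynamic-power model P = C * V**2 * f (an assumption; leakage ignored).
# voltage_scales=True is ideal Dennard scaling; False models the post-2000
# situation where supply voltage stays roughly flat while frequency rises.

def power_density_ratio(k, voltage_scales=True):
    c = 1 / k                         # capacitance shrinks with dimensions
    v = 1 / k if voltage_scales else 1.0
    f = k                             # transistors switch k times faster
    area = 1 / k**2                   # each transistor occupies 1/k^2 the area
    return (c * v**2 * f) / area      # power per unit area, relative to before

for k in (1.4, 2.0):
    print(k, power_density_ratio(k), power_density_ratio(k, voltage_scales=False))
```

With ideal scaling the ratio stays at 1; with flat voltage it grows as k^2, roughly doubling per node, which is the heat problem described above.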

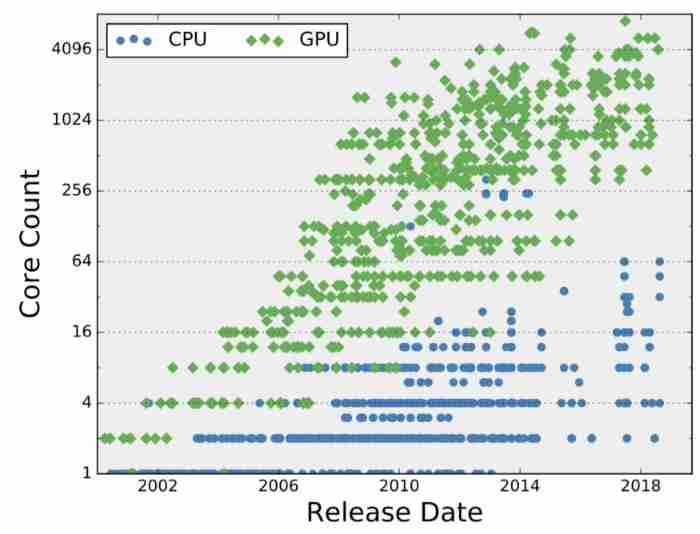

The second stage of processor development: the multi-core era (2000 to the mid-2010s)

Stagnant CPU frequency made it very hard to speed up a single application, because a single application is written as a sequential stream of instructions. However, as Moore's law stated, the number of transistors on our chips still doubled every 18 months. So this time, the solution was not to make a single processor faster, but to divide the chip into multiple identical processing cores, each executing its own instruction stream.
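Splitting the chip into cores does not automatically speed up one sequential program. Amdahl's law, a standard result not discussed in the original article, gives the best-case speedup when a fraction p of a program's run time is parallelized across n cores: 1 / ((1 - p) + p / n). A short Python sketch:

```python
# Amdahl's law: if a fraction p of a program's run time can be parallelized
# across n cores, the best-case overall speedup is 1 / ((1 - p) + p / n).

def amdahl_speedup(p, n):
    return 1.0 / ((1.0 - p) + p / n)

for p in (0.5, 0.9, 0.99):
    print(p, [round(amdahl_speedup(p, n), 2) for n in (2, 8, 64)])
```

Even with 64 cores, a program that is only half parallelizable stays below a 2x speedup, because its sequential half still runs on one core.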

Evolution of CPU and GPU core counts.

For CPUs, having multiple cores is natural, because they already execute many independent tasks concurrently, such as your web browser, word processor, and music player (more precisely, the operating system does a good job of creating this abstraction of concurrent execution). So one application can run on one core while another runs on a different core. This way, a multi-core chip can complete more tasks in a given amount of time. However, to speed up a single program, programmers need to parallelize it, which means breaking the original program's instruction stream into multiple "sub-streams", or "threads". Simply put, a set of threads can run concurrently on multiple cores in any order, without any thread interfering with the execution of another. This practice is called "multithreaded programming", and it is the most common way for a single program to gain performance from multi-core execution.
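The decomposition into independent sub-streams can be sketched with Python's standard `concurrent.futures.ThreadPoolExecutor`. The workload (a sum of squares) and the chunking scheme are assumptions for illustration. In CPython, the global interpreter lock keeps these threads from executing Python code truly in parallel, so the sketch shows the structure of multithreaded code, not a real speedup.

```python
# Decompose one instruction stream (summing squares over a range) into
# independent sub-streams ("threads"), each working on its own chunk.
# Note: in CPython the global interpreter lock prevents these threads from
# running Python bytecode truly in parallel; this shows the decomposition only.
from concurrent.futures import ThreadPoolExecutor

def sum_of_squares(lo, hi):
    return sum(i * i for i in range(lo, hi))

def parallel_sum_of_squares(n, num_threads=4):
    if n <= 0:
        return 0
    step = (n + num_threads - 1) // num_threads  # ceiling division
    chunks = [(lo, min(lo + step, n)) for lo in range(0, n, step)]
    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        # Chunks don't overlap, so threads never touch each other's data and
        # may finish in any order; the partial results are combined at the end.
        partials = pool.map(lambda c: sum_of_squares(*c), chunks)
        return sum(partials)

print(parallel_sum_of_squares(1_000_000) == sum_of_squares(0, 1_000_000))  # True
```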

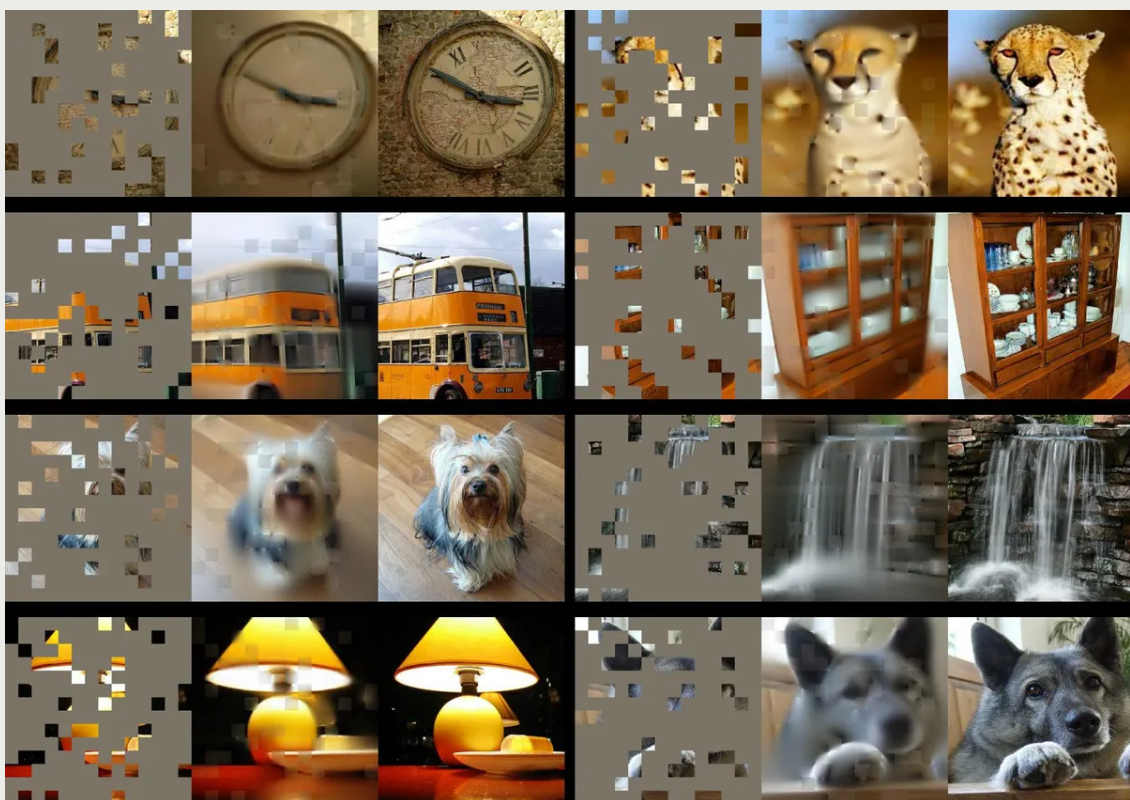

A common form of multi-core execution is found in GPUs. While a CPU consists of a small number of fast, complex cores, a GPU relies on a large number of simpler cores. Traditionally, GPUs focused on graphics applications, because graphics and images (such as the frames of a video) consist of thousands of pixels that can be processed independently through a series of simple, predetermined computations. Conceptually, each pixel can be assigned a thread that runs a simple "mini-program" to compute its behavior (such as its color and brightness level). This high pixel-level parallelism made it natural to build thousands of processing cores. Therefore, in this round of processor evolution, instead of speeding up individual tasks, CPU and GPU vendors used Moore's law to increase the number of cores, since they could still obtain and use more transistors on a single chip.
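The per-pixel "mini-program" idea can be sketched in Python as a function that reads one input pixel and produces one output pixel. The 8-bit grayscale format, the `gain`/`bias` brightness formula and the `launch` helper are hypothetical; on a real GPU the kernel would be written in a GPU language and each call would run on its own hardware thread.

```python
# A GPU-style "mini-program": one function computes one output pixel from
# one input pixel and nothing else, so every call is independent.
# The brightness formula and 8-bit grayscale pixels are assumptions.

def shade(pixel, gain=1.2, bias=10):
    """Per-pixel kernel: adjust brightness and clamp to the 0-255 range."""
    return max(0, min(255, int(pixel * gain + bias)))

def launch(kernel, image):
    # A GPU would assign one thread per pixel and run them concurrently;
    # here we simply apply the kernel to every pixel. Since no call depends
    # on another, the order of evaluation does not matter.
    return [[kernel(p) for p in row] for row in image]

image = [[0, 100, 200],
         [50, 150, 250]]
print(launch(shade, image))
```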

Unfortunately, around 2010, things became more complicated: Dennard scaling came to an end, because transistor voltages approached their physical limits and could not keep shrinking. Whereas it had previously been possible to increase the number of transistors within the same power budget, doubling the number of transistors now meant doubling the power consumption. The demise of Dennard scaling means that contemporary chips run into the "utilization wall". Beyond that point, it does not matter how many transistors we have on the chip: as long as there is a power limit (set by the chip's cooling capacity), we cannot use more than a given fraction of the chip's transistors at once. The rest of the chip must be powered off, a phenomenon known as "dark silicon".
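A back-of-the-envelope sketch of the utilization wall: assume each generation doubles the transistor count and the chip power budget stays fixed. Under Dennard scaling, power per transistor also halves, so the whole chip stays usable; without it, the usable fraction halves every generation. The starting point (a fully usable chip) and the `active_fraction` function are assumptions for illustration.

```python
# Fraction of a chip that can be powered at once under a fixed power budget.
# Each generation multiplies the transistor count by transistor_growth; power
# per transistor falls by power_per_transistor_drop (2.0 under ideal Dennard
# scaling, about 1.0 after it ended). Generation 0 is assumed fully usable.

def active_fraction(generations, transistor_growth=2.0, power_per_transistor_drop=1.0):
    """Usable fraction of transistors within the original power budget."""
    total_power_demand = (transistor_growth / power_per_transistor_drop) ** generations
    return min(1.0, 1.0 / total_power_demand)

for g in range(5):
    usable = active_fraction(g)
    print(f"generation {g}: {usable:.1%} usable, {1 - usable:.1%} dark")
```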

The third stage of processor development: the accelerator era (2010s to present)

Dark silicon is essentially a preview of "the end of Moore's law", and times have become challenging for processor makers. On one hand, computing demand is growing rapidly: smartphones have become ubiquitous and carry powerful computing capabilities, cloud servers must handle more and more services, and, "worst of all", artificial intelligence has returned to center stage and devours computing resources at an astonishing rate. On the other hand, in this unfortunate era, dark silicon has become an obstacle to scaling transistor-based chips. So just when we need more processing power than ever, delivering it has become harder than ever.

Compute required to train state-of-the-art (SOTA) AI models.

As new generations of chips became bound by dark silicon, the computer industry turned its focus to hardware accelerators. The idea is: if you cannot add more transistors, make better use of the ones you have. How? The answer is specialization.

Conventional CPUs are designed to be general-purpose. They use the same hardware structures to run the code of all our applications (operating system, word processor, calculator, web browser, email client, media player, and so on). These hardware structures must support a large number of logical operations and capture many possible patterns and program-induced behaviors. This yields great hardware usability but rather poor efficiency. If we focus only on certain applications, we can narrow the problem space and remove a lot of structural redundancy from the chip.

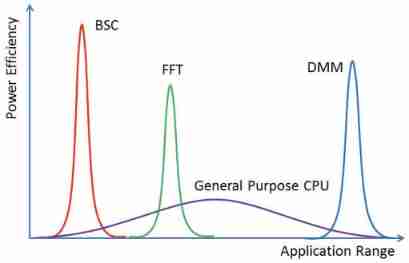

General-purpose CPU vs. application-specific accelerators.

Accelerators are chips designed specifically for particular applications or domains. In other words, they will not run every application (for example, they do not run an operating system); instead, they target a very narrow range at the hardware design level, because 1) their hardware structures only need to serve the operations of specific tasks, and 2) the interface between hardware and software is simpler. Specifically, since an accelerator operates within a given domain, accelerator programs can be more compact, because they encode less information.

For example, suppose you want to open a restaurant, but your floor space and electricity budget are limited. You now have to decide what the restaurant will serve: pizza, vegetarian food, burgers, and sushi all together (a), or just pizza (b)?

If you choose (a), your restaurant can indeed satisfy many customers with different tastes, but your chefs have to cook many kinds of dishes, and they will not be good at all of them. In addition, you may need several refrigerators to store different ingredients, and you must keep close track of which ingredients have run out and which have gone bad; ingredients may also get mixed up, which greatly increases management costs.

But if you choose (b), you can hire a top pizza expert, stock a small set of ingredients, and buy a custom oven for making pizza. Your kitchen will be very tidy and efficient: one table for making dough, one for sauce and cheese, and one for toppings. At the same time, this approach carries risks: what if nobody wants pizza tomorrow? What if your custom oven cannot make the pizza your customers want? You have spent a lot of money building this specialized kitchen, and now you face a dilemma: if you do not remodel the kitchen, you may have to close the restaurant; remodeling will cost a lot of money, and by the time it is done, customers' tastes may have changed again.

Back to the processor world: by analogy, the CPU corresponds to option (a), a domain-specific accelerator to option (b), and the restaurant's size limit to the silicon budget. How would you design your chip? Obviously, reality is not so polarized; instead there is a spectrum of intermediate designs, along which versatility is traded, to a greater or lesser degree, for efficiency. Early hardware accelerators were designed for specific domains, such as digital signal processing and network processing, or served as coprocessors assisting the main CPU.

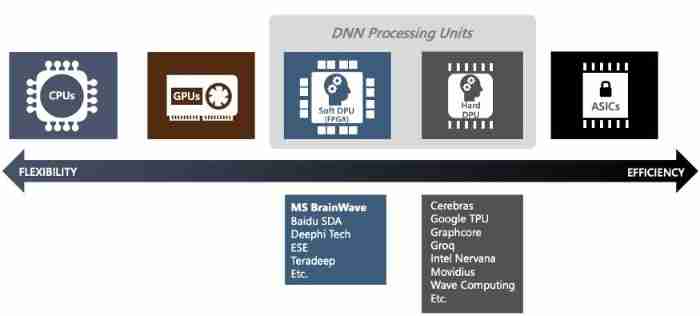

The first major shift from CPUs toward accelerated applications was the GPU. A CPU has a few complex processing cores, each using various techniques, such as branch predictors and out-of-order execution engines, to speed up single-threaded work as much as possible. The GPU is structured differently: it consists of many simple cores with simple control flow that run simple programs. GPUs were originally used for graphics applications such as computer games, because these applications contain images made of thousands or millions of pixels, each of which can be computed independently and in parallel. A GPU program usually consists of a few functions called "kernels". Each kernel contains a series of simple computations and is executed thousands of times on different pieces of data (such as a single pixel or a patch of several pixels). These properties made graphics applications a natural target for hardware acceleration. They behave simply, so there is no need for complex instruction control flow in the form of branch predictors; and they require only a few kinds of operations, so there is no need for complex arithmetic units (such as units that compute sine functions or 64-bit floating-point division). People later found that these properties are not unique to graphics, and the applicability of GPUs extended to other domains such as linear algebra and scientific applications. Today, accelerated computing is no longer limited to GPUs. From fully programmable but inefficient CPUs to efficient but minimally programmable ASICs, the concept of accelerated computing is everywhere.

Processing alternatives for deep neural networks. Source: Microsoft.

Today, as more and more applications with these "good" properties become targets for acceleration, accelerators are attracting growing attention: video codecs, database processors, cryptocurrency miners, molecular dynamics, and of course artificial intelligence.

What makes AI a target for acceleration?

Commercial feasibility

Designing a chip is laborious and expensive: you need to hire industry experts, use expensive tools for chip design and verification, develop prototypes, and fabricate the chips. If you want to use a cutting-edge process (such as today's 5nm CMOS), the cost runs to tens of millions of dollars, whether the project succeeds or fails. Fortunately, for AI, spending money is not a problem. The potential benefits of AI are enormous, and AI platforms are expected to generate trillions of dollars in revenue in the near future. If your idea is good enough, you should be able to find funding for it fairly easily.

AI is an "accelerable" application domain

AI programs have all the properties that make them suitable for hardware acceleration. First and foremost, they are massively parallel: most of the computation is spent on tensor operations such as convolution or self-attention operators. Where possible, you can also increase the batch size so that the hardware processes multiple samples at once, improving hardware utilization and further increasing parallelism. Parallelism is the main factor that lets hardware processors run fast. Second, AI computation is limited to a few types of operations: mainly the multiplications and additions of linear algebra kernels, a few nonlinear operators such as ReLU, which mimics synaptic activation, and the exponential operations of softmax-based classification. This narrow problem space lets us simplify the computing hardware and focus on a small set of operators.
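The handful of operation types listed above can be sketched in plain Python: matrix multiplication built from multiply-accumulates, ReLU, and softmax. The two-layer network, its weights and the batch of two samples are made-up values for illustration; real frameworks run these operators as optimized tensor kernels.

```python
# The few operation types the text lists: multiply-accumulate (the core of
# matrix multiplication and convolution), ReLU, and the exponentials inside
# softmax. Shapes and values are hypothetical.
import math

def matmul(a, b):
    """(batch x n) @ (n x m): every output element is a chain of multiply-adds."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def relu(x):
    return [[max(0.0, v) for v in row] for row in x]

def softmax(x):
    out = []
    for row in x:
        m = max(row)  # subtract the row max for numerical stability
        exps = [math.exp(v - m) for v in row]
        s = sum(exps)
        out.append([e / s for e in exps])
    return out

# A batch of 2 samples: rows are processed independently, so a larger batch
# simply hands the hardware more parallel work of exactly the same kind.
batch = [[1.0, -2.0], [0.5, 0.5]]
w1 = [[1.0, -1.0, 0.5], [0.0, 1.0, -1.0]]
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
probs = softmax(matmul(relu(matmul(batch, w1)), w2))
print(probs)
```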

Finally, because AI programs can be expressed as computation graphs, their control flow is known at compile time, much like a for loop with a known number of iterations. Their communication and data-reuse patterns are also fairly limited, so we can characterize which network topologies are needed to move data between compute units, and use software-managed scratchpad memories to control how data is stored and laid out.
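Because the graph and its tensor shapes are fixed before execution, work can be planned ahead of time. The sketch below assumes a hypothetical three-layer fully connected network and a batch size of 32, and computes the number of multiply-accumulates (MACs) and the size of each output buffer without running the model; a real accelerator compiler plans far more, such as tiling and data placement.

```python
# Because the graph and tensor shapes are fixed before execution, a compiler
# can plan work ahead of time, e.g. count multiply-accumulates (MACs) and size
# scratchpad buffers, without running the model.
# The layer list and the batch size are hypothetical.

GRAPH = [  # (name, input_features, output_features) for dense layers
    ("fc1", 784, 256),
    ("fc2", 256, 128),
    ("fc3", 128, 10),
]

def plan(graph, batch_size):
    total_macs = 0
    largest_buffer = batch_size * graph[0][1]  # the input activations
    for name, n_in, n_out in graph:  # a loop with a trip count known in advance
        macs = batch_size * n_in * n_out
        total_macs += macs
        largest_buffer = max(largest_buffer, batch_size * n_out)
        print(f"{name}: {macs:,} MACs, output buffer {batch_size * n_out:,} values")
    return total_macs, largest_buffer

total, largest = plan(GRAPH, batch_size=32)
print(f"total: {total:,} MACs, largest activation buffer: {largest:,} values")
```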

AI algorithms are built in a hardware-friendly way

Not long ago, if you wanted to innovate in computer architecture, you might say: "I have a new architectural idea that can significantly improve something, but I need to slightly change the programming interface so that programmers can use this feature." Back then, such an idea would not fly. The programmer's API was untouchable, and it was hard to burden programmers with low-level details that break the "clean" semantic flow of their programs.

Moreover, mixing low-level architectural details into programmer-facing code is bad practice. First, it is not portable, because some architectural features change between chip generations. Second, it is easy to program incorrectly, because most programmers lack a deep understanding of the underlying hardware.

Although you could argue that GPUs and multi-core CPUs already departed from the traditional programming model because of multithreading (and sometimes even memory fences), we resorted to multithreaded programming only because single-thread performance was no longer growing exponentially and it was our only option. Multithreaded programming is still hard to master and requires a lot of education. Fortunately, when people write AI programs, they build computation graphs out of neural layers and other well-defined blocks.

High-level program code (for example, code written in TensorFlow or PyTorch) is already structured in a way that marks parallel blocks and builds dataflow graphs. So, in theory, you can build rich software libraries and a sufficiently sophisticated compiler toolchain that understands the program's semantics and efficiently lowers it to a hardware representation, without any involvement from the programmers who develop the applications. Data scientists can then do their work without caring what hardware their tasks run on. In practice, it will take time for such compilers to fully mature.
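A hypothetical toy tracer in Python illustrates the idea: model code written as plain arithmetic, when run on placeholder values, records a dataflow graph that a compiler could later lower to hardware. This is not how TensorFlow or PyTorch are implemented internally; the `Node` class and `GRAPH` list are stand-ins invented for this sketch.

```python
# A toy tracer: running ordinary-looking model code on placeholder values
# records a dataflow graph instead of computing numbers. Node and GRAPH are
# hypothetical stand-ins; real frameworks have far more complete machinery.

GRAPH = []  # every traced operation, in the order it was recorded

class Node:
    def __init__(self, op, inputs=()):
        self.op = op
        self.inputs = tuple(inputs)
        self.id = len(GRAPH)
        GRAPH.append(self)

    def __add__(self, other):
        return Node("add", (self, other))

    def __mul__(self, other):
        return Node("mul", (self, other))

    def __repr__(self):
        return f"%{self.id}"

def model(x, w, b):
    # Written like plain arithmetic; the programmer says nothing about hardware.
    return x * w + b

x, w, b = Node("input:x"), Node("input:w"), Node("input:b")
out = model(x, w, b)
for node in GRAPH:
    print(node, "=", node.op, list(node.inputs))
```

Because the graph, not the programmer, now describes the computation, a compiler is free to map the `mul` and `add` nodes onto whatever hardware units are available.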

There are few other options

AI is everywhere: it can be found in large data centers, smartphones, sensors, robots, and autonomous vehicles. Each system has different practical constraints: nobody wants an autonomous vehicle that fails to detect obstacles because it lacks computing power, and it is equally unacceptable to spend thousands of dollars a day training a huge pre-trained model because of poor efficiency. In AI, no single chip fits every scenario. Computing demands are enormous, and every bit of efficiency translates into large savings in time, energy, and cost. Without suitable acceleration hardware for your AI needs, your ability to experiment and make discoveries in AI will be limited.

Link to the original article:

https://medium.com/@adi.fu7/ai-accelerators-part-ii-transistors-and-pizza-or-why-do-we-need-accelerators-75738642fdaa

边栏推荐

- Amélioration de l'efficacité de la requête 10 fois! 3 solutions d'optimisation pour résoudre le problème de pagination profonde MySQL

- Stm32bug [stlink forced update prompt appears in keilmdk, but it cannot be updated]

- Unity writes a character controller. The mouse controls the screen to shake and the mouse controls the shooting

- Contest3145 - the 37th game of 2021 freshman individual training match_ G: Score

- Rhcsa day 2

- Contest3145 - the 37th game of 2021 freshman individual training match_ F: Smallest ball

- Imperial cms7.5 imitation "D9 download station" software application download website source code

- Keepalived set the master not to recapture the VIP after fault recovery (it is invalid to solve nopreempt)

- [UE4] parse JSON string

- Network communication basic kit -- IPv4 socket structure

猜你喜欢

How to use websocket to realize simple chat function in C #

(column 23) typical C language problem: find the minimum common multiple and maximum common divisor of two numbers. (two solutions)

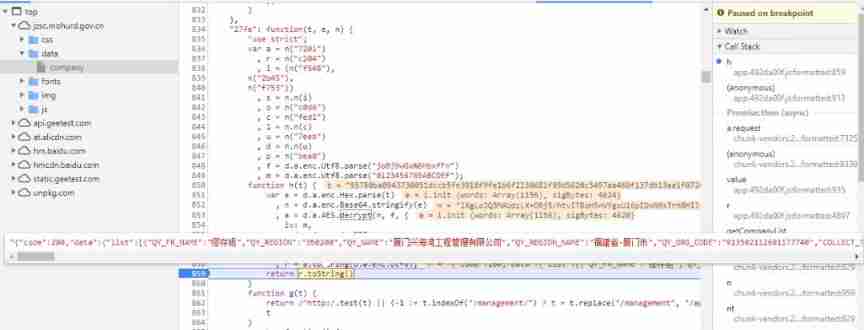

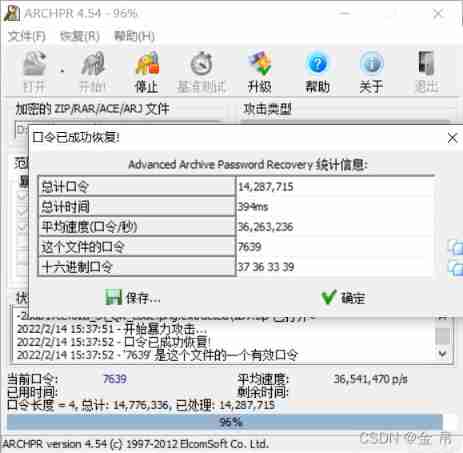

Dare to climb here, you're not far from prison, reptile reverse actual combat case

Have you entered the workplace since the first 00???

Bugku Zhi, you have to stop him

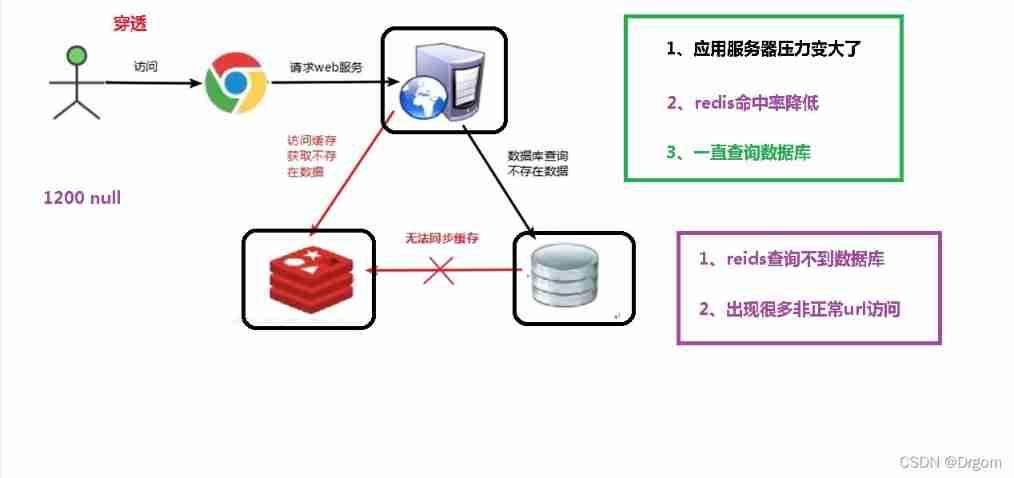

Problems and solutions of several concurrent scenarios of redis

Buuctf QR code

What are the conditions for the opening of Tiktok live broadcast preview?

Teach you how to optimize SQL

Dans la recherche de l'intelligence humaine ai, Meta a misé sur l'apprentissage auto - supervisé

随机推荐

Unspeakable Prometheus monitoring practice

The "two-way link" of pushing messages helps app quickly realize two-way communication capability

FRP intranet penetration

3D game modeling is in full swing. Are you still confused about the future?

(practice C language every day) pointer sorting problem

Record a problem that soft deletion fails due to warehouse level error

Leetcode 110 balanced binary tree

Problems and solutions of several concurrent scenarios of redis

2022 Guangxi provincial safety officer a certificate examination materials and Guangxi provincial safety officer a certificate simulation test questions

2022 registration examination for safety production management personnel of fireworks and firecracker production units and examination skills for safety production management personnel of fireworks an

Gee import SHP data - crop image

Zblog collection plug-in does not need authorization to stay away from the cracked version of zblog

Zhihu million hot discussion: why can we only rely on job hopping for salary increase? Bosses would rather hire outsiders with a high salary than get a raise?

Code Execution Vulnerability - no alphanumeric rce create_ function()

2022 attached lifting scaffold worker (special type of construction work) free test questions and attached lifting scaffold worker (special type of construction work) examination papers 2022 attached

PTA tiantisai l1-079 tiantisai's kindness (20 points) detailed explanation

The difference between MCU serial communication and parallel communication and the understanding of UART

I stepped on a foundation pit today

Hospital network planning and design document based on GLBP protocol + application form + task statement + opening report + interim examination + literature review + PPT + weekly progress + network to

Latex tips slash \backslash