当前位置:网站首页>Text driven for creating and editing images (with source code)

Text driven for creating and editing images (with source code)

2022-06-11 16:32:00 【Computer Vision Research Institute】

Pay attention to the parallel stars

Never get lost

Institute of computer vision

official account ID|ComputerVisionGzq

Study Group | Scan the code to get the join mode on the homepage

Address of thesis :https://arxiv.org/pdf/2206.02779.pdf

Computer Vision Institute column

author :Edison_G

Great progress in neural image generation , Coupled with the appearance of the seemingly omnipotent visual language model , Finally, the text-based interface can be used to create and edit images .

1

Generalization

Processing generic images requires a diverse underlying generation model , So the latest work uses the diffusion model , This proved to be more diverse than GAN. However , A major drawback of diffusion models is that their reasoning time is relatively slow .

In today's sharing , Researchers have proposed an accelerated solution for the local text driven editing task of general-purpose images , The required edits are limited to user supplied masks . The researchers' solution takes advantage of the recent text to image potential diffusion model (LDM), The model accelerates diffusion by running in a low dimensional potential space .

First transform by blending diffusion into LDM To the local image editor . Next , In view of this LDM The inherent problem of not being able to accurately reconstruct an image , A solution based on optimization is proposed . Last , The researchers solved the scenario of using a thin mask to perform local editing . Evaluate the newly proposed method qualitatively and quantitatively based on the available baseline , And prove that in addition to being faster , The new method achieves better accuracy than baseline while reducing some artifacts .

Project page address :https://omriavrahami.com/blended-latent-diffusion-page

2

New framework method analysis

Blended Latent Diffusion This paper aims to provide a solution for the local text driven editing task of general-purpose images introduced in the mixed diffusion paper .Blended Diffusion The reasoning time is slow ( In a single GPU It takes about... To get good results on 25 minute ) And pixel level artifacts .

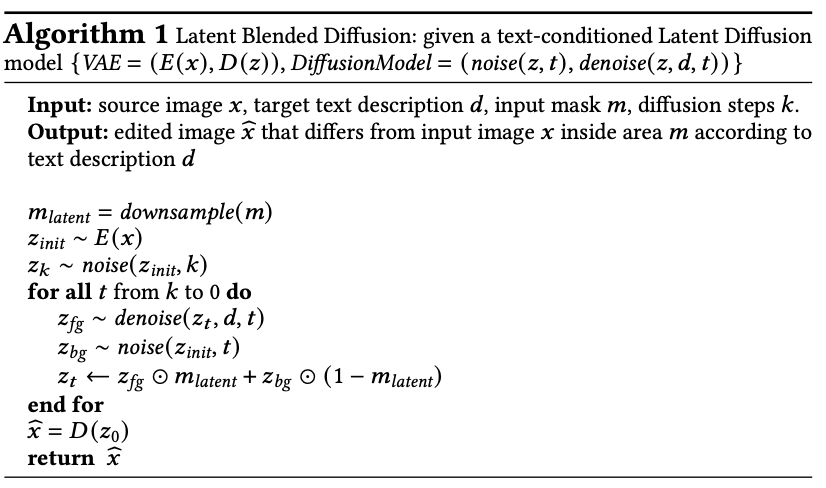

To solve these problems , Researchers propose to incorporate mixed diffusion into a potential diffusion model from text to image . To do this , Operate on potential spaces , And repeatedly mix the foreground and background parts in the potential space , The diffusion process is as follows :

Operating in potential space does enjoy fast reasoning speed , But it has imperfect reconstruction of unshielded area and can not deal with thin mask . More details on how we can solve these problems , Please continue reading .

Noise artifacts

Given input image (a) and mask(b) And guide text “ Blond curls ”, And the newly proposed method (d) comparison , Mixed diffusion will produce obvious pixel level noise artifacts (c).

As mentioned earlier , Potential diffusion can generate images from a given text ( Text to image LDM). However , The model lacks the ability to edit existing images locally , Therefore, the researchers suggest merging blending diffusion from text to image LDM.

The new method is summarized in the first chapter , Description of the algorithm , Please read the original paper .LDM In variational automatic encoder VAE = (𝐸(𝑥), 𝐷(𝑧)) Perform text guided de-noising diffusion in the potential space of learning . Take the part we want to modify as the foreground (fg), Use the rest as the background (bg), Follow the idea of mixed diffusion , And repeatedly mix the two parts in this potential space , As the diffusion proceeds . Use VAE Encoder 𝑧init ∼ 𝐸(𝑥) The input image 𝑥 Code into potential space . Potential space still has a spatial dimension ( because VAE Convolution property of ), But the width and height are smaller than the input image (8 times ).

therefore , The input mask 𝑚 Down sampling to these spatial dimensions , To get the potential space mask 𝑚latent, It will be used to perform blending .

Background reconstruction comparison

Background reconstruction using decoder weights finetuning

Thin mask progression

Progressive mask shrinking

Comparison to baselines: A comparison with (1) Local CLIP-guided diffusion [Crowson 2021], (2) PaintByWord++ [Bau et al. 2021; Crowson et al. 2022], (3) Blended Diffusion [Avrahami et al. 2021], (4) GLIDE [Nichol et al. 2021] and (5) GLIDE-filtered [Nichol et al. 2021].

Limit : Top line , be based on CLIP Our ranking only considers mask Area , So sometimes the results are only piecewise realistic , The overall image does not look realistic . Bottom line : The model has text bias - It might try to create movie posters with text / Book cover , Or in addition to generating actual objects .

THE END

Please contact the official account for authorization.

The learning group of computer vision research institute is waiting for you to join !

ABOUT

Institute of computer vision

The Institute of computer vision is mainly involved in the field of deep learning , Mainly devoted to face detection 、 Face recognition , Multi target detection 、 Target tracking 、 Image segmentation and other research directions . The Research Institute will continue to share the latest paper algorithm new framework , The difference of our reform this time is , We need to focus on ” Research “. After that, we will share the practice process for the corresponding fields , Let us really experience the real scene of getting rid of the theory , Develop the habit of hands-on programming and brain thinking !

VX:2311123606

Previous recommendation

In recent years, several good papers have implemented the code ( With source code download )

Based on hierarchical self - supervised learning, vision Transformer Scale to gigapixel images

YOLOS: Rethink through target detection Transformer( With source code )

Fast YOLO: For real-time embedded target detection ( Attached thesis download )

边栏推荐

- 【opencvsharp】opencvsharp_samples.core示例代码笔记

- Pyqt5 enables the qplaintextedit control to support line number display

- 2022 national question bank and mock examination for safety officer-b certificate

- Question ac: Horse Vaulting in Chinese chess

- 为什么芯片设计也需要「匠人精神」?

- 【opencvsharp】斑点检测 条码解码 图像操作 图像旋转/翻转/缩放 透视变换 图像显示控件 demo笔记

- Leetcode 1974. Minimum time to type words using a special typewriter (yes, once)

- 2022年R1快开门式压力容器操作考试题库及模拟考试

- How can the project manager repel the fear of being dominated by work reports?

- Pytest test framework Basics

猜你喜欢

2022年高处安装、维护、拆除考试模拟100题及在线模拟考试

MySQL quick start instance (no loss)

【opencvsharp】斑点检测 条码解码 图像操作 图像旋转/翻转/缩放 透视变换 图像显示控件 demo笔记

Pyqt5 enables the qplaintextedit control to support line number display

What is a generic? Why use generics? How do I use generics? What about packaging?

微服务连接云端Sentinel 控制台失败及连接成功后出现链路空白问题(已解决)

20 full knowledge maps of HD data analysis have been completed. It is strongly recommended to collect them!

![[sword finger offer] 21 Adjust array order so that odd numbers precede even numbers](/img/ba/8fa84520bacbc56ce7cbe02ee696c8.png)

[sword finger offer] 21 Adjust array order so that odd numbers precede even numbers

2022起重机司机(限桥式起重机)考试题模拟考试题库及模拟考试

2022 high altitude installation, maintenance and demolition test simulation 100 questions and online simulation test

随机推荐

RDKit 安装

Pytest测试框架基础篇

If you want to learn ArrayList well, it is enough to read this article

2022 high altitude installation, maintenance and demolition test simulation 100 questions and online simulation test

信息收集常用工具及命令

【pytest学习】pytest 用例执行失败后其他不再执行

Go quick start of go language (I): the first go program

Can I eat meat during weight loss? Will you get fat?

[learn FPGA programming from scratch -17]: quick start chapter - operation steps 2-5- VerilogHDL hardware description language symbol system and program framework (both software programmers and hardwa

时间复杂度与空间复杂度解析

20 full knowledge maps of HD data analysis have been completed. It is strongly recommended to collect them!

Differences between list and set access elements

Transfer learning

2022年安全员-B证国家题库及模拟考试

美团获得小样本学习榜单FewCLUE第一!Prompt Learning+自训练实战

书籍《阅读的方法》读后感

Project workspace creation steps - Zezhong ar automated test tool

RSP:遥感预训练的实证研究

Toolbar details of user interface - autorunner automated test tool

[ISITDTU 2019]EasyPHP