
[Paper Notes] Contextual Transformer Networks for Visual Recognition

2022-06-25 15:13:00 【m0_61899…】

The paper

Paper title: Contextual Transformer Networks for Visual Recognition

Published in: CVPR 2021

Paper: https://arxiv.org/pdf/2107.12292.pdf

Code: GitHub - JDAI-CV/CoTNet, the official implementation of "Contextual Transformer Networks for Visual Recognition".

Preface

The Transformer, built on self-attention, triggered a revolution in natural language processing. More recently it has also inspired Transformer-style architecture designs that achieve competitive results in many computer vision tasks.

Nevertheless, most existing designs apply self-attention directly on the 2D feature map and compute the attention matrix from isolated query-key pairs at each spatial location, without fully exploiting the rich context among neighboring keys. In the work discussed here, the researchers design a novel Transformer-style module for visual recognition, the Contextual Transformer (CoT) block. The design fully exploits the contextual information among input keys to guide the learning of a dynamic attention matrix, which strengthens the capacity of visual representation. Technically, the CoT block first encodes the context of the input keys with a 3×3 convolution, producing a static context representation of the input.
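To make the static-context step concrete, here is a minimal NumPy sketch. It assumes a channels-last (H, W, C) layout, takes the keys to be the input itself (K = X), and uses a plain dense 3×3 convolution followed by ReLU. The paper's block may use a grouped convolution and a normalization layer, so `conv3x3_same` and `static_context` are illustrative names only.

```python
import numpy as np

def conv3x3_same(x, w):
    """Naive 3x3 convolution (cross-correlation) with zero 'same' padding.

    x: input feature map, shape (H, W, C_in)
    w: kernel, shape (3, 3, C_in, C_out)
    returns: output feature map, shape (H, W, C_out)
    """
    h, wd, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))  # zero-pad height and width by 1
    out = np.zeros((h, wd, w.shape[3]))
    for i in range(3):
        for j in range(3):
            # Shifted view of the input times the (C_in, C_out) kernel tap at (i, j)
            out += xp[i:i + h, j:j + wd, :] @ w[i, j]
    return out

def static_context(x, w_key):
    """Static context K1: 3x3 convolution over the keys (assumed K = X), then ReLU."""
    return np.maximum(conv3x3_same(x, w_key), 0.0)

# Usage: an all-ones kernel on an all-ones map counts the in-bounds neighbours.
x = np.ones((3, 3, 1))
k1 = static_context(x, np.ones((3, 3, 1, 1)))
print(k1[..., 0])  # 4 at the corners, 6 on the edges, 9 at the centre
```

Each output position mixes a 3×3 neighbourhood of keys, which is exactly the "context among keys" that isolated query-key pairs ignore.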

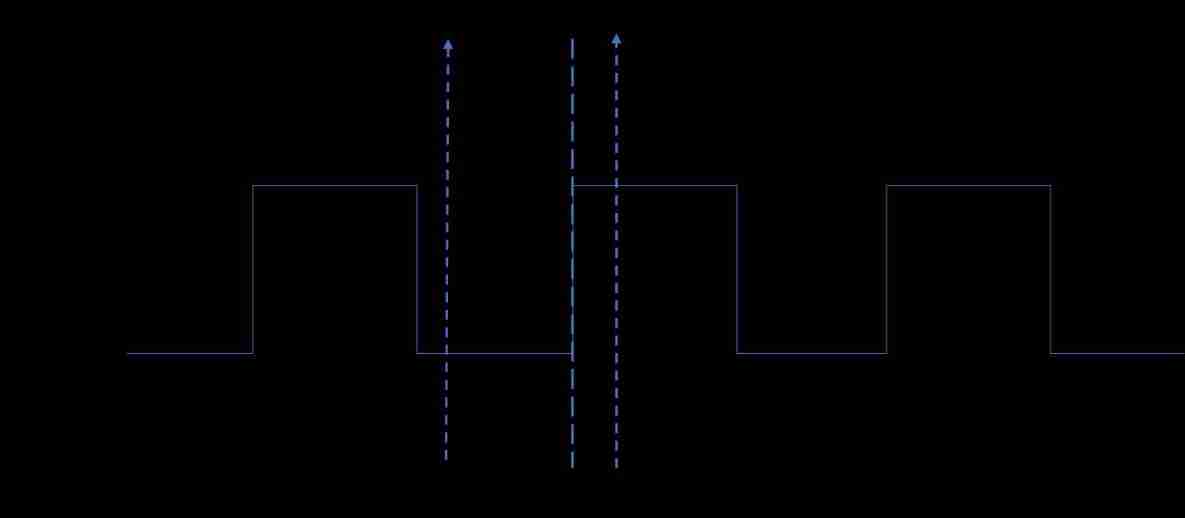

In the figure above, (a) is conventional self-attention, which measures the attention matrix from isolated query-key pairs only and leaves the rich context among keys unexploited.

(b) is the CoT block.

The researchers then concatenate the encoded keys with the input queries and learn a dynamic multi-head attention matrix through two consecutive 1×1 convolutions. The learned attention matrix is multiplied with the input values to obtain a dynamic context representation of the input. The fusion of the static and dynamic context representations is taken as the final output. The CoT block is attractive because it can readily replace every 3 × 3 convolution in a ResNet architecture, yielding a Transformer-style backbone named Contextual Transformer Networks (CoTNet). Extensive experiments over a wide range of applications (e.g., image recognition, object detection and instance segmentation) verify the advantages of CoTNet as a stronger backbone.
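The pipeline just described (static context, concatenation with the query, two 1×1 convolutions, aggregation of the values, fusion) can be sketched in NumPy as below. This is a minimal single-image sketch under several assumptions, not the official JDAI-CV/CoTNet code: channels-last layout, Q = K = X, a dense 3×3 key convolution, a softmax over each 3×3 window to normalize the attention weights, no normalization layers, and a plain sum as the fusion step. The parameter names `w_key`, `w_v`, `w_theta` and `w_delta` are hypothetical.

```python
import numpy as np

KS = 3  # local window size k (the 3x3 grid)

def conv3x3_same(x, w):
    """Naive 3x3 convolution with zero padding; x: (H, W, Cin), w: (3, 3, Cin, Cout)."""
    h, wd, _ = x.shape
    xp = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    return sum(xp[i:i + h, j:j + wd] @ w[i, j] for i in range(3) for j in range(3))

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def local_aggregate(v, a, heads):
    """Weighted sum of values over each KS x KS window.

    v: (H, W, C) values; a: (H, W, heads, KS*KS) weights, one per window offset.
    """
    h, w, c = v.shape
    r = KS // 2
    vp = np.pad(v, ((r, r), (r, r), (0, 0)))
    out = np.zeros((h, w, heads, c // heads))
    for idx in range(KS * KS):
        i, j = divmod(idx, KS)
        # Values at offset (i - r, j - r), split into heads: (H, W, heads, C/heads)
        shifted = vp[i:i + h, j:j + w].reshape(h, w, heads, c // heads)
        out += shifted * a[..., idx, None]
    return out.reshape(h, w, c)

def cot_block(x, p, heads=2):
    """Sketch of a CoT block on one feature map x of shape (H, W, C)."""
    h, w, c = x.shape
    k1 = np.maximum(conv3x3_same(x, p["w_key"]), 0.0)       # static context K1
    v = x @ p["w_v"]                                        # values via 1x1 conv
    qk = np.concatenate([k1, x], axis=-1)                   # [K1, Q] with Q = X
    a = np.maximum(qk @ p["w_theta"], 0.0) @ p["w_delta"]   # two 1x1 convs
    a = softmax(a.reshape(h, w, heads, KS * KS))            # per-head window weights
    k2 = local_aggregate(v, a, heads)                       # dynamic context K2
    return k1 + k2                                          # fusion (assumed: sum)

# Usage with random weights: C = 4 channels, 2 heads, hidden width 8.
rng = np.random.default_rng(0)
C, M, HEADS = 4, 8, 2
params = {
    "w_key": rng.standard_normal((3, 3, C, C)) * 0.1,
    "w_v": rng.standard_normal((C, C)) * 0.1,
    "w_theta": rng.standard_normal((2 * C, M)) * 0.1,
    "w_delta": rng.standard_normal((M, HEADS * KS * KS)) * 0.1,
}
y = cot_block(rng.standard_normal((5, 6, C)), params, heads=HEADS)
print(y.shape)  # (5, 6, 4)
```

Because the output has the same shape as the input, the block can stand in for a 3×3 convolution whose input and output widths match, which is what makes the drop-in replacement in ResNet straightforward.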

Background

Attention mechanism and self-attention mechanism

Why attention?

Before attention was introduced, CNNs, RNNs and their variants already existed, so why introduce the attention mechanism? There are two main reasons:

(1) Limited computing power: when a model has to remember a lot of information, it becomes more complex, yet computing power is still the bottleneck that limits the development of neural networks.

(2) Limitations of the optimization algorithm: LSTM only alleviates the long-distance dependency problem of RNNs to a certain extent, and its ability to "memorize" information is still limited.

What is the attention mechanism?

Before defining the attention mechanism, consider how we look at a picture. When an overload of information comes into view, the brain focuses on the main information. This is the brain's attention mechanism.

Similarly, when we read a sentence, the brain first picks up the important words. The same idea carries over to natural language processing tasks: inspired by the way the human brain copes with information overload, researchers proposed the attention mechanism.

Self-attention is one kind of attention mechanism and an important component of the Transformer. As a variant of attention, it relies less on external information and is better at capturing the internal correlations of data or features. When applied to text, self-attention mainly computes the interactions between words in order to solve the problem of long-distance dependencies.
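As an illustration of "computing the interactions between words", here is a minimal single-head scaled dot-product self-attention in NumPy, the formulation popularized by the original Transformer. The projection matrices `wq`, `wk` and `wv` are random placeholders here; in a real model they are learned.

```python
import numpy as np

def self_attention(x, wq, wk, wv):
    """Single-head scaled dot-product self-attention.

    x: (n, d) embeddings of n tokens; wq, wk, wv: (d, d_k) projection matrices.
    Returns the (n, d_k) outputs and the (n, n) attention weights.
    """
    q, k, v = x @ wq, x @ wk, x @ wv
    scores = q @ k.T / np.sqrt(k.shape[-1])         # similarity of every token pair
    scores -= scores.max(axis=-1, keepdims=True)    # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax: each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
tokens = rng.standard_normal((5, 8))                # 5 "words", 8-dim embeddings
wq, wk, wv = (rng.standard_normal((8, 4)) for _ in range(3))
out, attn = self_attention(tokens, wq, wk, wv)
print(out.shape, attn.shape)  # (5, 4) (5, 5)
```

Row i of `attn` says how strongly word i attends to every other word, no matter how far apart they are in the sentence, which is how self-attention sidesteps long-distance dependency problems.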

Framework

1 Multi-head Self-attention in Vision Backbones

Here, the researchers present a general formulation of scalable local multi-head self-attention in vision backbones, as depicted in figure (a) above. Formally, given an input 2D feature map X of size H × W × C (H: height, W: width, C: number of channels), X is transformed into queries Q = XWq, keys K = XWk and values V = XWv through the embedding matrices (Wq, Wk, Wv). Notably, each embedding matrix is implemented as a 1×1 convolution.
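Because each embedding matrix is a 1×1 convolution, applying it amounts to multiplying every pixel's C-dimensional feature vector by the same matrix. A minimal NumPy sketch, assuming a channels-last (H, W, C) layout and random placeholder weights (`conv1x1` and `embed_qkv` are illustrative names):

```python
import numpy as np

def conv1x1(x, w):
    """1x1 convolution on a channels-last map: x (H, W, C_in), w (C_in, C_out).
    The same linear map is applied to the C_in-vector at every spatial position."""
    return np.einsum("hwc,cd->hwd", x, w)

def embed_qkv(x, wq, wk, wv):
    """Queries, keys and values: Q = X Wq, K = X Wk, V = X Wv, each a 1x1 conv."""
    return conv1x1(x, wq), conv1x1(x, wk), conv1x1(x, wv)

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 5, 6))               # H=4, W=5, C=6
wq, wk, wv = (rng.standard_normal((6, 6)) for _ in range(3))
q, k, v = embed_qkv(x, wq, wk, wv)
# At any position (i, j) the query is the pixel's feature vector times Wq
print(np.allclose(q[2, 3], x[2, 3] @ wq))        # True
```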

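The local relation matrix $R \in \mathbb{R}^{H \times W \times (k \times k \times C_h)}$ between the queries and the keys within each $k \times k$ grid is then measured as:

$$R = K \circledast Q$$

where $C_h$ is the number of heads and $\circledast$ denotes local matrix multiplication.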

The local relation matrix R is further enriched with the position information of each k × k grid:

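$$\hat{R} = R + P \circledast Q$$

where $P$ holds the 2D relative position embeddings of the $k \times k$ grid.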

Next, the attention matrix A is obtained by normalizing the enhanced, position-aware local relation matrix R̂ with a Softmax along the channel dimension of each head: A = Softmax(R̂). After the feature vector at each spatial position of A is reshaped into Ch local attention matrices (each of size k × k), the final output feature map is computed as the aggregation of all values within each k × k grid, weighted by the learned local attention matrices:

$$Y = V \circledast A$$
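The steps above (local relation R, position enrichment R̂, Softmax over each head, aggregation of the values) can be sketched in NumPy as follows. Assumptions: a single image in channels-last layout, zero padding at the borders, channels split evenly across heads, and no scaling of the relation scores. The name `local_mhsa` and its parameters are illustrative, not taken from the paper's code.

```python
import numpy as np

def local_mhsa(x, wq, wk, wv, pos, heads=2, k=3):
    """Local multi-head self-attention over k x k grids.

    x: (H, W, C); wq, wk, wv: (C, C) 1x1-conv weights;
    pos: (k*k, C) relative position embeddings P, one vector per grid offset.
    """
    h, w, c = x.shape
    d = c // heads
    q = (x @ wq).reshape(h, w, heads, d)
    r = k // 2
    kp = np.pad(x @ wk, ((r, r), (r, r), (0, 0)))  # zero-pad keys
    vp = np.pad(x @ wv, ((r, r), (r, r), (0, 0)))  # zero-pad values
    offsets = [(i, j) for i in range(k) for j in range(k)]
    # Neighbourhood of every position: (H, W, k*k, heads, d)
    keys = np.stack([kp[i:i + h, j:j + w] for i, j in offsets], axis=2)
    vals = np.stack([vp[i:i + h, j:j + w] for i, j in offsets], axis=2)
    keys = keys.reshape(h, w, k * k, heads, d)
    vals = vals.reshape(h, w, k * k, heads, d)
    rel = np.einsum("yxgd,yxsgd->yxgs", q, keys)                 # R = K ⊛ Q
    p = pos.reshape(k * k, heads, d)
    rel = rel + np.einsum("yxgd,sgd->yxgs", q, p)                # R_hat = R + P ⊛ Q
    rel -= rel.max(axis=-1, keepdims=True)
    a = np.exp(rel)
    a /= a.sum(axis=-1, keepdims=True)   # A = Softmax(R_hat) over the k*k grid, per head
    y = np.einsum("yxgs,yxsgd->yxgd", a, vals)                   # Y = V ⊛ A
    return y.reshape(h, w, c)

rng = np.random.default_rng(0)
x = rng.standard_normal((6, 6, 8))
ws = [rng.standard_normal((8, 8)) * 0.1 for _ in range(3)]
y = local_mhsa(x, *ws, pos=rng.standard_normal((9, 8)) * 0.1, heads=2)
print(y.shape)  # (6, 6, 8)
```

Note that every weight in `a` depends only on one query and one key (plus a position term); nothing looks at how the neighbouring keys relate to each other, which is the limitation the CoT block targets.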

2 Contextual Transformer Block

Conventional self-attention triggers feature interactions across different spatial locations, depending on the input itself. However, in the conventional self-attention mechanism, all pairwise query-key relations are learned independently over isolated query-key pairs, without exploring the rich context among them. This severely limits the capacity of self-attention learning for visual representation on 2D feature maps.

To alleviate this problem, the researchers construct a new Transformer-style building block, the Contextual Transformer (CoT) block shown in figure (b) above, which integrates contextual information mining and self-attention learning into a unified architecture.

3 Contextual Transformer Networks

ResNet-50 (left) and CoTNet-50 (right).

ResNeXt-50 with a 32×4d template (left) and CoTNeXt-50 with a 2×48d template (right).
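The ResNet-50 to CoTNet-50 comparison captioned above amounts to changing only the middle layer of each bottleneck block. The schematic Python sketch below (a layer listing, not a runnable network) enumerates ResNet-50's standard [3, 4, 6, 3] bottleneck stages and substitutes a CoT block for every 3×3 convolution. Downsampling, normalization and other details are deliberately omitted, and `bottleneck_layout` is a hypothetical helper.

```python
# Schematic only: ResNet-50 bottleneck stages as (blocks, bottleneck width, output channels).
RESNET50_STAGES = [(3, 64, 256), (4, 128, 512), (6, 256, 1024), (3, 512, 2048)]

def bottleneck_layout(stages, spatial_op):
    """List every bottleneck block; `spatial_op` is the middle (spatial) layer."""
    layout = []
    for s, (blocks, width, out_ch) in enumerate(stages, start=1):
        for b in range(blocks):
            ops = ["1x1 conv", spatial_op, "1x1 conv"]  # reduce, spatial mixing, expand
            layout.append((f"stage{s}.block{b}", ops, width, out_ch))
    return layout

resnet50 = bottleneck_layout(RESNET50_STAGES, "3x3 conv")
cotnet50 = bottleneck_layout(RESNET50_STAGES, "CoT block")

# 16 blocks x 3 layers = 48 layers; the 7x7 stem conv and the final fc make 50.
print(len(resnet50), sum(len(ops) for _, ops, _, _ in resnet50))  # 16 48
print(sum(ops.count("CoT block") for _, ops, _, _ in cotnet50))   # 16
```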

Experiments and visualization

Performance comparison of different ways of exploiting contextual information: using only the static context (Static Context), using only the dynamic context (Dynamic Context), linearly fusing the static and dynamic contexts (Linear Fusion), and the full CoT block. The backbone is CoTNet-50, trained on ImageNet with the default settings.
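To make the four ablation variants concrete, here is a NumPy sketch of how each could produce an output from a static context K1 and a dynamic context K2. The exact forms are assumptions: "Linear Fusion" is modeled as a fixed weighted sum, and the full CoT block's fusion as an SKNet-style attention that picks per-channel weights for the two branches from globally pooled features. Check the JDAI-CV/CoTNet repository for the precise form; `linear_fusion` and `attention_fusion` are hypothetical names.

```python
import numpy as np

def linear_fusion(k1, k2, alpha=0.5):
    """Assumed 'Linear Fusion' variant: a fixed weighted sum of the two contexts."""
    return alpha * k1 + (1.0 - alpha) * k2

def attention_fusion(k1, k2, w_reduce, w_expand):
    """Assumed SK-style fusion: per-channel softmax weights over the two branches.

    k1 (static) and k2 (dynamic): (H, W, C); w_reduce: (C, M); w_expand: (M, 2*C).
    """
    c = k1.shape[-1]
    s = (k1 + k2).mean(axis=(0, 1))                 # global average pooling -> (C,)
    z = np.maximum(s @ w_reduce, 0.0)               # small MLP with ReLU -> (M,)
    logits = (z @ w_expand).reshape(2, c)           # one logit per branch and channel
    logits -= logits.max(axis=0, keepdims=True)
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)   # softmax across the two branches
    return weights[0] * k1 + weights[1] * k2

rng = np.random.default_rng(0)
k1, k2 = rng.standard_normal((2, 5, 5, 4))          # static and dynamic contexts
variants = {
    "Static Context": k1,
    "Dynamic Context": k2,
    "Linear Fusion": linear_fusion(k1, k2),
    "CoT (attention fusion)": attention_fusion(
        k1, k2, rng.standard_normal((4, 2)), rng.standard_normal((2, 8))
    ),
}
print({name: out.shape for name, out in variants.items()})
```

The difference between the last two variants is that attention fusion lets the input decide, channel by channel, how much to trust each context, whereas linear fusion uses the same mixing everywhere.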

Inference time vs. accuracy curve on ImageNet

The table above summarizes the object detection performance on the COCO dataset of Faster-RCNN and Cascade-RCNN with different pre-trained backbones. Visual backbones with the same network depth (50 or 101 layers) are grouped together. As observed, the pre-trained CoTNet models (CoTNet-50/101 and CoTNeXt-50/101) show clear performance gains over the ConvNet backbones (ResNet-50/101 and ResNeSt-50/101) across all IoU thresholds and object sizes at each network depth. The results demonstrate the advantage of integrating self-attention learning with contextual information mining in CoTNet, even when transferred to the downstream task of object detection.

Reference post

ResNet super variant: JD.com AI's new open-source computer vision module (with source code)

边栏推荐

- Basic syntax and common commands of R language

- What moment makes you think there is a bug in the world?

- C language escape character and its meaning

- Common operations in VIM

- 搭建极简GB28181 网守和网关服务器,建立AI推理和3d服务场景,然后开源代码(一)

- Remove interval (greedy)

- Biscuit distribution

- Ideal L9 in the eyes of the post-90s: the simplest product philosophy, creating the most popular products

- Custom structure type

- C language LNK2019 unresolved external symbols_ Main error

猜你喜欢

(1) Introduction

如何裁剪动图大小?试试这个在线照片裁剪工具

Build a minimalist gb28181 gatekeeper and gateway server, establish AI reasoning and 3D service scenarios, and then open source code (I)

Shared memory synchronous encapsulation

System Verilog — interface

How to cut the size of a moving picture? Try this online photo cropping tool

SPARQL learning notes of query, an rrdf query language

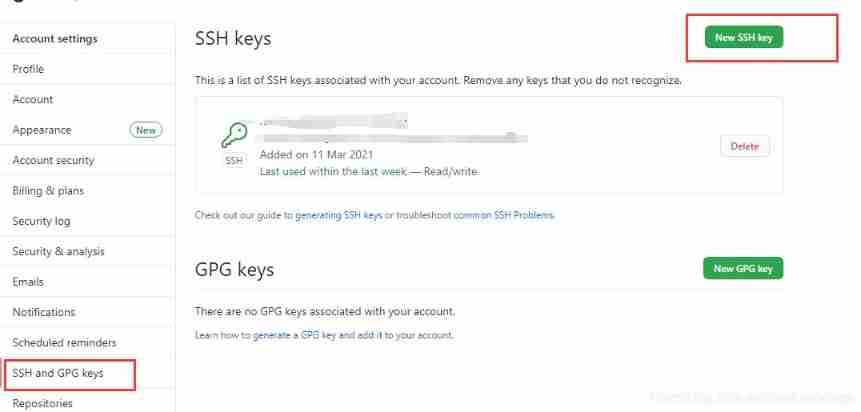

Solution of push code failure in idea

电源自动测试系统NSAT-8000,精准高速可靠的电源测试设备

GDB debugging

随机推荐

有哪个瞬间让你觉得这个世界出bug了?

Stack and queue

Cross compilation correlation of curl Library

Use Matplotlib to draw a line chart

[try to hack] vulhub shooting range construction

Flexible layout (display:flex;) Attribute details

Common classes in QT

Design and implementation of thread pool

BM setup process

High precision addition

Qmake uses toplevel or topbuilddir

If multiple signals point to the same slot function, you want to know which signal is triggered.

Iterator failure condition

dev/mapper的解释

Core mode and immediate rendering mode of OpenGL

14 -- 验证回文字符串 Ⅱ

Two advanced playing methods of QT signal and slot

RDB and AOF persistence of redis

14 -- validate palindrome string II

One question per day, a classic simulation question