
torch.optim.Adam() function usage

2022-07-30 16:54:00 【Mick..】

Adam was introduced in the paper "Adam: A Method for Stochastic Optimization".

Adam adapts the effective learning rate of each parameter individually, using exponential moving averages of the gradient (the first-order moment) and of the squared gradient (the second-order moment).
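For reference, the update rule from the Adam paper can be written as below. The symbols are chosen here for illustration: g_t is the gradient at step t, \alpha corresponds to `lr`, (\beta_1, \beta_2) to `betas`, and \epsilon to `eps`; m_0 and v_0 start at zero.

```latex
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1 - \beta_1^t}, \qquad
\hat{v}_t = \frac{v_t}{1 - \beta_2^t} \\
\theta_t &= \theta_{t-1} - \alpha\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
```

Because the step is divided by \sqrt{\hat{v}_t}, parameters with consistently large gradients take smaller effective steps, and parameters with small gradients take larger ones.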

Initialization of Adam

def __init__(self, params, lr=1e-3, betas=(0.9, 0.999), eps=1e-8,
             weight_decay=0, amsgrad=False):

Args:
    params (iterable): iterable of parameters to optimize or dicts defining
        parameter groups
    lr (float, optional): learning rate (default: 1e-3)
    betas (Tuple[float, float], optional): coefficients used for computing
        running averages of gradient and its square (default: (0.9, 0.999))
    eps (float, optional): term added to the denominator to improve
        numerical stability (default: 1e-8)
    weight_decay (float, optional): weight decay (L2 penalty) (default: 0)
    amsgrad (boolean, optional): whether to use the AMSGrad variant of this
        algorithm from the paper `On the Convergence of Adam and Beyond`_
        (default: False)
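As a minimal sketch of how these arguments are typically passed, the snippet below builds an optimizer with the default values spelled out, and a second one that uses per-parameter groups. The `torch.nn.Linear(4, 1)` model and the particular group values are hypothetical and chosen only for illustration.

```python
import torch

# Hypothetical toy model, used only to have some parameters to optimize.
model = torch.nn.Linear(4, 1)

# All arguments written out with their default values.
optimizer = torch.optim.Adam(
    model.parameters(),
    lr=1e-3,
    betas=(0.9, 0.999),
    eps=1e-8,
    weight_decay=0,
    amsgrad=False,
)

# "dicts defining parameter groups": each dict may override the defaults
# for its own parameters; keys it omits fall back to the keyword arguments.
optimizer_groups = torch.optim.Adam(
    [
        {"params": [model.weight], "weight_decay": 1e-2},
        {"params": [model.bias], "lr": 1e-2},
    ],
    lr=1e-3,
)

for group in optimizer_groups.param_groups:
    print(group["lr"], group["weight_decay"])
```

Each entry of `param_groups` is the same `group` dict that `step()` iterates over, which is why `group['lr']`, `group['betas']` and the other options are read per group there.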

@torch.no_grad()
def step(self, closure=None):
    """Performs a single optimization step.

    Args:
        closure (callable, optional): A closure that reevaluates the model
            and returns the loss.
    """
    loss = None
    if closure is not None:
        with torch.enable_grad():
            loss = closure()

    for group in self.param_groups:
        params_with_grad = []
        grads = []
        exp_avgs = []
        exp_avg_sqs = []
        max_exp_avg_sqs = []
        state_steps = []
        beta1, beta2 = group['betas']

        for p in group['params']:
            if p.grad is not None:
                params_with_grad.append(p)
                if p.grad.is_sparse:
                    raise RuntimeError('Adam does not support sparse gradients, please consider SparseAdam instead')
                grads.append(p.grad)

                state = self.state[p]
                # Lazy state initialization
                if len(state) == 0:
                    state['step'] = 0
                    # Exponential moving average of gradient values
                    state['exp_avg'] = torch.zeros_like(p, memory_format=torch.preserve_format)
                    # Exponential moving average of squared gradient values
                    state['exp_avg_sq'] = torch.zeros_like(p, memory_format=torch.preserve_format)
                    if group['amsgrad']:
                        # Maintains max of all exp. moving avg. of sq. grad. values
                        state['max_exp_avg_sq'] = torch.zeros_like(p, memory_format=torch.preserve_format)

                exp_avgs.append(state['exp_avg'])
                exp_avg_sqs.append(state['exp_avg_sq'])

                if group['amsgrad']:
                    max_exp_avg_sqs.append(state['max_exp_avg_sq'])

                # update the steps for each param group update
                state['step'] += 1
                # record the step after step update
                state_steps.append(state['step'])

        F.adam(params_with_grad,
               grads,
               exp_avgs,
               exp_avg_sqs,
               max_exp_avg_sqs,
               state_steps,
               amsgrad=group['amsgrad'],
               beta1=beta1,
               beta2=beta2,
               lr=group['lr'],
               weight_decay=group['weight_decay'],
               eps=group['eps'])
    return loss
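The `step()` method only collects gradients and per-parameter state, then hands them to `F.adam`, whose body is not shown here. The sketch below is a plain-Python illustration of the arithmetic such an update performs for a single float parameter. It is not PyTorch's implementation: the function name `adam_scalar_step` and the toy objective f(x) = x**2 are assumptions made for this example, and only the state keys (`step`, `exp_avg`, `exp_avg_sq`, `max_exp_avg_sq`) are taken from the code above.

```python
import math


def adam_scalar_step(param, grad, state, lr=1e-3, betas=(0.9, 0.999),
                     eps=1e-8, weight_decay=0.0, amsgrad=False):
    """Return the updated value of one float parameter (illustrative only)."""
    beta1, beta2 = betas

    # Lazy state initialization, using the same keys as step().
    if not state:
        state["step"] = 0
        state["exp_avg"] = 0.0
        state["exp_avg_sq"] = 0.0
        if amsgrad:
            state["max_exp_avg_sq"] = 0.0
    state["step"] += 1
    t = state["step"]

    # L2 penalty: add weight_decay * param to the gradient.
    if weight_decay != 0:
        grad = grad + weight_decay * param

    # Exponential moving averages of the gradient and the squared gradient.
    state["exp_avg"] = beta1 * state["exp_avg"] + (1 - beta1) * grad
    state["exp_avg_sq"] = beta2 * state["exp_avg_sq"] + (1 - beta2) * grad * grad

    # Bias corrections compensate for the zero initialization of the averages.
    bias_correction1 = 1 - beta1 ** t
    bias_correction2 = 1 - beta2 ** t

    if amsgrad:
        # AMSGrad keeps the running maximum of the second-moment estimate.
        state["max_exp_avg_sq"] = max(state["max_exp_avg_sq"], state["exp_avg_sq"])
        second_moment = state["max_exp_avg_sq"]
    else:
        second_moment = state["exp_avg_sq"]

    denom = math.sqrt(second_moment) / math.sqrt(bias_correction2) + eps
    step_size = lr / bias_correction1
    return param - step_size * state["exp_avg"] / denom


# Toy usage: minimise f(x) = x**2, whose gradient is 2 * x.
x, state = 1.0, {}
for _ in range(3):
    x = adam_scalar_step(x, 2 * x, state, lr=0.1)
print(x, state["step"])
```

On the first call the bias corrections cancel the small initial averages, so the parameter moves by roughly `lr` in the direction opposite to the gradient, whatever the gradient's magnitude.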

- 游戏窗口化的逆向分析

- The way of life, share with you!

- PHP留言反馈管理系统源码

- 镜像站收集

- 【Linux Operating System】 Virtual File System | File Cache

- 微信小程序picker滚动选择器使用详解

- 你是一流的输家,你因此成为一流的赢家

- Nervegrowold d2l (7) kaggle housing forecast model, numerical stability and the initialization and activation function

- MySql统计函数COUNT详解

- 3D激光SLAM:LeGO-LOAM论文解读---系统概述部分

猜你喜欢

Public Key Retrieval is not allowed报错解决方案

win下搭建php环境的方法

实现web实时消息推送的7种方案

云风:不加班、不炫技,把复杂的问题简单化

![[NCTF2019] Fake XML cookbook-1|XXE vulnerability|XXE information introduction](/img/29/92b9d52d17a203b8bdead3eb2c902e.png)

[NCTF2019] Fake XML cookbook-1|XXE vulnerability|XXE information introduction

安全业务收入增速超70% 三六零筑牢数字安全龙头

LeetCode167:有序数组两数之和

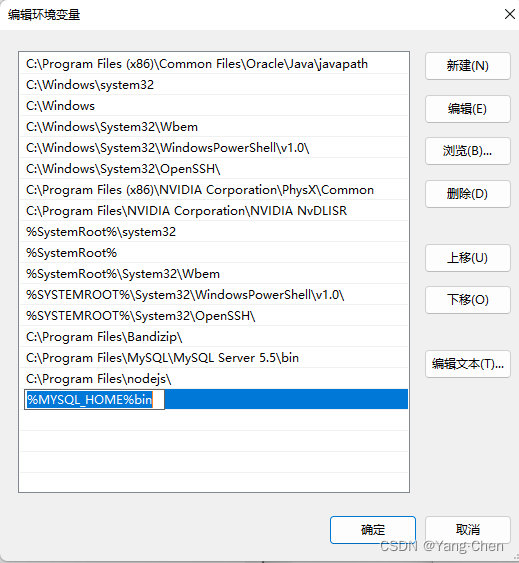

MySQL 8.0.29 解压版安装教程(亲测有效)

DTSE Tech Talk丨第2期:1小时深度解读SaaS应用系统设计

Login Module Debugging - Getting Started with Software Debugging

随机推荐

Nervegrowold d2l (7) kaggle housing forecast model, numerical stability and the initialization and activation function

Go新项目-编译热加载使用和对比,让开发更自由(3)

[NCTF2019] Fake XML cookbook-1|XXE vulnerability|XXE information introduction

DTSE Tech Talk丨第2期:1小时深度解读SaaS应用系统设计

归一化与标准化

[TypeScript]简介、开发环境搭建、基本类型

登录模块调试-软件调试入门

How to use Redis for distributed applications in Golang

Leetcode 118. Yanghui Triangle

【HMS core】【FAQ】A collection of typical questions about Account, IAP, Location Kit and HarmonyOS 1

Discuz杂志/新闻报道模板(jeavi_line)UTF8-GBK模板

Navisworks切换语言

DTSE Tech Talk丨第2期:1小时深度解读SaaS应用系统设计

Moonbeam创始人解读多链新概念Connected Contract

李沐d2l(七)kaggle房价预测+数值稳定性+模型初始化和激活函数

04、Activity的基本使用

MySQL索引常见面试题(2022版)

vivo宣布延长产品保修期限 系统上线多种功能服务

Goland opens file saving and automatically formats

Horizontal Pod Autoscaler(HPA)