
Dive into Deep Learning (d2l) (7): Kaggle House Price Prediction, Numerical Stability, Model Initialization and Activation Functions

2022-07-30 16:23:00 【madkeyboard】

1. Kaggle House Price Prediction

Getting the data

First download the dataset provided on Kaggle, then read the data from the files with pandas.

```python
import numpy as np
import pandas as pd
import torch
from torch import nn
from d2l import torch as d2l

train_data = pd.read_csv("8房价预测\\train.csv")
test_data = pd.read_csv("8房价预测\\test.csv")
print(train_data.shape)  # (1460, 81)
print(test_data.shape)   # (1459, 80)
print(train_data.iloc[0:4, [0, 1, 2, 3, -3, -2, -1]])

'''
   Id  MSSubClass MSZoning  LotFrontage SaleType SaleCondition  SalePrice
0   1          60       RL         65.0       WD        Normal     208500
1   2          20       RL         80.0       WD        Normal     181500
2   3          60       RL         68.0       WD        Normal     223500
3   4          70       RL         60.0       WD       Abnorml     140000
'''
```

Data preprocessing

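The sample rows printed above show that the first column is Id. It only identifies each house and is not used for training, so we remove it. In addition, the last column of the training set (SalePrice) is the target we need to predict, so it is removed from the features as well. The test set has no SalePrice column, so only Id is dropped there.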

```python
# Drop the Id column; 1:-1 on the training set also drops the last column (SalePrice)
all_features = pd.concat((train_data.iloc[:, 1:-1], test_data.iloc[:, 1:]))
print(all_features.iloc[:4, [0, 1, 2, 3, -3, -2, -1]])

'''
   MSSubClass MSZoning  LotFrontage  LotArea  YrSold SaleType SaleCondition
0          60       RL         65.0     8450    2008       WD        Normal
1          20       RL         80.0     9600    2007       WD        Normal
2          60       RL         68.0    11250    2008       WD        Normal
3          70       RL         60.0     9550    2006       WD       Abnorml
'''
```

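When we studied NumPy and pandas earlier, we always had to clean dirty data first. For this dataset we standardize every numeric feature by rescaling it to zero mean and unit variance, x ← (x − μ)/σ, where μ and σ are the feature's mean and standard deviation. After standardization every feature has mean 0, so replacing the missing values (NaN) with 0 is the same as filling them with the feature's mean.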
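A minimal sketch of the standardize-then-fill step on a toy column (the values are made up for illustration). It also shows why the parentheses matter: the mean must be subtracted before dividing by the standard deviation, whereas `x - x.mean() / x.std()` merely shifts every value by mean/std.

```python
import numpy as np
import pandas as pd

# Toy numeric column with one missing value (made-up data, for illustration only)
df = pd.DataFrame({'LotFrontage': [60.0, 80.0, np.nan, 100.0]})

# Correct: subtract the mean first, then divide by the standard deviation.
# pandas skips NaN when computing mean() and std() by default.
standardized = df.apply(lambda x: (x - x.mean()) / x.std())

# The standardized column has mean 0, so filling NaN with 0 imputes the mean
filled = standardized.fillna(0)
print(filled['LotFrontage'].tolist())  # [-1.0, 0.0, 0.0, 1.0]

# Pitfall: without parentheses only the mean is divided, x - (mean / std) = x - 4 here
buggy = df.apply(lambda x: x - x.mean() / x.std())
print(buggy['LotFrontage'].tolist())  # [56.0, 76.0, nan, 96.0]
```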

```python
# Clean the dirty data
# Find the numeric (non-object) features
numeric_features = all_features.dtypes[all_features.dtypes != 'object'].index
# In this competition the test features are available up front, so train and test
# are standardized together. Usually you would compute the mean and standard
# deviation on the training set only and then apply them to the test set.
all_features[numeric_features] = all_features[numeric_features].apply(
    lambda x: (x - x.mean()) / (x.std()))  # rescale each feature to mean 0, variance 1
# After standardization every feature has mean 0, so filling NaN with 0 imputes the mean
all_features[numeric_features] = all_features[numeric_features].fillna(0)
# Replace discrete (categorical) values with one-hot encoding; dummy_na=True adds an
# indicator column for missing values, dtype=float keeps the new columns numeric
all_features = pd.get_dummies(all_features, dummy_na=True, dtype=float)
print(all_features.shape)
```
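To see what `pd.get_dummies` with `dummy_na=True` produces, here is a toy categorical column (hypothetical values). Passing `dtype=float` is an addition for recent pandas versions, which otherwise return boolean indicator columns.

```python
import pandas as pd

# Toy categorical column with one missing entry (made-up data)
df = pd.DataFrame({'MSZoning': ['RL', 'RM', None, 'RL']})

# One column per category, plus MSZoning_nan for the missing value
encoded = pd.get_dummies(df, dummy_na=True, dtype=float)
print(list(encoded.columns))  # ['MSZoning_RL', 'MSZoning_RM', 'MSZoning_nan']
print(encoded.values.tolist())
```

Each row has exactly one 1.0, so a missing category becomes its own feature instead of being dropped.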

Converting to tensors

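```python
# Extract NumPy arrays from the pandas DataFrames and convert them to tensors;
# astype(np.float32) guards against bool/object columns left by one-hot encoding
n_train = train_data.shape[0]
train_features = torch.tensor(all_features[:n_train].values.astype(np.float32),
                              dtype=torch.float32)
test_features = torch.tensor(all_features[n_train:].values.astype(np.float32),
                             dtype=torch.float32)
train_labels = torch.tensor(train_data.SalePrice.values.reshape(-1, 1),
                            dtype=torch.float32)
```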

Training

```python
loss = nn.MSELoss()
in_features = train_features.shape[1]  # 331

def get_net():
    net = nn.Sequential(nn.Linear(in_features, 1))  # linear regression
    return net
```

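Previously we measured error as true value − predicted value, but for house prices this absolute error is misleading: an error of 100,000 is small for a house worth about 1,000,000, yet large for a house worth about 100,000.

So here we care more about the relative error (y − ŷ)/y. In practice it is measured as the root-mean-squared error between the logarithms of the predictions and the labels, sqrt((1/n) Σ (log y_i − log ŷ_i)^2), which is what log_rmse computes.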

```python
def log_rmse(net, features, labels):
    # Clamp predictions to at least 1 so that taking the log is well defined and stable
    clipped_preds = torch.clamp(net(features), 1, float('inf'))
    rmse = torch.sqrt(loss(torch.log(clipped_preds), torch.log(labels)))
    return rmse.item()
```
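A quick check of why the log error behaves like a relative error, using two made-up houses that are both off by 100,000. The helper `log_error` is hypothetical and only mirrors the per-sample term inside log_rmse.

```python
import math

def log_error(y, y_hat):
    """Absolute difference of logs, |log(y_hat) - log(y)| = |log(y_hat / y)|."""
    return abs(math.log(y_hat) - math.log(y))

houses = [(1_000_000, 1_100_000), (100_000, 200_000)]  # (true price, predicted price)
for y, y_hat in houses:
    abs_err = abs(y - y_hat)
    print(f'abs error {abs_err}, relative error {abs_err / y:.2f}, '
          f'log error {log_error(y, y_hat):.4f}')
# abs error 100000, relative error 0.10, log error 0.0953
# abs error 100000, relative error 1.00, log error 0.6931
```

The absolute errors are identical, while the log error, like the relative error, is about seven times larger for the cheaper house.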

Training function

```python
def train(net, train_features, train_labels, test_features, test_labels,
          num_epochs, learning_rate, weight_decay, batch_size):
    train_ls, test_ls = [], []
    train_iter = d2l.load_array((train_features, train_labels), batch_size)
    # Adam can be viewed as a smoother SGD that is less sensitive to the learning rate
    optimizer = torch.optim.Adam(net.parameters(), lr=learning_rate,
                                 weight_decay=weight_decay)
    for epoch in range(num_epochs):
        for X, y in train_iter:
            optimizer.zero_grad()
            l = loss(net(X), y)
            l.backward()
            optimizer.step()
        # Once per epoch, record the log RMSE on the full training set
        # (and on the test set when labels are available)
        train_ls.append(log_rmse(net, train_features, train_labels))
        if test_labels is not None:
            test_ls.append(log_rmse(net, test_features, test_labels))
    return train_ls, test_ls
```

K-fold cross-validation

```python
def get_k_fold_data(k, i, X, y):
    """Use fold i as the validation set and concatenate the other folds for training."""
    assert k > 1
    fold_size = X.shape[0] // k  # each fold has (number of samples) // k rows
    X_train, y_train = None, None
    for j in range(k):
        idx = slice(j * fold_size, (j + 1) * fold_size)
        X_part, y_part = X[idx, :], y[idx]
        if j == i:  # fold i is the current fold; use it as the validation set
            X_valid, y_valid = X_part, y_part
        elif X_train is None:
            X_train, y_train = X_part, y_part
        else:
            X_train = torch.cat([X_train, X_part], 0)
            y_train = torch.cat([y_train, y_part], 0)
    return X_train, y_train, X_valid, y_valid
```
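To make the slicing in `get_k_fold_data` concrete, here is a small hypothetical helper (not part of d2l) that lists the row range each fold covers. With the 1460 training rows and k = 5, each fold has 292 rows; when the row count is not divisible by k, the last `n % k` rows never land in any fold.

```python
def fold_slices(n, k):
    """Hypothetical helper: the (start, stop) rows of each fold, computed the same
    way as get_k_fold_data computes its slices."""
    fold_size = n // k
    return [(j * fold_size, (j + 1) * fold_size) for j in range(k)]

print(fold_slices(1460, 5))
# [(0, 292), (292, 584), (584, 876), (876, 1168), (1168, 1460)]
print(fold_slices(11, 5))
# [(0, 2), (2, 4), (4, 6), (6, 8), (8, 10)]  -> row 10 is never used
```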

```python
# Return the average training and validation errors over the k folds
def k_fold(k, X_train, y_train, num_epochs, learning_rate, weight_decay,
           batch_size):
    train_l_sum, valid_l_sum = 0, 0
    for i in range(k):
        data = get_k_fold_data(k, i, X_train, y_train)
        net = get_net()  # train a fresh model for every fold
        train_ls, valid_ls = train(net, *data, num_epochs, learning_rate,
                                   weight_decay, batch_size)
        train_l_sum += train_ls[-1]
        valid_l_sum += valid_ls[-1]
        if i == 0:
            d2l.plot(list(range(1, num_epochs + 1)), [train_ls, valid_ls],
                     xlabel='epoch', ylabel='rmse', xlim=[1, num_epochs],
                     legend=['train', 'valid'], yscale='log')
        print(f'fold {i + 1}, train log rmse {float(train_ls[-1]):f}, '
              f'valid log rmse {float(valid_ls[-1]):f}')
    return train_l_sum / k, valid_l_sum / k
```

Model selection

```python
k, num_epochs, lr, weight_decay, batch_size = 5, 100, 5, 0, 64
train_l, valid_l = k_fold(k, train_features, train_labels, num_epochs, lr,
                          weight_decay, batch_size)
print(f'{k}-fold validation: avg train log rmse: {float(train_l):f}, '
      f'avg valid log rmse: {float(valid_l):f}')

'''
fold 1, train log rmse 0.168286, valid log rmse 0.170249
fold 2, train log rmse 0.165790, valid log rmse 0.182056
fold 3, train log rmse 0.177228, valid log rmse 0.176420
fold 4, train log rmse 0.194456, valid log rmse 0.189484
fold 5, train log rmse 0.184219, valid log rmse 0.207091
5-fold validation: avg train log rmse: 0.177996, avg valid log rmse: 0.185060
'''
```

Submitting the results to Kaggle

```python
def train_and_pred(train_features, test_features, train_labels, test_data,
                   num_epochs, lr, weight_decay, batch_size):
    net = get_net()
    # Train on the full training set; there is no validation set here
    train_ls, _ = train(net, train_features, train_labels, None, None,
                        num_epochs, lr, weight_decay, batch_size)
    d2l.plot(np.arange(1, num_epochs + 1), [train_ls], xlabel='epoch',
             ylabel='log rmse', xlim=[1, num_epochs], yscale='log')
    print(f'train log rmse {float(train_ls[-1]):f}')
    # Predict on the test set and write an Id/SalePrice submission file
    preds = net(test_features).detach().numpy()
    test_data['SalePrice'] = pd.Series(preds.reshape(1, -1)[0])
    submission = pd.concat([test_data['Id'], test_data['SalePrice']], axis=1)
    submission.to_csv('submission.csv', index=False)

train_and_pred(train_features, test_features, train_labels, test_data,
               num_epochs, lr, weight_decay, batch_size)

''' train log rmse 0.200159 '''
```

2. Numerical Stability

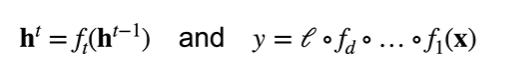

Consider the following neural network with d layers, where t indexes the layer.
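The formulas for this network did not survive on the page. As a sketch in the notation of the D2L lecture this section follows (assumed here: h^t is the output of layer t, f_t is that layer's transformation, W^t its weights and ℓ the loss), the network and the gradient with respect to W^t are:

$$
\mathbf{h}^t = f_t(\mathbf{h}^{t-1}), \qquad y = \ell \circ f_d \circ \cdots \circ f_1(\mathbf{x})
$$

$$
\frac{\partial \ell}{\partial \mathbf{W}^t} = \frac{\partial \ell}{\partial \mathbf{h}^d}\,\frac{\partial \mathbf{h}^d}{\partial \mathbf{h}^{d-1}} \cdots \frac{\partial \mathbf{h}^{t+1}}{\partial \mathbf{h}^t}\,\frac{\partial \mathbf{h}^t}{\partial \mathbf{W}^t}
$$

The gradient therefore contains a product of d − t Jacobian matrices, one for each layer above t.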

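Two common numerical stability problems:

Gradient explosion:

- Values exceed the representable range

- Training is sensitive to the learning rate

Gradient vanishing:

- Gradients become 0

- Training makes no progress

- Especially severe for the bottom layers (those closest to the input)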
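A common source of vanishing gradients is a saturating activation such as the sigmoid (used here as an example; the list above does not name a specific activation). Its derivative σ(x)(1 − σ(x)) is at most 0.25 and shrinks quickly as |x| grows, so multiplying it across many layers drives the gradient toward 0. The sketch ignores the weight factors and only looks at the activation's contribution.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    # Derivative of the sigmoid: s(x) * (1 - s(x)); its maximum is 0.25 at x = 0
    s = sigmoid(x)
    return s * (1.0 - s)

for x in [0.0, 2.0, 5.0, 10.0]:
    print(f'x = {x:4.1f}, sigmoid gradient = {sigmoid_grad(x):.2e}')

# Even in the best case (x = 0 at every layer), 10 layers multiply the gradient by 0.25 ** 10
print(0.25 ** 10)  # 9.5367431640625e-07
```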

Numerical problems appear when values become too large or too small. They are common in deep models because the gradient is a product of n numbers, roughly one factor per layer.
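A small illustration of why a long product is fragile: a per-layer factor slightly above or below 1 explodes or vanishes after 100 layers. The random 4×4 Gaussian matrices below are a hypothetical stand-in for per-layer Jacobians, not anything computed from a real network.

```python
import numpy as np

# Multiplying the same factor over 100 layers
print(1.5 ** 100)  # about 4.1e17: explodes
print(0.8 ** 100)  # about 2.0e-10: vanishes

# The same effect with matrices: product of 100 random 4x4 Gaussian matrices
rng = np.random.default_rng(0)
M = rng.normal(size=(4, 4))
for _ in range(99):
    M = M @ rng.normal(size=(4, 4))
print(np.abs(M).max())  # typically astronomically large
```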

3. Model Initialization and Activation Functions

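Keep the variance of every layer constant:

- Treat each layer's outputs and gradients as random variables

- Keep their means and variances the same across layers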
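One way to write this goal down, as a sketch: with h_i^t the i-th output of layer t, ℓ the loss and a, b constants, require for all i and t

$$
\text{forward: } \mathbb{E}[h_i^t] = 0, \qquad \operatorname{Var}[h_i^t] = a
$$

$$
\text{backward: } \mathbb{E}\left[\frac{\partial \ell}{\partial h_i^t}\right] = 0, \qquad \operatorname{Var}\left[\frac{\partial \ell}{\partial h_i^t}\right] = b
$$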

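Weight initialization:

- Randomly initialize parameters within a reasonable range

- Numerical instability is more likely at the start of training

- Initializing with N(0, 0.01) may work for small networks, but offers no guarantee for deep neural networks.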
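To see the last point, here is a small NumPy experiment; depth 50, width 256 and a linear network without activations are arbitrary choices for illustration. It reads N(0, 0.01) as standard deviation 0.01, which is an assumption since the notation could also mean variance 0.01 (std 0.1), in which case the per-layer factor would be about 1.6 and the activations would explode instead. For comparison it also draws weights with variance 1/n_in.

```python
import numpy as np

def activation_std(weight_std, depth=50, width=256, batch=128, seed=0):
    """Push a random batch through `depth` linear layers (no activation, no bias)
    whose weights are drawn from N(0, weight_std**2); return the output's std."""
    rng = np.random.default_rng(seed)
    h = rng.standard_normal((batch, width))  # input with mean 0, std 1
    for _ in range(depth):
        W = rng.normal(0.0, weight_std, size=(width, width))
        h = h @ W
    return h.std()

width = 256
# std 0.01: each layer scales the std by about sqrt(256) * 0.01 = 0.16, so it collapses
print(activation_std(0.01, width=width))
# variance 1 / width: each layer multiplies the variance by about width * (1 / width) = 1
print(activation_std(np.sqrt(1.0 / width), width=width))
```

The second setting keeps the output scale of order 1 after 50 layers, which is the "constant variance" goal stated above.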

Forward variance:
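A sketch of the forward-variance argument under the usual simplifying assumptions: a fully connected layer without activation, h^t = W^t h^{t-1} with W^t of shape n_t × n_{t-1}; weights w_{i,j}^t i.i.d. with mean 0 and variance γ_t; and inputs h_j^{t-1} identically distributed, zero-mean and independent of W^t.

$$
\mathbb{E}[h_i^t] = \sum_j \mathbb{E}[w_{i,j}^t]\,\mathbb{E}[h_j^{t-1}] = 0
$$

$$
\operatorname{Var}[h_i^t] = \mathbb{E}[(h_i^t)^2] = \sum_j \mathbb{E}[(w_{i,j}^t)^2]\,\mathbb{E}[(h_j^{t-1})^2] = \sum_j \operatorname{Var}[w_{i,j}^t]\operatorname{Var}[h_j^{t-1}] = n_{t-1}\gamma_t \operatorname{Var}[h_j^{t-1}]
$$

The cross terms vanish because distinct weights are independent with mean 0. Keeping the output variance equal to the input variance therefore requires n_{t-1}γ_t = 1.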

Backward mean and variance:
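The backward pass can be sketched the same way. Assume h^t = W^t h^{t-1} with W^t of shape n_t × n_{t-1}, weights i.i.d. with mean 0 and variance γ_t and independent of the incoming gradients, and treat ∂ℓ/∂h^t as a row vector. The chain rule then gives

$$
\frac{\partial \ell}{\partial \mathbf{h}^{t-1}} = \frac{\partial \ell}{\partial \mathbf{h}^t}\,\mathbf{W}^t
\quad\Longrightarrow\quad
\frac{\partial \ell}{\partial h_i^{t-1}} = \sum_{j=1}^{n_t} \frac{\partial \ell}{\partial h_j^t}\, w_{j,i}^t
$$

$$
\mathbb{E}\left[\frac{\partial \ell}{\partial h_i^{t-1}}\right] = 0, \qquad
\operatorname{Var}\left[\frac{\partial \ell}{\partial h_i^{t-1}}\right] = n_t\gamma_t \operatorname{Var}\left[\frac{\partial \ell}{\partial h_j^t}\right]
$$

so keeping the gradient variance constant requires n_tγ_t = 1. The forward pass gives the analogous condition n_{t-1}γ_t = 1 under the same assumptions, so both can hold exactly only when n_{t-1} = n_t.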
