
Scraping college entrance examination (gaokao) score lines

2022-07-02 15:37:00 【jidawanghao】

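```python
import json
import random
import time

import pandas as pd
import requests


class School:
    """Plain record holder for one school's basic information."""
    # Class-level defaults, so every attribute exists before it is assigned
    school_id = ""
    type = ""
    name = ""
    province_name = ""
    city_name = ""
    f211 = ""
    f985 = ""
    dual_class_name = ""
```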
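`School` is only a record holder whose attributes are filled in one by one. If you prefer a typed record, here is a minimal sketch with the standard library's `dataclasses`. `SchoolRecord` and `school_from_item` are hypothetical names, the item keys are the ones the scraper reads from the list API, and the `"双一流"` label for Double First-Class schools is an assumption.

```python
from dataclasses import dataclass


@dataclass
class SchoolRecord:
    """Typed record for one entry of the school list (hypothetical name)."""
    school_id: int
    name: str
    province_name: str
    city_name: str
    school_type: str
    f211: int        # 1 if the list API marks the school as 211, else 0
    f985: int        # 1 if the list API marks the school as 985, else 0
    dual_class: int  # 1 if dual_class_name is "双一流" (assumed label), else 0


def school_from_item(item: dict) -> SchoolRecord:
    """Map one dict from json_data['data']['item'] to a SchoolRecord."""
    return SchoolRecord(
        school_id=item["school_id"],
        name=item["name"],
        province_name=item["province_name"],
        city_name=item["city_name"],
        school_type=item["type_name"],
        f211=1 if item.get("f211") == 1 else 0,
        f985=1 if item.get("f985") == 1 else 0,
        dual_class=1 if item.get("dual_class_name") == "双一流" else 0,
    )


# Made-up list entry in the shape the scraper reads
sample = {
    "school_id": 1, "name": "Example University", "province_name": "Fujian",
    "city_name": "Example City", "type_name": "Comprehensive",
    "f211": 1, "f985": 0, "dual_class_name": "双一流",
}
rec = school_from_item(sample)
print(rec.f211, rec.f985, rec.dual_class)  # 1 0 1
```

Keeping the 0/1 flag mapping in one function means the list page is interpreted the same way wherever it is parsed.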

```python
# Score lines of each school over the years.
# Step 1: page through the school list to collect every school's ID.
# Step 2: use each school_id to download that school's score line for each year.

def get_one_page(page_num):
    # Browser-like request header
    heads = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.106 Safari/537.36'}
    # School list API, 20 schools per page; %s is the page number
    url = 'https://api.eol.cn/gkcx/api/?access_token=&admissions=&central=&department=&dual_class=&f211=&f985=&is_doublehigh=&is_dual_class=&keyword=&nature=&page=%s&province_id=&ranktype=&request_type=1&school_type=&signsafe=&size=20&sort=view_total&top_school_id=[766,707]&type=&uri=apidata/api/gk/school/lists'
    # Per-school score file, filled in with (year, school_id)
    urlkzx = 'https://static-data.eol.cn/www/2.0/schoolprovinceindex/%s/%s/35/2/1.json'
    rows = []  # one dict per school and year; turned into a DataFrame at the end

    for i in range(1, page_num + 1):  # pages 1 .. page_num
        response = requests.get(url % i, headers=heads, timeout=10)
        json_data = json.loads(response.text)
        my_json = json_data['data']['item']  # the schools on this page

        for my in my_json:
            ss = School()
            ss.school_id = my['school_id']
            ss.name = my['name']
            ss.province_name = my['province_name']
            ss.city_name = my['city_name']
            ss.f211 = 1 if my['f211'] == 1 else 0
            ss.f985 = 1 if my['f985'] == 1 else 0
            # dual_class_name is a Chinese label; "双一流" (Double First-Class) is assumed here
            ss.dual_class_name = 1 if my['dual_class_name'] == '双一流' else 0
            ss.type = my['type_name']
            print(ss.school_id, ss.name)

            for year in (2021, 2020, 2019, 2018):
                res = requests.get(urlkzx % (year, ss.school_id), headers=heads, timeout=10)
                time.sleep(random.uniform(0.5, 1.5))  # short random pause between requests
                try:
                    score_json = json.loads(res.text)
                except ValueError:
                    continue  # not JSON (e.g. an error page): skip this year
                if not isinstance(score_json, dict):
                    continue  # e.g. an empty string: no data for this year
                items = (score_json.get('data') or {}).get('item') or []
                if not items:
                    continue
                data = items[0]
                rows.append({
                    'School ID': ss.school_id,
                    'School name': ss.name,
                    'Province': ss.province_name,
                    'City': ss.city_name,
                    'Double First-Class': ss.dual_class_name,
                    'f985': ss.f985,
                    'f211': ss.f211,
                    'School type': ss.type,
                    'Year': data['year'],
                    'Batch': data['local_batch_name'],
                    'Admission type': data['zslx_name'],
                    'Lowest score': data['min'],
                    'Lowest rank': data['min_section'],
                    'Batch cutoff score': data['proscore'],
                })

    return pd.DataFrame(rows)
```
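The per-year part of the scraper boils down to three steps: parse one score payload, skip it if it carries no data, otherwise flatten its first record into a row. Below is a minimal standard-library sketch of that step. It assumes the payload shape the scraper indexes into (`payload["data"]["item"][0]`). `year_row` is a hypothetical helper, and the sample payloads are made up.

```python
from typing import Optional


def year_row(school: dict, payload) -> Optional[dict]:
    """Flatten one per-year score payload into a row, or return None if it holds no data.

    Assumes the shape the scraper indexes into: payload["data"]["item"][0]
    with the keys year, local_batch_name, zslx_name, min, min_section, proscore.
    """
    if not isinstance(payload, dict):
        return None  # e.g. json.loads gave "" for a school/year without scores
    items = (payload.get("data") or {}).get("item") or []
    if not items:
        return None
    first = items[0]  # like the scraper, keep only the first record
    return {
        **school,
        "Year": first.get("year"),
        "Batch": first.get("local_batch_name"),
        "Admission type": first.get("zslx_name"),
        "Lowest score": first.get("min"),
        "Lowest rank": first.get("min_section"),
        "Batch cutoff score": first.get("proscore"),
    }


school = {"School ID": 1, "School name": "Example University"}
payloads = [  # made-up responses: one with data, two without
    {"data": {"item": [{"year": 2021, "local_batch_name": "batch-1",
                        "zslx_name": "type-1", "min": 600,
                        "min_section": 5000, "proscore": 500}]}},
    "",
    {"data": {"item": []}},
]
rows = [row for row in (year_row(school, p) for p in payloads) if row is not None]
print(len(rows), rows[0]["Year"], rows[0]["Lowest score"])  # 1 2021 600
```

Collecting plain dicts and building the table once with `pd.DataFrame(rows)` avoids `DataFrame.append`, which was deprecated in pandas 1.4 and removed in 2.0.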

```python
# Basic information of each school

def detail(page_num):
    # Browser-like request header
    heads = {'user-agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.106 Safari/537.36'}
    # School list API, 20 schools per page; %s is the page number
    url = 'https://api.eol.cn/gkcx/api/?access_token=&admissions=&central=&department=&dual_class=&f211=&f985=&is_doublehigh=&is_dual_class=&keyword=&nature=&page=%s&province_id=&ranktype=&request_type=1&school_type=&signsafe=&size=20&sort=view_total&top_school_id=[766,707]&type=&uri=apidata/api/gk/school/lists'
    # 2020 score file; only used to keep schools that have 2020 score data
    urlkzx = 'https://static-data.eol.cn/www/2.0/schoolprovinceindex/2020/%s/35/2/1.json'
    rows = []

    for i in range(1, page_num + 1):  # pages 1 .. page_num
        response = requests.get(url % i, headers=heads, timeout=10)
        json_data = json.loads(response.text)

        for my in json_data['data']['item']:  # the schools on this page
            res = requests.get(urlkzx % my['school_id'], headers=heads, timeout=10)
            time.sleep(random.uniform(0.5, 1.5))  # short random pause between requests
            print(my['school_id'])
            try:
                score_json = json.loads(res.text)
            except ValueError:
                continue  # not JSON: treat as "no 2020 data"
            if not isinstance(score_json, dict):
                continue
            if not (score_json.get('data') or {}).get('item'):
                continue
            rows.append({
                'School ID': my['school_id'],
                'School name': my['name'],
                'Province': my['province_name'],
                'City': my['city_name'],
                # "双一流" (Double First-Class) label assumed, as in get_one_page
                'Double First-Class': 1 if my['dual_class_name'] == '双一流' else 0,
                'f985': 1 if my['f985'] == 1 else 0,
                'f211': 1 if my['f211'] == 1 else 0,
                'School type': my['type_name'],
            })

    return pd.DataFrame(rows)
```
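Both functions above paste the school-list query string into `url` by hand, and a literal `&cent` inside such a string is easily turned into `¢` when the page is rendered as HTML. Below is a minimal sketch that builds the same URL from a dict with the standard library's `urllib.parse.urlencode`. `build_list_url` and `LIST_API` are hypothetical names. `safe="[],/"` keeps the brackets, comma and slashes unescaped, so the result matches the hand-written string character for character.

```python
from urllib.parse import urlencode

LIST_API = "https://api.eol.cn/gkcx/api/"


def build_list_url(page: int, size: int = 20) -> str:
    """Build the school-list URL for one page (hypothetical helper)."""
    params = {
        "access_token": "", "admissions": "", "central": "", "department": "",
        "dual_class": "", "f211": "", "f985": "", "is_doublehigh": "",
        "is_dual_class": "", "keyword": "", "nature": "", "page": page,
        "province_id": "", "ranktype": "", "request_type": 1, "school_type": "",
        "signsafe": "", "size": size, "sort": "view_total",
        "top_school_id": "[766,707]", "type": "",
        "uri": "apidata/api/gk/school/lists",
    }
    # safe="[],/" leaves brackets, commas and slashes unescaped, so the query
    # string keeps exactly the form of the hand-written URL
    return LIST_API + "?" + urlencode(params, safe="[],/")


print(build_list_url(3))
```

Since the parameters live in a dict, changing `size` or adding a filter no longer means editing a 300-character string.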

```python
def get_all_page(all_page_num):
    print(all_page_num)
    # Score lines for all schools on pages 1 .. all_page_num
    return get_one_page(page_num=all_page_num)


def getdetail(all_page_num):
    print(all_page_num)
    # Basic information for all schools on pages 1 .. all_page_num
    return detail(page_num=all_page_num)


if __name__ == '__main__':
    df_school = get_all_page(100)
    dd = getdetail(100)
    # to_excel needs an Excel writer engine such as openpyxl to be installed
    df_school.to_excel('data.xlsx', index=False)
    dd.to_excel('dd.xlsx', index=False)
```
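The script imports `random` and calls `time.sleep`, presumably to avoid hammering the server. A minimal sketch of a retry wrapper with exponential backoff and random jitter follows, using only the standard library. `with_retries` is a hypothetical helper. `sleep` is a parameter, so the example can run without actually waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    fetch: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 1.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fetch(), retrying on the given exceptions with exponential backoff.

    Before retry n+1 it waits base_delay * 2**n plus up to base_delay of random
    jitter, and re-raises the last exception once all attempts are used up.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for n in range(attempts):
        try:
            return fetch()
        except retry_on:
            if n == attempts - 1:
                raise
            sleep(base_delay * 2 ** n + random.uniform(0, base_delay))
    raise AssertionError("unreachable")


calls = []


def flaky():
    """Fails twice, then returns a payload (stands in for a network request)."""
    calls.append(1)
    if len(calls) < 3:
        raise ConnectionError("temporary failure")
    return {"data": {"item": []}}


waits = []
result = with_retries(flaky, attempts=3, base_delay=0.5, sleep=waits.append)
print(result, len(calls), len(waits))  # {'data': {'item': []}} 3 2
```

Wrapping one request would look like `with_retries(lambda: requests.get(url % i, headers=heads, timeout=10), retry_on=(requests.RequestException,))`. Only network errors are retried. A year with no score data is better treated as "no data" than retried.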

边栏推荐

- 2022 college students in Liaoning Province mathematical modeling a, B, C questions (related papers and model program code online disk download)

- Evaluation of embedded rz/g2l processor core board and development board of Feiling

- Be a good gatekeeper on the road of anti epidemic -- infrared thermal imaging temperature detection system based on rk3568

- 【LeetCode】577-反转字符串中的单词 III

- 20_ Redis_ Sentinel mode

- 士官类学校名录

- [leetcode] 200 number of islands

- Storage read-write speed and network measurement based on rz/g2l | ok-g2ld-c development board

- Case introduction and problem analysis of microservice

- 11_ Redis_ Hyperloglog_ command

猜你喜欢

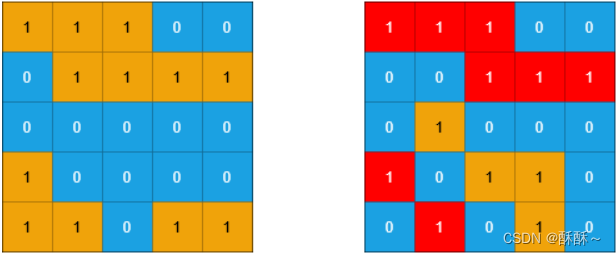

【LeetCode】1905-统计子岛屿

There are 7 seats with great variety, Wuling Jiachen has outstanding product power, large humanized space, and the key price is really fragrant

Solution of Queen n problem

LeetCode刷题——去除重复字母#316#Medium

自定义异常

飞凌嵌入式RZ/G2L处理器核心板及开发板上手评测

2022 college students in Liaoning Province mathematical modeling a, B, C questions (related papers and model program code online disk download)

Leetcode skimming - remove duplicate letters 316 medium

Case introduction and problem analysis of microservice

Download blender on Alibaba cloud image station

随机推荐

Solution of Queen n problem

Infra11199 database system

FPGA - clock-03-clock management module (CMT) of internal structure of 7 Series FPGA

LeetCode刷题——递增的三元子序列#334#Medium

[leetcode] 577 reverse word III in string

folium,确诊和密接轨迹上图

Engineer evaluation | rk3568 development board hands-on test

【LeetCode】1254-统计封闭岛屿的数量

Leetcode skimming -- incremental ternary subsequence 334 medium

16_ Redis_ Redis persistence

Yolov5 code reproduction and server operation

Real estate market trend outlook in 2022

士官类学校名录

Build your own semantic segmentation platform deeplabv3+

07_ Hash

Beijing rental data analysis

14_Redis_乐观锁

MD5 encryption

5. Practice: jctree implements the annotation processor at compile time

10_Redis_geospatial_命令