Multiple linear regression (gradient descent method)

2022-07-05 08:50:00 【Python code doctor】

import numpy as np

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

# Reading data

data = np.loadtxt('Delivery.csv',delimiter=',')

print(data)

# Split into features x (all columns but the last) and target y (last column)

x_data = data[:,0:-1]

y_data = data[:,-1]

# Initialize the learning rate (step size)

learning_rate = 0.0001

# Initialize the intercept

theta0 = 0

# Initialize the coefficients

theta1 = 0

theta2 = 0

# Maximum number of iterations

n_iterables = 100

def compute_mse(theta0,theta1,theta2,x_data,y_data):
    '''Compute the cost function: half the mean squared error.'''
    total_error = 0
    for i in range(len(x_data)):
        # Accumulate the squared error: (true value - predicted value)**2
        total_error += (y_data[i]-(theta0 + theta1*x_data[i,0]+theta2*x_data[i,1]))**2
    mse_ = total_error/len(x_data)/2
    return mse_

def gradient_descent(x_data,y_data,theta0,theta1,theta2,learning_rate,n_iterables):
    '''Batch gradient descent.'''
    m = len(x_data)
    # Repeat for the given number of iterations
    for i in range(n_iterables):
        # Reset the partial derivatives with respect to theta0, theta1, theta2
        theta0_grad = 0
        theta1_grad = 0
        theta2_grad = 0
        # Sum the partial derivatives over all m samples, averaging as we go
        for j in range(m):
            theta0_grad += (1/m)*((theta1*x_data[j,0]+theta2*x_data[j,1]+theta0)-y_data[j])
            theta1_grad += (1/m)*((theta1*x_data[j,0]+theta2*x_data[j,1]+theta0)-y_data[j])*x_data[j,0]
            theta2_grad += (1/m)*((theta1*x_data[j,0]+theta2*x_data[j,1]+theta0)-y_data[j])*x_data[j,1]
        # Update theta once per iteration, after all gradients are computed
        theta0 = theta0 - (learning_rate*theta0_grad)
        theta1 = theta1 - (learning_rate*theta1_grad)
        theta2 = theta2 - (learning_rate*theta2_grad)
    return theta0,theta1,theta2

# Visualize the data distribution

fig = plt.figure()

ax = fig.add_subplot(projection='3d')

ax.scatter(x_data[:,0],x_data[:,1],y_data)

# The figure is shown once at the end, after the fitted plane has been added

print(f"Start: intercept theta0={theta0}, theta1={theta1}, theta2={theta2}, loss={compute_mse(theta0,theta1,theta2,x_data,y_data)}")

print("Start running...")

theta0,theta1,theta2 = gradient_descent(x_data,y_data,theta0,theta1,theta2,learning_rate,n_iterables)

print(f"After {n_iterables} iterations: intercept theta0={theta0}, theta1={theta1}, theta2={theta2}, loss={compute_mse(theta0,theta1,theta2,x_data,y_data)}")

# Draw the fitted plane

x_0 = x_data[:,0]

x_1 = x_data[:,1]

# Generate the grid matrices

x_0,x_1 = np.meshgrid(x_0,x_1)

# Predicted values on the grid

y_hat = theta0 + theta1*x_0 +theta2*x_1

ax.plot_surface(x_0,x_1,y_hat)

# Set the axis labels

ax.set_xlabel('Miles')

ax.set_ylabel('nums')

ax.set_zlabel('Time')

plt.show()
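The per-sample loops above can also be written with matrix operations. The following is a minimal sketch, not the original author's code: the names `gradient_descent_vectorized` and `compute_mse_vectorized` are hypothetical, and the demo data is synthetic (it is not `Delivery.csv`). It assumes the same model, the same half-MSE cost, and the same update rule as the script above.

```python
import numpy as np


def gradient_descent_vectorized(x_data, y_data, learning_rate=0.0001, n_iterables=100):
    """Batch gradient descent using matrix operations (hypothetical helper)."""
    m = len(x_data)
    # Prepend a column of ones so the intercept is treated like any other coefficient
    X = np.column_stack([np.ones(m), x_data])
    theta = np.zeros(X.shape[1])  # [theta0, theta1, theta2, ...]
    for _ in range(n_iterables):
        residual = X @ theta - y_data   # predicted value - true value, shape (m,)
        grad = X.T @ residual / m       # averaged partial derivatives
        theta = theta - learning_rate * grad
    return theta


def compute_mse_vectorized(theta, x_data, y_data):
    """Half the mean squared error, matching compute_mse above (hypothetical helper)."""
    X = np.column_stack([np.ones(len(x_data)), x_data])
    return np.sum((y_data - X @ theta) ** 2) / len(x_data) / 2


# Synthetic data for illustration only (an assumption, not the Delivery.csv data)
rng = np.random.default_rng(0)
x_demo = rng.uniform(0, 10, size=(50, 2))
y_demo = 1.0 + 0.5 * x_demo[:, 0] + 2.0 * x_demo[:, 1]

theta_start = np.zeros(3)
theta_fit = gradient_descent_vectorized(x_demo, y_demo)

print("loss before:", compute_mse_vectorized(theta_start, x_demo, y_demo))
print("loss after: ", compute_mse_vectorized(theta_fit, x_demo, y_demo))
```

Stacking a column of ones onto the features lets a single expression, `X.T @ residual / m`, produce all three partial derivatives at once, so the same function works unchanged for any number of features.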

边栏推荐

- OpenFeign

- Halcon color recognition_ fuses. hdev:classify fuses by color

- Esp8266 interrupt configuration

- It cold knowledge (updating ing~)

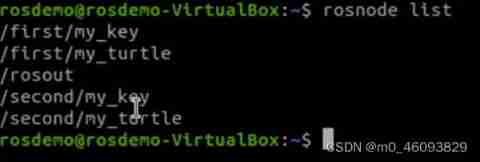

- Programming implementation of ROS learning 2 publisher node

- TF coordinate transformation of common components of ros-9 ROS

- location search 属性获取登录用户名

- 资源变现小程序添加折扣充值和折扣影票插件

- 12、动态链接库,dll

- Kubedm series-00-overview

猜你喜欢

随机推荐

Business modeling of software model | vision

Latex improve

Digital analog 1: linear programming

Array,Date,String 对象方法

Classification of plastic surgery: short in long long long

图解八道经典指针笔试题

Install the CPU version of tensorflow+cuda+cudnn (ultra detailed)

Program error record 1:valueerror: invalid literal for int() with base 10: '2.3‘

Redis implements a high-performance full-text search engine -- redisearch

[牛客网刷题 Day4] JZ32 从上往下打印二叉树

MPSoC QSPI flash upgrade method

猜谜语啦(5)

Guess riddles (5)

Shift operation of complement

猜谜语啦(9)

Halcon wood texture recognition

【日常訓練--騰訊精選50】557. 反轉字符串中的單詞 III

File server migration scheme of a company

golang 基础 —— golang 向 mysql 插入的时间数据和本地时间不一致

Meta标签详解