Robust localization of occluded targets in aerial manipulation using only range mapping (RA-L 2022)

2022-06-21 19:05:00 【3D vision workshop】

Author丨[email protected] (Zhihu)

Source https://zhuanlan.zhihu.com/p/457168226

Editor丨3D Vision Workshop

This post introduces a paper of ours that was recently accepted by RA-L. The paper is not about SLAM, but it is closely related to SLAM and, we think, quite interesting. Only the general idea is covered here; please refer to the paper for details.

Paper link: https://shiyuzhao.westlake.edu.cn/style/2022RAL_XiaoyuZhang.pdf

Video links: https://www.youtube.com/watch?v=t6-0zaRuFIY

https://www.bilibili.com/video/BV11T4y1m7tN?share_source=copy_web&vd_source=534ab035a008ff26525503ac9b890e83

Background

In SLAM, the goal is to localize the sensor (robot) itself. This requires building a map of the environment from geometric features such as 3D points; the position and orientation of the sensor are then solved from the geometric constraints between the map features and the sensor observations.

In this work, by contrast, we want to localize a target, that is, to obtain the 3D position of the target point in the sensor coordinate frame. The application is aerial manipulation, where the target is frequently occluded or lost. We therefore want to localize occluded targets: even when the target is not visible, its relative position should still be known.

This problem can also be solved with SLAM. For example, if the target point is added to the environment map, its position relative to the camera is easy to compute. The accuracy of that approach, however, depends on the accuracy of the target point's position in the map, on the accuracy of the camera localization, and on other factors.

In this paper, we want target localization that does not depend on the camera's self-localization. Modeled on SLAM, we propose a new target localization method built on a new form of map. The end goal is to compute the relative position of the target point, and the camera is never localized in the process.

Method

The method is implemented with an RGB-D camera and can also be extended to stereo cameras. The target is computed and stored as a point, which must be specified in the first frame. In the implementation we represent the target point with a feature point, so "the target point is observed" in this article means that a feature in the current frame was successfully matched to the target point.

The core algorithm is actually fairly simple. When the target point is observed, we save the spatial relationship between the target point and the surrounding feature points. When the target point is not observed, we compute its position from the spatial relationship between the matched feature points and the target point. This spatial relationship could take different forms, such as a direction vector, but we chose distance. Because distance is independent of the coordinate frame, the camera pose does not need to be known during target localization. Distance-based localization is also fairly simple and resembles the trilateration used in GPS (see, for example, "iBeacon positioning: trilateration implementation"). In 3D space, given four points that are not coplanar and their distances to the target point, the position of the target point can be determined uniquely.
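As a minimal sketch of the four-point case described above (not the paper's implementation), the following Python subtracts the first sphere equation from the other three, which leaves a 3x3 linear system in the target position. The function names `det3`, `solve3`, and `trilaterate`, and all sample coordinates, are illustrative assumptions.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a * x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("anchor points are (nearly) coplanar")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(det3(m) / d)
    return x


def trilaterate(anchors, ranges):
    """Position of a point from four non-coplanar anchors and their ranges."""
    p0, r0 = anchors[0], ranges[0]
    sq = lambda p: sum(c * c for c in p)
    a, b = [], []
    # |x - p_i|^2 - |x - p_0|^2 = r_i^2 - r_0^2 is linear in x.
    for p, r in zip(anchors[1:], ranges[1:]):
        a.append([2.0 * (p[k] - p0[k]) for k in range(3)])
        b.append(sq(p) - sq(p0) - r * r + r0 * r0)
    return solve3(a, b)


# Hypothetical anchors (feature points) and a target, all in one frame.
anchors = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
target = (0.3, -0.2, 0.8)
ranges = [math.dist(a, target) for a in anchors]
estimate = trilaterate(anchors, ranges)
print(estimate)
```

With noisy ranges or more than four matched points, a least-squares formulation is the more natural choice than this exact linear solve.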

Specifically, we extract ORB feature points from each frame. Their depth values are obtained from the depth map or from stereo matching, so the 3D coordinates of the feature points in the camera coordinate frame can be computed.
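The step from a pixel with depth to a 3D point can be sketched as follows, assuming a standard pinhole camera model without lens distortion. The intrinsics `fx`, `fy`, `cx`, `cy` and the pixel values are hypothetical, and the function name `back_project` is an illustrative choice rather than anything from the paper.

```python
def back_project(u, v, depth, fx, fy, cx, cy):
    """Map pixel (u, v) with metric depth to a 3D point in the camera frame.

    Assumes a pinhole model: u = fx * x / z + cx and v = fy * y / z + cy.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# Hypothetical intrinsics for a 640x480 image.
fx, fy, cx, cy = 500.0, 500.0, 320.0, 240.0

# A feature at pixel (420, 190) whose depth-map value is 2.0 m.
point = back_project(u=420.0, v=190.0, depth=2.0, fx=fx, fy=fy, cx=cx, cy=cy)
print(point)
```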

The same is done for the target point, so the distance between the target point and each feature point can be computed within a single frame.
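The reason distance is a convenient quantity to store is that it does not change when the camera moves. The sketch below illustrates this with made-up coordinates: the same two points are expressed in two camera frames related by a rigid transform (a rotation about the z-axis plus a translation, chosen only for brevity), and the range is identical in both. The helper `rigid_transform` is a hypothetical name.

```python
import math


def rigid_transform(p, yaw, t):
    """Rotate p about the z-axis by yaw (radians), then translate by t."""
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * p[0] - s * p[1] + t[0],
            s * p[0] + c * p[1] + t[1],
            p[2] + t[2])


# Target and one feature point in camera frame A (hypothetical values).
target_a = (0.4, -0.2, 2.0)
feature_a = (-0.1, 0.3, 1.5)
range_a = math.dist(target_a, feature_a)

# The same two points expressed in a different camera frame B.
yaw, shift = 0.7, (0.5, -1.0, 0.2)
target_b = rigid_transform(target_a, yaw, shift)
feature_b = rigid_transform(feature_a, yaw, shift)
range_b = math.dist(target_b, feature_b)

print(range_a, range_b)
```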

System

Following the method above, we built a target localization system modeled on SLAM:

Pipeline

As you can see, the system looks very much like SLAM. It is likewise divided into two parts, mapping and localization, but their meanings are different. During mapping, we save only the distance from each feature point to the target point and do not save the 3D coordinates of the points; in the paper this map is called the target-centered range-only map. Localization means solving for the 3D position of the target point in the current camera coordinate frame. Its core is constructing the range-based optimization problem described above, and its main difficulty is feature matching.

As in a SLAM system, feature matching goes through several stages, such as matching against the previous frame and matching within the local map. The result from the previous frame is used as the initial value for the solver. New map points are created from keyframes, existing map points are updated, and so on. Other implementation details can be found in the paper. The system corresponds to a SLAM system almost one to one; the only missing piece is loop closure detection, which could also be added (I was just lazy). The whole system is not complicated, especially for readers who are already familiar with SLAM, so I will not go into more detail here.
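To make the idea of a map that stores ranges but no coordinates more concrete, here is a minimal sketch. It is not the paper's data structure: the class name `RangeOnlyMap`, its methods, and the use of integer feature ids in place of ORB descriptor matching are all simplifying assumptions.

```python
import math


class RangeOnlyMap:
    """Target-centered map: one distance per feature, no 3D coordinates."""

    def __init__(self):
        self.ranges = {}  # feature id -> distance to the target point

    def update(self, features, target):
        """Mapping step, used when the target is observed in the frame.

        features: dict of feature id -> 3D point in the current camera frame.
        target:   3D target point in the same camera frame.
        """
        for fid, p in features.items():
            self.ranges[fid] = math.dist(p, target)

    def constraints(self, features):
        """Localization step: pair current 3D features with stored ranges."""
        return [(p, self.ranges[fid])
                for fid, p in features.items() if fid in self.ranges]


range_map = RangeOnlyMap()

# Frame 1: the target is visible, so ranges are stored (hypothetical data).
frame1 = {1: (0.0, 0.0, 1.0), 2: (1.0, 0.0, 1.0), 3: (0.0, 1.0, 2.0)}
range_map.update(frame1, target=(0.0, 0.0, 2.0))

# Frame 2: the target is occluded; features 2 and 3 are matched again,
# feature 7 is new and has no stored range yet.
frame2 = {2: (0.5, 0.1, 1.2), 3: (-0.3, 0.9, 2.1), 7: (0.8, 0.8, 3.0)}
pairs = range_map.constraints(frame2)
print(pairs)
```

Each returned pair (current 3D feature position, stored range) is one constraint on the unknown target position in the current camera frame.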
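The localization solve with an initial value from the previous frame can be sketched as below. One plausible cost, assumed here and not necessarily the paper's exact formulation, is the sum of squared range residuals (|x - p_i| - r_i)^2 over the matched features, minimized with a few Gauss-Newton iterations. The names `locate_target`, `solve3`, and `det3`, and all numbers, are illustrative.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(a, b):
    """Solve the 3x3 linear system a * x = b with Cramer's rule."""
    d = det3(a)
    if abs(d) < 1e-12:
        raise ValueError("degenerate feature geometry")
    x = []
    for col in range(3):
        m = [row[:] for row in a]
        for r in range(3):
            m[r][col] = b[r]
        x.append(det3(m) / d)
    return x


def locate_target(constraints, initial, iterations=10):
    """Gauss-Newton on sum_i (|x - p_i| - r_i)^2, starting from `initial`."""
    x = list(initial)
    for _ in range(iterations):
        jtj = [[0.0] * 3 for _ in range(3)]
        jtr = [0.0] * 3
        for p, r in constraints:
            d = math.dist(x, p)
            if d < 1e-9:
                continue  # Jacobian undefined when x coincides with p
            j = [(x[k] - p[k]) / d for k in range(3)]  # d(residual)/dx
            res = d - r
            for a in range(3):
                jtr[a] += j[a] * res
                for b in range(3):
                    jtj[a][b] += j[a] * j[b]
        step = solve3(jtj, jtr)
        x = [x[k] - step[k] for k in range(3)]
    return tuple(x)


# Hypothetical matched features in the current camera frame.
features = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
            (0.0, 0.0, 1.0), (1.0, 1.0, 0.5)]
true_target = (0.3, -0.2, 0.8)
constraints = [(p, math.dist(p, true_target)) for p in features]

# The previous frame's estimate serves as the initial value.
previous_estimate = (0.25, -0.1, 0.9)
estimate = locate_target(constraints, previous_estimate)
print(estimate)
```

Using more than four matched features makes the estimate less sensitive to a single bad match, although a real system would also need outlier rejection, which this sketch omits.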

Experiment

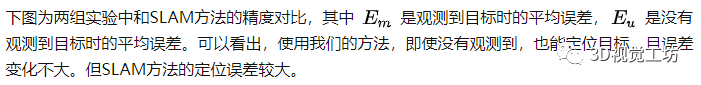

Two sets of data were used in the experiments. One comes from the ICL-NUIM dataset, chosen because it can provide ground truth for the target point. The other was collected by us with a RealSense camera, with ground truth provided by a VICON system. In both experiments we compared accuracy against a SLAM method (ORB-SLAM3).

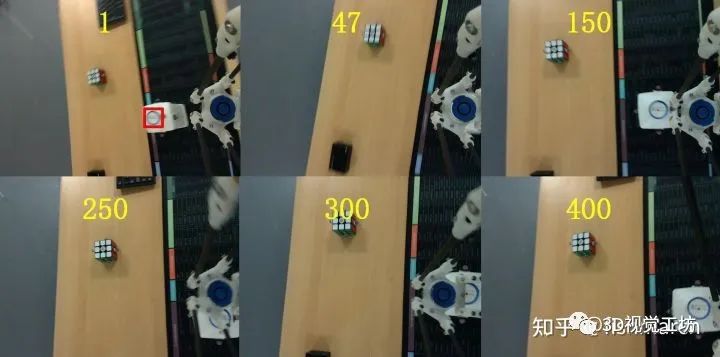

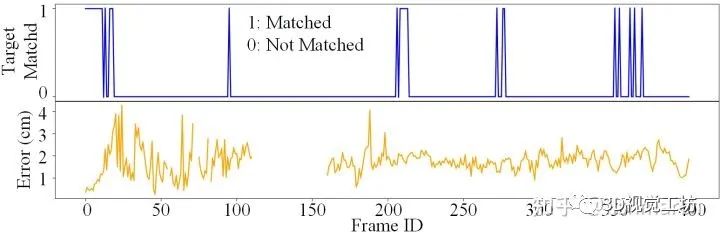

One set of experimental data is shown in the figure above. The bottle cap is marked as the target in the first frame. As the UAV moves, the target is blocked by the robot arm or moves out of the camera's field of view, but our method provides the target position throughout. The positioning error is shown in the figure below. The blue curve indicates whether the target point is matched, and the color-varying curve is the positioning error. Whether or not the target is occluded, it can be localized, and the error stays almost constant. There is a short segment where localization fails because of feature matching and other issues, but it recovers afterwards.

The error of the SLAM method is larger. Besides accumulated error and similar effects, I think the reason is that SLAM puts the positions of all feature points and the camera poses into a single optimization problem and solves for the result with the minimum overall error. This does not mean that any one particular error (here, the target position) becomes smaller; it may even become larger. For example, the figure below shows the result at one point in the ICL dataset: with the SLAM method, the position of the target point changes considerably after a local optimization, and the error increases immediately.

In summary, when SLAM is used for target localization, the position of the target point is affected by errors in the camera pose, in the positions of other feature points, and so on. Our method aims to make the target localization accuracy independent of these additional quantities, while also handling target occlusion, target loss, and similar problems.

This article is shared for academic purposes only. If there is any infringement, please contact us and it will be removed.
